CentOS 7 + Hadoop 2.7.7: Fully Distributed Cluster Setup

Posted by luosenfit


I. Fully distributed cluster (single NameNode, no HA)

Official Hadoop site: http://hadoop.apache.org/

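1 Prepare three machines

1.1 Firewall, static IP, hostname

Disabling the firewall and setting a static IP and hostname are not covered here; see "Linux: CentOS 7.5 installation and cloning".

1.2 Edit the hosts file

The three hosts should reach one another by hostname rather than by IP address, so every host needs entries for the others. Add the following to /etc/hosts on each host: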

[root@hadoop0 ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.21 hadoop0

192.168.100.22 hadoop1

192.168.100.23 hadoop2

Copy the file to the other two hosts (or make the same edit on each of them):

[root@hadoop0 ~]# scp -r /etc/hosts root@hadoop1:/etc/

[root@hadoop0 ~]# scp -r /etc/hosts root@hadoop2:/etc/

Test name resolution:

[root@hadoop0 ~]# ping hadoop0

[root@hadoop0 ~]# ping hadoop1

[root@hadoop0 ~]# ping hadoop2
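If the hosts entries are added by script rather than by hand, it helps to make the step safe to repeat. The following is a minimal sketch, not part of the original walkthrough: add_host_entry is a hypothetical helper name, and the example IPs are the ones used above. It appends a mapping only when the hostname is not already present on a non-comment line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-style file unless NAME is already mapped.
# Usage: add_host_entry FILE IP NAME
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # awk exits 0 only if NAME appears as a whole field (after the IP) on a non-comment line.
    if ! awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example (run as root on each node):
#   add_host_entry /etc/hosts 192.168.100.21 hadoop0
#   add_host_entry /etc/hosts 192.168.100.22 hadoop1
#   add_host_entry /etc/hosts 192.168.100.23 hadoop2
```

Running the example twice leaves a single line per hostname, so the same script can be reused on all three machines.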

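1.3 Add a user account

On every host, create an account named hadoop to run Hadoop, and add it to sudoers.

[root@hadoop0 ~]# useradd hadoop

[root@hadoop0 ~]# passwd hadoop

Enter the new password when prompted (this guide uses hadoop). On success, passwd reports that all authentication tokens were updated successfully.

To let the hadoop user run commands with root privileges, edit /etc/sudoers:

[root@hadoop0 ~]# vim /etc/sudoers

Find the root entry below the comment "Allow root to run any commands anywhere" and add a line for hadoop directly after it:

root ALL=(ALL) ALL

hadoop ALL=(ALL) ALL

Save and quit with :wq! (the file is read-only, so the ! is required). After logging in as hadoop, or switching to it with su hadoop, prefix a command with sudo to run it with root privileges; switching users alone does not grant them.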

1.4 Create a directory under /

1) As root, create the hadoop directory:

[root@hadoop0 /]# cd /

[root@hadoop0 /]# mkdir hadoop

2) Change the owner of the hadoop directory:

[root@hadoop0 /]# chown -R hadoop:hadoop /hadoop

3) Check the owner of the hadoop directory:

[root@hadoop0 /]# ll

drwxr-xr-x. 4 hadoop hadoop 46 May 10 16:56 hadoop

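2 Install and configure JDK 1.8

Check whether any Java packages are already installed:

[root@hadoop0 ~]# rpm -qa|grep java

This guide uses the tar archive downloaded from the official site and uploaded to /hadoop on the server:

[root@hadoop0 hadoop]# tar -zxvf jdk-8u181-linux-x64.tar.gz

[root@hadoop0 hadoop]# mv jdk1.8.0_181 jdk1.8

Set JAVA_HOME by appending the lines below to the end of /etc/profile:

[root@hadoop0 hadoop]# vim /etc/profile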

#JAVA

export JAVA_HOME=/hadoop/jdk1.8

export JRE_HOME=/hadoop/jdk1.8/jre

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin

[root@hadoop0 hadoop]# source /etc/profile

Reloading the profile makes the new variables take effect in the current shell. Check that the configuration works:

[hadoop@hadoop0 /]$ java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

3 Install the Hadoop cluster

3.1 Cluster deployment plan

| Node | NN1 (NameNode) | NN2 (SecondaryNameNode) | DN | RM | NM |
|---|---|---|---|---|---|
| hadoop0 | NameNode | | DataNode | ResourceManager | NodeManager |
| hadoop1 | | SecondaryNameNode | DataNode | | NodeManager |
| hadoop2 | | | DataNode | | NodeManager |

3.2 Set up passwordless SSH

Passwordless SSH must work between every pair of hosts, and from each host to itself. Run the commands as the hadoop user; the public key is written to /home/hadoop/.ssh/id_rsa.pub.

On hadoop0:

[hadoop@hadoop0 ~]# ssh-keygen -t rsa

[hadoop@hadoop0 ~]# ssh-copy-id hadoop0

[hadoop@hadoop0 ~]# ssh-copy-id hadoop1

[hadoop@hadoop0 ~]# ssh-copy-id hadoop2

Repeat on hadoop1. (In an HDFS HA deployment the NameNode hosts must trust each other; this cluster is not HA, but the pairwise requirement above still applies.)

[hadoop@hadoop1 ~]# ssh-keygen -t rsa

[hadoop@hadoop1 ~]# ssh-copy-id hadoop1

[hadoop@hadoop1 ~]# ssh-copy-id hadoop0

[hadoop@hadoop1 ~]# ssh-copy-id hadoop2

Repeat on hadoop2. (Likewise, in a YARN HA deployment the ResourceManager hosts must trust each other.)

[hadoop@hadoop2 ~]# ssh-keygen -t rsa

[hadoop@hadoop2 ~]# ssh-copy-id hadoop2

[hadoop@hadoop2 ~]# ssh-copy-id hadoop0

[hadoop@hadoop2 ~]# ssh-copy-id hadoop1
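Before moving on, it can be worth confirming that key-based login really works from the current host to every node, since start-dfs.sh and start-yarn.sh rely on it. The sketch below is an illustration rather than part of the original procedure: check_passwordless_ssh is a hypothetical helper, and the SSH variable is an assumed hook that lets the ssh command be swapped out (for example in a test). BatchMode=yes makes ssh fail instead of prompting for a password.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report, per host, whether a non-interactive SSH login succeeds.
# Usage: check_passwordless_ssh HOST...
# Returns non-zero if any host fails.
check_passwordless_ssh() {
    local ssh_cmd=${SSH:-ssh} host failed=0
    for host in "$@"; do
        # BatchMode=yes disables password prompts, so a missing key shows up as a failure.
        if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Example (run as the hadoop user on each of the three nodes):
#   check_passwordless_ssh hadoop0 hadoop1 hadoop2
```

Running it on all three nodes covers every pair, including each host's login to itself.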

3.3 Unpack Hadoop

[hadoop@hadoop0 hadoop]# tar -zxvf hadoop-2.7.7.tar.gz

4 Configure the Hadoop cluster

Note: the configuration files are in hadoop-2.7.7/etc/hadoop/.

4.1 Edit core-site.xml

[hadoop@hadoop0 hadoop]$ vi core-site.xml

<configuration>

<!-- Address of the NameNode in HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop0:9000</value>

</property>

<!-- Directory for files Hadoop generates at runtime; note that the tmp directory has to be created -->

<property>

<name>hadoop.tmp.dir</name>

<value>/hadoop/hadoop-2.7.7/tmp</value>

</property>

</configuration>
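To confirm that an edited file carries the intended values, a property can be read back from it. The sketch below is a rough illustration under stated assumptions: get_property is a hypothetical helper, not a Hadoop tool, and it only handles the simple layout used in this guide, where each name and value element sits on a single line and no two properties share a line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the <value> that follows a given <name> in a Hadoop *-site.xml file.
# Usage: get_property FILE KEY
# Prints nothing if KEY is not found.
get_property() {
    awk -v key="$2" '
        # Remember the most recent property name.
        /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
        # On a value line, print it if the remembered name matches, then stop.
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == key) { print v; exit } }
    ' "$1"
}

# Example:
#   get_property /hadoop/hadoop-2.7.7/etc/hadoop/core-site.xml fs.defaultFS
```

Once the Hadoop binaries are on PATH, hdfs getconf -confKey fs.defaultFS is the more reliable check, because it reports the value as Hadoop itself resolves it.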

4.2 Edit hadoop-env.sh

[hadoop@hadoop0 hadoop]$ vi hadoop-env.sh

Change the JAVA_HOME line to:

export JAVA_HOME=/hadoop/jdk1.8

4.3 Edit hdfs-site.xml

[hadoop@hadoop0 hadoop]$ vi hdfs-site.xml

<configuration>

<!-- HDFS replication factor; defaults to 3 when unset -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- Directory where the NameNode stores its data (dfs.name.dir is the deprecated name of this key) -->

<property>

<name>dfs.namenode.name.dir</name>

<value>/hadoop/hadoop-2.7.7/dfs/name</value>

</property>

<!-- Directory where each DataNode stores its data (dfs.data.dir is the deprecated name of this key) -->

<property>

<name>dfs.datanode.data.dir</name>

<value>/hadoop/hadoop-2.7.7/dfs/data</value>

</property>

<!-- HTTP address (host and port) of the SecondaryNameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop1:50090</value>

</property>

</configuration>

4.4 Edit slaves

[hadoop@hadoop0 hadoop]$ vi slaves

hadoop0

hadoop1

hadoop2

4.5 Edit mapred-site.xml

[hadoop@hadoop0 hadoop]# mv mapred-site.xml.template mapred-site.xml

[hadoop@hadoop0 hadoop]$ vi mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

4.6 Edit yarn-site.xml

[hadoop@hadoop0 hadoop]$ vi yarn-site.xml

<configuration>

<!-- How reducers fetch data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Hostname of the YARN ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop0</value>

</property>

</configuration>

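4.7 Distribute Hadoop to the other nodes

Run the copy from / on hadoop0, so that $PWD expands to / and the tree lands at /hadoop on each target. The account in the scp target is assumed here to be hadoop, the owner of /hadoop.

[hadoop@hadoop0 /]# scp -r hadoop/ hadoop@hadoop1:$PWD

[hadoop@hadoop0 /]# scp -r hadoop/ hadoop@hadoop2:$PWD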
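With more than a couple of worker nodes, the copy step is easier to repeat as a loop. The following is a minimal sketch under assumptions that are not in the original: distribute is a hypothetical helper, DRY_RUN is an assumed switch that prints the commands instead of running them, and scp connects as the current user because no account is given in the target.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a directory tree to the same parent directory on each host.
# Usage: distribute SRC_DIR HOST...
# With DRY_RUN=1 the scp commands are printed, not executed.
distribute() {
    local src=$1 dest host
    shift
    # Copy into the parent directory so SRC_DIR keeps its name on the target.
    dest=$(dirname "$src")
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -r $src $host:$dest"
        else
            scp -r "$src" "$host:$dest"
        fi
    done
}

# Example (run on hadoop0 as the user that owns /hadoop on the targets):
#   DRY_RUN=1 distribute /hadoop hadoop1 hadoop2   # preview
#   distribute /hadoop hadoop1 hadoop2             # copy
```

Previewing with DRY_RUN=1 first makes it easy to check the target paths before any files are transferred.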

4.8 Configure environment variables

[hadoop@hadoop0 ~]$ sudo vim /etc/profile

Append to the end of the file:

export HADOOP_HOME=/hadoop/hadoop-2.7.7

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Reload the profile so the change takes effect:

source /etc/profile

5 Start and verify the cluster

5.1 Start the cluster

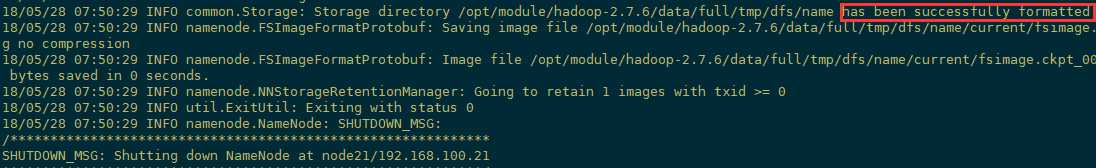

If this is the first time the cluster is started, format the NameNode first:

[hadoop@hadoop0 hadoop-2.7.7]$ hdfs namenode -format

Start HDFS:

[hadoop@hadoop0 ~]# start-dfs.sh

Starting namenodes on [hadoop0]

hadoop0: starting namenode, logging to /hadoop/hadoop-2.7.7/logs/hadoop-hadoop-namenode-hadoop0.out

hadoop0: starting datanode, logging to /hadoop/hadoop-2.7.7/logs/hadoop-hadoop-datanode-hadoop0.out

hadoop2: starting datanode, logging to /hadoop/hadoop-2.7.7/logs/hadoop-hadoop-datanode-hadoop2.out

hadoop1: starting datanode, logging to /hadoop/hadoop-2.7.7/logs/hadoop-hadoop-datanode-hadoop1.out

Starting secondary namenodes [hadoop1]

hadoop1: starting secondarynamenode, logging to /hadoop/hadoop-2.7.7/logs/hadoop-hadoop-secondarynamenode-hadoop1.out

Start YARN. Note: if the NameNode and the ResourceManager are on different machines, do not start YARN on the NameNode host; start it on the host that runs the ResourceManager.

[hadoop@hadoop0 ~]# start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /hadoop/hadoop-2.7.7/logs/yarn-hadoop-resourcemanager-hadoop0.out

hadoop2: starting nodemanager, logging to /hadoop/hadoop-2.7.7/logs/yarn-hadoop-nodemanager-hadoop2.out

hadoop1: starting nodemanager, logging to /hadoop/hadoop-2.7.7/logs/yarn-hadoop-nodemanager-hadoop1.out

hadoop0: starting nodemanager, logging to /hadoop/hadoop-2.7.7/logs/yarn-hadoop-nodemanager-hadoop0.out

Check the running processes with jps on each node:

[hadoop@hadoop0 hadoop-2.7.7]$ jps

2162 NodeManager

2058 ResourceManager

2458 Jps

1692 NameNode

1820 DataNode

[hadoop@hadoop1 hadoop]$ jps

1650 Jps

1379 DataNode

1478 SecondaryNameNode

1549 NodeManager

[hadoop@hadoop2 hadoop]$ jps

1506 DataNode

1611 NodeManager

1711 Jps
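Comparing the jps output against the deployment plan by eye gets tedious on repeated restarts. The sketch below is a hedged illustration rather than part of the original steps: missing_daemons is a hypothetical helper that reads jps output on standard input and prints every expected daemon that is absent. Names are compared exactly, so SecondaryNameNode does not count as NameNode.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each expected daemon name that is missing from jps output on stdin.
# Usage: jps | missing_daemons DAEMON...
missing_daemons() {
    local jps_output daemon
    jps_output=$(cat)
    for daemon in "$@"; do
        # jps prints "<pid> <name>"; compare the second field exactly.
        if ! printf '%s\n' "$jps_output" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}

# Example, following the deployment plan in section 3.1:
#   on hadoop0: jps | missing_daemons NameNode DataNode ResourceManager NodeManager
#   on hadoop1: jps | missing_daemons SecondaryNameNode DataNode NodeManager
#   on hadoop2: jps | missing_daemons DataNode NodeManager
```

No output means every expected daemon is running on that node.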

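5.2 Ways to start and stop Hadoop

1) Start or stop each daemon individually.

HDFS daemons: hadoop-daemon.sh start|stop namenode|datanode|secondarynamenode

YARN daemons: yarn-daemon.sh start|stop resourcemanager|nodemanager

2) Start or stop each module separately (requires passwordless SSH; this is the usual choice).

start-dfs.sh / stop-dfs.sh

start-yarn.sh / stop-yarn.sh

3) Start or stop everything at once (not recommended).

start-all.sh / stop-all.sh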

5.3 Cluster time synchronization

Check whether ntp is installed; rpm reports "package ntp is not installed" when it is missing:

[hadoop@hadoop0 hadoop-2.7.7]$ rpm -q ntp

[hadoop@hadoop0 hadoop-2.7.7]$ sudo yum install ntp #install ntp

[hadoop@hadoop0 hadoop-2.7.7]$ sudo ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime #set the system time zone to Asia/Shanghai

[hadoop@hadoop0 hadoop-2.7.7]$ sudo ntpdate time.windows.com #synchronize with a network time server

12 May 12:33:09 ntpdate[2571]: adjust time server 13.70.22.122 offset 0.029582 sec

[hadoop@hadoop0 hadoop-2.7.7]$ date #check that the time is now correct

Sun May 12 12:33:16 CST 2019
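After syncing each node, a quick comparison shows whether the three clocks actually agree. This is a rough sketch, not something the original post includes: clock_spread is a hypothetical helper that reads lines of the form "host epoch_seconds" and prints the difference between the largest and smallest timestamp.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "host epoch_seconds" lines on stdin and
# print the spread (max - min) in seconds. Prints nothing for empty input.
clock_spread() {
    awk 'NR == 1 { min = max = $2 }
         { if ($2 < min) min = $2; if ($2 > max) max = $2 }
         END { if (NR) print max - min }'
}

# Example (relies on the passwordless SSH set up in section 3.2):
#   for h in hadoop0 hadoop1 hadoop2; do
#       echo "$h $(ssh "$h" date +%s)"
#   done | clock_spread
```

Because the hosts are queried one after another, the SSH round trips themselves add a second or so to the result; a spread of a few seconds is therefore not necessarily a sign of drift, while a spread of minutes is.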
