Code:

```python
import random
import sys
from time import sleep

from bs4 import BeautifulSoup  # the 'lxml' parser used below must also be installed
from requests import Session

# Output file used throughout the post
OUTPUT_PATH = 'C:/Users/adimin/Desktop/proxyip.txt'


def format_row(ip, port, ptype, location):
    # Fixed-width columns: IP, PORT, TYPE, then the free-text location
    return format(ip, '<22') + format(port, '<17') + format(ptype, '<12') + location


class ProxyIpPool:

    def __init__(self, page):
        self.page = page

    def init_proxy_ip_pool(self):
        url = 'https://www.kuaidaili.com/free/'

        headers = {
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
            # 'br' omitted: requests only decodes Brotli when the brotli package is installed
            'Accept-Encoding': 'gzip, deflate',
            'Accept-Language': 'zh-CN,zh;q=0.9',
            'Connection': 'keep-alive',
            'Host': 'www.kuaidaili.com',
            # 'Referer': url,  # When paging, each page's Referer is the link of the page you came from;
            #                  # e.g. arriving at page 1 of the proxy list from Baidu, the Referer is Baidu's link
            'Upgrade-Insecure-Requests': '1',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.168 Safari/537.36',
        }

        # Page 1 is the bare URL; later pages live under inha/<page>/
        if self.page > 1:
            url = url + 'inha/' + str(self.page) + '/'

        session = Session()
        response = session.get(url, headers=headers, timeout=2)
        print(response.status_code)

        soup = BeautifulSoup(response.text, 'lxml')

        def column(title):
            # Text of every <td data-title="title"> cell, in page order
            return [td.get_text(strip=True)
                    for td in soup.find_all('td', attrs={'data-title': title})]

        # The data-title values are the site's own Chinese column names and must stay as-is:
        # 类型 = type, 位置 = location
        ip = column('IP')
        port = column('PORT')
        types = column('类型')
        position = column('位置')

        # Random pause (0 to 7 s) so pages are not requested back to back
        sleep(random.random() * 7)

        # Keep ip, port, type and position together in a dict
        return {'ip': ip, 'port': port, 'type': types, 'position': position}


def create_proxy_ip_pool(page):
    pool = ProxyIpPool(page).init_proxy_ip_pool()
    print('Initialization done! Building the proxy pool...')

    try:
        # Open the file once per page rather than once per row; UTF-8 keeps the Chinese locations intact
        with open(OUTPUT_PATH, 'a', encoding='utf-8') as fp:
            for row in zip(pool['ip'], pool['port'], pool['type'], pool['position']):
                line = format_row(*row)
                print(line)
                fp.write(line + '\n')  # text mode already turns '\n' into '\r\n' on Windows
    except OSError as err:
        print(err)
        sys.exit(2)


if __name__ == '__main__':
    print('Initializing the proxy pool... please wait...')
    header = format_row('IP', 'PORT', 'TYPE', 'LOCATION')
    print(header)
    # Mode 'a' creates the file if it does not exist, so no separate 'w' fallback is needed
    with open(OUTPUT_PATH, 'a', encoding='utf-8') as fp:
        fp.write(header + '\n')

    # For some reason, multiple pages of IPs can only be crawled when the loop is out here;
    # if the loop is moved into init_proxy_ip_pool, only a little more than one page gets crawled...
    for i in range(1, 2177):
        create_proxy_ip_pool(i)
```
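The extraction step depends on BeautifulSoup plus the lxml parser. To see what it does without third-party packages, here is a minimal sketch using only the standard library's `html.parser.HTMLParser`. This is a swapped-in technique, not what the post uses. It assumes, as the script does, that every table cell carries a `data-title` attribute of `IP`, `PORT`, `类型` or `位置`. The names `DataTitleCellParser` and `parse_rows` are hypothetical.

```python
from html.parser import HTMLParser


class DataTitleCellParser(HTMLParser):
    """Collect the text of <td data-title="..."> cells, grouped by data-title."""

    def __init__(self, titles):
        super().__init__()
        self.columns = {title: [] for title in titles}
        self._cell_title = None  # data-title of the wanted <td> we are currently inside
        self._cell_text = []

    def handle_starttag(self, tag, attrs):
        if tag == 'td':
            title = dict(attrs).get('data-title')
            if title in self.columns:  # ignore columns we did not ask for
                self._cell_title = title
                self._cell_text = []

    def handle_data(self, data):
        if self._cell_title is not None:
            self._cell_text.append(data)

    def handle_endtag(self, tag):
        if tag == 'td' and self._cell_title is not None:
            self.columns[self._cell_title].append(''.join(self._cell_text).strip())
            self._cell_title = None


def parse_rows(html, titles=('IP', 'PORT', '类型', '位置')):
    """Return one (ip, port, type, location) tuple per table row."""
    parser = DataTitleCellParser(titles)
    parser.feed(html)
    parser.close()
    # Like the original script, this assumes every row has all four cells;
    # otherwise the columns would drift out of alignment.
    return list(zip(*(parser.columns[title] for title in titles)))
```

Zipping the columns into row tuples keeps each IP next to its own port, type and location, which is what the script does implicitly by indexing all four lists with the same `i`.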

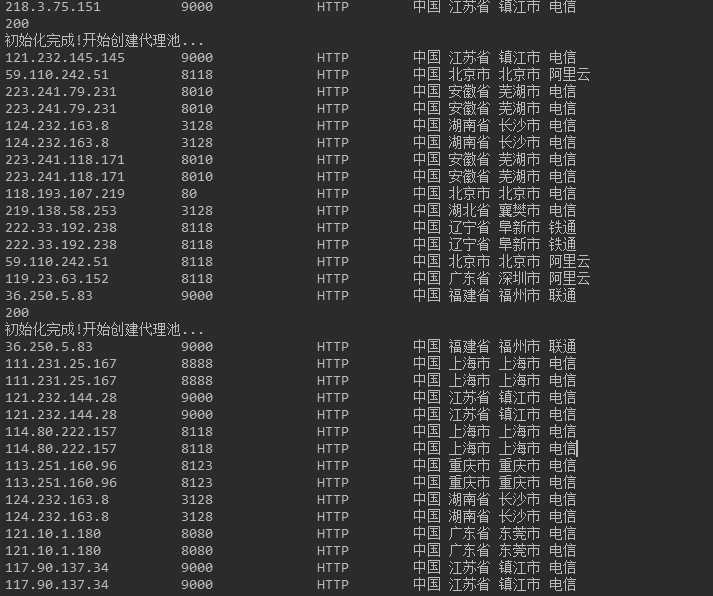
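The comment above the final `for` loop says that moving the loop into `init_proxy_ip_pool` yielded only about one page. The post doesn't explain why. One thing worth ruling out is a loop that wraps each page's results in another list (for example `ip.append(page_ips)`) while the printing code reads only index `[0]`. In that case every page after the first would be silently ignored. Below is a hedged sketch of looping inside a single function while keeping one flat list. `fetch_rows` is a hypothetical stand-in for the request-and-parse step, and the `inha/<page>/` URL pattern is taken from the script.

```python
import random
import time

BASE_URL = 'https://www.kuaidaili.com/free/'


def page_url(page):
    """Page 1 is the bare listing URL; later pages live under inha/<page>/."""
    return BASE_URL if page <= 1 else f'{BASE_URL}inha/{page}/'


def crawl_pages(first, last, fetch_rows, max_delay=7.0, sleep=time.sleep):
    """Crawl pages first..last (inclusive) and return one flat list of rows.

    fetch_rows(url) is a hypothetical callable that returns a list of
    (ip, port, type, location) tuples for a single page.
    """
    rows = []
    for page in range(first, last + 1):
        # extend (not append) so every page lands in the same flat list
        rows.extend(fetch_rows(page_url(page)))
        sleep(random.random() * max_delay)  # random pause between pages
    return rows
```

Passing `sleep` in as a parameter lets the delay be turned off when testing with a fake `fetch_rows`.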

Output:

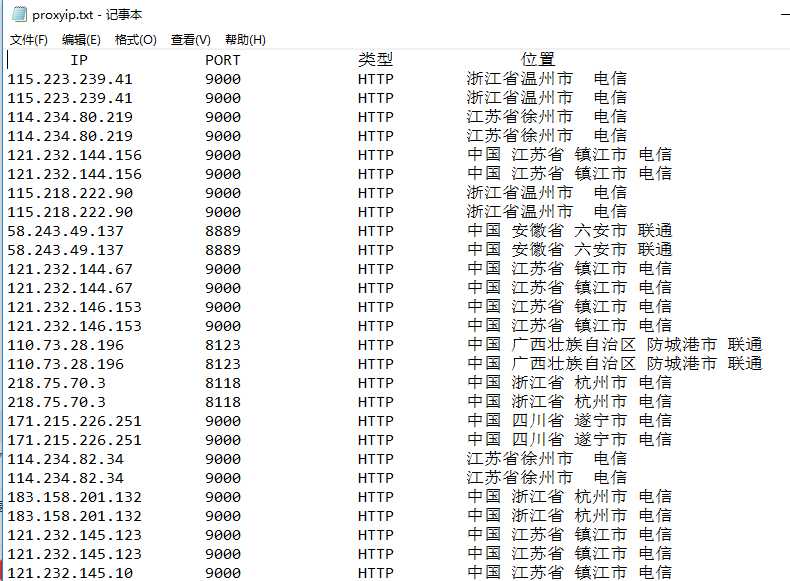
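The script saves each proxy as a fixed-width line. That is easy to read by eye but awkward to load back, because the free-text location may contain spaces. If the pool is meant to be reused later, a tab-separated file written with the standard `csv` module is sturdier. Here is a minimal sketch of that alternative. It is not the post's format, and `save_rows`/`load_rows` are hypothetical names.

```python
import csv
import io

FIELDS = ('ip', 'port', 'type', 'location')


def save_rows(rows, fp):
    """Write (ip, port, type, location) tuples as tab-separated lines with a header."""
    writer = csv.writer(fp, delimiter='\t')
    writer.writerow(FIELDS)
    writer.writerows(rows)


def load_rows(fp):
    """Read a file written by save_rows back into a list of dicts."""
    return list(csv.DictReader(fp, delimiter='\t'))


if __name__ == '__main__':
    # In-memory round trip; for a real file use open(path, 'w', newline='', encoding='utf-8')
    buf = io.StringIO()
    save_rows([('1.2.3.4', '8080', 'HTTP', '中国 江苏')], buf)
    buf.seek(0)
    print(load_rows(buf))
```

The `csv` documentation recommends opening real files with `newline=''` so the writer's own `\r\n` line endings are not translated a second time.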

Saved to a local file: