Spark Basics Study Notes 06: Setting Up a Cluster in Spark On YARN Mode

Posted by howard2005




0. Learning Objectives of This Lesson

- Learn how to set up a cluster in Spark On YARN mode
- Be able to submit Spark applications to the cluster for execution

1. Modify the Configuration of the Existing Spark Standalone Cluster

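- Setting up Spark On YARN mode is fairly simple: Spark only needs to be installed on one node of the YARN cluster, and that node serves as the client that submits Spark applications to the YARN cluster. Spark's own Master and Worker nodes do not need to be started.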

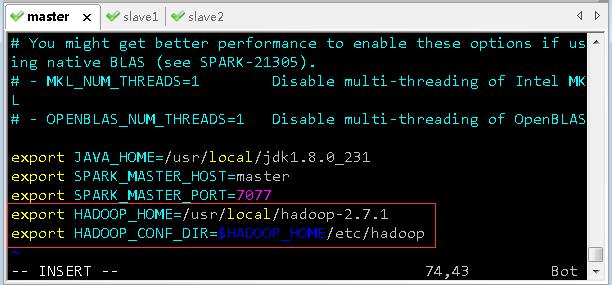
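Because Spark On YARN does not use the Standalone Master and Worker, it can help to confirm that they are not still running on the client node. The sketch below is a hypothetical helper (not part of Spark) that reads `jps` output, which lists each running JVM as `<pid> <MainClassName>`; the Spark Standalone daemons appear there as `Master` and `Worker`. If they are still up from the Standalone setup, Spark's `$SPARK_HOME/sbin/stop-all.sh` stops them (use the full path, since Hadoop ships a script with the same name).

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): reads `jps` output on stdin and
# succeeds (exit 0) if a Spark Standalone Master or Worker JVM is listed.
standalone_daemons_running() {
  # jps prints one "<pid> <MainClassName>" line per JVM
  awk '$2 == "Master" || $2 == "Worker" { found = 1 } END { exit !found }'
}

# Only check if jps (shipped with the JDK) is available on this machine
if command -v jps >/dev/null && jps | standalone_daemons_running; then
  echo "Spark Standalone Master/Worker is running; Spark On YARN does not need it."
  echo "Stop it with: \$SPARK_HOME/sbin/stop-all.sh"
fi
```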

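- This mode requires modifying the Spark configuration file $SPARK_HOME/conf/spark-env.sh: add the Hadoop-related properties that specify the Hadoop installation directory and the directory containing its configuration files.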

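```bash
# Settings already present from the Spark Standalone setup
export JAVA_HOME=/usr/local/jdk1.8.0_231
export SPARK_MASTER_HOST=master
export SPARK_MASTER_PORT=7077
# Added for Spark On YARN: Hadoop installation directory and its configuration directory
export HADOOP_HOME=/usr/local/hadoop-2.7.1
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
```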
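Instead of editing spark-env.sh by hand, the two Hadoop lines can also be appended from a script. The following is a minimal sketch with a hypothetical `ensure_export` helper: it appends `export NAME=VALUE` only when that variable is not already exported in the file, so running it twice does not create duplicate lines. The paths in the usage comments are the ones from the configuration above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "export NAME=VALUE" to a spark-env.sh file,
# but only if NAME is not already exported there (safe to run repeatedly).
ensure_export() {
  local file="$1" name="$2" value="$3"
  if ! grep -q "^export ${name}=" "$file" 2>/dev/null; then
    printf 'export %s=%s\n' "$name" "$value" >> "$file"
  fi
}

# Example usage; the single quotes keep $HADOOP_HOME literal so it is
# expanded later, when spark-env.sh itself is sourced:
#   ensure_export "$SPARK_HOME/conf/spark-env.sh" HADOOP_HOME /usr/local/hadoop-2.7.1
#   ensure_export "$SPARK_HOME/conf/spark-env.sh" HADOOP_CONF_DIR '$HADOOP_HOME/etc/hadoop'
```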

- After saving and exiting, run source spark-env.sh from $SPARK_HOME/conf so that the configuration takes effect in the current shell.
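In YARN mode Spark finds the ResourceManager and HDFS through the Hadoop client configuration in HADOOP_CONF_DIR, so a quick sanity check after sourcing can save a failed submission. This is a hypothetical helper, not a Spark or Hadoop command; it assumes the standard Hadoop site file names core-site.xml, hdfs-site.xml and yarn-site.xml.

```bash
#!/usr/bin/env bash
# Hypothetical sanity check: verify that the directory in HADOOP_CONF_DIR
# (or the directory passed as $1) contains the standard Hadoop client config files.
check_hadoop_conf_dir() {
  local dir="${1:-${HADOOP_CONF_DIR:-}}"
  local f status=0
  if [ -z "$dir" ]; then
    echo "HADOOP_CONF_DIR is not set" >&2
    return 1
  fi
  for f in core-site.xml hdfs-site.xml yarn-site.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      status=1
    fi
  done
  return "$status"
}

# Example usage after sourcing spark-env.sh:
#   check_hadoop_conf_dir && echo "HADOOP_CONF_DIR looks OK: $HADOOP_CONF_DIR"
```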

2. Run a Spark Application

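- Once the configuration is changed, Spark applications can be run. For example, run the Pi estimation example that ships with Spark in Spark On YARN cluster mode (start Hadoop HDFS and YARN beforehand).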

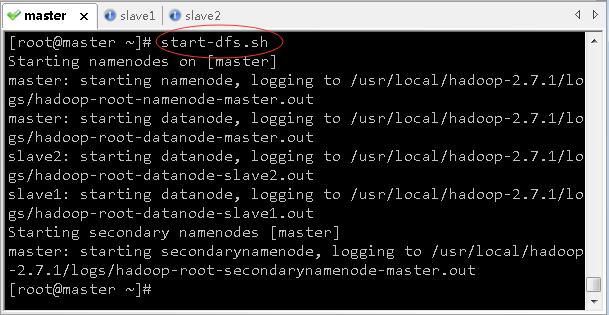

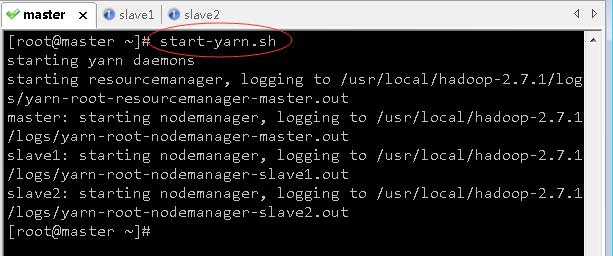

(1) Start Hadoop HDFS and YARN
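HDFS and YARN are started with Hadoop's own scripts in $HADOOP_HOME/sbin. The sketch below wraps them and adds a hypothetical `missing_daemons` helper that reads `jps` output and prints any expected daemon that is not running. Expecting NameNode and ResourceManager on the master node is an assumption based on this cluster's log (the ResourceManager is at master:8032 and HDFS at hdfs://master:9000); adjust the list to your own node layout.

```bash
#!/usr/bin/env bash
# Start HDFS, then YARN, with Hadoop's own scripts (run on the master node).
start_hdfs_and_yarn() {
  "$HADOOP_HOME/sbin/start-dfs.sh" && "$HADOOP_HOME/sbin/start-yarn.sh"
}

# Hypothetical helper: reads `jps` output on stdin and prints each expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
  local running d
  running=$(awk '{ print $2 }')
  for d in "$@"; do
    grep -qxF "$d" <<<"$running" || echo "$d"
  done
}

# Example usage on the master node (no output means everything is up):
#   start_hdfs_and_yarn
#   jps | missing_daemons NameNode ResourceManager
```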

(2) Run the Spark Application

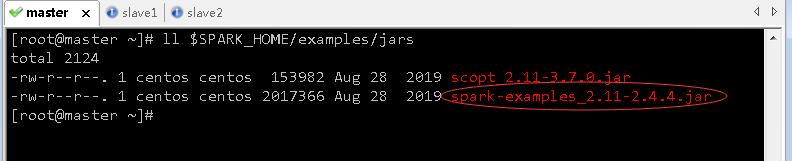

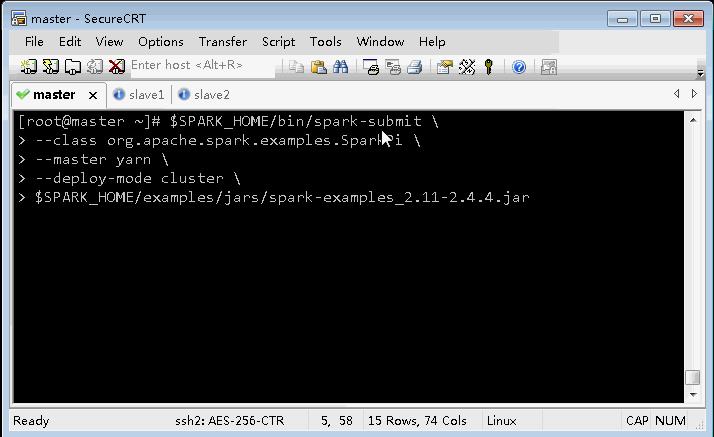

- Run the Spark application in Spark On YARN cluster mode, using the examples JAR $SPARK_HOME/examples/jars/spark-examples_2.11-2.4.4.jar

- Run the command:

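```bash
# Submit the bundled SparkPi example to YARN; in cluster mode the driver
# runs inside the YARN ApplicationMaster rather than on this client node
$SPARK_HOME/bin/spark-submit \
  --class org.apache.spark.examples.SparkPi \
  --master yarn \
  --deploy-mode cluster \
  $SPARK_HOME/examples/jars/spark-examples_2.11-2.4.4.jar
```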

```text
22/02/28 23:02:10 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
22/02/28 23:02:11 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.1.103:8032
22/02/28 23:02:11 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers
22/02/28 23:02:11 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)
22/02/28 23:02:11 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead
22/02/28 23:02:11 INFO yarn.Client: Setting up container launch context for our AM
22/02/28 23:02:11 INFO yarn.Client: Setting up the launch environment for our AM container
22/02/28 23:02:11 INFO yarn.Client: Preparing resources for our AM container
22/02/28 23:02:11 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
22/02/28 23:02:13 INFO yarn.Client: Uploading resource file:/tmp/spark-12d8a273-fc12-4e3b-9160-401e20d6d361/__spark_libs__4734784791851563295.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1646060221038_0001/__spark_libs__4734784791851563295.zip
22/02/28 23:02:17 INFO yarn.Client: Uploading resource file:/usr/local/spark-2.4.4-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.4.4.jar -> hdfs://master:9000/user/root/.sparkStaging/application_1646060221038_0001/spark-examples_2.11-2.4.4.jar
22/02/28 23:02:17 INFO yarn.Client: Uploading resource file:/tmp/spark-12d8a273-fc12-4e3b-9160-401e20d6d361/__spark_conf__1484099741755123215.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1646060221038_0001/__spark_conf__.zip
22/02/28 23:02:17 INFO spark.SecurityManager: Changing view acls to: root
22/02/28 23:02:17 INFO spark.SecurityManager: Changing modify acls to: root
22/02/28 23:02:17 INFO spark.SecurityManager: Changing view acls groups to:
22/02/28 23:02:17 INFO spark.SecurityManager: Changing modify acls groups to:
22/02/28 23:02:17 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()
22/02/28 23:02:18 INFO yarn.Client: Submitting application application_1646060221038_0001 to ResourceManager
22/02/28 23:02:18 INFO impl.YarnClientImpl: Submitted application application_1646060221038_0001
22/02/28 23:02:19 INFO yarn.Client: Application report for application_1646060221038_0001 (state: ACCEPTED)
22/02/28 23:02:19 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: default
     start time: 1646060538467
     final status: UNDEFINED
     tracking URL: http://master:8088/proxy/application_1646060221038_0001/
     user: root
22/02/28 23:02:20 INFO yarn.Client: Application report for application_1646060221038_0001 (state: ACCEPTED)
22/02/28 23:02:21 INFO yarn.Client: Application report for application_1646060221038_0001 (state: ACCEPTED)
22/02/28 23:02:22 INFO yarn.Client: Application report for application_1646060221038_0001 (state: ACCEPTED)
22/02/28 23:02:23 INFO yarn.Client: Application report for application_1646060221038_0001 (state: ACCEPTED)
22/02/28 23:02:24 INFO yarn.Client: Application report for application_1646060221038_0001 (state: ACCEPTED)
22/02/28 23:02:25 INFO yarn.Client: Application report for application_1646060221038_0001 (state: RUNNING)
22/02/28 23:02:25 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: master
     ApplicationMaster RPC port: 40773
     queue: default
     start time: 1646060538467
     final status: UNDEFINED
     tracking URL: http://master:8088/proxy/application_1646060221038_0001/
     user: root
22/02/28 23:02:26 INFO yarn.Client: Application report for application_1646060221038_0001 (state: RUNNING)
22/02/28 23:02:27 INFO yarn.Client: Application report for application_1646060221038_0001 (state: RUNNING)
22/02/28 23:02:28 INFO yarn.Client: Application report for application_1646060221038_0001 (state: RUNNING)
22/02/28 23:02:29 INFO yarn.Client: Application report for application_1646060221038_0001 (state: RUNNING)
```

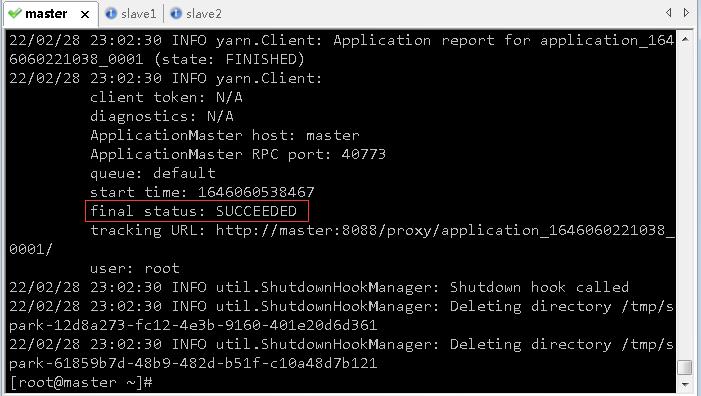

```text
22/02/28 23:02:30 INFO yarn.Client: Application report for application_1646060221038_0001 (state: FINISHED)
22/02/28 23:02:30 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: master
     ApplicationMaster RPC port: 40773
     queue: default
     start time: 1646060538467
     final status: SUCCEEDED
     tracking URL: http://master:8088/proxy/application_1646060221038_0001/
     user: root
22/02/28 23:02:30 INFO util.ShutdownHookManager: Shutdown hook called
22/02/28 23:02:30 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-12d8a273-fc12-4e3b-9160-401e20d6d361
22/02/28 23:02:30 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-61859b7d-48b9-482d-b51f-c10a48d7b121
```
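The submission and a quick check of its outcome can be scripted. The sketch below is hypothetical: `submit_spark_pi` wraps the spark-submit command above and accepts the deploy mode as its first argument, forwarding any further arguments to SparkPi (which treats its first argument as the number of slices). `SPARK_SUBMIT` is a made-up override used only for dry runs. `summarize_yarn_submit` pulls the application ID and the last "final status" out of output like the log above. In client mode the driver runs inside the local spark-submit process, so SparkPi's result line is printed to the local console instead of ending up in YARN's logs.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the spark-submit command above.
# $1 = deploy mode (cluster or client); remaining args are passed to SparkPi.
# SPARK_SUBMIT is a made-up override for dry runs (e.g. SPARK_SUBMIT=echo).
submit_spark_pi() {
  local mode="${1:-cluster}"
  case "$mode" in
    cluster|client) ;;
    *) echo "deploy mode must be 'cluster' or 'client'" >&2; return 2 ;;
  esac
  if [ "$#" -gt 0 ]; then shift; fi
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set" >&2
    return 1
  fi
  "${SPARK_SUBMIT:-$SPARK_HOME/bin/spark-submit}" \
    --class org.apache.spark.examples.SparkPi \
    --master yarn \
    --deploy-mode "$mode" \
    "$SPARK_HOME/examples/jars/spark-examples_2.11-2.4.4.jar" \
    "$@"
}

# Hypothetical helper: reads spark-submit output on stdin and prints the
# YARN application ID and the last reported "final status".
summarize_yarn_submit() {
  awk '
    /Submitted application application_/ { app = $NF }
    /final status:/                      { status = $NF }
    END { print app, status }
  '
}

# Example usage (Spark's console logging goes to stderr by default, hence 2>&1):
#   submit_spark_pi cluster 2>&1 | tee spark-pi.log | summarize_yarn_submit
```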

- Note:

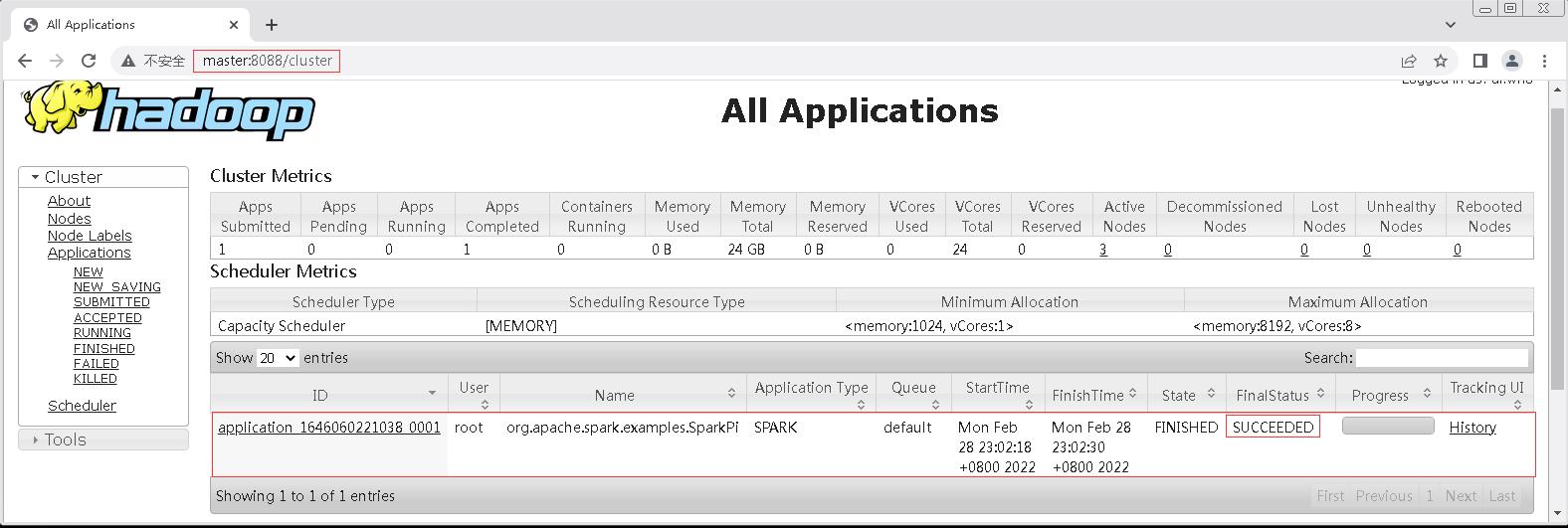

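While the application is running, detailed information about its execution can be viewed in the web UI of the YARN ResourceManager, at the tracking URL printed in the log: http://master:8088/proxy/application_1646060221038_0001/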

- Open http://master:8088 in a browser to access the YARN web UI.

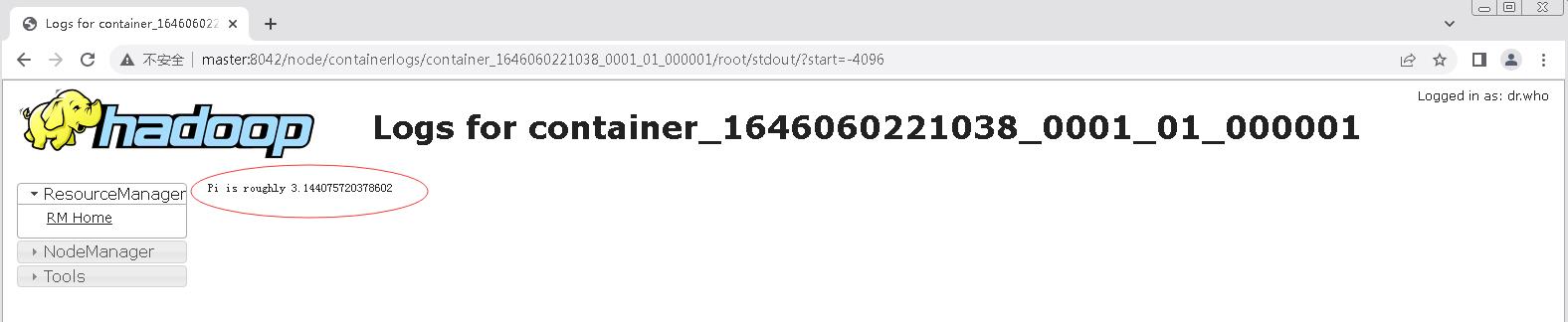
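The same application details can also be fetched without a browser. The YARN ResourceManager exposes a REST endpoint per application at /ws/v1/cluster/apps/{appid}, which returns JSON including the application's state and finalStatus. The hypothetical helper below derives that endpoint from a tracking URL like the one in the log; the `yarn application -status` command in the usage comments is the CLI equivalent.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a YARN tracking URL such as
#   http://master:8088/proxy/application_1646060221038_0001/
# into the ResourceManager REST endpoint for that application.
rm_app_rest_url() {
  local tracking_url="$1" base app_id
  base="${tracking_url%%/proxy/*}"   # -> http://master:8088
  app_id="${tracking_url#*/proxy/}"  # -> application_.../
  app_id="${app_id%%/*}"             # -> application_...
  echo "$base/ws/v1/cluster/apps/$app_id"
}

# Example usage (needs curl and network access to the ResourceManager):
#   curl -s "$(rm_app_rest_url http://master:8088/proxy/application_1646060221038_0001/)"
# The YARN CLI shows the same report:
#   yarn application -status application_1646060221038_0001
```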

(3) View the Results of the Spark Application

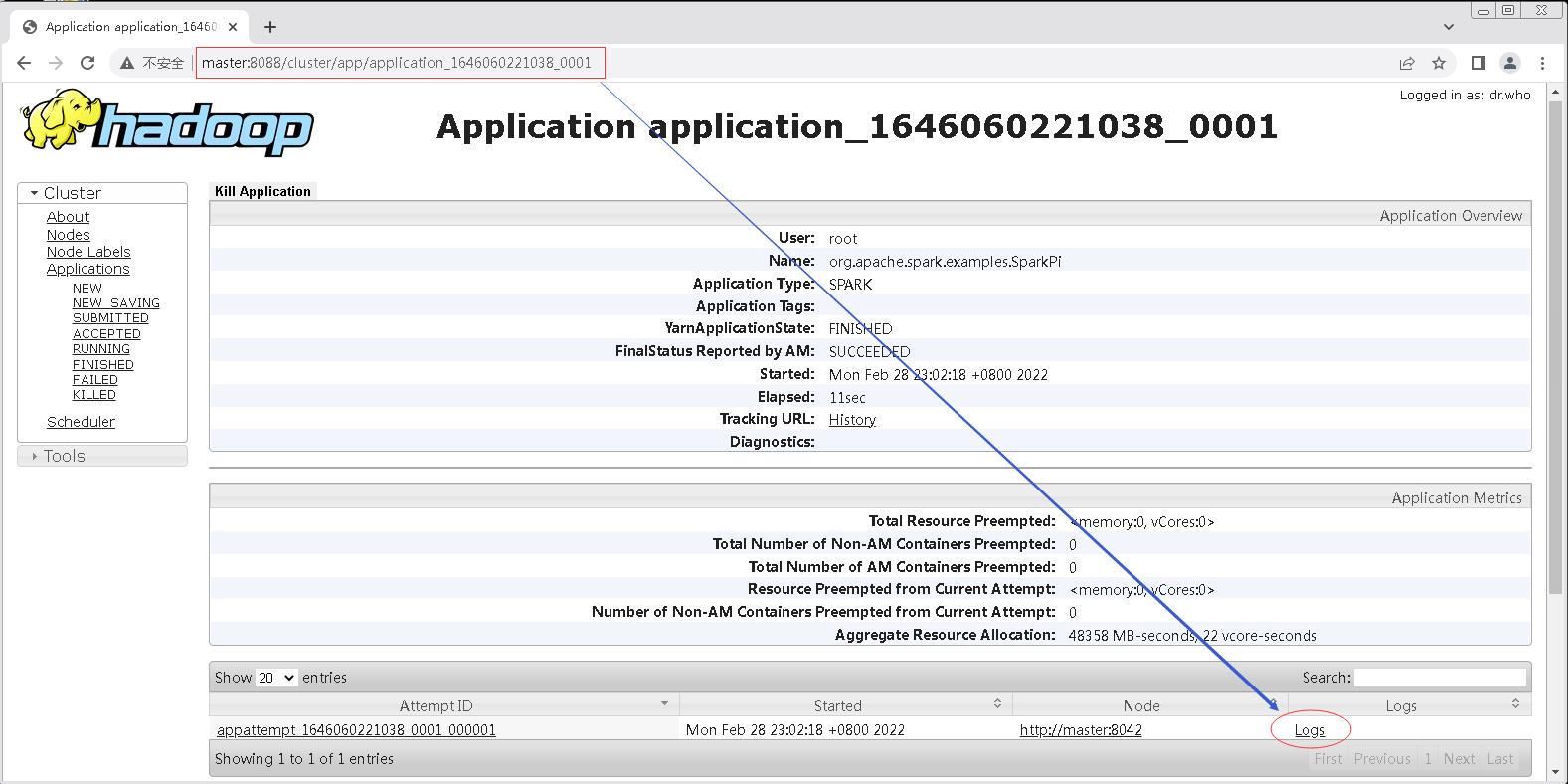

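When this example runs in Spark On YARN cluster mode, its output is not printed to the console. To see it, click the application ID in the web UI shown above, click the Logs hyperlink at the bottom of the application details page, and then click the stdout link on the new page. This displays the output log, and the result of the run is in that log. The whole process of viewing the log is shown below.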

- Click the Logs hyperlink
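As a command-line alternative to clicking through the web UI, `yarn logs -applicationId <id>` prints a finished application's aggregated container logs, including the driver's stdout. This only works if YARN log aggregation is enabled (yarn.log-aggregation-enable=true in yarn-site.xml), which the setup above does not mention, so treat it as an assumption about your cluster. SparkPi writes its result as a line beginning with "Pi is roughly"; the hypothetical filter below picks it out.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads container logs on stdin and prints the first
# "Pi is roughly ..." line written by SparkPi.
extract_pi_result() {
  grep -m 1 -o 'Pi is roughly [0-9.]*'
}

# Example usage, assuming log aggregation is enabled and the application has finished:
#   yarn logs -applicationId application_1646060221038_0001 | extract_pi_result
```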
