Digital Image Processing (Name Segmentation and Recognition)

Posted by 一只夫夫


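Foreword: this article was compiled by the editors of 小常识网 (cha138.com). It covers digital image processing applied to segmenting and recognizing names, and is offered for your reference.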

Task

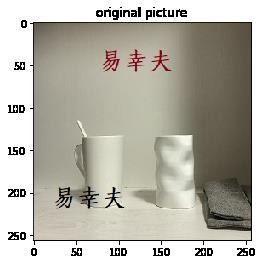

Use the image segmentation techniques covered in the course to segment and recognize the name contained in a picture.

Procedure

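- Use a boundary-based segmentation technique to extract the edge information of the image, then, based on the gray-level distribution, propose several candidate boxes that may contain a name

- Train a convolutional neural network (CNN) that recognizes whether an image block contains a name

- Remove duplicate detections and display each candidate box in which the CNN recognizes a name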

1. Extract edges and generate candidate boxes that may contain a name

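```python
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
import PIL.Image as im

# Helper module written by the author (not shown in this post); it provides
# creat_RGBmat, creat_X_test, load_picture and pre_process
from function import *

dim = 256

img = plt.imread('2.jpg')               # H x W x 3 array (uint8 for a JPEG)
img = img / 255.0                       # scale intensities to [0, 1]
img = tf.image.resize(img, (dim, dim))  # resize to 256 x 256

plt.title("original picture")
plt.imshow(img)
```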


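```python
# Convolve the image with a Laplacian-of-Gaussian (LoG) template to extract edges
img_in = np.zeros((1, dim, dim, 1))  # (batch of 1 image, height, width, 1 channel)
img_in[0, :, :, 0] = img[:, :, 0]    # use the first (red) channel as the grayscale input

fil = np.zeros((5, 5, 1, 1))         # filter: (height, width, in_channels, out_channels)
fil[:, :, 0, 0] = [[ 0,  0, -1,  0,  0],
                   [ 0, -1, -2, -1,  0],
                   [-1, -2, 16, -2, -1],
                   [ 0, -1, -2, -1,  0],
                   [ 0,  0, -1,  0,  0]]

img_out = tf.nn.conv2d(input=img_in, filters=fil, strides=1, padding='SAME')
img_out = np.clip(img_out, 0, 1)     # keep positive responses only, capped at 1

# Replicate the single-channel edge map into 3 channels for display
ccc = np.zeros((dim, dim, 3))
ccc[:, :, 0] = img_out[0, :, :, 0]
ccc[:, :, 1] = img_out[0, :, :, 0]
ccc[:, :, 2] = img_out[0, :, :, 0]
plt.imshow(ccc)
```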
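The 5 × 5 template above is a common discrete approximation of the Laplacian of Gaussian, with the sign chosen so the centre is positive. Its coefficients sum to zero, so uniform regions produce no response and only intensity changes, such as character strokes, light up. The NumPy-only sketch below checks this on a synthetic image. It assumes a zero-padded, same-size correlation, which is what `padding='SAME'` gives in `tf.nn.conv2d` (for this symmetric kernel, correlation and convolution coincide); the helper name `log_filter` is hypothetical.

```python
import numpy as np

# 5 x 5 LoG-style template from the post (positive centre, negative surround)
LOG_KERNEL = np.array([[ 0,  0, -1,  0,  0],
                       [ 0, -1, -2, -1,  0],
                       [-1, -2, 16, -2, -1],
                       [ 0, -1, -2, -1,  0],
                       [ 0,  0, -1,  0,  0]], dtype=float)


def log_filter(gray, kernel=LOG_KERNEL):
    """Hypothetical helper: same-size 2-D correlation with zero padding."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(gray, ((ph, ph), (pw, pw)), mode="constant")
    out = np.zeros(gray.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            # add kernel[i, j] times the image shifted by (i - ph, j - pw)
            out += kernel[i, j] * padded[i:i + gray.shape[0], j:j + gray.shape[1]]
    return out


# Synthetic test image: a bright 10 x 10 square on a dark 30 x 30 background
gray = np.zeros((30, 30))
gray[10:20, 10:20] = 1.0

response = log_filter(gray)
edges = np.clip(response, 0, 1)  # same clipping as in the post

print(LOG_KERNEL.sum())          # 0.0: flat regions give no response
print(response[15, 15])          # 0.0: inside the square
print(response[10, 15] > 0)      # True: the top edge of the square responds
```

The inner side of an edge gives a positive response and the outer side a negative one, so clipping to `[0, 1]` keeps a thin outline on the bright side of each stroke.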

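```python
# Generate candidate regions that may contain a name and display these "proposal" boxes
co = []
mat = img_out[0, :, :, 0]
gray_mean = np.mean(mat)  # mean edge response over the whole image
gray_std = np.std(mat)    # standard deviation of the edge response (not used below)

# Slide a 100 x 100 window over a 6 x 6 grid of centres (50, 80, ..., 200) and keep
# every window whose mean edge response is above the global mean
for x in range(50, 230, 30):
    for y in range(50, 230, 30):
        mean = np.mean(mat[x-50:x+50, y-50:y+50])
        if mean - gray_mean > 0:
            co.append((x, y))

RGBmat = creat_RGBmat(ccc, co)  # author's helper (not shown): marks the proposal boxes
plt.figure(1)
plt.title("proposal blocks")
plt.imshow(RGBmat)

plt.figure(2)
picture_test = creat_X_test(img, co, 100)  # author's helper (not shown): 100 x 100 blocks
print("Number of proposal regions:", len(co))
print("block shape:", picture_test.shape)

# Lay the blocks out 4 per row, with as many rows as needed
n_cols = 4
n_rows = int(np.ceil(picture_test.shape[0] / n_cols))
for i in range(picture_test.shape[0]):
    plt.subplot(n_rows, n_cols, i+1)
    plt.imshow(picture_test[i])
    plt.axis('off')
```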

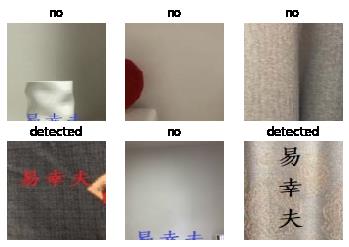
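The helpers `creat_RGBmat` and `creat_X_test` come from the author's unshown module `function`, so their exact behaviour is not known. The sketch below is a hypothetical, NumPy-only stand-in, assuming that each proposal `(x, y)` is the (row, column) centre of a 100 × 100 block: `propose_blocks` repeats the grid-and-threshold rule above, `crop_blocks` plays the role of `creat_X_test`, and `draw_boxes` plays the role of `creat_RGBmat` by painting a red outline. All three names are illustrative, not the author's code.

```python
import numpy as np


def propose_blocks(edge_map, half=50, start=50, stop=230, step=30):
    """Return (row, col) centres whose window mean exceeds the global mean."""
    global_mean = edge_map.mean()
    centres = []
    for x in range(start, stop, step):
        for y in range(start, stop, step):
            window = edge_map[x - half:x + half, y - half:y + half]
            if window.mean() > global_mean:
                centres.append((x, y))
    return centres


def crop_blocks(image, centres, size=100):
    """Hypothetical stand-in for creat_X_test: stack size x size crops."""
    half = size // 2
    if not centres:
        return np.empty((0, size, size) + image.shape[2:], dtype=image.dtype)
    return np.stack([image[x - half:x + half, y - half:y + half] for x, y in centres])


def draw_boxes(image, centres, size=100, color=(1.0, 0.0, 0.0)):
    """Hypothetical stand-in for creat_RGBmat: 1-pixel outline per centre (RGB)."""
    out = np.array(image, dtype=float, copy=True)
    half = size // 2
    for x, y in centres:
        top, bottom = x - half, x + half - 1
        left, right = y - half, y + half - 1
        out[top, left:right + 1] = color
        out[bottom, left:right + 1] = color
        out[top:bottom + 1, left] = color
        out[top:bottom + 1, right] = color
    return out


# Synthetic 256 x 256 edge map with strong responses in the upper-left corner
edge_map = np.zeros((256, 256))
edge_map[20:90, 20:90] = 1.0
rgb = np.dstack([edge_map] * 3)

centres = propose_blocks(edge_map)
blocks = crop_blocks(rgb, centres)
boxed = draw_boxes(rgb, centres)
print(len(centres), blocks.shape)  # 9 (9, 100, 100, 3)
```

Because grid centres are only 30 px apart while blocks are 100 px wide, one bright area typically triggers several overlapping proposals, which is why step 3 needs a de-duplication pass.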

2. Train a convolutional neural network

```python
# load_picture and pre_process are the author's helpers (not shown): they load the
# labelled training images and split them into training and test sets
xx, yy = load_picture()
xx_train, yy_train, xx_test, yy_test = pre_process(xx, yy, 6)
xx_train = tf.image.resize(xx_train, (100, 100))
xx_test = tf.image.resize(xx_test, (100, 100))
print("xx_train.shape:", xx_train.shape)
print("xx_test.shape:", xx_test.shape)

print("\t\tShowing 6 training images")
for i in range(6):
    plt.subplot(2, 3, i+1)
    plt.title(yy_train[i])
    plt.imshow(xx_train[i])
    plt.axis('off')
```

```python
# Define the model
def model_init_fun(input_shape, num_classes):
    # VarianceScaling with scale=2.0 (fan_in mode by default) is He-style initialization
    initializer = tf.keras.initializers.VarianceScaling(scale=2.0)
    layers = [
        tf.keras.layers.Conv2D(input_shape=input_shape, filters=30, padding='same',
                               kernel_size=(10, 10), activation='relu',
                               kernel_initializer=initializer, name='conv1'),
        tf.keras.layers.Conv2D(filters=60, padding='same', kernel_size=(5, 5),
                               activation='relu', kernel_initializer=initializer, name='conv2'),
        tf.keras.layers.Conv2D(filters=120, padding='same', kernel_size=(3, 3),
                               activation='relu', kernel_initializer=initializer, name='conv3'),
        tf.keras.layers.MaxPool2D(pool_size=(4, 4)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(num_classes, kernel_initializer=initializer, name='fc'),
        tf.keras.layers.Softmax()  # output class probabilities
    ]
    model = tf.keras.Sequential(layers)
    return model


def optimizer_init_fn(learning_rate):
    return tf.keras.optimizers.Adam(learning_rate=learning_rate)


cnn = model_init_fun((100, 100, 3), 2)  # 100 x 100 RGB input, 2 classes (0 = no name, 1 = name)
opt = optimizer_init_fn(1e-4)
```
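With `padding='same'` the three convolutions keep the 100 × 100 spatial size, so only the 4 × 4 max-pool shrinks the feature map, to 25 × 25 × 120 = 75,000 values feeding the dense layer. The short sketch below works out the trainable parameter count layer by layer (weights plus one bias per filter or unit), which is what `cnn.summary()` reports for this architecture; `conv_params` and `dense_params` are illustrative helper names.

```python
def conv_params(kernel, in_ch, filters):
    """Weights (kernel_h * kernel_w * in_ch * filters) plus one bias per filter."""
    kh, kw = kernel
    return kh * kw * in_ch * filters + filters


def dense_params(in_features, units):
    """Weight matrix plus one bias per unit."""
    return in_features * units + units


h = w = 100                        # 'same' padding keeps 100 x 100 through conv1..conv3
conv1 = conv_params((10, 10), 3, 30)
conv2 = conv_params((5, 5), 30, 60)
conv3 = conv_params((3, 3), 60, 120)
flat = (h // 4) * (w // 4) * 120   # size after MaxPool2D(pool_size=(4, 4)) and Flatten
fc = dense_params(flat, 2)         # MaxPool2D, Flatten and Softmax have no parameters

total = conv1 + conv2 + conv3 + fc
print(conv1, conv2, conv3, flat, fc, total)
# 9030 45060 64920 75000 150002 269012
```

More than half of the parameters sit in the final dense layer, a direct consequence of flattening a fairly large 25 × 25 × 120 feature map.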

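```python
# Train the model and show part of the validation results
cnn.compile(optimizer=opt, loss='sparse_categorical_crossentropy',
            metrics=[tf.keras.metrics.sparse_categorical_accuracy])
cnn.fit(x=xx_train, y=yy_train, epochs=20, verbose=0)
cnn.evaluate(x=xx_test, y=yy_test, verbose=2)

# Sequential.predict_classes no longer exists in TensorFlow >= 2.6;
# taking the argmax of the predicted probabilities is equivalent
y_pre = np.argmax(cnn.predict(xx_test), axis=1)

print("\n\t\t\tValidation results")
n_test = xx_test.shape[0]
for i in range(n_test):
    plt.subplot(2, int(np.ceil(n_test / 2)), i+1)  # subplot needs integer grid sizes
    if y_pre[i] == 1:
        title = "detected"
    else:
        title = "no"
    plt.title(title)
    plt.imshow(xx_test[i])
    plt.axis('off')
```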

6/6 - 0s - loss: 0.0607 - sparse_categorical_accuracy: 1.0000

Validation results

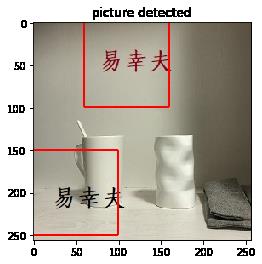
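Because the model ends in a `Softmax` layer, the `'sparse_categorical_crossentropy'` loss (with its default `from_logits=False`) scores each sample as the negative log of the probability assigned to its integer label, and `sparse_categorical_accuracy` checks whether the argmax class matches the label; that argmax is also what `predict_classes` returned. Below is a NumPy sketch of both quantities using made-up probabilities purely for illustration. Keras also clips probabilities away from 0 and 1 before taking the log; the `1e-7` epsilon here is an assumption mirroring the Keras default.

```python
import numpy as np


def sparse_categorical_crossentropy(probs, labels, eps=1e-7):
    """Mean of -log(p[label]) over the batch; probs has shape (n, num_classes)."""
    probs = np.clip(probs, eps, 1 - eps)
    picked = probs[np.arange(len(labels)), labels]
    return float(np.mean(-np.log(picked)))


def sparse_categorical_accuracy(probs, labels):
    """Fraction of samples whose argmax class equals the integer label."""
    return float(np.mean(np.argmax(probs, axis=1) == labels))


# Illustrative softmax outputs for 4 blocks (column 0 = "no", column 1 = "detected")
probs = np.array([[0.9, 0.1],
                  [0.2, 0.8],
                  [0.6, 0.4],
                  [0.3, 0.7]])
labels = np.array([0, 1, 1, 1])

loss = sparse_categorical_crossentropy(probs, labels)
acc = sparse_categorical_accuracy(probs, labels)
print(round(loss, 4), acc)  # 0.4004 0.75
```

The third block is misclassified (0.6 vs 0.4), which costs one quarter of the accuracy and contributes most of the loss (`-log(0.4) ≈ 0.92`).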

3. Run detection on the proposals and display the boxes in which a name is detected

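```python
# Classify each proposal block
# (argmax of the predicted probabilities replaces the removed predict_classes)
y_pre = np.argmax(cnn.predict(picture_test), axis=1)

print("\n\t\t\tDetection results")
n_cols = 4
n_rows = int(np.ceil(y_pre.shape[0] / n_cols))
for i in range(y_pre.shape[0]):
    plt.subplot(n_rows, n_cols, i+1)
    if y_pre[i] == 1:
        title = "detected"
    else:
        title = "no"
    plt.title(title)
    plt.imshow(picture_test[i])
    plt.axis('off')
```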

Detection results

```python
# De-duplicate the detected proposals and display them
co_final = []
index = np.where(y_pre == 1)[0]  # indices of proposals classified as "name"
for i in index:
    x, y = co[i]
    # Keep this proposal only if it lies at least 50 px from every proposal already
    # kept. Neighbouring grid centres are 30 px apart (about 42 px diagonally), so
    # overlapping detections of the same name are dropped.
    if all((x - px)**2 + (y - py)**2 >= 50**2 for px, py in co_final):
        co_final.append((x, y))

final_picture = creat_RGBmat(np.array(img), co_final)
plt.title("picture detected")
plt.imshow(final_picture)
```
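The de-duplication above uses a fixed distance threshold between proposal centres. A common alternative in object detection, not used in the original post, is greedy non-maximum suppression (NMS): sort the proposals by the CNN's "name" probability and drop any box whose intersection-over-union (IoU) with an already kept box exceeds a threshold. The sketch below assumes every proposal is a 100 × 100 box centred at its `(x, y)` entry, as in `co`; the function names and the 0.3 threshold are illustrative choices.

```python
import numpy as np


def box_iou(c1, c2, size=100):
    """IoU of two size x size boxes given by their (row, col) centres."""
    overlap_r = max(0, size - abs(c1[0] - c2[0]))
    overlap_c = max(0, size - abs(c1[1] - c2[1]))
    inter = overlap_r * overlap_c
    return inter / (2 * size * size - inter)


def nms(centres, scores, iou_threshold=0.3, size=100):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = np.argsort(scores)[::-1]  # indices sorted by descending score
    keep = []
    for i in order:
        if all(box_iou(centres[i], centres[j], size) <= iou_threshold for j in keep):
            keep.append(int(i))
    return keep


# Two pairs of overlapping proposals; each pair should collapse to its best box
centres = [(50, 50), (80, 50), (170, 50), (170, 80)]
scores = np.array([0.9, 0.8, 0.7, 0.95])
print(nms(centres, scores))  # [3, 0]
```

In this pipeline, the scores could be taken from the second column of `cnn.predict(picture_test)` for the proposals classified as "name". Unlike the distance rule, NMS always keeps the most confident box in each cluster rather than the first one in grid order.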

