Rendering YUV Data in Unity ---- Using Android Camera Data as an Example

Posted by newchenxf


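1 Background

Unity normally renders RGB data directly, but in some cases it has to render YUV data. One example is Unity reading YUV frames from the Android camera and rendering them. This article discusses that case.

Reading an Android byte array from Unity is already slow. If the YUV-to-RGB conversion is also done in a script (that is, on the CPU), playback stutters badly.

One solution is to do the conversion on the GPU, that is, in a shader.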
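To make the per-pixel cost concrete, here is a minimal Java sketch of the arithmetic that converts one YUV sample to RGB. It assumes full-range BT.601 coefficients, which the article does not specify; the class name `YuvPixel` and its methods are hypothetical and only for illustration. A fragment shader would evaluate the same three expressions once per pixel, in parallel on the GPU.

```java
public final class YuvPixel {
    private YuvPixel() {}

    /** Clamps a value to the 0..255 range of an 8-bit colour channel. */
    static int clamp(int v) {
        return Math.max(0, Math.min(255, v));
    }

    /**
     * Converts one (Y, U, V) triple, each in 0..255, to a packed 0xRRGGBB value.
     * ASSUMPTION: full-range BT.601 coefficients; a real camera may use another matrix.
     */
    public static int yuvToRgb(int y, int u, int v) {
        float cb = u - 128f; // blue-difference chroma, centred on zero
        float cr = v - 128f; // red-difference chroma, centred on zero
        int r = clamp(Math.round(y + 1.402f * cr));
        int g = clamp(Math.round(y - 0.344136f * cb - 0.714136f * cr));
        int b = clamp(Math.round(y + 1.772f * cb));
        return (r << 16) | (g << 8) | b;
    }

    public static void main(String[] args) {
        // Neutral chroma (U = V = 128) yields a grey whose level equals Y.
        System.out.printf("%06X%n", yuvToRgb(128, 128, 128));
    }
}
```

Running this for every pixel of every frame on the CPU is the workload the shader approach avoids.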

The source code and implementation are presented below in two parts.

2 YUV数据来源 ---- Android 侧

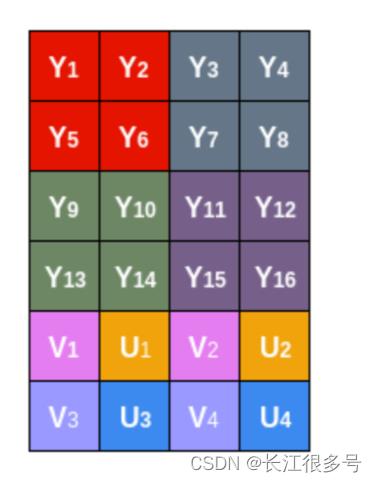

Android的Camera数据,一般是YUV格式的,最常用的就是NV21。其像素布局如下:

即数据排列是YYYYVUVU…
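The layout can be expressed as index arithmetic. The sketch below assumes a tightly packed frame (row stride equal to the width) with even width and height; the class `Nv21Layout` and its method names are hypothetical. Each 2x2 block of pixels shares one V sample and one U sample, so the chroma plane is half the size of the luma plane.

```java
public final class Nv21Layout {
    private Nv21Layout() {}

    /** Total size in bytes of one NV21 frame: Y plane plus half-size VU plane. */
    public static int frameSize(int width, int height) {
        return width * height * 3 / 2;
    }

    /** Index of the Y sample of pixel (x, y). */
    public static int yIndex(int width, int x, int y) {
        return y * width + x;
    }

    /** Index of the V sample shared by the 2x2 block that contains pixel (x, y). */
    public static int vIndex(int width, int height, int x, int y) {
        int chromaStart = width * height;           // VU plane begins after the Y plane
        return chromaStart + (y / 2) * width + (x / 2) * 2;
    }

    /** Index of the U sample; in NV21 it directly follows its V sample. */
    public static int uIndex(int width, int height, int x, int y) {
        return vIndex(width, height, x, y) + 1;
    }

    public static void main(String[] args) {
        int w = 4, h = 2;
        System.out.println("frame bytes: " + frameSize(w, h));
        System.out.println("V index of pixel (2,1): " + vIndex(w, h, 2, 1));
    }
}
```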

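The Android side has one job: open the camera, render the preview into a throwaway texture, and capture the preview frame data.

A SimpleCameraPlugin class serves as the interface through which Unity calls into Android. The code is as follows: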

    package com.chenxf.unitycamerasdk;

    import android.app.Activity;
    import android.content.Context;
    import android.graphics.Point;
    import android.graphics.SurfaceTexture;
    import android.hardware.Camera;
    import android.opengl.GLES11Ext;
    import android.opengl.GLES20;
    import android.os.Handler;
    import android.os.Looper;
    import android.os.Message;
    import android.util.Log;
    import android.util.Size;

    /**
     * Reads YUV data directly so that it can be rendered in Unity.
     *
     * CameraUtil is used without an import, so it must live in the same package as this class.
     */
    public class SimpleCameraPlugin implements SurfaceTexture.OnFrameAvailableListener, Camera.PreviewCallback {

        private static final String TAG = "qymv#CameraPlugin";

        private final static int REQUEST_CODE = 1;
        private final static int MSG_START_PREVIEW = 1;
        private final static int MSG_SWITCH_CAMERA = 2;
        private final static int MSG_RELEASE_PREVIEW = 3;
        private final static int MSG_MANUAL_FOCUS = 4;
        private final static int MSG_ROCK = 5;

        private SurfaceTexture mSurfaceTexture;
        private boolean mIsUpdateFrame;
        private Context mContext;
        private Handler mCameraHandler;
        private Size mExpectedPreviewSize;
        private Size mPreviewSize;
        private boolean isFocusing;
        private int mWidth;
        private int mHeight;
        private byte[] yBuffer = null;   // Y plane: width * height bytes
        private byte[] uvBuffer = null;  // interleaved VU plane: width * height / 2 bytes

        public SimpleCameraPlugin() {
            Log.i(TAG, " create");
            initCameraHandler();
        }

        // All camera operations are dispatched to the main looper.
        private void initCameraHandler() {
            mCameraHandler = new Handler(Looper.getMainLooper()) {
                @Override
                public void handleMessage(Message msg) {
                    switch (msg.what) {
                        case MSG_START_PREVIEW:
                            startPreview();
                            break;
                        case MSG_RELEASE_PREVIEW:
                            releasePreview();
                            break;
                        case MSG_SWITCH_CAMERA:
                            //switchCamera();
                            break;
                        case MSG_MANUAL_FOCUS:
                            //manualFocus(msg.arg1, msg.arg2);
                            break;
                        case MSG_ROCK:
                            autoFocus();
                            break;
                        default:
                            break;
                    }
                }
            };
        }

        public void releasePreview() {
            CameraUtil.releaseCamera();
            // mCameraSensor.stop();
            // mFocusView.cancelFocus();
            Log.e(TAG, "releasePreview releaseCamera");
        }

        public void startPreview() {
            //if (mPreviewSize != null && requestPermission() )
            if (mExpectedPreviewSize != null) {
                if (CameraUtil.getCamera() == null) {
                    CameraUtil.openCamera();
                    Log.e(TAG, "openCamera");
                    //CameraUtil.setDisplay(mSurfaceTexture);
                }
                Camera.Size previewSize = CameraUtil.startPreview((Activity) mContext, mExpectedPreviewSize.getWidth(), mExpectedPreviewSize.getHeight());
                CameraUtil.setCallback(this);
                if (previewSize != null) {
                    // Use the size the camera actually selected, not the requested one.
                    mWidth = previewSize.width;
                    mHeight = previewSize.height;
                    mPreviewSize = new Size(previewSize.width, previewSize.height);
                    initSurfaceTexture(previewSize.width, previewSize.height);
                    initBuffer(previewSize.width, previewSize.height);
                    CameraUtil.setDisplay(mSurfaceTexture);
                }
            }
        }

        private void initBuffer(int width, int height) {
            yBuffer = new byte[width * height];
            uvBuffer = new byte[width * height / 2];
        }

        public void autoFocus() {
            if (CameraUtil.isBackCamera() && CameraUtil.getCamera() != null) {
                focus(mWidth / 2, mHeight / 2, true);
            }
        }

        private void focus(final int x, final int y, final boolean isAutoFocus) {
            Log.i(TAG, "focus, position: " + x + " " + y);
            if (CameraUtil.getCamera() == null || !CameraUtil.isBackCamera()) {
                return;
            }
            if (isFocusing && isAutoFocus) {
                return;
            }
            if (mWidth == 0 || mHeight == 0) {
                return;
            }
            isFocusing = true;
            Point focusPoint = new Point(x, y);
            Size screenSize = new Size(mWidth, mHeight);
            if (!isAutoFocus) {
                //mFocusView.beginFocus(x, y);
            }
            CameraUtil.newCameraFocus(focusPoint, screenSize, new Camera.AutoFocusCallback() {
                @Override
                public void onAutoFocus(boolean success, Camera camera) {
                    isFocusing = false;
                    if (!isAutoFocus) {
                        //mFocusView.endFocus(success);
                    }
                }
            });
        }

        /**
         * Initialization.
         * This function must be called on the EGL thread; otherwise the following error occurs:
         * libEGL : call to OpenGL ES API with no current context
         *
         * @param context the Android context, preferably the Activity context
         * @param width   texture width
         * @param height  texture height
         */
        public void start(Context context, int width, int height) {
            Log.w(TAG, "Start context " + context);
            mContext = context;
            mWidth = width;
            mHeight = height;
            callStartPreview(width, height);
        }

        private void callStartPreview(int width, int height) {
            mExpectedPreviewSize = new Size(width, height);
            mCameraHandler.sendEmptyMessage(MSG_START_PREVIEW);
            // Trigger an auto-focus two seconds after the preview starts.
            mCameraHandler.sendEmptyMessageDelayed(MSG_ROCK, 2000);
        }

        public void resume() {
        }

        public void pause() {
            mCameraHandler.sendEmptyMessage(MSG_RELEASE_PREVIEW);
        }

        private void initSurfaceTexture(int width, int height) {
            Log.i(TAG, "initSurfaceTexture " + " width " + width + " height " + height);
            // Create the texture id that receives the camera output.
            int videoTextureId = createOESTextureID();
            // Create a SurfaceTexture from that texture id; the camera preview is output to it.
            mSurfaceTexture = new SurfaceTexture(videoTextureId);
            mSurfaceTexture.setDefaultBufferSize(width, height);
            mSurfaceTexture.setOnFrameAvailableListener(this);
        }

        @Override
        public void onFrameAvailable(SurfaceTexture surfaceTexture) {
            Log.i(TAG, "onFrameAvailable");
            // The preview has started producing output.
            mIsUpdateFrame = true;
            if (frameListener != null) {
                frameListener.onFrameAvailable();
            }
        }

        /**
         * Called by Unity: updates the video texture, then draws the FBO.
         */
        public void updateTexture() {
            Log.i(TAG, "updateTexture");
            mIsUpdateFrame = false;
            mSurfaceTexture.updateTexImage();
        }

        public boolean isUpdateFrame() {
            return mIsUpdateFrame;
        }

        // No @Override here: neither implemented interface declares these lifecycle hooks.
        public void onActivityResume() {
            resume();
        }

        public void onActivityPause() {
            pause();
        }

        private FrameListener frameListener;

        public void setFrameListener(FrameListener listener) {
            frameListener = listener;
        }

        // Splits one NV21 preview frame into the Y plane and the interleaved VU plane.
        private synchronized boolean copyFrame(byte[] bytes) {
            Log.i(TAG, "copyFrame start");
            if (yBuffer != null && uvBuffer != null
                    && bytes != null && bytes.length >= yBuffer.length + uvBuffer.length) {
                System.arraycopy(bytes, 0, yBuffer, 0, yBuffer.length);
                int uvIndex = yBuffer.length;
                System.arraycopy(bytes, uvIndex, uvBuffer, 0, uvBuffer.length);
                Log.i(TAG, "copyFrame end");
                return true;
            }
            return false;
        }

        public synchronized byte[] readYBuffer() {
            Log.i(TAG, "readYBuffer");
            return yBuffer;
        }

        public synchronized byte[] readUVBuffer() {
            Log.i(TAG, "readUVBuffer");
            return uvBuffer;
        }

        public int getPreviewWidth() {
            Log.i(TAG, "getPreviewWidth " + mWidth);
            return mWidth;
        }

        public int getPreviewHeight() {
            Log.i(TAG, "getPreviewHeight " + mHeight);
            return mHeight;
        }

        @Override
        public void onPreviewFrame(byte[] bytes, Camera camera) {
            if (copyFrame(bytes)) {
                // Tell Unity that a new frame is ready to be read.
                UnityMsgBridge.notifyGotFrame();
            }
        }

        public interface FrameListener {
            void onFrameAvailable();
        }

        // Creates an external OES texture with linear filtering and edge clamping.
        public static int createOESTextureID() {
            int[] texture = new int[1];
            GLES20.glGenTextures(texture.length, texture, 0);
            GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, texture[0]);
            GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_LINEAR);
            GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_LINEAR);
            GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_WRAP_S, GLES20.GL_CLAMP_TO_EDGE);
            GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES20.GL_TEXTURE_WRAP_T, GLES20.GL_CLAMP_TO_EDGE);
            return texture[0];
        }
    }
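The plane-splitting step performed by copyFrame can be tried outside Android. The following is a minimal, self-contained sketch under the assumption that the frame is tightly packed NV21; the class `Nv21Planes` and its `split` method are hypothetical and are not part of the plugin. It copies the first width * height bytes into a Y buffer and the remaining width * height / 2 bytes into a VU buffer, which is the shape of data the plugin exposes through readYBuffer and readUVBuffer.

```java
public final class Nv21Planes {
    public final byte[] y;   // luma plane, width * height bytes
    public final byte[] vu;  // interleaved V,U plane, width * height / 2 bytes

    private Nv21Planes(byte[] y, byte[] vu) {
        this.y = y;
        this.vu = vu;
    }

    /**
     * Splits a tightly packed NV21 frame into its two planes.
     * ASSUMPTION: no row padding, and width and height are both even.
     */
    public static Nv21Planes split(byte[] frame, int width, int height) {
        int ySize = width * height;
        int vuSize = ySize / 2;
        if (frame == null || frame.length < ySize + vuSize) {
            throw new IllegalArgumentException("frame is too small for " + width + "x" + height);
        }
        byte[] y = new byte[ySize];
        byte[] vu = new byte[vuSize];
        System.arraycopy(frame, 0, y, 0, ySize);        // Y plane comes first
        System.arraycopy(frame, ySize, vu, 0, vuSize);  // VU plane follows immediately
        return new Nv21Planes(y, vu);
    }

    public static void main(String[] args) {
        byte[] frame = new byte[4 * 2 * 3 / 2];
        for (int i = 0; i < frame.length; i++) {
            frame[i] = (byte) i; // fill with recognisable values
        }
        Nv21Planes planes = split(frame, 4, 2);
        System.out.println(planes.y.length + " luma bytes, " + planes.vu.length + " chroma bytes");
    }
}
```

Keeping the two planes in separate arrays lets each one be handed over as its own texture, so the shader can sample luma and chroma independently.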

There are few dependencies; a CameraUtil class opens the camera:

    package com.qiyi.unitycamerasdk;

    import android.app.Activity;
    import android.content.Context;
    import android.content.pm.PackageManager;
    import android.graphics.Point;
    import android.graphics.Rect;
    import android.graphics.SurfaceTexture;
    import android.hardware.Camera;
    import android.os.Build;
    import android.util.Log;
    import android.util.Size;
    import android.view.Surface;

    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.Collections;
    import java.util.Comparator;
    import java.util.List;

    /**
     * Created By Chengjunsen on 2018/8/23
     */
    public class CameraUtil {

        private static final String TAG = "qymv#CameraUtil";

        private static Camera mCamera = null;
        // Defaults to the front camera.
        private static int mCameraID = Camera.CameraInfo.CAMERA_FACING_FRONT;

        public static void openCamera() {
            mCamera = Camera.open(mCameraID);
            if (mCamera == null) {
                throw new RuntimeException("Unable to open camera");
            }
        }

        public static Camera getCamera() {
            return mCamera;
        }

        public static void releaseCamera() {
            if (mCamera != null) {
                mCamera.stopPreview();
                mCamera.release();
                mCamera = null;
            }
        }

        public static void setCameraId(int cameraId) {
            mCameraID = cameraId;
        }

        // Toggles between the front and back camera ids.
        public static void switchCameraId() {
            mCameraID = isBackCamera() ? Camera.CameraInfo.CAMERA_FACING_FRONT : Camera.CameraInfo.CAMERA_FACING_BACK;
        }

        public static boolean isBackCamera() {
            return mCameraID == Camera.CameraInfo.CAMERA_FACING_BACK;
        }

        // Routes the camera preview into the given SurfaceTexture.
        public static void setDisplay(SurfaceTexture surfaceTexture) {
            try {
                if (mCamera != null) {
                    mCamera.setPreviewTexture(surfaceTexture);
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static Camera.Size startPreview(Activity activity, int width, int height)