A First Look at Scrapy: Crawling Novels


I. Introduction

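The previous article covered the basics of the Scrapy framework. This one puts them into practice by crawling the free novels on 第九中文网 (book9.net).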

II. Building the Scrapy project

1. Create the project

```
C:\Users\LENOVO\PycharmProjects\fullstack\book9>scrapy startproject book9
```

2. Define the fields to scrape (items.py)

```python
import scrapy


class Book9Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    book_name = scrapy.Field()        # novel title
    chapter_name = scrapy.Field()     # chapter title
    chapter_content = scrapy.Field()  # chapter text
```

3. Write the spider (spiders/book.py)

Create a file named book.py in the spiders directory:
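The spider below post-processes raw page data in two ways. It turns the chapter hrefs from each table of contents into absolute URLs, and it merges the scattered text() nodes of a chapter body into one string. Both steps can be tried outside Scrapy using only the standard library. This is a minimal sketch. The example URLs are hypothetical, and the assumption that paragraphs are indented with &nbsp; (U+00A0) characters is a guess about the site's markup that the original does not confirm. Inside a spider, Scrapy's `response.urljoin(href)` performs the same resolution against the URL of the page being parsed.

```python
from typing import Iterable
from urllib.parse import urljoin


def absolute_url(page_url: str, href: str) -> str:
    """Resolve an href found on page_url into an absolute URL.

    Handles absolute hrefs, root-relative hrefs ("/...") and
    page-relative hrefs ("1.html"), unlike plain string concatenation.
    """
    return urljoin(page_url, href)


def clean_chapter_text(text_nodes: Iterable[str]) -> str:
    """Join the text() nodes of a chapter body into one string.

    Assumption: paragraphs are indented with non-breaking spaces (U+00A0).
    Those are removed, surrounding whitespace is stripped, empty nodes
    are dropped, and each remaining node becomes one line.
    """
    lines = (node.replace("\xa0", "").strip() for node in text_nodes)
    return "\n".join(line for line in lines if line)


if __name__ == "__main__":
    # Hypothetical table-of-contents URL; the real book9.net paths may differ.
    toc = "https://www.book9.net/book/123/"
    print(absolute_url(toc, "/book/123/1.html"))  # https://www.book9.net/book/123/1.html
    print(absolute_url(toc, "2.html"))            # https://www.book9.net/book/123/2.html
    print("https://www.book9.net" + "2.html")     # https://www.book9.net2.html (broken)
    print(clean_chapter_text(["\xa0\xa0\xa0\xa0第一段", "\r\n", "\xa0\xa0\xa0\xa0第二段"]))
```

As the third URL line shows, prefixing the domain by string concatenation only works when every href starts with `/`.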

```python
import scrapy
from scrapy.http import Request

from book9.items import Book9Item


class Book9Spider(scrapy.Spider):
    name = "book9"
    allowed_domains = ['book9.net']
    start_urls = ['https://www.book9.net/xuanhuanxiaoshuo/']

    # Collect the URL of every book on the category page
    def parse(self, response):
        book_urls = response.xpath('//div[@class="r"]/ul/li/span[@class="s2"]/a/@href').extract()
        for book_url in book_urls:
            # urljoin() leaves absolute URLs unchanged and resolves relative ones
            yield Request(response.urljoin(book_url), callback=self.parse_read)

    # Open each book's table of contents and follow every chapter link
    def parse_read(self, response):
        read_url = response.xpath('//div[@class="box_con"]/div/dl/dd/a/@href').extract()
        for i in read_url:
            # Resolve the relative chapter href against the current page URL
            read_url_path = response.urljoin(i)
            yield Request(read_url_path, callback=self.parse_content)

    # Extract the novel title, chapter title and chapter text
    def parse_content(self, response):
        # Novel title: the third link text in the con_top breadcrumb bar
        book_name = response.xpath('//div[@class="con_top"]/a/text()').extract()[2]
        # Chapter title
        chapter_name = response.xpath('//div[@class="bookname"]/h1/text()').extract_first()
        # Chapter text: join all text nodes, then remove space characters
        chapter_content_2 = response.xpath('//div[@class="box_con"]/div/text()').extract()
        chapter_content_1 = ''.join(chapter_content_2)
        chapter_content = chapter_content_1.replace(' ', '')

        item = Book9Item()
        item['book_name'] = book_name
        item['chapter_name'] = chapter_name
        item['chapter_content'] = chapter_content
        yield item
```

4. Process the scraped items (pipelines.py)

```python
import os


class Book9Pipeline:
    def process_item(self, item, spider):
        # Create one directory per novel
        file_path = os.path.join("D:\\Temp", item['book_name'])
        print(file_path)
        os.makedirs(file_path, exist_ok=True)

        # Write each chapter to its own file
        chapter_path = os.path.join(file_path, item['chapter_name'] + '.txt')
        print(chapter_path)
        with open(chapter_path, 'w', encoding='utf-8') as f:
            f.write(item['chapter_content'])
        return item
```
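The pipeline uses `book_name` and `chapter_name` directly as path components. Windows rejects file names that contain any of `< > : " / \ | ? *`, so a chapter title with a question mark, for example, would make `open()` fail with an `OSError`. Names that end in a space or a dot are also best avoided. Below is a minimal sketch of a sanitizing helper. `safe_filename` is a hypothetical name, not part of Scrapy or the original project.

```python
import re

# Characters Windows forbids in file names, plus ASCII control characters.
_ILLEGAL = re.compile(r'[<>:"/\\|?*\x00-\x1f]')


def safe_filename(name: str, replacement: str = "_") -> str:
    """Return a version of *name* usable as a Windows file name.

    Replaces forbidden characters, trims surrounding whitespace and
    trailing dots/spaces, and falls back to "untitled" if nothing is left.
    Reserved device names such as CON or NUL are NOT handled here.
    """
    cleaned = _ILLEGAL.sub(replacement, name).strip().rstrip(" .")
    return cleaned or "untitled"


if __name__ == "__main__":
    print(safe_filename("第1章 谁?"))   # 第1章 谁_
    print(safe_filename("a/b:c"))       # a_b_c
```

In `process_item`, the two call sites would become `os.path.join("D:\\Temp", safe_filename(item['book_name']))` and `safe_filename(item['chapter_name']) + '.txt'`.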

5. Configuration (settings.py)

```python
BOT_NAME = 'book9'

SPIDER_MODULES = ['book9.spiders']
NEWSPIDER_MODULE = 'book9.spiders'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Default request headers
DEFAULT_REQUEST_HEADERS = {
    'User-Agent': 'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'book9.pipelines.Book9Pipeline': 300,
}
```

6. Run the spider
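The settings above disable robots.txt checking and leave Scrapy's download delay at its default of 0. Every chapter page of every book is therefore requested as fast as the concurrency limits allow. Before running the crawl, it may be worth adding a few throttling settings to settings.py. These are standard Scrapy settings, but the values below are illustrative assumptions, not numbers from the original project:

```python
# Throttling settings for settings.py (illustrative values, not from the original project)

# Minimum delay in seconds between requests to the same site; with
# AutoThrottle enabled, the adjusted delay is not allowed to drop below this
DOWNLOAD_DELAY = 1

# At most 2 concurrent requests per domain (Scrapy's default is 8)
CONCURRENT_REQUESTS_PER_DOMAIN = 2

# Let AutoThrottle adapt the delay to the server's response times
AUTOTHROTTLE_ENABLED = True
```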

```
C:\Users\LENOVO\PycharmProjects\fullstack\book9>scrapy crawl book9 --nolog
```

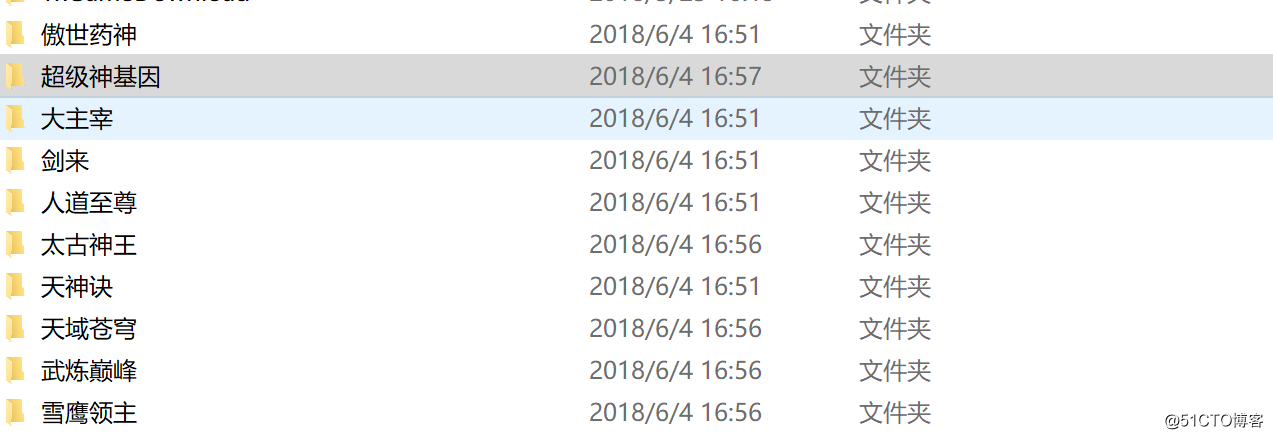

7. Results
