Analysis of the Top 30 Lipsticks on JD.com

Posted by 飞基


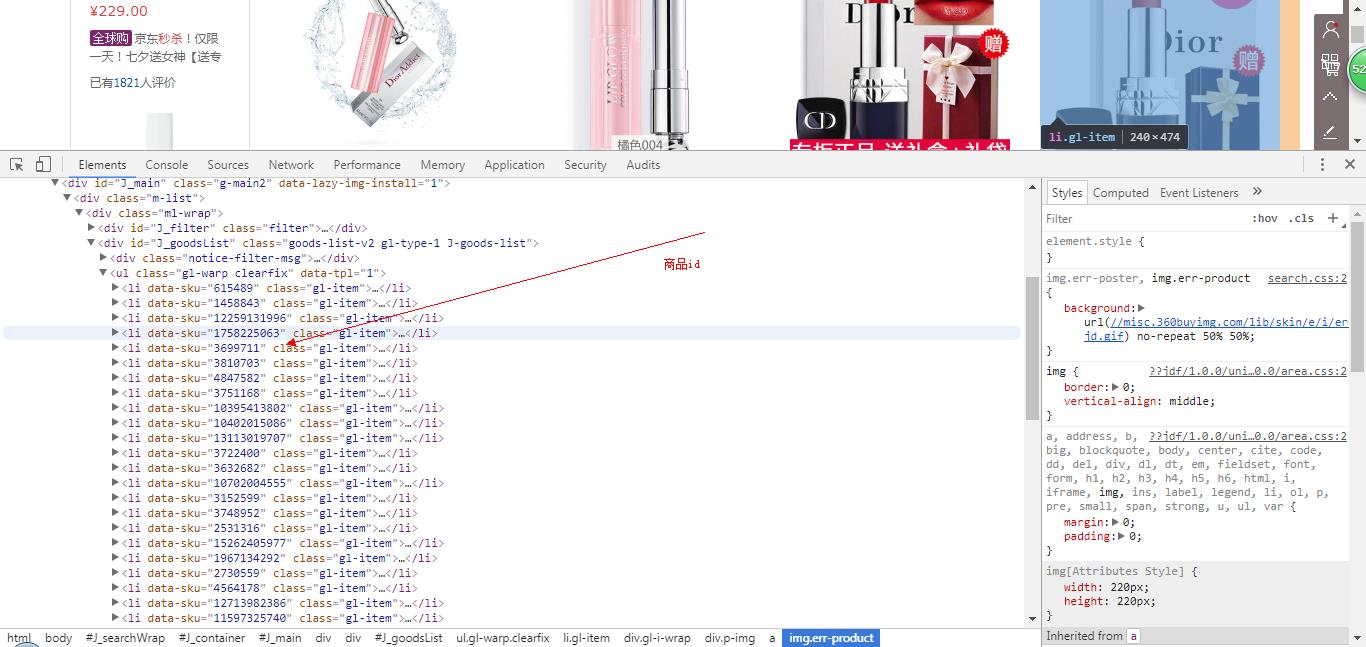

1. Scraping product IDs

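Inspecting the listing page source shows that every product ID sits in a tag with class="gl-item", in its data-sku attribute. The tags can be located with the bs4 select method and the ID read from each one.

Inspecting the product pages then shows that their URLs differ only in the ID number, so each URL can be constructed as https://item.jd.com/<id>.html.

The collected IDs and the constructed URLs are stored in lists, as in the following source: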

```python
import urllib.request

import bs4

# Product IDs and product-page URLs, filled in by get_product_url()
pid = []
links = []


def get_product_url(url):
    req = urllib.request.Request(url)
    req.add_header("User-Agent",
                   'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                   '(KHTML, like Gecko) Chrome/60.0.3112.101 Safari/537.36')
    content = urllib.request.urlopen(req).read()
    soup = bs4.BeautifulSoup(content, "lxml")
    # Every product entry on the listing page carries class="gl-item"
    for item in soup.select('.gl-item'):
        sku = item.get('data-sku')
        # Build the product page link
        links.append("https://item.jd.com/" + str(sku) + ".html")
        # Record the product ID
        pid.append(sku)
```
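The extraction step can be tried offline without a network request. The following is a minimal sketch that swaps bs4 for the standard-library `html.parser`, so it is not the author's method; `SkuParser`, `extract_product_urls`, and the sample markup are hypothetical names and made-up data used only for illustration. It also skips duplicate IDs, which the original listing does not do.

```python
from html.parser import HTMLParser


class SkuParser(HTMLParser):
    """Collect data-sku from every tag whose class list contains gl-item."""

    def __init__(self):
        super().__init__()
        self.skus = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        classes = (attr.get("class") or "").split()
        sku = attr.get("data-sku")
        # Keep the first occurrence of each ID only
        if "gl-item" in classes and sku and sku not in self.skus:
            self.skus.append(sku)


def extract_product_urls(html):
    """Return (ids, urls) for the product entries found in a listing page."""
    parser = SkuParser()
    parser.feed(html)
    urls = ["https://item.jd.com/{}.html".format(sku) for sku in parser.skus]
    return parser.skus, urls


# Made-up listing fragment for illustration, not real JD markup
sample_html = """
<ul>
  <li class="gl-item" data-sku="1001"><a href="#">A</a></li>
  <li class="gl-item gl-item-presell" data-sku="1002"><a href="#">B</a></li>
  <li class="gl-item" data-sku="1001"><a href="#">A again</a></li>
  <li class="other" data-sku="9999"></li>
</ul>
"""
ids, urls = extract_product_urls(sample_html)
print(ids)
print(urls)
```

Keeping the parsing separate from the HTTP request makes it easy to check the selector logic against a saved copy of the page before crawling.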

2. Fetching product information

The basic product information (product name, shop name, price, and so on) is read from the product page:

```python
# Excerpt from the per-product loop; i indexes the links and pid lists
product_url = links[i]
req = urllib.request.Request(product_url)
req.add_header("User-Agent",
               'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:56.0) Gecko/20100101 Firefox/56.0')
content = urllib.request.urlopen(req).read()
# Parse the product page source
soup = bs4.BeautifulSoup(content, "lxml")
# Product name
sku_name = soup.select_one('.sku-name').getText().strip()
# Shop name; fall back to the alternate clstag (zcdpmc_oversea) when the regular tag is missing
try:
    shop_name = soup.find(clstag="shangpin|keycount|product|dianpuname1").get('title')
except AttributeError:
    shop_name = soup.find(clstag="shangpin|keycount|product|zcdpmc_oversea").get('title')
# Product ID, padded to 20 characters
sku_id = str(pid[i]).ljust(20)
# Product price (requested separately; see the full get_product_info listing)
```

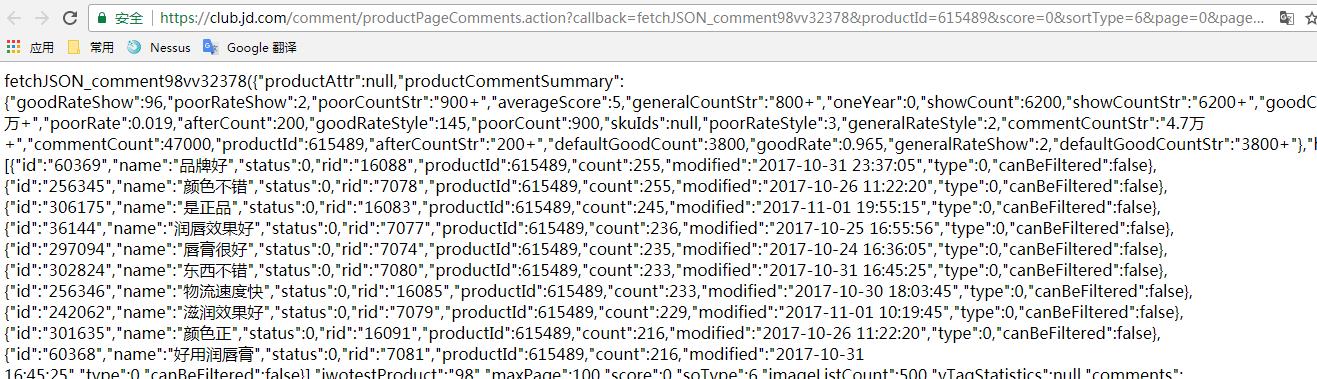

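The hot comment tags, the positive-review rate, and the comments themselves are obtained by fetching the comment JSON page.

Source for fetching the hot comment tags: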

```python
import json
import re
import urllib.request


def get_product_comment(product_id):
    comment_url = 'https://club.jd.com/comment/productPageComments.action?' \
                  'callback=fetchJSON_comment98vv16496&' \
                  'productId={}&' \
                  'score=0&' \
                  'sortType=6&' \
                  'page=0&' \
                  'pageSize=10' \
                  '&isShadowSku=0'.format(str(product_id))
    response = urllib.request.urlopen(comment_url).read().decode('gbk', 'ignore')
    # Strip the JSONP wrapper fetchJSON_comment98vv16496(...); to get plain JSON
    response = re.search(r'(?<=fetchJSON_comment98vv16496\().*(?=\);)', response).group(0)
    response_json = json.loads(response)
    # Collect the hot comment tags as "name(count)"
    hot_comments = []
    for h_comment in response_json['hotCommentTagStatistics']:
        hot = str(h_comment['name'])
        count = str(h_comment['count'])
        hot_comments.append(hot + '(' + count + ')')
    return ','.join(hot_comments)
```
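The regular expression in the listing hardcodes the callback name. As a minimal sketch of the same JSONP-unwrapping idea, the helper below accepts any callback name; `unwrap_jsonp` is a hypothetical helper, and the sample string is made up (it reuses only the field names that appear in the article's code).

```python
import json
import re

# callbackName( ...payload... ) with an optional trailing semicolon
JSONP_PATTERN = re.compile(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", re.DOTALL)


def unwrap_jsonp(text):
    """Return the parsed JSON payload from a JSONP response body."""
    match = JSONP_PATTERN.match(text)
    if match is None:
        raise ValueError("response is not a JSONP payload")
    return json.loads(match.group(1))


# Made-up response body for illustration
sample = 'fetchJSON_comment98vv16496({"hotCommentTagStatistics": [{"name": "颜色正", "count": 12}]});'
data = unwrap_jsonp(sample)
print(data["hotCommentTagStatistics"][0]["name"], data["hotCommentTagStatistics"][0]["count"])
```

Raising `ValueError` on a non-matching body gives a clearer failure than the `AttributeError` that `re.search(...).group(0)` produces when the server returns an unexpected page.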

Source for fetching the positive-review rate:

```python
import json
import re

import requests


def get_good_percent(product_id):
    comment_url = 'https://club.jd.com/comment/productPageComments.action?' \
                  'callback=fetchJSON_comment98vv16496&' \
                  'productId={}&' \
                  'score=0&' \
                  'sortType=6&' \
                  'page=0&' \
                  'pageSize=10' \
                  '&isShadowSku=0'.format(str(product_id))
    response = requests.get(comment_url).text
    # Strip the JSONP wrapper to get plain JSON
    response = re.search(r'(?<=fetchJSON_comment98vv16496\().*(?=\);)', response).group(0)
    response_json = json.loads(response)
    # Read the positive-review rate and format it as a percentage
    percent = response_json['productCommentSummary']['goodRateShow']
    percent = str(percent) + '%'
    return percent
```
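Both functions above request the same URL and then read different fields, so the two values could be taken from one parsed response. The sketch below shows that idea on an already-parsed payload; `summarize_comment_payload` is a hypothetical helper, and the sample dictionary is made-up data that uses only the field names seen in the article's code.

```python
def summarize_comment_payload(payload):
    """Return (hot_tags, good_percent) from one parsed comment response."""
    tags = payload.get("hotCommentTagStatistics") or []
    hot_tags = ",".join("{}({})".format(t["name"], t["count"]) for t in tags)
    summary = payload.get("productCommentSummary") or {}
    rate = summary.get("goodRateShow")
    # Leave the rate empty when the field is absent instead of raising
    good_percent = "{}%".format(rate) if rate is not None else ""
    return hot_tags, good_percent


# Made-up payload for illustration
sample_payload = {
    "hotCommentTagStatistics": [
        {"name": "颜色正", "count": 12},
        {"name": "包装精美", "count": 7},
    ],
    "productCommentSummary": {"goodRateShow": 98},
}
hot_tags, good_percent = summarize_comment_payload(sample_payload)
print(hot_tags, good_percent)
```

Parsing once per product would halve the number of requests to the comment endpoint for the product table.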

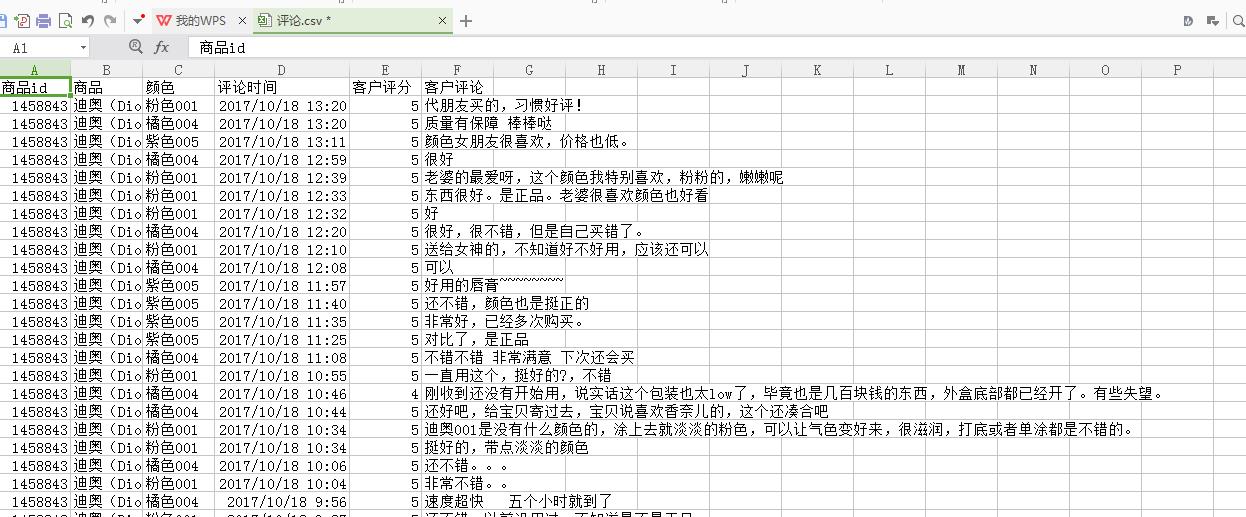

Source for fetching the comments:

```python
import csv
import json
import os
import re
import urllib.request


def get_comment(product_id, page):
    # path (output directory) and word (list of comment texts) are module-level globals
    comment_url = 'https://club.jd.com/comment/productPageComments.action?' \
                  'callback=fetchJSON_comment98vv16496&' \
                  'productId={}&' \
                  'score=0&' \
                  'sortType=6&' \
                  'page={}&' \
                  'pageSize=10' \
                  '&isShadowSku=0'.format(str(product_id), str(page))
    response = urllib.request.urlopen(comment_url).read().decode('gbk', 'ignore')
    response = re.search(r'(?<=fetchJSON_comment98vv16496\().*(?=\);)', response).group(0)
    response_json = json.loads(response)
    # Append to 评论.csv (the comments table)
    with open(os.path.join(path, '评论.csv'), 'a', newline='',
              encoding='utf-8', errors='ignore') as comment_file:
        write = csv.writer(comment_file)
        for comment in response_json['comments']:
            creation_time = str(comment['creationTime'])    # comment time
            product_color = str(comment['productColor'])    # product color
            reference_name = str(comment['referenceName'])  # product name
            score = str(comment['score'])                   # customer rating
            content = str(comment['content']).strip()       # comment text
            # Keep the text for the word cloud
            word.append(content)
            write.writerow([product_id, reference_name, product_color, creation_time, score, content])
```
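The same comment URL is assembled by string concatenation in all three functions. A minimal sketch of building it in one place with `urllib.parse.urlencode` follows; `build_comment_url` and `COMMENT_ENDPOINT` are hypothetical names, while the endpoint and parameter values are copied from the listings above.

```python
from urllib.parse import urlencode

COMMENT_ENDPOINT = "https://club.jd.com/comment/productPageComments.action"


def build_comment_url(product_id, page=0, page_size=10):
    """Build the comment request URL with the parameters used in the article."""
    params = {
        "callback": "fetchJSON_comment98vv16496",
        "productId": product_id,
        "score": 0,
        "sortType": 6,
        "page": page,
        "pageSize": page_size,
        "isShadowSku": 0,
    }
    # urlencode keeps the dictionary order and escapes values where needed
    return COMMENT_ENDPOINT + "?" + urlencode(params)


url = build_comment_url("1001", page=2)
print(url)
```

With a single builder, a change to the query parameters only has to be made once.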

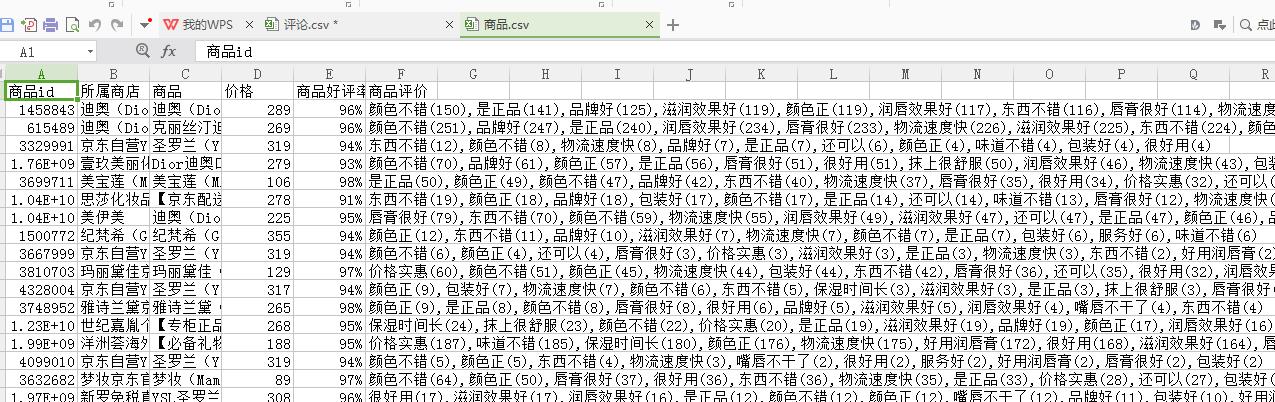

Full source for collecting the product information:

```python
import csv
import json
import os
import urllib.request

import bs4
import requests
from wordcloud import WordCloud


def get_product_info():
    global word
    # Create 评论.csv (comments) with its header row
    with open(os.path.join(path, '评论.csv'), 'w', newline='', encoding='utf-8') as comment_file:
        csv.writer(comment_file).writerow(['商品id', '商品', '颜色', '评论时间', '客户评分', '客户评论'])
    # Create 商品.csv (products) with its header row
    with open(os.path.join(path, '商品.csv'), 'w', newline='', encoding='utf-8') as product_file:
        csv.writer(product_file).writerow(['商品id', '所属商店', '商品', '价格', '商品好评率', '商品评价'])

    for i in range(len(pid)):
        print('[*] Collecting data...')
        product_url = links[i]
        req = urllib.request.Request(product_url)
        req.add_header("User-Agent",
                       'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:56.0) Gecko/20100101 Firefox/56.0')
        content = urllib.request.urlopen(req).read()
        # Parse the product page source
        soup = bs4.BeautifulSoup(content, "lxml")
        # Product name
        sku_name = soup.select_one('.sku-name').getText().strip()
        # Shop name, with a fallback to the alternate clstag
        try:
            shop_name = soup.find(clstag="shangpin|keycount|product|dianpuname1").get('title')
        except AttributeError:
            shop_name = soup.find(clstag="shangpin|keycount|product|zcdpmc_oversea").get('title')
        # Product ID, padded to 20 characters
        sku_id = str(pid[i]).ljust(20)
        # Product price, served by a separate JSON endpoint
        price_url = 'https://p.3.cn/prices/mgets?pduid=1580197051&skuIds=J_{}'.format(pid[i])
        response = requests.get(price_url).content
        price = json.loads(response)[0]['p']
        # Append the product row to 商品.csv
        with open(os.path.join(path, '商品.csv'), 'a', newline='',
                  encoding='utf-8', errors='ignore') as product_file:
            csv.writer(product_file).writerow(
                [sku_id, shop_name, sku_name, price, get_good_percent(pid[i]), get_product_comment(pid[i])])
        # get_comment_count is not shown in this article
        pages = int(get_comment_count(pid[i]))
        word = []
        try:
            for j in range(pages):
                get_comment(pid[i], j)
        except Exception as e:
            print("[!!!] Failed to load comments for product {}!".format(pid[i]))
            print("[!!!] Error: {}".format(e))

        print('[*] Product {} ({}) collected!'.format(i + 1, pid[i]))
        # Generate the word cloud from this product's comments
        text = " ".join(word)
        my_wordcloud = WordCloud(font_path=r'C:\Windows\Fonts\STZHONGS.TTF',
                                 background_color='white').generate(text)
        my_wordcloud.to_file("{}.jpg".format(pid[i]))
```
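The listing calls `get_comment_count`, which the article never shows, and uses its result as the number of comment pages to request. One plausible reading is that a total comment count is converted into a page count. The sketch below covers only that arithmetic under this assumption; `pages_for` and its `max_pages` cap are hypothetical, and where the total count comes from is not stated in the source.

```python
import math


def pages_for(comment_count, page_size=10, max_pages=None):
    """Number of pages needed to cover comment_count comments."""
    if comment_count <= 0:
        return 0
    pages = math.ceil(comment_count / page_size)
    # Optional cap, useful if the crawl should stop early
    if max_pages is not None:
        pages = min(pages, max_pages)
    return pages


print(pages_for(25))                   # 25 comments at 10 per page
print(pages_for(1000, max_pages=50))   # capped crawl
```

The default `page_size=10` matches the `pageSize=10` parameter in the comment URL above.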

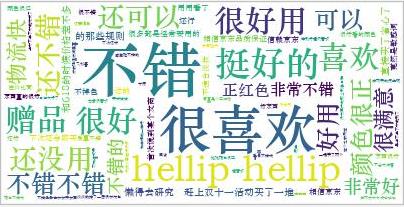

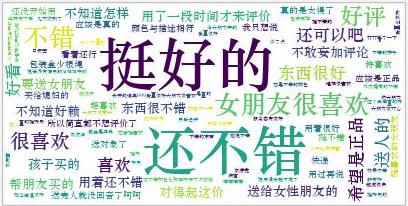

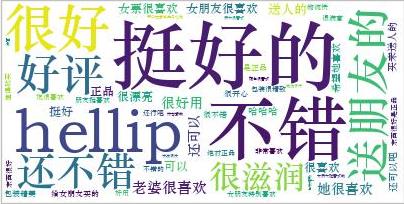

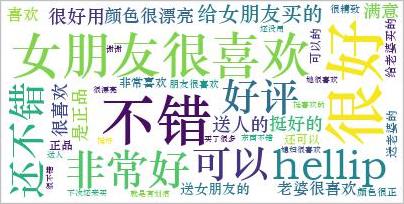

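The product information and the comments are written to the CSV tables, and a comment word cloud is generated for each product, as the screenshots above show.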

3. Summary

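The problem I ran into most often while crawling was encoding. The page content returned by a request is of type bytes and has to be decoded with decode("gbk"). Encoding errors still occurred after that, until I found some articles explaining that passing "ignore" as the second argument to decode resolves them. Because the crawl is large, some data is lost along the way, but the dataset is big enough that this does not affect the product analysis.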
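The effect of the "ignore" argument can be seen on a small byte string. This is a minimal sketch with made-up bytes, not data from the crawl: it injects one invalid byte into GBK-encoded text and compares the strict, "ignore", and "replace" error handlers.

```python
good = "口红".encode("gbk")
# Inject one byte that is not valid GBK between the two characters
damaged = good[:2] + b"\xff" + good[2:]

try:
    damaged.decode("gbk")
    strict_failed = False
except UnicodeDecodeError:
    strict_failed = True

ignored = damaged.decode("gbk", "ignore")    # drops the bad byte silently
replaced = damaged.decode("gbk", "replace")  # marks the bad byte with U+FFFD
print(strict_failed, ignored, replaced)
```

Since "ignore" discards undecodable bytes without any trace, it may itself be one source of lost data; "replace" keeps a visible marker that can be counted afterwards.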
