Implementing a Convolutional Neural Network Model with TensorFlow


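Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers how to implement a convolutional neural network model with TensorFlow, and we hope it is a useful reference.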

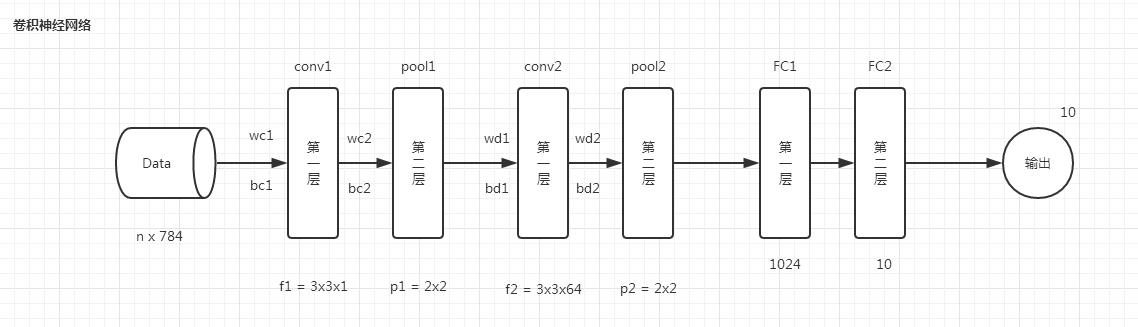

First, let's look at the structure of a convolutional neural network model, as shown in the figure:

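A convolutional neural network (CNN) is built from an input layer, convolutional layers, activation functions, pooling layers, and fully connected layers, i.e. INPUT-CONV-RELU-POOL-FC.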
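The CONV and RELU stages can be illustrated without TensorFlow. The following is a minimal pure-Python sketch for a single channel with stride 1 and no padding (unlike the `'SAME'` padding used in the code later). The image and the 2x2 kernel are hypothetical toy values, and `conv2d_valid` and `relu` are illustrative helper names, not TensorFlow functions. Like `tf.nn.conv2d`, the sketch computes a cross-correlation, meaning the kernel is not flipped.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Single-channel 2-D convolution, stride 1, no padding ('VALID').
    Like tf.nn.conv2d, this is a cross-correlation: the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (i, j), plus bias
            s = bias
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


def relu(fmap):
    """Element-wise ReLU activation: max(0, v)."""
    return [[max(0.0, v) for v in row] for row in fmap]


# Hypothetical 4x4 grayscale image and 2x2 kernel
image = [
    [1, 2, 0, 1],
    [0, 1, 3, 1],
    [2, 1, 0, 0],
    [1, 0, 1, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]

feature_map = conv2d_valid(image, kernel)  # CONV: 4x4 input -> 3x3 feature map
activated = relu(feature_map)              # RELU: negative responses set to 0
print(feature_map)
print(activated)
```

Each output value here equals `image[i][j] - image[i+1][j+1]`, so the kernel responds to diagonal intensity changes; ReLU then keeps only the positive responses.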

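Pooling layer: a layer that downsamples each feature map to reduce the amount of computation and the dimensionality of the data. For example, 2x2 max pooling with stride 2 keeps only the largest value in each 2x2 window, halving the height and width.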
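As a minimal illustration of the pooling step, here is a pure-Python sketch of 2x2 max pooling with stride 2 on one channel. This is the same window and stride as the `ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1]` settings in the code. It assumes an even height and width so no padding is needed. The feature-map values are made up, and `max_pool_2x2` is an illustrative name, not a TensorFlow function.

```python
def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 on a single-channel feature map.
    Assumes even height and width, so no padding is needed."""
    h, w = len(fmap), len(fmap[0])
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, w, 2)]
        for i in range(0, h, 2)
    ]


# Hypothetical 4x4 feature map
fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 1, 6],
]
pooled = max_pool_2x2(fmap)
print(pooled)
```

The 4x4 map (16 values) becomes 2x2 (4 values), so the next layer has four times fewer inputs per channel to process.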

The code is as follows (written against the TensorFlow 1.x API):

```python
# Requires TensorFlow 1.x (tf.placeholder, tf.Session and the bundled MNIST helper)
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("data/", one_hot=True)  # download directory is an assumption

n_input = 784   # 28*28 grayscale image, flattened
n_output = 10   # 10-class classification
weights = {
    # Conv kernel: tf.Variable(tf.random_normal([kernel H, kernel W, input depth, number of output feature maps], stddev=0.1))
    'wc1': tf.Variable(tf.random_normal([3, 3, 1, 64], stddev=0.1)),
    'wc2': tf.Variable(tf.random_normal([3, 3, 64, 128], stddev=0.1)),
    # Fully connected: tf.Variable(tf.random_normal([feature map H * W * depth, number of output units], stddev=0.1))
    'wd1': tf.Variable(tf.random_normal([7*7*128, 1024], stddev=0.1)),
    'wd2': tf.Variable(tf.random_normal([1024, n_output], stddev=0.1))
}
biases = {
    # One bias per output feature map (conv) or output unit (fully connected)
    'bc1': tf.Variable(tf.random_normal([64], stddev=0.1)),
    'bc2': tf.Variable(tf.random_normal([128], stddev=0.1)),
    'bd1': tf.Variable(tf.random_normal([1024], stddev=0.1)),
    'bd2': tf.Variable(tf.random_normal([n_output], stddev=0.1))
}

# Convolutional neural network
def conv_basic(_input, _w, _b, _keepratio):
    # Reshape the input into a 4-D [n, h, w, c] tensor: [batch size, H, W, depth]
    _input_r = tf.reshape(_input, shape=[-1, 28, 28, 1])
    # Conv layer 1: strides are per dimension [batch, H, W, depth]; 'SAME' padding is recommended
    _conv1 = tf.nn.conv2d(_input_r, _w['wc1'], strides=[1, 1, 1, 1], padding='SAME')
    # Add the bias, then apply the ReLU nonlinearity
    _conv1 = tf.nn.relu(tf.nn.bias_add(_conv1, _b['bc1']))
    # Pooling layer 1: ksize is the pooling window, per dimension [batch, H, W, depth]
    _pool1 = tf.nn.max_pool(_conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    # Dropout: randomly drop units so that not every neuron takes part in each training step
    # tf.nn.dropout(input, keep probability)
    _pool_dr1 = tf.nn.dropout(_pool1, _keepratio)
    # Conv layer 2 + pooling layer 2
    _conv2 = tf.nn.conv2d(_pool_dr1, _w['wc2'], strides=[1, 1, 1, 1], padding='SAME')
    _conv2 = tf.nn.relu(tf.nn.bias_add(_conv2, _b['bc2']))
    _pool2 = tf.nn.max_pool(_conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    _pool_dr2 = tf.nn.dropout(_pool2, _keepratio)
    # Fully connected layers: flatten the 7x7x128 feature maps into vectors
    _dense1 = tf.reshape(_pool_dr2, [-1, _w['wd1'].get_shape().as_list()[0]])
    # Fully connected layer 1
    _fc1 = tf.nn.relu(tf.add(tf.matmul(_dense1, _w['wd1']), _b['bd1']))
    _fc_dr1 = tf.nn.dropout(_fc1, _keepratio)
    # Fully connected layer 2 (output logits)
    _out = tf.add(tf.matmul(_fc_dr1, _w['wd2']), _b['bd2'])
    # Return every intermediate tensor
    out = {
        'input_r': _input_r, 'conv1': _conv1, 'pool1': _pool1, 'pool1_dr1': _pool_dr1,
        'conv2': _conv2, 'pool2': _pool2, 'pool_dr2': _pool_dr2, 'dense1': _dense1,
        'fc1': _fc1, 'fc_dr1': _fc_dr1, 'out': _out
    }
    return out
print("CNN READY")

# Loss function and optimizer (see the previous article for details)
learning_rate = 0.001
x = tf.placeholder("float", [None, n_input])
y = tf.placeholder("float", [None, n_output])
keepratio = tf.placeholder("float")
pred = conv_basic(x, weights, biases, keepratio)['out']
cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
optm = tf.train.AdamOptimizer(learning_rate).minimize(cost)  # Adam optimizer
accr = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1)), tf.float32))
init = tf.global_variables_initializer()
print("Network Ready!")

# Training (see the previous article for details)
training_epochs = 5
batch_size = 16
display_step = 1
ntest = 1000  # assumption: evaluate on the first 1000 test images to limit memory use
testimgs = mnist.test.images[:ntest]
testlabels = mnist.test.labels[:ntest]
sess = tf.Session()
sess.run(init)
print("Start optimization")
for epoch in range(training_epochs):
    avg_cost = 0.
    # total_batch = int(mnist.train.num_examples/batch_size)
    total_batch = 100
    # Loop over all batches
    for i in range(total_batch):
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        # Fit training using batch data, keeping 70% of the units
        sess.run(optm, feed_dict={x: batch_xs, y: batch_ys, keepratio: 0.7})
        # Compute average loss with dropout disabled
        avg_cost += sess.run(cost, feed_dict={x: batch_xs, y: batch_ys, keepratio: 1.})/total_batch
    # Display logs per epoch step (keepratio = 1.0 disables dropout for evaluation)
    if epoch % display_step == 0:
        print("Epoch: %03d/%03d cost: %.9f" % (epoch, training_epochs, avg_cost))
        train_acc = sess.run(accr, feed_dict={x: batch_xs, y: batch_ys, keepratio: 1.})
        print(" Training accuracy: %.3f" % (train_acc))
        test_acc = sess.run(accr, feed_dict={x: testimgs, y: testlabels, keepratio: 1.})
        print(" Test accuracy: %.3f" % (test_acc))
print("Optimization Finished.")
```
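The first fully connected weight matrix `wd1` has 7\*7\*128 rows because of how the `'SAME'`-padded layers change the feature-map size. With `padding='SAME'`, TensorFlow's output size along each spatial axis is ceil(input size / stride). The stride-1 convolutions therefore keep 28x28, while each stride-2 pooling halves the size: 28 → 14 → 7. The sketch below traces this in plain Python. `same_out` and `trace_shapes` are hypothetical helper names, not TensorFlow functions, and the layer names mirror the keys returned by `conv_basic`.

```python
import math


def same_out(size, stride):
    """Output length along one spatial axis for padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)


def trace_shapes(h=28, w=28, conv_depths=(64, 128)):
    """Follow [H, W, depth] through each conv (stride 1) + 2x2 max-pool (stride 2) block."""
    shapes = [("input_r", (h, w, 1))]
    for n, depth in enumerate(conv_depths, start=1):
        h, w = same_out(h, 1), same_out(w, 1)  # convolution, stride 1: size unchanged
        shapes.append(("conv%d" % n, (h, w, depth)))
        h, w = same_out(h, 2), same_out(w, 2)  # pooling, stride 2: size halved, rounded up
        shapes.append(("pool%d" % n, (h, w, depth)))
    return shapes


shapes = trace_shapes()
for name, shape in shapes:
    print(name, shape)

# Flattened length of the last pooled feature maps = number of rows of wd1
ph, pw, pd = shapes[-1][1]
flat = ph * pw * pd
print("dense1 length:", flat)
```

Because `'SAME'` rounds up, a third 2x2 pooling stage on the 7x7 maps would produce 4x4, not 3x3.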
