Setting Up a ZooKeeper and Kafka Cluster

Posted by Albin


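I: Environment preparation

- Physical host: Windows 7, 64-bit

- VMware with three CentOS 6.8 virtual machines, IPs 192.168.17.[129-131]

- JDK 1.7 installed and configured

- Passwordless SSH login configured between the virtual machines

- clustershell installed, for running the same operation on every cluster node at once

1 : How to set up and use passwordless login and clustershell

1.1 : Configure passwordless login (once this is done, any node can operate on another by giving only its IP or hostname, with no password prompt)

1.1.2 : Generate the key pair (public and private key files)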

ssh-keygen -t rsa

1.1.3 : Check the generated key files

ls /root/.ssh

1.1.4 : Copy the public key to every machine

ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.17.129

ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.17.130

ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.17.131

1.1.5 : Test that the nodes can reach one another

Log in from one node to another and run a command, for example:

ssh root@192.168.17.130

hostname
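The manual check above can be repeated for every node with a small loop. This is a minimal sketch, assuming the three node IPs from this post and the root account; `cluster_nodes` and `check_passwordless` are hypothetical helper names, not part of any tool. `BatchMode=yes` makes ssh fail instead of prompting for a password, so a node whose key was not copied is reported rather than left hanging.

```shell
# Print the node IPs used in this post, one per line.
cluster_nodes() {
    for last in 129 130 131; do
        echo "192.168.17.$last"
    done
}

# Try a non-interactive login on every node and report the result.
# BatchMode=yes disables password prompts; ConnectTimeout bounds the wait.
check_passwordless() {
    for node in $(cluster_nodes); do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$node" hostname >/dev/null 2>&1; then
            echo "$node ok"
        else
            echo "$node FAILED"
        fi
    done
}
```

Running `check_passwordless` on each of the three machines in turn covers every direction of the connection.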

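1.2 : Installing clustershell

A note: I installed the CentOS 6.6 minimal release (no GUI). Running yum install clustershell on it reports "no package", because the default yum repositories do not carry clustershell, so I added the EPEL repository through epel-release.

Install command: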

sudo yum install epel-release

After that, yum install clustershell installs the package from EPEL.

1.2.2 : Configure cluster groups

vim /etc/clustershell/groups

Add a line of the form "group name: server IPs or hostnames":

kafka:192.168.17.129 192.168.17.130 192.168.17.131
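If the groups file is maintained by script rather than by hand, the same line can be added idempotently. A minimal sketch follows; `add_group` is a hypothetical helper, and the file path is passed in so it can be tried on a scratch file first.

```shell
# Append "name: nodes" to a clustershell groups file unless the group exists.
# $1 = groups file, $2 = group name, remaining args = node IPs or hostnames.
add_group() {
    file=$1; name=$2; shift 2
    if grep -q "^$name:" "$file" 2>/dev/null; then
        echo "group $name already defined in $file"
    else
        echo "$name: $*" >> "$file"
    fi
}
```

For this cluster the call would be `add_group /etc/clustershell/groups kafka 192.168.17.129 192.168.17.130 192.168.17.131`. Afterwards, `clush -g kafka hostname` should print one line per node if the group and the passwordless login are both working.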

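II: Downloading ZooKeeper and Kafka

This post uses ZooKeeper 3.4.8 and Kafka 0.10.1.0 (the kafka_2.11-0.10.1.0 build).

1 : First, download both archives from their official websites.

Put the archives in a directory of your choice; I put them in /opt/kafka.

Then use clush to copy them to the other nodes: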

clush -g kafka -c /opt/kafka

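2 : Use clush to extract the ZooKeeper and Kafka archives on all nodes

clush -g kafka tar zxvf /opt/kafka/zookeeper-3.4.8.tar.gz -C /opt/kafka

clush -g kafka tar zxvf /opt/kafka/kafka_2.11-0.10.1.0.tgz -C /opt/kafka

3 : Copy zoo_sample.cfg to zoo.cfg (the default ZooKeeper configuration file)

Then edit zoo.cfg: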

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/tmp/zookeeper

# the port at which the clients will connect

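## default ZooKeeper client port (kept on its own line: a trailing comment would become part of the value)

clientPort=2181

## node IPs and ports (2888: quorum connections, 3888: leader election)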

server.1=192.168.17.129:2888:3888

server.2=192.168.17.130:2888:3888

server.3=192.168.17.131:2888:3888

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1
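The server.N entries in the listing follow a fixed pattern, so they can be generated from the list of node IPs instead of typed by hand. A minimal sketch, where `zk_server_lines` is a hypothetical helper and the ports are the 2888 and 3888 used above:

```shell
# Print one server.N line per IP given, numbering from 1.
# 2888 is the quorum (follower-to-leader) port, 3888 the leader-election port.
zk_server_lines() {
    n=1
    for ip in "$@"; do
        echo "server.$n=$ip:2888:3888"
        n=$((n + 1))
    done
}
```

Calling `zk_server_lines 192.168.17.129 192.168.17.130 192.168.17.131` prints the three entries used in this post. The N in each entry is the id that the matching node must later store in its myid file.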

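4 : Create /tmp/zookeeper on each node to store ZooKeeper's data

mkdir /tmp/zookeeper

5 : In /tmp/zookeeper on each node, create a file named myid whose content is that node's id (1, 2, or 3), matching its server.N line in zoo.cfg

echo "1" > /tmp/zookeeper/myid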
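Because the id has to differ on every node, it is easy to write the wrong number when repeating the command by hand. One way to avoid that is to look the id up in zoo.cfg from the node's own address. This is a sketch under that assumption; `myid_for_ip` is a hypothetical helper, not a ZooKeeper tool.

```shell
# Print the N of the "server.N=<host>:..." line whose host equals $2.
# $1 = path to zoo.cfg, $2 = this node's IP or hostname as written in zoo.cfg.
myid_for_ip() {
    awk -F= -v host="$2" '
        /^server\.[0-9]+=/ {
            split($2, parts, ":")          # parts[1] is the host part
            if (parts[1] == host) {
                sub(/^server\./, "", $1)   # keep only the number N
                print $1
                exit
            }
        }' "$1"
}
```

On 192.168.17.130, for example, `myid_for_ip /opt/kafka/zookeeper/conf/zoo.cfg 192.168.17.130 > /tmp/zookeeper/myid` would write the id from the server.2 line. The function prints nothing when the address is not listed, so it is worth checking that myid is not empty afterwards.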

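Check the firewall status on all nodes, then stop it so that the nodes can reach one another's ports:

clush -g kafka "service iptables status"

clush -g kafka "service iptables stop"

Start ZooKeeper on all nodes (the path assumes the extracted zookeeper-3.4.8 directory is available as /opt/kafka/zookeeper, for example through a rename or a symlink):

clush -g kafka /opt/kafka/zookeeper/bin/zkServer.sh start /opt/kafka/zookeeper/conf/zoo.cfg

Confirm that every node is listening on the client port:

clush -g kafka lsof -i:2181

Connect with the command-line client, then create a test znode at its prompt:

bin/zkCli.sh -server 192.168.17.130:2181

create /test hello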
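A listening port does not by itself show that the ensemble formed a quorum. ZooKeeper 3.4.x answers the four-letter command `stat` on the client port, and the reply contains a `Mode:` line with the server's role. The sketch below reads that line; it assumes `nc` (netcat) is installed, which may not be the case on a minimal CentOS install, and `zk_mode` and `zk_role_of` are hypothetical helper names.

```shell
# Extract the role ("leader", "follower" or "standalone") from the text
# printed by the ZooKeeper "stat" four-letter command, read on stdin.
zk_mode() {
    sed -n 's/^Mode: *//p'
}

# Ask one server for its role. Assumes nc (netcat) is installed.
# $1 = host, $2 = client port (defaults to 2181).
zk_role_of() {
    echo stat | nc "$1" "${2:-2181}" | zk_mode
}
```

In a healthy three-node ensemble, calling `zk_role_of` for each of the three IPs should report one leader and two followers. `zkServer.sh status` on each node reports the same information without needing nc.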

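III: Installing and deploying Kafka

In config/server.properties on every node, point the broker at the ZooKeeper ensemble:

zookeeper.connect=192.168.17.129:2181,192.168.17.130:2181,192.168.17.131:2181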
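Besides zookeeper.connect, each broker in a cluster needs its own broker.id. The server.properties shipped with Kafka sets broker.id=0, so copying one file unchanged to all three nodes leaves them with the same id. The sketch below prints the two per-node settings; `broker_settings` is a hypothetical helper, and numbering the brokers 1 to 3 is an assumption made for illustration, not something the original setup states.

```shell
# Print the broker.id and zookeeper.connect lines for one broker.
# $1 = broker id, $2 = ZooKeeper client port, remaining args = ZooKeeper hosts.
broker_settings() {
    id=$1; port=$2; shift 2
    connect=""
    for host in "$@"; do
        # Add a comma before every host except the first.
        connect="${connect:+$connect,}$host:$port"
    done
    echo "broker.id=$id"
    echo "zookeeper.connect=$connect"
}
```

For the first node this would be `broker_settings 1 2181 192.168.17.129 192.168.17.130 192.168.17.131`, with the id changed to 2 and 3 on the other two nodes. The printed lines replace the corresponding lines in server.properties.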

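Start the broker as a daemon on each node:

/opt/kafka/kafka_2.11-0.10.1.0/bin/kafka-server-start.sh -daemon /opt/kafka/kafka_2.11-0.10.1.0/config/server.properties

Create a topic named topicTest with 3 partitions and a replication factor of 2:

bin/kafka-topics.sh --zookeeper 192.168.17.129:2181 --topic topicTest --create --partitions 3 --replication-factor 2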

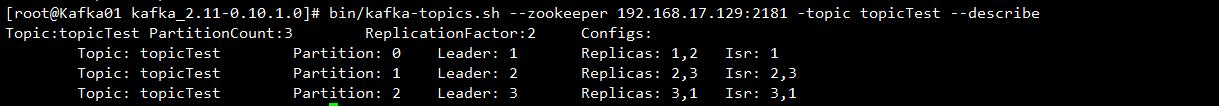
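The partition count and replication factor together decide how many partition replicas the cluster has to host, and Kafka rejects a replication factor larger than the number of available brokers. The arithmetic can be sketched as follows; `topic_replicas` is a hypothetical helper used only to illustrate the rule.

```shell
# Print the total number of partition replicas a topic creates, or fail
# when the replication factor exceeds the number of brokers.
# $1 = brokers, $2 = partitions, $3 = replication factor.
topic_replicas() {
    if [ "$3" -gt "$1" ]; then
        echo "replication factor $3 exceeds broker count $1" >&2
        return 1
    fi
    echo $(( $2 * $3 ))
}
```

For the topic created here, `topic_replicas 3 3 2` gives 6, which works out to two replicas per broker when they are spread evenly over the three nodes.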

Describe the topic to see its partitions, leaders, and replicas:

[root@Kafka01 kafka_2.11-0.10.1.0]# bin/kafka-topics.sh --zookeeper 192.168.17.129:2181 --topic topicTest --describe

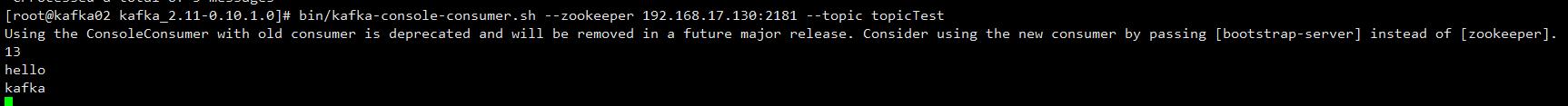

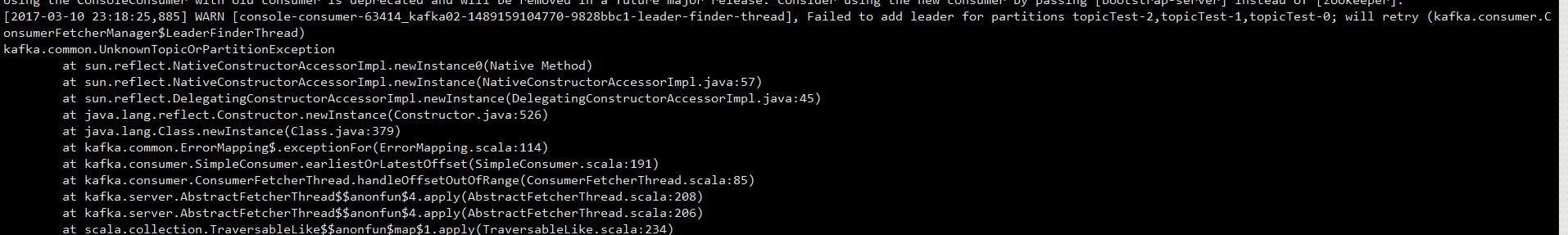

Start a console consumer on the topic:

bin/kafka-console-consumer.sh --zookeeper 192.168.17.130:2181 --topic topicTest

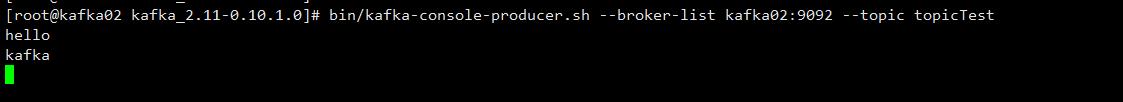

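In another terminal, start a console producer; each line typed into it should appear in the consumer:

bin/kafka-console-producer.sh --broker-list kafka02:9092 --topic topicTest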
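The same round trip can be run without two interactive terminals. The sketch below pipes one message into the console producer and then reads one message back with the console consumer's `--from-beginning` and `--max-messages` options. `kafka_smoke_test` is a hypothetical helper, and all hosts and paths are passed in as arguments rather than assumed.

```shell
# Send one message, then read one message back from the start of the topic.
# $1 = Kafka bin directory, $2 = broker host:port, $3 = ZooKeeper host:port,
# $4 = topic, $5 = message text.
# The consumer prints the oldest message in the topic, which is the one just
# sent only when the topic was empty beforehand.
kafka_smoke_test() {
    echo "$5" | "$1/kafka-console-producer.sh" --broker-list "$2" --topic "$4" &&
    "$1/kafka-console-consumer.sh" --zookeeper "$3" --topic "$4" \
        --from-beginning --max-messages 1
}
```

With the values from this post the call would be `kafka_smoke_test /opt/kafka/kafka_2.11-0.10.1.0/bin kafka02:9092 192.168.17.130:2181 topicTest hello`.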
