Spark WordCount


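I set up a Spark development environment with IDEA and Maven. I ran into one small pitfall along the way, but everything worked in the end. Managing the project with Maven is well worth it.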

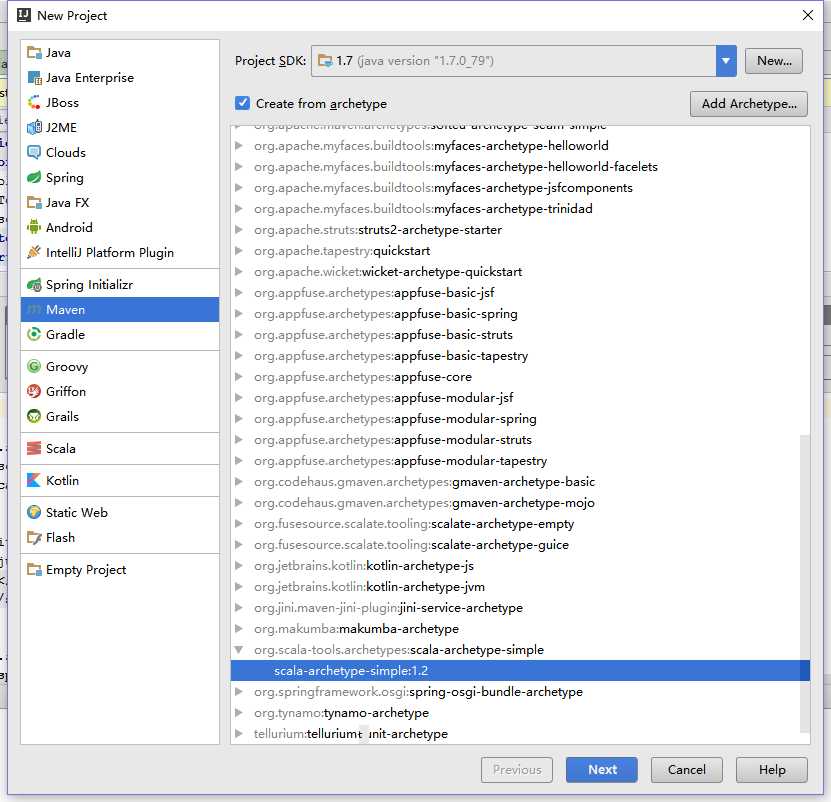

1. Create a new Maven project, choose a Scala project archetype as shown in the figure, and click Next.

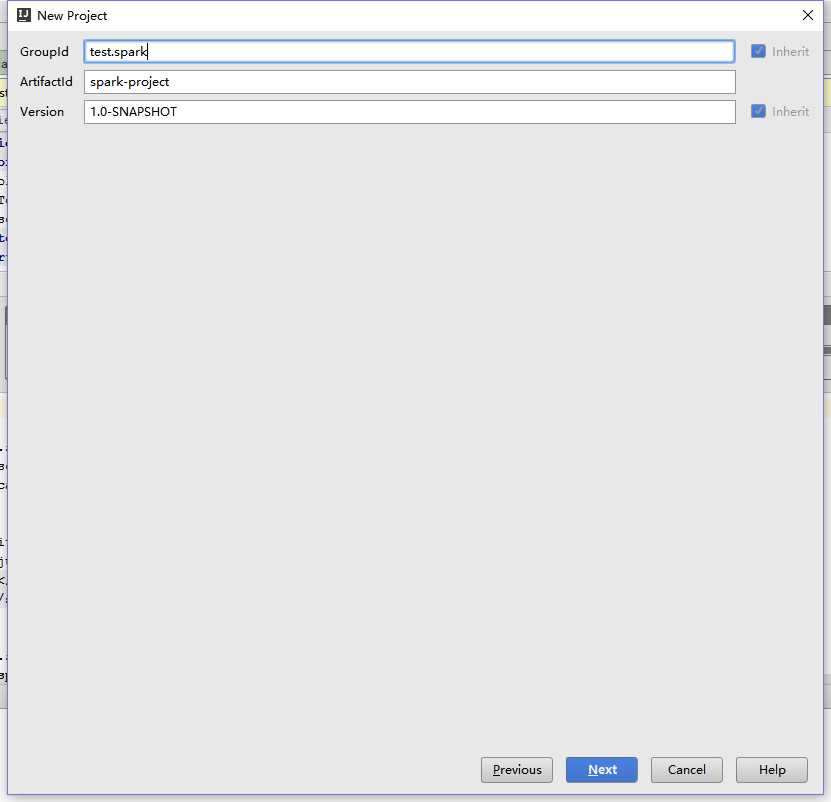

2. Fill in the groupId, artifactId, and project name, then keep clicking Next until the project is created.

3. After the project is generated, delete the test class MySpec.scala under test. If you leave it in place, the run may fail with test errors.

4. Set Scala to a suitable version in the pom file:

    <properties>
      <scala.version>2.10.5</scala.version>
    </properties>

5. As usual, start with a hello world program and confirm that it runs:

    package com.scalatest

    /**
     * Hello world!
     */
    object App {
      def main(args: Array[String]): Unit = {
        println("hello scala")
      }
    }

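6. Next, build the Spark development environment by adding the Maven dependency. Spark 1.6.1 is used here. If you are not sure how to write a particular dependency, you can look it up.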

    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>1.6.1</version>
    </dependency>

7. Write a wordcount program:

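    package com.scalatest

    import org.apache.spark.{SparkConf, SparkContext}

    object Test {
      def main(args: Array[String]): Unit = {
        // Run locally inside a single JVM
        val conf = new SparkConf().setAppName("sparktest").setMaster("local")
        val sc = new SparkContext(conf)
        // Read the input file as an RDD of lines
        val fileRDD = sc.textFile("F:\\data\\wordcount.txt")
        // Split lines into words, pair each word with 1, sum per word, and print
        fileRDD.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).collect().foreach(println)
        sc.stop()
      }
    }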
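To see what the flatMap, map, and reduceByKey chain computes without starting Spark, the same steps can be sketched on ordinary Scala collections. This is only an illustration, not part of the original project: the object name LocalWordCount, the sample input, and the extra filter for empty tokens are assumptions, and groupBy followed by a sum stands in for reduceByKey.

```scala
object LocalWordCount {
  // In-memory word count that mirrors flatMap -> map -> reduceByKey.
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))    // split every line into words
      .filter(_.nonEmpty)       // assumption: drop empty tokens caused by repeated spaces
      .map(word => (word, 1))   // pair each word with a count of 1
      .groupBy(_._1)            // collect the pairs that share a word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // sum the counts per word

  def main(args: Array[String]): Unit = {
    val sample = Seq("hello spark", "hello scala") // hypothetical input lines
    wordCount(sample).foreach(println)
  }
}
```

The Spark version differs mainly in that the data is an RDD rather than a local Seq, and reduceByKey combines the counts per key instead of materializing the groups first.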

8. Running the test program fails with the following error:

    "C:\Program Files\Java\jdk1.7.0_79\bin\java" -Didea.launcher.port=7538 "-Didea.launcher.bin.path=C:\Program Files (x86)\JetBrains\IntelliJ IDEA 2016.2.5\bin" -Dfile.encoding=UTF-8 -classpath "C:\Program Files\Java\jdk1.7.0_79\jre\lib\charsets.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\deploy.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\dnsns.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\jaccess.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\localedata.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\sunec.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\sunmscapi.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\ext\zipfs.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\javaws.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\jce.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\jfr.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\jfxrt.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\jsse.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\management-agent.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\plugin.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\resources.jar;C:\Program Files\Java\jdk1.7.0_79\jre\lib\rt.jar;F:\IDEAworkspace\scalaproject\target\classes;F:\repo\org\apache\spark\spark-core_2.11\1.6.1\spark-core_2.11-1.6.1.jar;F:\repo\org\apache\avro\avro-mapred\1.7.7\avro-mapred-1.7.7-hadoop2.jar;F:\repo\org\apache\avro\avro-ipc\1.7.7\avro-ipc-1.7.7.jar;F:\repo\org\apache\avro\avro\1.7.7\avro-1.7.7.jar;F:\repo\org\apache\avro\avro-ipc\1.7.7\avro-ipc-1.7.7-tests.jar;F:\repo\org\codehaus\jackson\jackson-core-asl\1.9.13\jackson-core-asl-1.9.13.jar;F:\repo\org\codehaus\jackson\jackson-mapper-asl\1.9.13\jackson-mapper-asl-1.9.13.jar;F:\repo\com\twitter\chill_2.11\0.5.0\chill_2.11-0.5.0.jar;F:\repo\com\esotericsoftware\kryo\kryo\2.21\kryo-2.21.jar;F:\repo\com\esotericsoftware\reflectasm\reflectasm\1.07\reflectasm-1.07-shaded.jar;F:\repo\com\esotericsoftware\minlog\minlog\1.2\minlog-1.2.jar;F:\repo\org\objenesis\objenesis\1.2\objenesis-1.2.jar;F:\repo\com\twitter\chill-java\0.5.0\chill-java-0.5.0.jar;F:\repo\org\apache\xbean\xbean-asm5-shaded\4.4\xbean-asm5-shaded-4.4.jar;F:\repo\org\apache\hadoop\hadoop-client\2.2.0\hadoop-client-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-common\2.2.0\hadoop-common-2.2.0.jar;F:\repo\commons-cli\commons-cli\1.2\commons-cli-1.2.jar;F:\repo\org\apache\commons\commons-math\2.1\commons-math-2.1.jar;F:\repo\xmlenc\xmlenc\0.52\xmlenc-0.52.jar;F:\repo\commons-configuration\commons-configuration\1.6\commons-configuration-1.6.jar;F:\repo\commons-collections\commons-collections\3.2.1\commons-collections-3.2.1.jar;F:\repo\commons-digester\commons-digester\1.8\commons-digester-1.8.jar;F:\repo\commons-beanutils\commons-beanutils\1.7.0\commons-beanutils-1.7.0.jar;F:\repo\commons-beanutils\commons-beanutils-core\1.8.0\commons-beanutils-core-1.8.0.jar;F:\repo\org\apache\hadoop\hadoop-auth\2.2.0\hadoop-auth-2.2.0.jar;F:\repo\org\apache\commons\commons-compress\1.4.1\commons-compress-1.4.1.jar;F:\repo\org\tukaani\xz\1.0\xz-1.0.jar;F:\repo\org\apache\hadoop\hadoop-hdfs\2.2.0\hadoop-hdfs-2.2.0.jar;F:\repo\org\mortbay\jetty\jetty-util\6.1.26\jetty-util-6.1.26.jar;F:\repo\org\apache\hadoop\hadoop-mapreduce-client-app\2.2.0\hadoop-mapreduce-client-app-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-mapreduce-client-common\2.2.0\hadoop-mapreduce-client-common-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-yarn-client\2.2.0\hadoop-yarn-client-2.2.0.jar;F:\repo\com\google\inject\guice\3.0\guice-3.0.jar;F:\repo\javax\inject\javax.inject\1\javax.inject-1.jar;F:\repo\aopalliance\aopalliance\1.0\aopalliance-1.0.jar;F:\repo\com\sun\jersey\jersey-test-framework\jersey-test-framework-grizzly2\1.9\jersey-test-framework-grizzly2-1.9.jar;F:\repo\com\sun\jersey\jersey-test-framework\jersey-test-framework-core\1.9\jersey-test-framework-core-1.9.jar;F:\repo\javax\servlet\javax.servlet-api\3.0.1\javax.servlet-api-3.0.1.jar;F:\repo\com\sun\jersey\jersey-client\1.9\jersey-client-1.9.jar;F:\repo\com\sun\jersey\jersey-grizzly2\1.9\jersey-grizzly2-1.9.jar;F:\repo\org\glassfish\grizzly\grizzly-http\2.1.2\grizzly-http-2.1.2.jar;F:\repo\org\glassfish\grizzly\grizzly-framework\2.1.2\grizzly-framework-2.1.2.jar;F:\repo\org\glassfish\gmbal\gmbal-api-only\3.0.0-b023\gmbal-api-only-3.0.0-b023.jar;F:\repo\org\glassfish\external\management-api\3.0.0-b012\management-api-3.0.0-b012.jar;F:\repo\org\glassfish\grizzly\grizzly-http-server\2.1.2\grizzly-http-server-2.1.2.jar;F:\repo\org\glassfish\grizzly\grizzly-rcm\2.1.2\grizzly-rcm-2.1.2.jar;F:\repo\org\glassfish\grizzly\grizzly-http-servlet\2.1.2\grizzly-http-servlet-2.1.2.jar;F:\repo\org\glassfish\javax.servlet\3.1\javax.servlet-3.1.jar;F:\repo\com\sun\jersey\jersey-json\1.9\jersey-json-1.9.jar;F:\repo\org\codehaus\jettison\jettison\1.1\jettison-1.1.jar;F:\repo\stax\stax-api\1.0.1\stax-api-1.0.1.jar;F:\repo\com\sun\xml\bind\jaxb-impl\2.2.3-1\jaxb-impl-2.2.3-1.jar;F:\repo\javax\xml\bind\jaxb-api\2.2.2\jaxb-api-2.2.2.jar;F:\repo\javax\activation\activation\1.1\activation-1.1.jar;F:\repo\org\codehaus\jackson\jackson-jaxrs\1.8.3\jackson-jaxrs-1.8.3.jar;F:\repo\org\codehaus\jackson\jackson-xc\1.8.3\jackson-xc-1.8.3.jar;F:\repo\com\sun\jersey\contribs\jersey-guice\1.9\jersey-guice-1.9.jar;F:\repo\org\apache\hadoop\hadoop-yarn-server-common\2.2.0\hadoop-yarn-server-common-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-mapreduce-client-shuffle\2.2.0\hadoop-mapreduce-client-shuffle-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-yarn-api\2.2.0\hadoop-yarn-api-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-mapreduce-client-core\2.2.0\hadoop-mapreduce-client-core-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-yarn-common\2.2.0\hadoop-yarn-common-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-mapreduce-client-jobclient\2.2.0\hadoop-mapreduce-client-jobclient-2.2.0.jar;F:\repo\org\apache\hadoop\hadoop-annotations\2.2.0\hadoop-annotations-2.2.0.jar;F:\repo\org\apache\spark\spark-launcher_2.11\1.6.1\spark-launcher_2.11-1.6.1.jar;F:\repo\org\apache\spark\spark-network-common_2.11\1.6.1\spark-network-common_2.11-1.6.1.jar;F:\repo\org\apache\spark\spark-network-shuffle_2.11\1.6.1\spark-network-shuffle_2.11-1.6.1.jar;F:\repo\org\fusesource\leveldbjni\leveldbjni-all\1.8\leveldbjni-all-1.8.jar;F:\repo\com\fasterxml\jackson\core\jackson-annotations\2.4.4\jackson-annotations-2.4.4.jar;F:\repo\org\apache\spark\spark-unsafe_2.11\1.6.1\spark-unsafe_2.11-1.6.1.jar;F:\repo\net\java\dev\jets3t\jets3t\0.7.1\jets3t-0.7.1.jar;F:\repo\commons-codec\commons-codec\1.3\commons-codec-1.3.jar;F:\repo\commons-httpclient\commons-httpclient\3.1\commons-httpclient-3.1.jar;F:\repo\org\apache\curator\curator-recipes\2.4.0\curator-recipes-2.4.0.jar;F:\repo\org\apache\curator\curator-framework\2.4.0\curator-framework-2.4.0.jar;F:\repo\org\apache\curator\curator-client\2.4.0\curator-client-2.4.0.jar;F:\repo\org\apache\zookeeper\zookeeper\3.4.5\zookeeper-3.4.5.jar;F:\repo\jline\jline\0.9.94\jline-0.9.94.jar;F:\repo\com\google\guava\guava\14.0.1\guava-14.0.1.jar;F:\repo\org\eclipse\jetty\orbit\javax.servlet\3.0.0.v201112011016\javax.servlet-3.0.0.v201112011016.jar;F:\repo\org\apache\commons\commons-lang3\3.3.2\commons-lang3-3.3.2.jar;F:\repo\org\apache\commons\commons-math3\3.4.1\commons-math3-3.4.1.jar;F:\repo\com\google\code\findbugs\jsr305\1.3.9\jsr305-1.3.9.jar;F:\repo\org\slf4j\slf4j-api\1.7.10\slf4j-api-1.7.10.jar;F:\repo\org\slf4j\jul-to-slf4j\1.7.10\jul-to-slf4j-1.7.10.jar;F:\repo\org\slf4j\jcl-over-slf4j\1.7.10\jcl-over-slf4j-1.7.10.jar;F:\repo\log4j\log4j\1.2.17\log4j-1.2.17.jar;F:\repo\org\slf4j\slf4j-log4j12\1.7.10\slf4j-log4j12-1.7.10.jar;F:\repo\com\ning\compress-lzf\1.0.3\compress-lzf-1.0.3.jar;F:\repo\org\xerial\snappy\snappy-java\1.1.2\snappy-java-1.1.2.jar;F:\repo\net\jpountz\lz4\lz4\1.3.0\lz4-1.3.0.jar;F:\repo\org\roaringbitmap\RoaringBitmap\0.5.11\RoaringBitmap-0.5.11.jar;F:\repo\commons-net\commons-net\2.2\commons-net-2.2.jar;F:\repo\com\typesafe\akka\akka-remote_2.11\2.3.11\akka-remote_2.11-2.3.11.jar;F:\repo\com\typesafe\akka\akka-actor_2.11\2.3.11\akka-actor_2.11-2.3.11.jar;F:\repo\com\typesafe\config\1.2.1\config-1.2.1.jar;F:\repo\io\netty\netty\3.8.0.Final\netty-3.8.0.Final.jar;F:\repo\com\google\protobuf\protobuf-java\2.5.0\protobuf-java-2.5.0.jar;F:\repo\org\uncommons\maths\uncommons-maths\1.2.2a\uncommons-maths-1.2.2a.jar;F:\repo\com\typesafe\akka\akka-slf4j_2.11\2.3.11\akka-slf4j_2.11-2.3.11.jar;F:\repo\org\json4s\json4s-jackson_2.11\3.2.10\json4s-jackson_2.11-3.2.10.jar;F:\repo\org\json4s\json4s-core_2.11\3.2.10\json4s-core_2.11-3.2.10.jar;F:\repo\org\json4s\json4s-ast_2.11\3.2.10\json4s-ast_2.11-3.2.10.jar;F:\repo\org\scala-lang\scalap\2.11.0\scalap-2.11.0.jar;F:\repo\org\scala-lang\scala-compiler\2.11.0\scala-compiler-2.11.0.jar;F:\repo\org\scala-lang\modules\scala-xml_2.11\1.0.1\scala-xml_2.11-1.0.1.jar;F:\repo\org\scala-lang\modules\scala-parser-combinators_2.11\1.0.1\scala-parser-combinators_2.11-1.0.1.jar;F:\repo\com\sun\jersey\jersey-server\1.9\jersey-server-1.9.jar;F:\repo\asm\asm\3.1\asm-3.1.jar;F:\repo\com\sun\jersey\jersey-core\1.9\jersey-core-1.9.jar;F:\repo\org\apache\mesos\mesos\0.21.1\mesos-0.21.1-shaded-protobuf.jar;F:\repo\io\netty\netty-all\4.0.29.Final\netty-all-4.0.29.Final.jar;F:\repo\com\clearspring\analytics\stream\2.7.0\stream-2.7.0.jar;F:\repo\io\dropwizard\metrics\metrics-core\3.1.2\metrics-core-3.1.2.jar;F:\repo\io\dropwizard\metrics\metrics-jvm\3.1.2\metrics-jvm-3.1.2.jar;F:\repo\io\dropwizard\metrics\metrics-json\3.1.2\metrics-json-3.1.2.jar;F:\repo\io\dropwizard\metrics\metrics-graphite\3.1.2\metrics-graphite-3.1.2.jar;F:\repo\com\fasterxml\jackson\core\jackson-databind\2.4.4\jackson-databind-2.4.4.jar;F:\repo\com\fasterxml\jackson\core\jackson-core\2.4.4\jackson-core-2.4.4.jar;F:\repo\com\fasterxml\jackson\module\jackson-module-scala_2.11\2.4.4\jackson-module-scala_2.11-2.4.4.jar;F:\repo\org\scala-lang\scala-reflect\2.11.2\scala-reflect-2.11.2.jar;F:\repo\com\thoughtworks\paranamer\paranamer\2.6\paranamer-2.6.jar;F:\repo\org\apache\ivy\ivy\2.4.0\ivy-2.4.0.jar;F:\repo\oro\oro\2.0.8\oro-2.0.8.jar;F:\repo\org\tachyonproject\tachyon-client\0.8.2\tachyon-client-0.8.2.jar;F:\repo\commons-lang\commons-lang\2.4\commons-lang-2.4.jar;F:\repo\commons-io\commons-io\2.4\commons-io-2.4.jar;F:\repo\org\tachyonproject\tachyon-underfs-hdfs\0.8.2\tachyon-underfs-hdfs-0.8.2.jar;F:\repo\org\tachyonproject\tachyon-underfs-s3\0.8.2\tachyon-underfs-s3-0.8.2.jar;F:\repo\org\tachyonproject\tachyon-underfs-local\0.8.2\tachyon-underfs-local-0.8.2.jar;F:\repo\net\razorvine\pyrolite\4.9\pyrolite-4.9.jar;F:\repo\net\sf\py4j\py4j\0.9\py4j-0.9.jar;F:\repo\org\spark-project\spark\unused\1.0.0\unused-1.0.0.jar;F:\repo\org\scala-lang\scala-library\2.10.5\scala-library-2.10.5.jar;C:\Program Files (x86)\JetBrains\IntelliJ IDEA 2016.2.5\lib\idea_rt.jar" com.intellij.rt.execution.application.AppMain com.scalatest.Test
    Exception in thread "main" java.lang.NoSuchMethodError: scala.Predef$.$conforms()Lscala/Predef$$less$colon$less;
        at org.apache.spark.util.Utils$.getSystemProperties(Utils.scala:1546)
        at org.apache.spark.SparkConf.<init>(SparkConf.scala:59)
        at org.apache.spark.SparkConf.<init>(SparkConf.scala:53)
        at com.scalatest.Test$.main(Test.scala:12)
        at com.scalatest.Test.main(Test.scala)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at com.intellij.rt.execution.application.AppMain.main(AppMain.java:147)

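Changing the Scala version to 2.11.0 and running again makes the error go away. The cause is a binary mismatch: the classpath in the failing run has spark-core_2.11, which is built against Scala 2.11, next to scala-library-2.10.5.jar, and the Predef.$conforms method named in the exception does not exist in the Scala 2.10 library. With that fixed, the basic IDEA + Maven development environment for Spark is in place.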
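The rule behind the fix is that the suffix of a Scala artifact (the 2.11 in spark-core_2.11) has to match the major.minor part of the Scala library on the classpath. A minimal sketch of that check follows. The object and function names are hypothetical helpers written for this post, not part of Spark or Maven, and the sketch assumes the usual artifact_<scalaBinaryVersion> naming.

```scala
object ScalaVersionCheck {
  // "2.10.5" -> "2.10": keep only the major.minor part of a Scala version.
  def binaryVersion(fullVersion: String): String =
    fullVersion.split("\\.").take(2).mkString(".")

  // "spark-core_2.11" -> Some("2.11"); None when the artifactId has no _<version> suffix.
  def artifactScalaVersion(artifactId: String): Option[String] = {
    val idx = artifactId.lastIndexOf('_')
    if (idx >= 0 && idx < artifactId.length - 1) Some(artifactId.substring(idx + 1)) else None
  }

  // An artifact without a suffix is treated as compatible with any Scala version.
  def isCompatible(artifactId: String, scalaVersion: String): Boolean =
    artifactScalaVersion(artifactId).forall(_ == binaryVersion(scalaVersion))

  def main(args: Array[String]): Unit = {
    println(isCompatible("spark-core_2.11", "2.10.5")) // the failing setup from step 8
    println(isCompatible("spark-core_2.11", "2.11.0")) // the setup after the fix
    // Version of the Scala library that is actually loaded at runtime
    println(scala.util.Properties.versionNumberString)
  }
}
```

Printing scala.util.Properties.versionNumberString from inside the project is a quick way to confirm which scala-library jar IDEA really put on the classpath.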

Next time I plan to look into how Spark implements file reading, which borrows many of Hadoop's base classes.
