[ML] {ud120} Lesson 4: Decision Trees

Posted by ecoflex

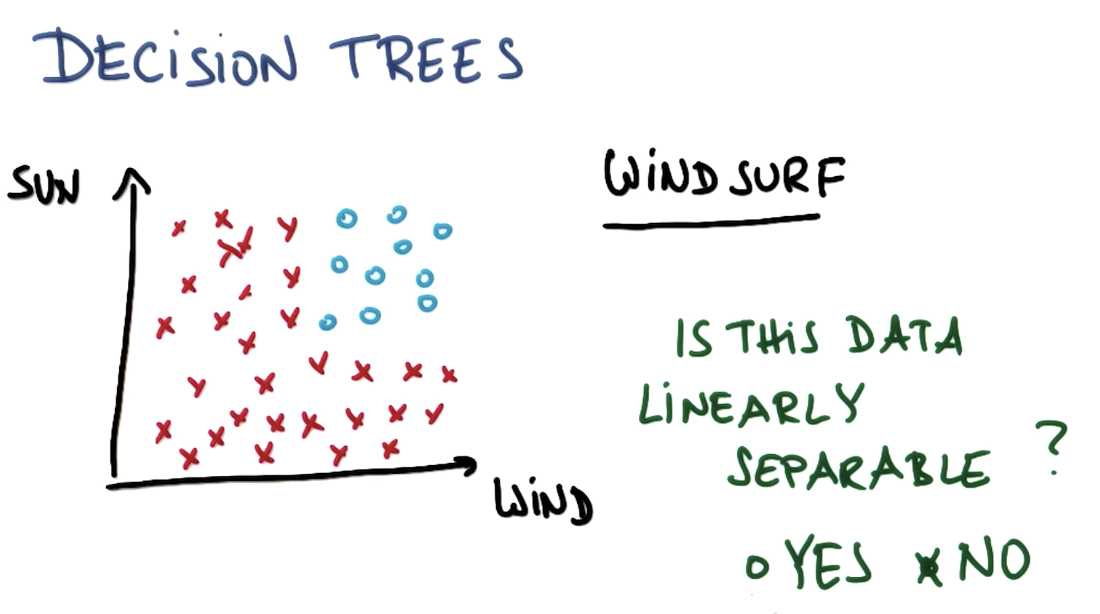

Linearly Separable Data

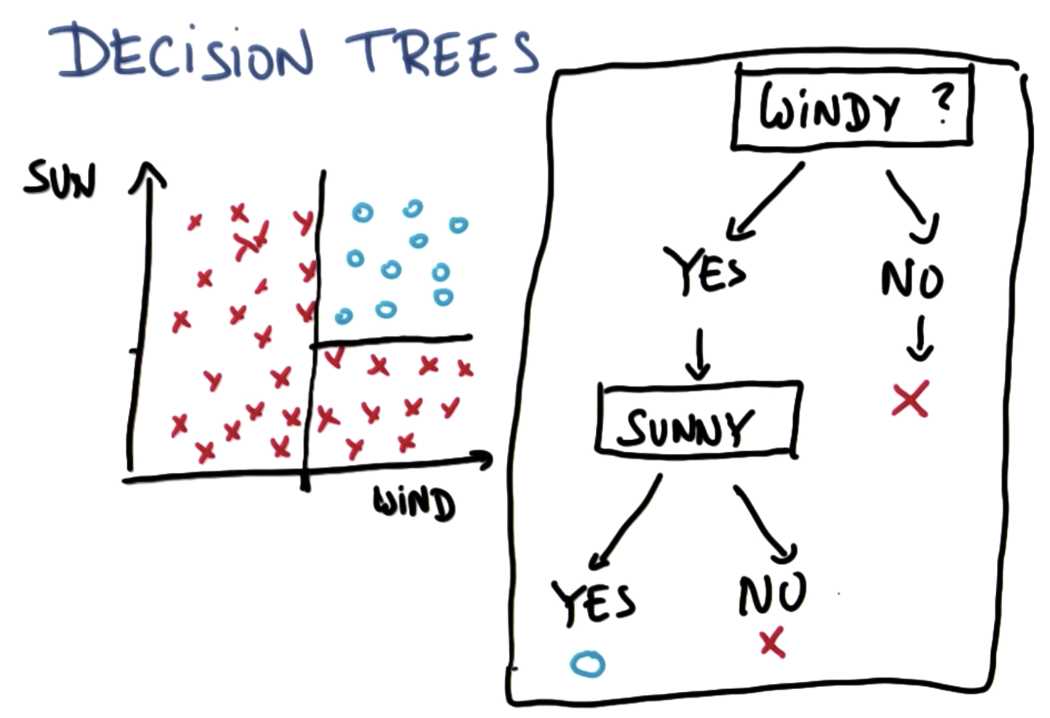

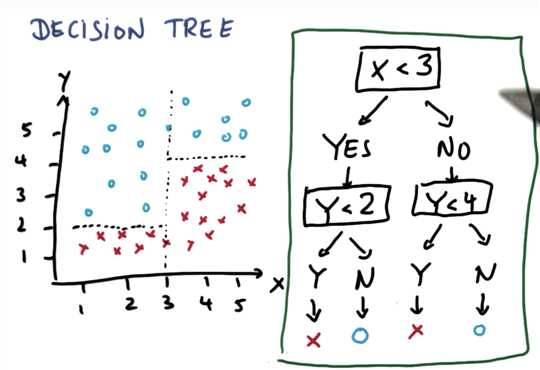

Multiple Linear Questions

Constructing a Decision Tree First Split

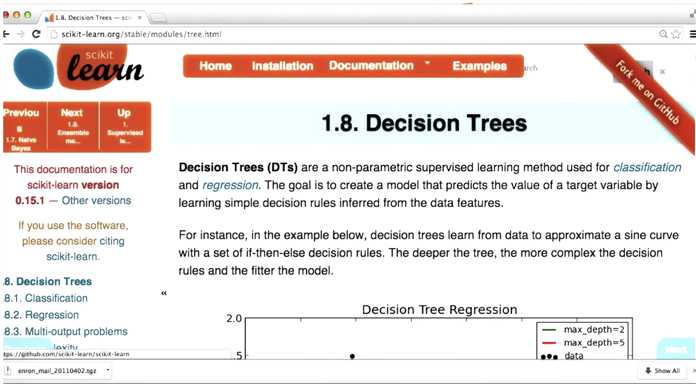

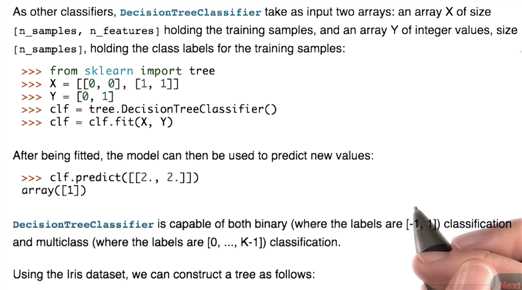

Coding A Decision Tree

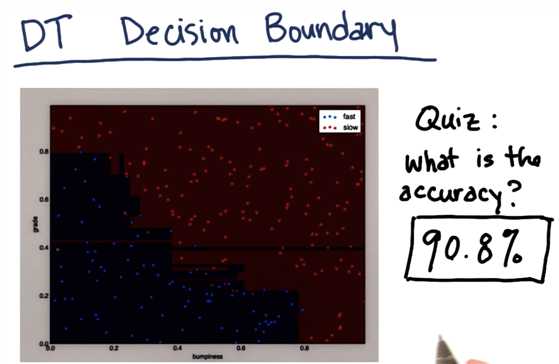

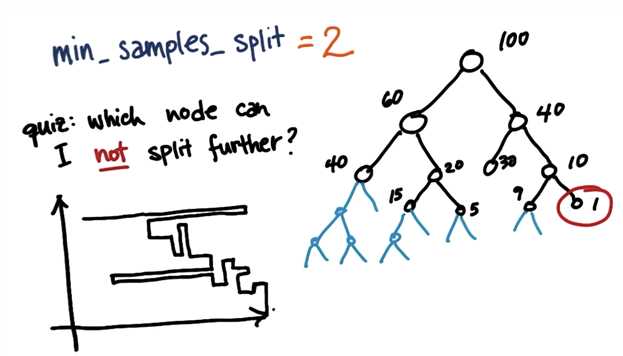

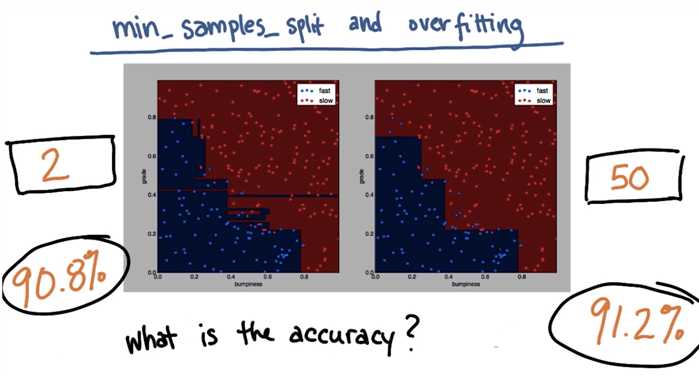

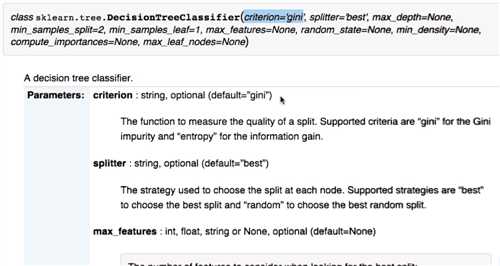

Decision Tree Parameters

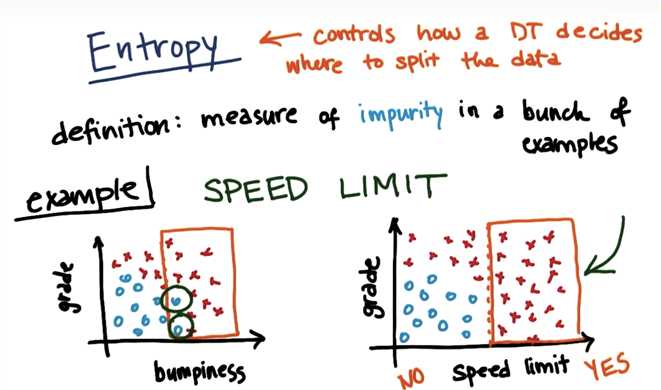

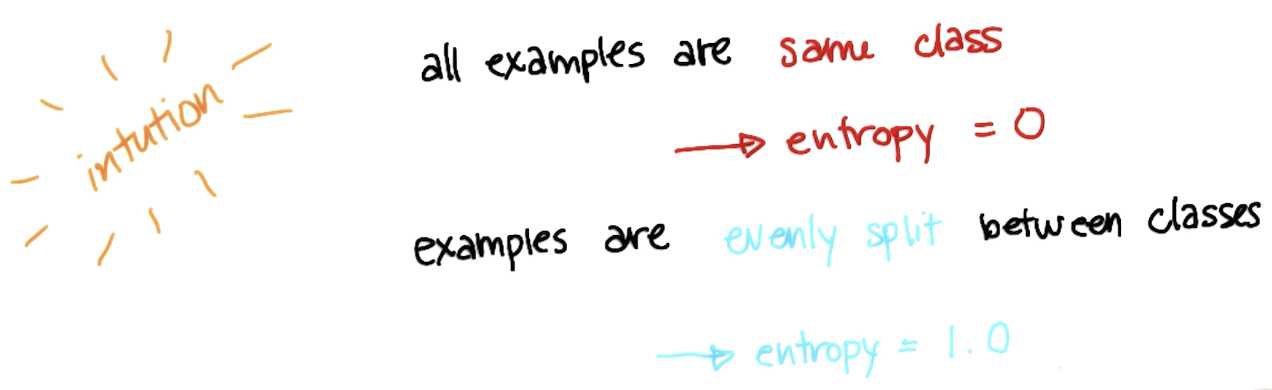

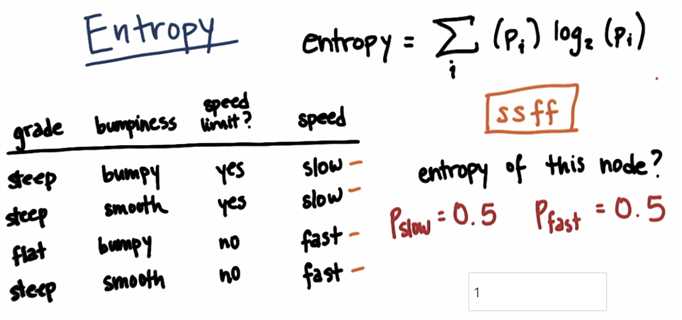

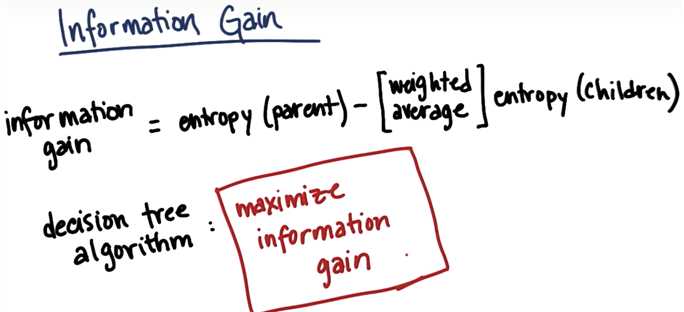

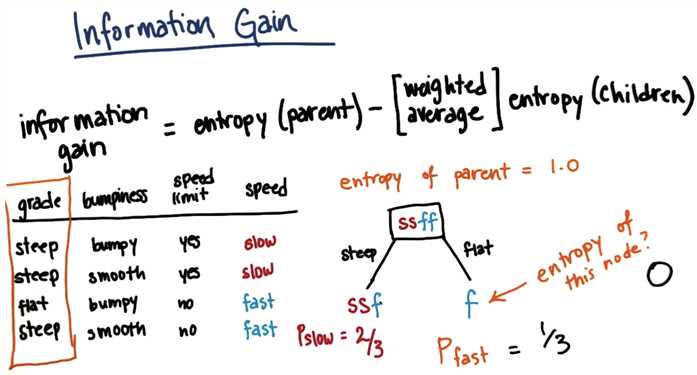

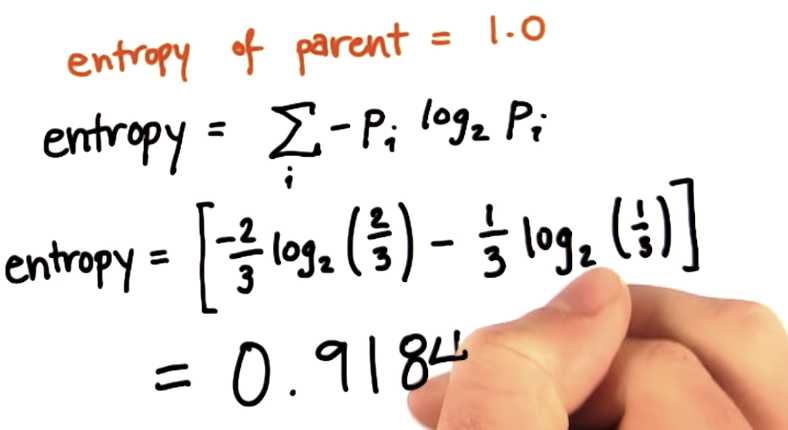

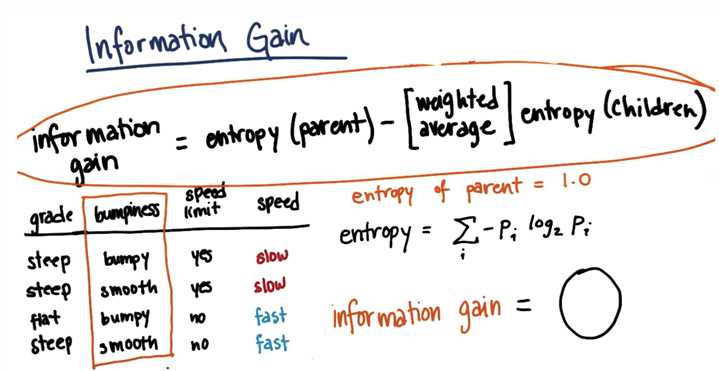

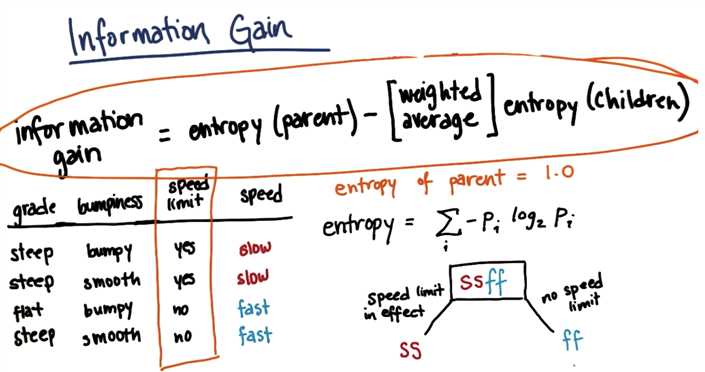

Data Impurity and Entropy

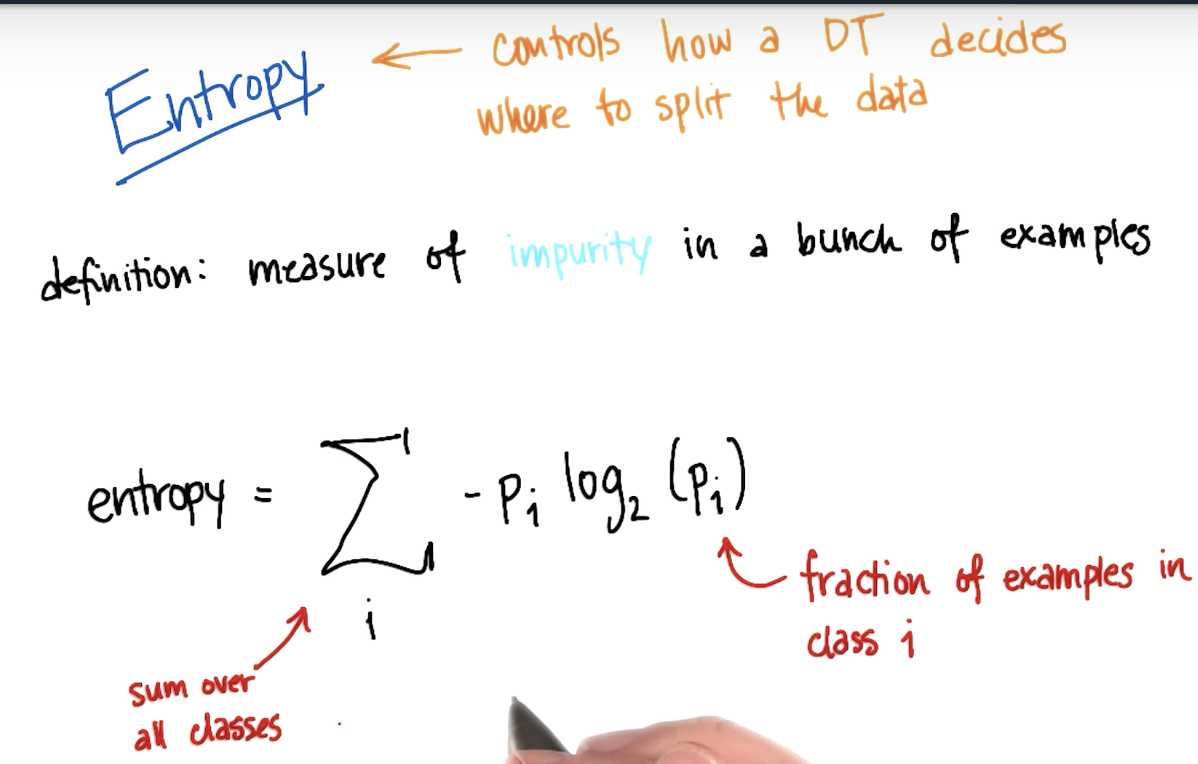

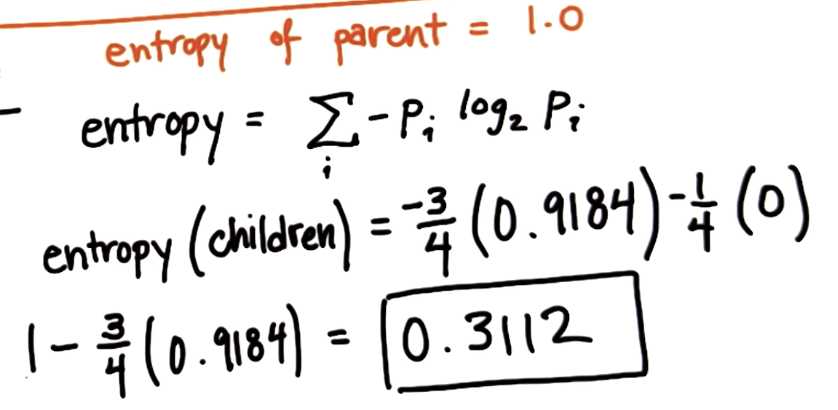

Formula of Entropy

There is an error in the entropy formula written on this slide: a negative (-) sign should precede the sum.

$$\text{Entropy} = -\sum_i p_i \log_2 (p_i)$$
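As a rough illustration of the corrected formula, here is a minimal Python sketch that computes the entropy of a list of class labels. The function name `entropy` and the example labels are assumptions made for this sketch, not part of the course's starter code.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Entropy in bits of a list of class labels: sum over classes of -p_i * log2(p_i)."""
    total = len(labels)
    counts = Counter(labels)
    # p_i is the fraction of examples belonging to class i.
    return sum(-(n / total) * log2(n / total) for n in counts.values())


# Hypothetical labels: an evenly mixed node and a pure node.
mixed = ["slow", "slow", "fast", "fast"]
pure = ["fast", "fast", "fast", "fast"]

print(entropy(mixed))  # 1.0 (maximally impure for two classes)
print(entropy(pure))   # 0.0 (all examples share one class)
```

Note the minus sign inside the sum: without it, the result would be negative, which is the error pointed out above.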

IG = 1
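Information gain (IG) is the parent node's entropy minus the weighted average entropy of its children. The sketch below shows one way an information gain of 1 can arise: a balanced two-class node split into two pure children. The helper names and the example labels are hypothetical and chosen only for illustration.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Entropy in bits of a list of class labels."""
    total = len(labels)
    return sum(-(n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted average entropy of the child nodes."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical node with two examples of each class.
parent = ["slow", "slow", "fast", "fast"]

# A split that separates the classes perfectly.
perfect_split = [["slow", "slow"], ["fast", "fast"]]
# A split that leaves both children as mixed as the parent.
useless_split = [["slow", "fast"], ["slow", "fast"]]

print(information_gain(parent, perfect_split))  # 1.0
print(information_gain(parent, useless_split))  # 0.0
```

A decision tree that uses entropy as its criterion prefers the split with the highest information gain at each node.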

Tuning Criterion Parameter

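Gini impurity is another measure of how mixed (impure) a node is. In scikit-learn's DecisionTreeClassifier, the criterion parameter selects the measure: "gini" (the default) or "entropy".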
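For comparison with entropy, Gini impurity is defined as one minus the sum of squared class fractions. The following is a small sketch under that definition; the function name `gini` and the sample labels are assumptions for illustration only.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels: 1 - sum over classes of p_i ** 2."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


# Hypothetical labels: an evenly mixed node and a pure node.
print(gini(["slow", "slow", "fast", "fast"]))  # 0.5 (maximum for two classes)
print(gini(["fast", "fast", "fast", "fast"]))  # 0.0 (pure node)
```

Like entropy, Gini impurity is zero for a pure node and largest when the classes are evenly mixed, so both criteria tend to favor similar splits.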

Decision Tree Mini-Project

In this project, we will again try to identify the authors in a body of emails, this time using a decision tree. The starter code is in decision_tree/dt_author_id.py.

Get the data for this mini-project from here.

Once again, you'll do the mini-project on your own computer and enter your answers in the web browser. You can find the instructions for the decision tree mini-project here.
