吴裕雄 Python machine learning: ensemble learning with the AdaBoost classification model

Posted by tszr

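Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers the AdaBoost classification model in ensemble learning from 吴裕雄's Python machine learning series. We hope it is useful to you.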

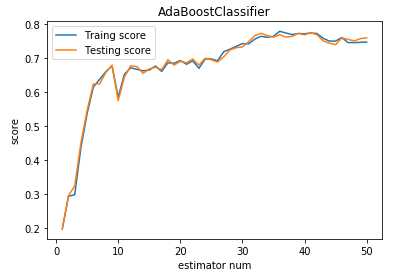

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets, ensemble
from sklearn.model_selection import train_test_split


def load_data_classification():
    '''
    Load the dataset used for the classification problem.
    '''
    # Use the digits dataset bundled with scikit-learn
    digits = datasets.load_digits()
    # Stratified split into training and test sets; the test set is 1/4 of the original data
    return train_test_split(digits.data, digits.target, test_size=0.25,
                            random_state=0, stratify=digits.target)


# AdaBoost classification model (ensemble learning)
def test_AdaBoostClassifier(*data):
    '''
    Test AdaBoostClassifier and plot how its predictive performance changes
    with the number of base classifiers.
    '''
    X_train, X_test, y_train, y_test = data
    clf = ensemble.AdaBoostClassifier(learning_rate=0.1)
    clf.fit(X_train, y_train)
    ## Plot
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    # Boosting can stop early, so use the number of estimators actually fitted
    estimators_num = len(clf.estimators_)
    X = range(1, estimators_num + 1)
    # staged_score yields the accuracy after each boosting iteration
    ax.plot(list(X), list(clf.staged_score(X_train, y_train)), label="Training score")
    ax.plot(list(X), list(clf.staged_score(X_test, y_test)), label="Testing score")
    ax.set_xlabel("estimator num")
    ax.set_ylabel("score")
    ax.legend(loc="best")
    ax.set_title("AdaBoostClassifier")
    plt.show()


# Load the classification data
X_train, X_test, y_train, y_test = load_data_classification()
# Call test_AdaBoostClassifier
test_AdaBoostClassifier(X_train, X_test, y_train, y_test)
```
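
The boosting loop behind these staged scores can be made more concrete with a minimal from-scratch sketch of the multi-class SAMME reweighting scheme. It assumes decision stumps (`DecisionTreeClassifier(max_depth=1)`) as base learners. The functions `samme_fit` and `samme_predict` are hypothetical illustrations and are not scikit-learn's implementation. The iris dataset is used here only to keep the example fast.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier


def samme_fit(X, y, n_estimators=50, learning_rate=1.0):
    """Hypothetical SAMME boosting loop with decision stumps as base learners."""
    classes = np.unique(y)
    n_classes = len(classes)
    w = np.full(len(y), 1.0 / len(y))  # start with uniform sample weights
    estimators, alphas = [], []
    for _ in range(n_estimators):
        stump = DecisionTreeClassifier(max_depth=1, random_state=0)
        stump.fit(X, y, sample_weight=w)
        incorrect = stump.predict(X) != y
        err = w[incorrect].sum() / w.sum()  # weighted training error
        if err >= 1.0 - 1.0 / n_classes:  # no better than random guessing: stop
            break
        err = max(err, 1e-10)  # guard against division by zero on a perfect fit
        # Estimator weight, shrunk by the learning rate
        alpha = learning_rate * (np.log((1.0 - err) / err) + np.log(n_classes - 1.0))
        estimators.append(stump)
        alphas.append(alpha)
        # Up-weight the misclassified samples, then renormalise
        w = w * np.exp(alpha * incorrect)
        w = w / w.sum()
    return classes, estimators, alphas


def samme_predict(model, X):
    """Weighted vote: each stump adds its alpha to the class it predicts."""
    classes, estimators, alphas = model
    votes = np.zeros((X.shape[0], len(classes)))
    for stump, alpha in zip(estimators, alphas):
        pred = stump.predict(X)
        votes += alpha * (pred[:, None] == classes[None, :])
    return classes[np.argmax(votes, axis=1)]


X, y = load_iris(return_X_y=True)
model = samme_fit(X, y, n_estimators=50, learning_rate=0.5)
accuracy = np.mean(samme_predict(model, X) == y)
print("stumps kept:", len(model[1]))
print("training accuracy:", accuracy)
```

Each stump's vote weight `alpha` grows as its weighted error shrinks. Misclassified samples are multiplied by `exp(alpha)`, so the next stump concentrates on them.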

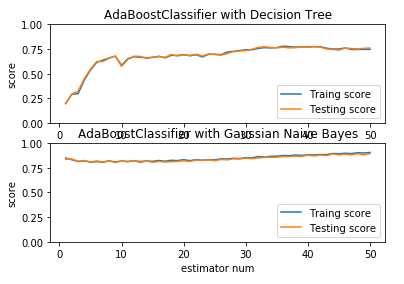

```python
def test_AdaBoostClassifier_base_classifier(*data):
    '''
    Test how AdaBoostClassifier's predictive performance depends on the number
    of base classifiers and on the type of base classifier.
    '''
    from sklearn.naive_bayes import GaussianNB
    X_train, X_test, y_train, y_test = data
    fig = plt.figure()
    ax = fig.add_subplot(2, 1, 1)
    ########### Default base classifier (a decision tree) #############
    clf = ensemble.AdaBoostClassifier(learning_rate=0.1)
    clf.fit(X_train, y_train)
    ## Plot
    estimators_num = len(clf.estimators_)
    X = range(1, estimators_num + 1)
    ax.plot(list(X), list(clf.staged_score(X_train, y_train)), label="Training score")
    ax.plot(list(X), list(clf.staged_score(X_test, y_test)), label="Testing score")
    ax.set_xlabel("estimator num")
    ax.set_ylabel("score")
    ax.legend(loc="lower right")
    ax.set_ylim(0, 1)
    ax.set_title("AdaBoostClassifier with Decision Tree")
    ####### Gaussian Naive Bayes base classifier ########
    ax = fig.add_subplot(2, 1, 2)
    # scikit-learn 1.2+ calls this parameter `estimator`;
    # `base_estimator` was deprecated in 1.2 and removed in 1.4
    clf = ensemble.AdaBoostClassifier(learning_rate=0.1, estimator=GaussianNB())
    clf.fit(X_train, y_train)
    ## Plot
    estimators_num = len(clf.estimators_)
    X = range(1, estimators_num + 1)
    ax.plot(list(X), list(clf.staged_score(X_train, y_train)), label="Training score")
    ax.plot(list(X), list(clf.staged_score(X_test, y_test)), label="Testing score")
    ax.set_xlabel("estimator num")
    ax.set_ylabel("score")
    ax.legend(loc="lower right")
    ax.set_ylim(0, 1)
    ax.set_title("AdaBoostClassifier with Gaussian Naive Bayes")
    plt.show()


# Call test_AdaBoostClassifier_base_classifier
test_AdaBoostClassifier_base_classifier(X_train, X_test, y_train, y_test)
```
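
The keyword for the base learner changed across scikit-learn releases: it was `base_estimator` before 1.2 and is `estimator` from 1.2 onward. A small helper can choose whichever name the installed version accepts. This is a hedged sketch. `make_adaboost` is a hypothetical helper that inspects the constructor signature instead of parsing version strings.

```python
import inspect

from sklearn.datasets import load_digits
from sklearn.ensemble import AdaBoostClassifier
from sklearn.naive_bayes import GaussianNB


def make_adaboost(base, **kwargs):
    """Hypothetical helper: build an AdaBoostClassifier around `base`, using
    whichever keyword (`estimator` or `base_estimator`) this version accepts."""
    params = inspect.signature(AdaBoostClassifier.__init__).parameters
    key = "estimator" if "estimator" in params else "base_estimator"
    return AdaBoostClassifier(**{key: base}, **kwargs)


X, y = load_digits(return_X_y=True)
clf = make_adaboost(GaussianNB(), learning_rate=0.1, n_estimators=20)
clf.fit(X, y)
print(type(clf.estimators_[0]).__name__)  # GaussianNB
print("training accuracy:", clf.score(X, y))
```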

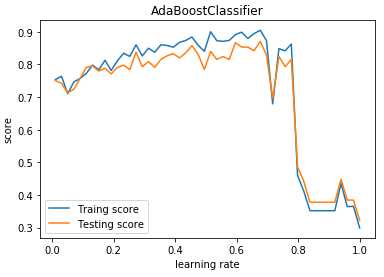

```python
def test_AdaBoostClassifier_learning_rate(*data):
    '''
    Test how AdaBoostClassifier's predictive performance depends on the learning rate.
    '''
    X_train, X_test, y_train, y_test = data
    # 50 evenly spaced learning rates from 0.01 to 1 (np.linspace defaults to num=50)
    learning_rates = np.linspace(0.01, 1)
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    training_scores = []
    testing_scores = []
    for learning_rate in learning_rates:
        clf = ensemble.AdaBoostClassifier(learning_rate=learning_rate, n_estimators=500)
        clf.fit(X_train, y_train)
        training_scores.append(clf.score(X_train, y_train))
        testing_scores.append(clf.score(X_test, y_test))
    ax.plot(learning_rates, training_scores, label="Training score")
    ax.plot(learning_rates, testing_scores, label="Testing score")
    ax.set_xlabel("learning rate")
    ax.set_ylabel("score")
    ax.legend(loc="best")
    ax.set_title("AdaBoostClassifier")
    plt.show()


# Call test_AdaBoostClassifier_learning_rate
test_AdaBoostClassifier_learning_rate(X_train, X_test, y_train, y_test)
```
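
The learning rate acts as shrinkage on each base estimator's weight. Under the SAMME update, an estimator with weighted error `err` on a `K`-class problem receives weight `alpha = learning_rate * (log((1 - err) / err) + log(K - 1))`, and every sample it misclassifies has its weight multiplied by `exp(alpha)`. The hypothetical helper `samme_alpha` below shows how a smaller learning rate damps both quantities. This damping is why a small learning rate typically needs more estimators to reach the same fit. The error rate of 0.3 is an illustrative assumption, not a value measured on the digits data.

```python
import math


def samme_alpha(err, n_classes, learning_rate=1.0):
    """Hypothetical helper: SAMME estimator weight for weighted error `err`."""
    return learning_rate * (math.log((1.0 - err) / err) + math.log(n_classes - 1.0))


# An assumed base estimator with 30% weighted error on a 10-class problem
for lr in (0.01, 0.1, 0.5, 1.0):
    alpha = samme_alpha(0.3, n_classes=10, learning_rate=lr)
    # exp(alpha) is the factor applied to each misclassified sample's weight
    print(f"learning_rate={lr:<5} alpha={alpha:.4f} boost factor={math.exp(alpha):.4f}")
```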

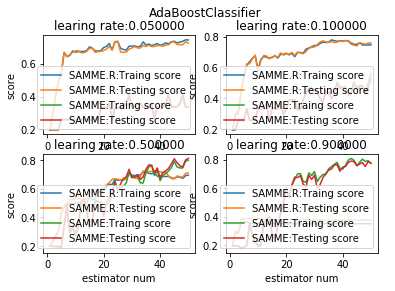

```python
def test_AdaBoostClassifier_algorithm(*data):
    '''
    Test how AdaBoostClassifier's predictive performance depends on the learning
    rate and on the `algorithm` parameter.
    '''
    X_train, X_test, y_train, y_test = data
    # Note: 'SAMME.R' was deprecated in scikit-learn 1.4 and scheduled for
    # removal in 1.6, so this comparison needs an older release
    algorithms = ['SAMME.R', 'SAMME']
    fig = plt.figure()
    learning_rates = [0.05, 0.1, 0.5, 0.9]
    for i, learning_rate in enumerate(learning_rates):
        ax = fig.add_subplot(2, 2, i + 1)
        for algorithm in algorithms:
            clf = ensemble.AdaBoostClassifier(learning_rate=learning_rate, algorithm=algorithm)
            clf.fit(X_train, y_train)
            ## Plot
            estimators_num = len(clf.estimators_)
            X = range(1, estimators_num + 1)
            ax.plot(list(X), list(clf.staged_score(X_train, y_train)),
                    label="%s: Training score" % algorithm)
            ax.plot(list(X), list(clf.staged_score(X_test, y_test)),
                    label="%s: Testing score" % algorithm)
        ax.set_xlabel("estimator num")
        ax.set_ylabel("score")
        ax.legend(loc="lower right")
        ax.set_title("learning rate: %f" % learning_rate)
    fig.suptitle("AdaBoostClassifier")
    plt.show()


# Call test_AdaBoostClassifier_algorithm
test_AdaBoostClassifier_algorithm(X_train, X_test, y_train, y_test)
```
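
The two `algorithm` options differ in what each base estimator contributes to the final decision. SAMME adds a weighted vote `alpha` to the single predicted class. SAMME.R, the real-valued variant, uses the estimator's class-probability estimates `p_k(x)` and adds `(K - 1) * (log p_k(x) - (1/K) * sum_j log p_j(x))` to every class `k`. The sketch below computes both contributions for a toy probability matrix. The helper names are hypothetical. For SAMME, the predicted class is assumed to be the argmax of the same probabilities, and the clipping constant `eps` is an assumption added to avoid `log(0)`.

```python
import numpy as np


def samme_contribution(proba, alpha):
    """SAMME: add `alpha` to the single class each sample is predicted as
    (assumed here to be the argmax of the probability estimates)."""
    contrib = np.zeros_like(proba)
    contrib[np.arange(proba.shape[0]), np.argmax(proba, axis=1)] = alpha
    return contrib


def samme_r_contribution(proba, eps=1e-12):
    """SAMME.R: real-valued contribution computed from class probabilities."""
    n_classes = proba.shape[1]
    log_proba = np.log(np.clip(proba, eps, None))  # clip to avoid log(0)
    return (n_classes - 1) * (log_proba - log_proba.mean(axis=1, keepdims=True))


# Toy class-probability estimates of one base estimator: 3 samples, 3 classes
proba = np.array([
    [0.70, 0.20, 0.10],  # confident in class 0
    [0.40, 0.35, 0.25],  # barely prefers class 0
    [1 / 3, 1 / 3, 1 / 3],  # no preference
])
print(samme_contribution(proba, alpha=0.8))
print(np.round(samme_r_contribution(proba), 3))
```

Under SAMME.R the uninformative third sample contributes essentially nothing, and the confident first sample contributes more than the hesitant second one. SAMME casts the same full vote `alpha` for all three samples. For the third sample, `argmax` breaks the tie toward class 0.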
