[pytorch] 官网教程+注释

Posted aoru45

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了[pytorch] 官网教程+注释相关的知识,希望对你有一定的参考价值。

pytorch官网教程+注释

Classifier

import torch

import torchvision

import torchvision.transforms as transformstransform = transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,

download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=3,

shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,

download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=3,

shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

Files already downloaded and verified

Files already downloaded and verifiedimport matplotlib.pyplot as plt

import numpy as np

def imshow(img):

img = img/2 + 0.5 # 因为之前标准化的时候除以0.5就是乘以2,还减了0.5,所以回复原来的亮度值

npimg = img.numpy()

plt.imshow(np.transpose(npimg,(1,2,0))) # 转置根本的是改变索引顺序,即bgr转rgb

plt.show()

dataiter = iter(trainloader)

images,labels = dataiter.next()

print(images.shape) #torch.Size([4, 3, 32, 32]) bchw

print(torchvision.utils.make_grid(images).shape) #torch.Size([3, 36, 138])

#imshow(torchvision.utils.make_grid(images)) # 以格子形式显示多张图片

#print(" ".join("%5s"% classes[labels[j]] for j in range(4)))

torch.Size([3, 3, 32, 32])

torch.Size([3, 36, 104])import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def __init__(self):

super(Net,self).__init__()

self.conv1 = nn.Conv2d(3,6,5)

self.pool = nn.MaxPool2d(2,2)

self.conv2 = nn.Conv2d(6,16,5)

self.fc1 = nn.Linear(16*5*5,120)

self.fc2 = nn.Linear(120,84)

self.fc3 = nn.Linear(84,10)

def forward(self,x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1,16*5*5)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

net = Net()

import torch.optim as optim

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(net.parameters(),lr = 0.001,momentum = 0.9)

for epoch in range(2):

running_loss = 0.0

for i,data in enumerate(trainloader):

inputs,labels = data

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs,labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

if i%2000 ==1999:

print('[%d,%5d] loss:%.3f'%(epoch+1,i+1,running_loss/2000))

running_loss = 0.0

print("finished training")[1, 2000] loss:1.468

[1, 4000] loss:1.410

[1, 6000] loss:1.378

[1, 8000] loss:1.363

[1,10000] loss:1.330

[1,12000] loss:1.299

[2, 2000] loss:1.245

[2, 4000] loss:1.217

[2, 6000] loss:1.237

[2, 8000] loss:1.197

[2,10000] loss:1.193

[2,12000] loss:1.196

finished trainingdataiter = iter(testloader)

images,labels = dataiter.next()

imshow(torchvision.utils.make_grid(images))

outputs = net(images)

_,predicted = torch.max(outputs,1)

print("predicted"," ".join([classes[predicted[j]] for j in range(4)]))predicted cat ship ship shipcorrect = 0

total = 0

with torch.no_grad():

for data in testloader:

images,labels = data

outputs = net(images)

_,predicted = torch.max(outputs.data,1)

total += labels.size(0) # 等价于labels.size()[0]

correct+= (predicted==labels).sum().item()

print("acc:{}%%".format(100*correct/total))acc:56.96%%device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")print(device)cpunet.to(device)Net(

(conv1): Conv2d(3, 6, kernel_size=(5, 5), stride=(1, 1))

(pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(conv2): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))

(fc1): Linear(in_features=400, out_features=120, bias=True)

(fc2): Linear(in_features=120, out_features=84, bias=True)

(fc3): Linear(in_features=84, out_features=10, bias=True)

)DataLoading And Processing

from __future__ import print_function,division

import os

import torch

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from torch.utils.data import Dataset,DataLoader

from torchvision import transforms,utils

import warnings

from skimage import io,transform

warnings.filterwarnings("ignore")

plt.ion()landmarks_frame = pd.read_csv("data/faces/face_landmarks.csv") # name x y x y ...

#print(landmarks_frame.columns.tolist())

n = 65 # 第65个样本

img_name = landmarks_frame.iloc[n,0]# 第65个样本的文件名

#print(img_name)

#print(landmarks_frame.iloc[n,1:])

landmarks = landmarks_frame.iloc[n,1:].as_matrix()# 第65个样本的样本值向量

#如果不加上as_matrix的结果就是feature name + feature val,加了之后只有feature val

#print(landmarks)

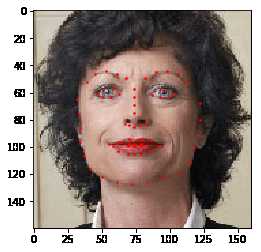

landmarks = landmarks.astype('float').reshape(-1,2) # 两个一组,组成两列的矩阵def show_landmarks(image,landmarks):

plt.imshow(image)

plt.scatter(landmarks[:,0],landmarks[:,1],s=10,marker='.',c='r')

#plt.pause(0.001) # python 窗口用得着plt.figure()

show_landmarks(io.imread(os.path.join("data/faces/",img_name)),landmarks)

# torch.utils.data.Dataset 是一个抽象类,我们的dataset需要继承这个类,才能对其进行操作

class FaceLandmarksDataset(Dataset):

def __init__(self,csv_file,root_dir,transform=None):

self.landmarks_frame = pd.read_csv(csv_file)

self.root_dir = root_dir

self.transform = transform

def __len__(self):

return len(self.landmarks_frame)

def __getitem__(self,idx):

img_name = os.path.join(

self.root_dir,

self.landmarks_frame.iloc[idx,0]

)

image = io.imread(img_name)

landmarks = self.landmarks_frame.iloc[idx,1:].as_matrix()

landmarks = landmarks.astype('float').reshape([-1,2])

sample = {"image":image,"landmarks":landmarks}

if self.transform:

sample = self.transform(sample)

return sample

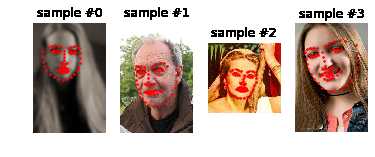

face_dataset = FaceLandmarksDataset(csv_file='data/faces/face_landmarks.csv',root_dir='data/faces/')

fig = plt.figure()

for i in range(len(face_dataset)):

sample = face_dataset[i]

print(i,sample['image'].shape,sample['landmarks'].shape)

ax = plt.subplot(1,4,i+1)

#plt.tight_layout()

ax.set_title("sample #{}".format(i))

ax.axis("off")

show_landmarks(**sample) #**为python 拆包,将dict拆解为x=a,y=b的格式

if i==3:

plt.show()

break0 (324, 215, 3) (68, 2)

1 (500, 333, 3) (68, 2)

2 (250, 258, 3) (68, 2)

3 (434, 290, 3) (68, 2)

class Rescale():

def __init__(self,output_size):

assert isinstance(output_size,(int,tuple)) # 必须是int或tuple类型,否则报错,要习惯用assert isinstance

self.output_size = output_size

def __call__(self,sample): # 这个类的对象是一个函数,所以定义call

image,landmarks = sample['image'],sample['landmarks']

h,w = image.shape[:2]

if isinstance(self.output_size,int): # 如果只输入了一个值,则以较短边为基准保持长宽比率不变变换

if h>w:

new_h,new_w = self.output_size*h/w,self.output_size

else:

new_h,new_w = self.output_size,self.output_size*w/h

else: #给定一个size那就直接变成这个size

new_h,new_w = output_size

new_h,new_w = int(new_h),int(new_w)

img = transform.resize(image,(new_h,new_w)) # 进行resize操作,调用的是skimage的resize,提供(h,w),跟opencv相反

landmarks = landmarks * [new_w/w,new_h/h]# 相应的,landmark也要做转换

return {"image":img,"landmarks":landmarks} # 返回dict

class RandomCrop():

def __init__(self,output_size):

assert isinstance(output_size,(int,tuple))

if isinstance(output_size,int):

self.output_size = (output_size,output_size)

else:

assert len(output_size) ==2

self.output_size = output_size

def __call__(self,sample):

image,landmarks = sample['image'],sample['landmarks']

h,w = image.shape[:2]# 原图宽高

new_h,new_w = self.output_size # 裁剪的宽高

top = np.random.randint(0,h-new_h) # 裁剪输出图像最上端

left = np.random.randint(0,w-new_w) # 最左端,保证取的时候不越界

image = image[top:top+new_h,left:left+new_w] # 随机裁剪

landmarks = landmarks - [left,top] # 这里landmarks同样也需要做变换,之所以减去[left,top]是因为存储的是x,y对应的轴是w轴和h轴

return {"image":image,"landmarks":landmarks}

class ToTensor():

def __call__(self,sample):

image,landmarks = sample['image'],sample['landmarks']

image = image.transpose((2,0,1)) # transpose实质是reshape

# skimage的shape [h,w,c]

# torch的shape [c,h,w]

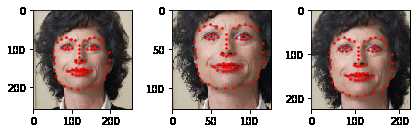

return {"image":torch.from_numpy(image),"landmarks":torch.from_numpy(landmarks)}scale = Rescale(256)

crop = RandomCrop(128)

composed = transforms.Compose([Rescale(256),RandomCrop(224)]) # 组合变换

fig = plt.figure()

sample = face_dataset[65]

for i,tsfrm in enumerate([scale,crop,composed]): # 三种变换

transformed_sample = tsfrm(sample) # 应用其中之一

ax = plt.subplot(1,3,i+1)

plt.tight_layout()

show_landmarks(**transformed_sample)

plt.show()

transformed_dataset = FaceLandmarksDataset(csv_file = 'data/faces/face_landmarks.csv',

root_dir="data/faces/",

transform=transforms.Compose(

[

Rescale(256),

RandomCrop(224),

ToTensor()

])

)

for i in range(len(transformed_dataset)):

sample = transformed_dataset[i]

print(i,sample['image'].size(),sample['landmarks'].size())

if i==3:

break

0 torch.Size([3, 224, 224]) torch.Size([68, 2])

1 torch.Size([3, 224, 224]) torch.Size([68, 2])

2 torch.Size([3, 224, 224]) torch.Size([68, 2])

3 torch.Size([3, 224, 224]) torch.Size([68, 2])

dataloader = DataLoader(transformed_dataset,batch_size=4,shuffle=True,num_workers=4) # 调用dataloader

def show_landmarks_batch(sample_batched):

images_batch,landmarks_batch = sample_batched['image'],sample_batched['landmarks']

batch_size = len(images_batch)

im_size = images_batch.size(2) #这里的size是shape

grid = utils.make_grid(images_batch) # 多张图变成一张图

plt.imshow(grid.numpy().transpose(1,2,0)) # reshape到能用plt显示

for i in range(batch_size):

# 第i张图片的所有点的x,所有点的y,后面 + i*im_size是由于所有图像水平显示,所以需要水平有个偏移

# 转numpy是因为torch类型的数据没办法scatter

plt.scatter(landmarks_batch[i,:,0].numpy() + i*im_size,

landmarks_batch[i,:,1].numpy(),

s=10,marker='.',c='r')

plt.title("batch from dataloader")

for i_batch,sample_batched in enumerate(dataloader):

if i_batch ==3:

plt.figure()

show_landmarks_batch(sample_batched)

plt.axis("off") # 关闭坐标系

plt.ioff()

plt.show()

break

<built-in method size of Tensor object at 0x7f273f3bad38>

以上是关于[pytorch] 官网教程+注释的主要内容,如果未能解决你的问题,请参考以下文章