Smooth Support Vector Machine - Python实现

Posted LOGAN_XIONG

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Smooth Support Vector Machine - Python实现相关的知识,希望对你有一定的参考价值。

- 算法特征:

①. 所有点尽可能正确分开; ②. 极大化margin; ③. 极小化非线性可分之误差. - 算法推导:

Part Ⅰ

线性可分之含义:

包含同类型所有数据点的最小凸集合彼此不存在交集.

引入光滑化手段:

plus function: \\begin{equation*}(x)_{+} = \\max \\{ x, 0 \\}\\end{equation*}

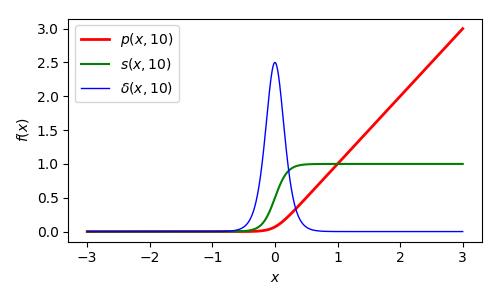

p-function: \\begin{equation*}p(x, \\beta) = \\frac{1}{\\beta}\\ln(\\mathrm{e}^{\\beta x} + 1)\\end{equation*}

可采用p-function近似plus function. 相关函数图像如下: 下面给出p-function的1阶及2阶导数:

下面给出p-function的1阶及2阶导数:

1st order (sigmoid function): \\begin{equation*} s(x, \\beta) = \\frac{1}{\\mathrm{e}^{-\\beta x} + 1} \\end{equation*}

2nd order (delta function): \\begin{equation*} \\delta (x, \\beta) = \\frac{\\beta \\mathrm{e}^{\\beta x}}{(\\mathrm{e}^{\\beta x} + 1)^2} \\end{equation*}

相关函数图像如下: Part Ⅱ

Part Ⅱ

SVM决策超平面方程如下:

\\begin{equation}\\label{eq_1}

h(x) = W^{\\mathrm{T}}x + b

\\end{equation}

其中, $W$是超平面法向量, $b$是超平面偏置参数.

本文拟采用$L_2$范数衡量非线性可分之误差, 则SVM原始优化问题如下:

\\begin{equation}

\\begin{split}

\\min &\\quad\\frac{1}{2}\\left\\|W\\right\\|_2^2 + \\frac{c}{2}\\left\\|\\xi\\right\\|_2^2 \\\\

\\mathrm{s.t.} &\\quad D(A^{\\mathrm{T}}W + \\mathbf{1}b) \\geq \\mathbf{1} - \\xi

\\end{split}\\label{eq_2}

\\end{equation}

其中, $A = [x^{(1)}, x^{(2)}, \\cdots , x^{(n)}]$, $D = diag([\\bar{y}^{(1)}, \\bar{y}^{(2)}, \\cdots, \\bar{y}^{(n)}])$, $\\xi$是由所有样本非线性可分之误差组成的列矢量.

根据上述优化问题(\\ref{eq_2}), 误差$\\xi$可采用plus function等效表示:

\\begin{equation}\\label{eq_3}

\\xi = (\\mathbf{1} - D(A^{\\mathrm{T}}W + \\mathbf{1}b))_{+}

\\end{equation}

由此可将该有约束最优化问题转化为如下无约束最优化问题:

\\begin{equation}\\label{eq_4}

\\min\\quad \\frac{1}{2}\\left\\|W\\right\\|_2^2 + \\frac{c}{2}\\left\\| \\left(\\mathbf{1} - D(A^{\\mathrm{T}}W + \\mathbf{1}b)\\right)_{+} \\right\\|_2^2

\\end{equation}

同样, 根据优化问题(\\ref{eq_2})KKT条件中稳定性条件有:

\\begin{equation}\\label{eq_5}

W = AD^{\\mathrm{T}}\\alpha

\\end{equation}

其中, $\\alpha$为对偶变量. 代入式(\\ref{eq_4})变数变换可得:

\\begin{equation}\\label{eq_6}

\\min\\quad \\frac{1}{2}\\alpha^{\\mathrm{T}}D^{\\mathrm{T}}A^{\\mathrm{T}}AD\\alpha + \\frac{c}{2}\\left\\| \\left(\\mathbf{1} - D(A^{\\mathrm{T}}AD^{\\mathrm{T}}\\alpha + \\mathbf{1}b)\\right)_{+} \\right\\|_2^2

\\end{equation}

由于权重参数$c$的存在, 上述最优化问题等效如下多目标最优化问题:

\\begin{equation}

\\left\\{

\\begin{split}

&\\min\\quad \\frac{1}{2}\\alpha^{\\mathrm{T}}D^{\\mathrm{T}}A^{\\mathrm{T}}AD\\alpha \\\\

&\\min\\quad \\frac{1}{2}\\left\\| \\left(\\mathbf{1} - D(A^{\\mathrm{T}}AD^{\\mathrm{T}}\\alpha + \\mathbf{1}b)\\right)_{+} \\right\\|_2^2

\\end{split}

\\right.

\\label{eq_7}

\\end{equation}

由于$D^TA^TAD\\succeq 0$, 有如下等效:

\\begin{equation}\\label{eq_8}

\\min\\quad \\frac{1}{2}\\alpha^{\\mathrm{T}}D^{\\mathrm{T}}A^{\\mathrm{T}}AD\\alpha \\quad\\Rightarrow\\quad \\min\\quad \\frac{1}{2}\\alpha^{\\mathrm{T}}\\alpha

\\end{equation}

根据Part Ⅰ中光滑化手段, 有如下等效:

\\begin{equation}\\label{eq_9}

\\min\\quad \\frac{1}{2}\\left\\| \\left(\\mathbf{1} - D(A^{\\mathrm{T}}AD^{\\mathrm{T}}\\alpha + \\mathbf{1}b)\\right)_{+} \\right\\|_2^2 \\quad\\Rightarrow\\quad \\min\\quad \\frac{1}{2}\\left\\| p(\\mathbf{1} - DA^{\\mathrm{T}}AD\\alpha - D\\mathbf{1}b, \\beta)\\right\\|_2^2, \\quad\\text{where $\\beta\\to\\infty$}

\\end{equation}

结合式(\\ref{eq_8})、式(\\ref{eq_9}), 优化问题(\\ref{eq_6})等效如下:

\\begin{equation}\\label{eq_10}

\\min\\quad \\frac{1}{2}\\alpha^{\\mathrm{T}}\\alpha + \\frac{c}{2}\\left\\| p(\\mathbf{1} - DA^{\\mathrm{T}}AD\\alpha - D\\mathbf{1}b, \\beta)\\right\\|_2^2, \\quad\\text{where $\\beta\\to\\infty$}

\\end{equation}

为增强模型的分类能力, 引入kernel trick, 得最终优化问题:

\\begin{equation}\\label{eq_11}

\\min\\quad \\frac{1}{2}\\alpha^{\\mathrm{T}}\\alpha + \\frac{c}{2}\\left\\| p(\\mathbf{1} - DK(A^{\\mathrm{T}}, A)D\\alpha - D\\mathbf{1}b, \\beta)\\right\\|_2^2, \\quad\\text{where $\\beta\\to\\infty$}

\\end{equation}

其中, $K$代表kernel矩阵, 内部元素$K_{ij}=k(x^{(i)}, x^{(j)})$由kernel函数决定. 常见的kernel函数如下:

①. 多项式核: $k(x^{(i)}, x^{(j)}) = ({x^{(i)}}\\cdot x^{(j)})^n$

②. 高斯核: $k(x^{(i)}, x^{(j)}) = \\mathrm{e}^{-\\mu\\| x^{(i)} - x^{(j)}\\|_2^2}$

结合式(\\ref{eq_1})、式(\\ref{eq_5})及kernel trick可得最终决策超平面方程:

\\begin{equation}

h(x) = \\alpha^{\\mathrm{T}}DK(A^{\\mathrm{T}}, x) + b

\\end{equation}

Part Ⅲ

以下给出优化相关协助信息. 拟设式(\\ref{eq_11})之目标函数符号为$J$, 并令:

\\begin{equation}

J = J_1 + J_2\\quad\\quad\\text{其中,}

\\left\\{

\\begin{split}

&J_1 = \\frac{1}{2}\\alpha^{\\mathrm{T}}\\alpha \\\\

&J_2 = \\frac{c}{2}\\left\\| p(\\mathbf{1} - DK(A^{\\mathrm{T}}, A)D\\alpha - D\\mathbf{1}b, \\beta)\\right\\|_2^2

\\end{split}

\\right.

\\end{equation}

$J_1$相关:

\\begin{gather*}

\\frac{\\partial J_1}{\\partial \\alpha} = \\alpha \\quad \\frac{\\partial J_1}{\\partial b} = 0 \\\\

\\frac{\\partial^2J_1}{\\partial\\alpha^2} = I \\quad \\frac{\\partial^2J_1}{\\partial\\alpha\\partial b} = 0 \\quad \\frac{\\partial^2J_1}{\\partial b\\partial\\alpha} = 0 \\quad \\frac{\\partial^2J_1}{\\partial b^2} = 0

\\end{gather*}

\\begin{gather*}

\\nabla J_1 = \\begin{bmatrix} \\frac{\\partial J_1}{\\partial \\alpha} \\\\ \\frac{\\partial J_1}{\\partial b}\\end{bmatrix} \\\\ \\\\

\\nabla^2 J_1 = \\begin{bmatrix}\\frac{\\partial^2J_1}{\\partial\\alpha^2} & \\frac{\\partial^2J_1}{\\partial\\alpha\\partial b} \\\\ \\frac{\\partial^2J_1}{\\partial b\\partial\\alpha} & \\frac{\\partial^2J_1}{\\partial b^2}\\end{bmatrix}

\\end{gather*}

$J_2$相关:

\\begin{gather*}

z_i = 1 - \\sum_{j}K_{ij}\\bar{y}^{(i)}\\bar{y}^{(j)}\\alpha_j - \\bar{y}^{(i)}b \\\\

\\frac{\\partial J_2}{\\partial \\alpha_k} = -c\\sum_{i}\\bar{y}^{(i)}\\bar{y}^{(k)}K_{ik}p(z_i, \\beta)s(z_i, \\beta) \\\\

\\frac{\\partial J_2}{\\partial b} = -c\\sum_{i}\\bar{y}^{(i)}p(z_i, \\beta)s(z_i, \\beta) \\\\

\\frac{\\partial^2 J_2}{\\partial \\alpha_k\\partial \\alpha_l} = c\\sum_{i}\\bar{y}^{(k)}\\bar{y}^{(l)}K_{ik}K_{il}[s^2(z_i, \\beta) + p(z_i, \\beta)\\delta(z_i, \\beta)] \\\\

\\frac{\\partial^2 J_2}{\\partial \\alpha_k\\partial b} = c\\sum_{i}\\bar{y}^{(k)}K_{ik}[s^2(z_i, \\beta) + p(z_i, \\beta)\\delta(z_i, \\beta)] \\\\

\\frac{\\partial^2 J_2}{\\partial b\\partial\\alpha_l} = c\\sum_{i}\\bar{y}^{(l)}K_{il}[s^2(z_i, \\beta) + p(z_i, \\beta)\\delta(z_i, \\beta)] \\\\

\\frac{\\partial^2 J_2}{\\partial b^2} = c\\sum_{i}[s^2(z_i, \\beta) + p(z_i, \\beta)\\delta(z_i, \\beta)]

\\end{gather*}

\\begin{gather*}

\\nabla J_2 = \\begin{bmatrix} \\frac{\\partial J_2}{\\partial\\alpha_k} \\\\ \\frac{\\partial J_2}{\\partial b} \\end{bmatrix} \\\\ \\\\

\\nabla^2 J_2 = \\begin{bmatrix} \\frac{\\partial^2 J_2}{\\partial\\alpha_k\\partial\\alpha_l} & \\frac{\\partial^2 J_2}{\\partial\\alpha_k\\partial b} \\\\ \\frac{\\partial^2 J_2}{\\partial b\\partial\\alpha_l} & \\frac{\\partial^2 J_2}{\\partial b^2} \\end{bmatrix}

\\end{gather*}

目标函数$J$之gradient及Hessian如下:

\\begin{gather}

\\nabla J = \\nabla J_1 + \\nabla J_2 \\\\

H = \\nabla^2 J = \\nabla^2 J_1 + \\nabla^2 J_2

\\end{gather}

Part Ⅳ

现以二分类为例进行算法实施:

\\begin{equation*}

\\begin{cases}

h(x) \\geq 0 & y = 1 & \\text{正例} \\\\

h(x) < 0 & y = -1 & \\text{负例}

\\end{cases}

\\end{equation*} - 代码实现:

1 # Smooth Support Vector Machine之实现 2 3 import numpy 4 from matplotlib import pyplot as plt 5 6 7 def spiral_point(val, center=(0, 0)): 8 rn = 0.4 * (105 - val) / 104 9 an = numpy.pi * (val - 1) / 25 10 11 x0 = center[0] + rn * numpy.sin(an) 12 y0 = center[1] + rn * numpy.cos(an) 13 z0 = -1 14 x1 = center[0] - rn * numpy.sin(an) 15 y1 = center[1] - rn * numpy.cos(an) 16 z1 = 1 17 18 return (x0, y0, z0), (x1, y1, z1) 19 20 21 def spiral_data(valList): 22 dataList = list(spiral_point(val) for val in valList) 23 data0 = numpy.array(list(item[0] for item in dataList)) 24 data1 = numpy.array(list(item[1] for item in dataList)) 25 return data0, data1 26 27 28 # 生成训练数据集 29 trainingValList = numpy.arange(1, 101, 1) 30 trainingData0, trainingData1 = spiral_data(trainingValList) 31 trainingSet = numpy.vstack((trainingData0, trainingData1)) 32 # 生成测试数据集 33 testValList = numpy.arange(1.5, 101.5, 1) 34 testData0, testData1 = spiral_data(testValList) 35 testSet = numpy.vstack((testData0, testData1)) 36 37 38 class SSVM(object): 39 40 def __init__(self, trainingSet, c=1, mu=1, beta=100): 41 self.__trainingSet = trainingSet # 训练集数据 42 self.__c = c # 误差项权重 43 self.__mu = mu # gaussian kernel参数 44 self.__beta = beta # 光滑化参数 45 46 self.__A, self.__D = self.__get_AD() 47 48 49 def get_cls(self, x, alpha, b): 50 A, D = self.__A, self.__D 51 mu = self.__mu 52 53 x = numpy.array(x).reshape((-1, 1)) 54 KAx = self.__get_KAx(A, x, mu) 55 clsVal = self.__calc_hVal(KAx, D, alpha, b) 56 if clsVal >= 0: 57 return 1 58 else: 59 return -1 60 61 62 def get_accuracy(self, dataSet, alpha, b): 63 \'\'\' 64 正确率计算 65 \'\'\' 66 rightCnt = 0 67 for row in dataSet: 68 clsVal = self.get_cls(row[:2], alpha, b) 69 if clsVal == row[2]: 70 rightCnt += 1 71 accuracy = rightCnt / dataSet.shape[0] 72 return accuracy 73 74 75 def optimize(self, maxIter=100, epsilon=1.e-9): 76 \'\'\' 77 maxIter: 最大迭代次数 78 epsilon: 收敛判据, 梯度趋于0则收敛 79 \'\'\' 80 A, D = self.__A, self.__D 81 c = self.__c 82 mu = self.__mu 83 beta = self.__beta 84 85 alpha, b = self.__init_alpha_b((A.shape[1], 1)) 86 KAA = self.__get_KAA(A, mu) 87 88 JVal = self.__calc_JVal(KAA, D, c, beta, alpha, b) 89 grad = self.__calc_grad(KAA, D, c, beta, alpha, b) 90 Hess = self.__calc_Hess(KAA, D, c, beta, alpha, b) 91 92 for i in range(maxIter): 93 print("iterCnt: {:3d}, JVal: {}".format(i, JVal)) 94 if self.__converged1(grad, epsilon): 95 return alpha, b, True 96 97 dCurr = -numpy.matmul(numpy.linalg.inv(Hess), grad) 98 ALPHA = self.__calc_ALPHA_by_ArmijoRule(alpha, b, JVal, grad, dCurr, KAA, D, c, beta) 99 100 delta = ALPHA * dCurr 101 alphaNew = alpha + delta[:-1, :] 102 bNew = b + delta[-1, -1] 103 JValNew = self.__calc_JVal(KAA, D, c, beta, alphaNew, bNew) 104 if self.__converged2(delta, JValNew - JVal, epsilon ** 2): 105 return alphaNew, bNew, True 106 107 alpha, b, JVal = alphaNew, bNew, JValNew 108 grad = self.__calc_grad(KAA, D, c, beta, alpha, b) 109 Hess = self.__calc_Hess(KAA, D, c, beta, alpha, b) 110 else: 111 if self.__converged1(grad, epsilon): 112 return alpha, b, True 113 return alpha, b, False 114 115 116 def __converged2(self, delta, JValDelta, epsilon): 117 val1 = numpy.linalg.norm(delta, ord=numpy.inf) 118 val2 = numpy.abs(JValDelta) 119 if val1 <= epsilon or val2 <= epsilon: 120 return True 121 return False 122 123 124 def __calc_ALPHA_by_ArmijoRule(self, alphaCurr, bCurr, JCurr, gCurr, dCurr, KAA, D, c, beta, C=1.e-4, v=0.5): 125 i = 0 126 ALPHA = v ** i 127 delta = ALPHA * dCurr 128 alphaNext = alphaCurr + delta[:-1, :] 129 bNext = bCurr + delta[-1, -1] 130 JNext = self.__calc_JVal(KAA, D, c, beta, alphaNext, bNext) 131 while True: 132 if JNext <= JCurr + C * ALPHA * numpy.matmul(dCurr.T, gCurr)[0, 0]: break 133 i += 1 134 ALPHA = v ** i 135 delta = ALPHA * dCurr 136 alphaNext = alphaCurr + delta[:-1, :] 137 bNext = bCurr + delta[-1, -1] 138 JNext = self.__calc_JVal(KAA, D, c, beta, alphaNext, bNext) 139 return ALPHA 140 141 142 def __converged1(self, grad, epsilon): 143 if numpy.linalg.norm(grad, ord=numpy.inf) <= epsilon: 144 return True 145 return False 146 147 148 def __p(self, x, beta): 149 term = x * beta 150 if term > 10: 151 val = x + numpy.math.log(1 + numpy.math.exp(-term)) / beta 152 else: 153 val = numpy.math.log(numpy.math.exp(term) + 1) / beta 154 return val 155 156 157 def __s(self, x, beta): 158 term = x * beta 159 if term > 10: 160 val = 1 / (numpy.math.exp(-beta * x) + 1) 161 else: 162 term1 = numpy.math.exp(term) 163 val = term1 / (1 + term1) 164 return val 165 166 167 def __d(self, x, beta): 168 term = x * beta 169 if term > 10: 170 term1 = numpy.math.exp(-term) 171 val = beta * term1 / (1 + term1) ** 2 172 else: 173 term1 = numpy.math.exp(term) 174 val = beta * term1 / (term1 + 1) ** 2 175 return val 176 177 178 def __calc_Hess(self, KAA, D, c, beta, alpha, b): 179 Hess_J1 = self.__calc_Hess_J1(alpha) 180 Hess_J2 = self.__calc_Hess_J2(KAA, D, c, beta, alpha, b) 181 Hess = Hess_J1 + Hess_J2 182 return Hess 183 184 185 def __calc_Hess_J2(self, KAA, D, c, beta, alpha, b): 186 Hess_J2 = numpy.zeros((KAA.shape[0] + 1, KAA.shape[0] + 1)) 187 Y = numpy.matmul(D, numpy.ones((D.shape[0], 1))) 188 YY = numpy.matmul(Y, Y.T) 189 KAAYY = KAA * YY 190 191 z = 1 - numpy.matmul(KAAYY, alpha) - Y * b 192 p = numpy.array(list(self.__p(z[i, 0], beta) for i in range(z.shape[0]))).reshape((-1, 1)) 193 s = numpy.array(list(self.__s(z[i, 0], beta) for i in range(z.shape[0]))).reshape((-1, 1)) 194 d = numpy.array(list(self.__d(z[i, 0], beta) for i in range(z.shape[0]))).reshape((-1, 1)) 195 term = s * s + p * d 196 197 for k in range(Hess_J2.shape[0] - 1): 198 for l in range(k + 1): 199 val = c * Y[k, 0] * Y[l, 0] * numpy.sum(KAA[:, k:k+1] * KAA[:, l:l+1] * term) 200 Hess_J2[k, l] = Hess_J2[l, k] = val 201 val = c * Y[k, 0] * numpy.sum(KAA[:, k:k+1] * term) 202 Hess_J2[k, -1] = Hess_J2[-1, k] = val 203 val = c * numpy.sum(term) 204 Hess_J2[-1, -1] = val 205 return Hess_J2 206 207 208 def __calc_Hess_J1(self, alpha): 209 I = numpy.identity(alpha.shape[0]) 210 term = numpy.hstack((I, numpy.zeros((I.shape[0], 1)))) 211 Hess_J1 = numpy.vstack((term, numpy.zeros((1, term.shape[1])))) 212 return Hess_J1 213 214 215 def __calc_grad(self, KAA, D, c, beta, alpha, b): 216 grad_J1 = self.__calc_grad_J1(alpha) 217 grad_J2 = self.__calc_grad_J2(KAA, D, c, beta, alpha, b) 218 grad = grad_J1 + grad_J2 219 return grad 220 221 222 def __calc_grad_J2(self, KAA, D, c, beta, alpha, b): 223 grad_J2 = numpy.zeros((KAA.shape[0] + 1, 1)) 224 Y = numpy.matmul(D, numpy.ones((D.shape[0], 1))) 225 YY = numpy.matmul(Y, Y.T) 226 KAAYY = KAA * YY 227 228 z = 1 - numpy.matmul(KAAYY, alpha) - Y * b 229 p = numpy.array(list(self.__p(z[i, 0], beta) for i in range(z.shape[0]))).reshape((-1, 1)) 230 s = numpy.array(list(self.__s(z[i, 0], beta) for i in range(z.shape[0]))).reshape((-1, 1)) 231 term = p * s 232 233 for k in range(grad_J2.shape[0] - 1): 234 val = -c * Y[k, 0] * numpy.sum(Y * KAA[:, k:k+1] * term) 235 grad_J2[k, 0] = val 236 grad_J2[-1, 0] = -c * numpy.sum(Y * term) 237 return grad_J2 238 239 240 def __calc_grad_J1(self, alpha): 241 grad_J1 = numpy.vstack((alpha, [[0]])) 242 return grad_J1 243 244 245 def __calc_JVal(self, KAA, D, c, beta, alpha, b): 246 J1 = self.__calc_J1(alpha) 247 J2 = self.__calc_J2(KAA, D, c, beta, alpha, b) 248 JVal = J1 + J2 249 return JVal 250 251 252 def __calc_J2(self, KAA, D, c, beta, alpha, b): 253 tmpOne = numpy.ones((KAA.shape[0], 1)) 254 x = tmpOne - numpy.matmul(numpy.matmul(numpy.matmul(D, KAA), D), alpha) - numpy.matmul(D, tmpOne) * b 255 p = numpy.array(list(self.__p(x[i, 0], beta) for i in range(x.shape[0]))) 256 J2 = numpy.sum(p * p) * c / 2 257 return J2 258 259 260 def __calc_J1(self, alpha): 261 J1 = numpy.sum(alpha * alpha) / 2 262 return J1 263 264 265 def __get_KAA(self, A, mu): 266 KAA = numpy.zeros((A.shape[1], A.shape[1])) 267 for rowIdx in range(KAA.shape[0]): 268 for colIdx in range(rowIdx + 1): 269 x1 = A[:, rowIdx:rowIdx+1] 270 x2 = A[:, colIdx:colIdx+1] 271 val = self.__calc_gaussian(x1, x2, mu) 272 KAA[rowIdx, colIdx] = KAA[colIdx, rowIdx] = val 273 return KAA 274 275 276 def __init_alpha_b(self, shape): 277 \'\'\' 278 alpha, b之初始化 279 \'\'\' 280 alpha, b = numpy.zeros(shape), 0 281 return alpha, b 282 283 284 def __get_KAx(self, A, x, mu): 285 KAx = numpy.zeros((A.shape[1], 1)) 286 for rowIdx in range(KAx.shape[0]): 287 x1 = A[:, rowIdx:rowIdx+1] 288 val = self.__calc_gaussian(x1, x, mu) 289 KAx[rowIdx, 0] = val 290 return KAx 291 292 293 def __calc_hVal(self, KAx, D, alpha, b): 294 hVal = numpy.matmul(numpy.matmul(alpha.T, D), KAx)[0, 0] + b 295 return hVal 296 297 298 def __calc_gaussian(self, x1, x2, mu): 299 val = numpy.math.exp(-mu * numpy.linalg.norm(x1 - x2) ** 2) 300 # val = numpy.sum(x1 * x2) 301 return val 302 303 304 def __get_AD(self): 305 A = self.__trainingSet[:, :2].T 306 D = numpy.diag(self.__trainingSet[:, 2]) 307 return A, D 308 309 310 class SpiralPlot(object): 311 312 def spiral_data_plot(self, trainingData0, trainingData1, testData0, testData1): 313 fig = plt.figure(figsize=(5, 5)) 314 ax1 = plt.subplot() 315 ax1.scatter(trainingData1[:, 0], trainingData1[:, 1], c="red", marker="o", s=10, label="training data - Positive") 316 ax1.scatter(trainingData0[:, 0], trainingData0[:, 1], c="blue", marker="o", s=10, label="training data - Negative") 317 ax1.scatter(testData1[:, 0], testData1[:, 1], c="red", marker="x", s=10, label="test data - Positive") 318 ax1.scatter(testData0[:, 0], testData0[:, 1], c="blue", marker="x", s=10, label="test data - Negative") 319 ax1.set(xlim=(-0.5, 0.5), ylim=(-0.5, 0.5), xlabel="$x_1$", ylabel="$x_2$") 320 plt.legend(fontsize="x-small") 321 fig.tight_layout() 322 fig.savefig("spiral.png", dpi=100) 323 plt.close() 324 325 326 def spiral_pred_plot(self, trainingData0, trainingData1, testData0, testData1, ssvmObj, alpha, b): 327 x = numpy.linspace(-0.5, 0.5, 500) 328 y = numpy.linspace(-0.5, 0.5, 500) 329 x, y = numpy.meshgrid(x, y) 330 z = numpy.zeros(shape=x.shape) 331 for rowIdx in range(x.shape[0]): 332 for colIdx in range(x.shape[1]): 333 z[rowIdx, colIdx] = ssvmObj.get_cls((x[rowIdx, colIdx], y[rowIdx, colIdx]), alpha, b) 334 cls2color = {-1: "blue", 0: "white", 1: "red"} 335 336 fig = plt.figure(figsize=(5, 5)) 337 ax1 = plt.subplot() 338 ax1.contourf(x, y, z, levels=[-1.5, -0.5, 0.5, 1.5], colors=["blue", "white", "red"], alpha=0.3) 339 ax1.scatter(trainingData1[:, 0], trainingData1[:, 1], c="red", marker="o", s=10, label="training data - Positive") 340 ax1.scatter(trainingData0[:, 0], trainingData0[:, 1], c="blue", marker="o", s=10, label="training data - Negative") 341 ax1.scatter(testData1[:, 0], testData1[:, 1], c="red", marker="x", s=10, label="test data - Positive") 342 ax1.scatter(testData0[:, 0], testData0[:, 1], c="blue", marker="x", s=10, label="test data - Negative") 343 ax1.set(xlim=(-0.5, 0.5), ylim=(-0.5, 0.5), xlabel="$x_1$", ylabel=support-vector-machines-svm

支持向量机(support vector machines, SVM)

机器学习技法:06 Support Vector Regression