Python crawlers: using Scrapy's item pipeline

Posted Charles.L


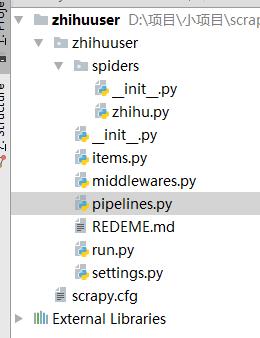

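The item pipeline is one of Scrapy's most important components. Its main job is to take the items a spider returns and write them to persistent storage such as a database or a file. Below is a brief look at how pipelines are used.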
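Since files are mentioned as a storage target, here is a minimal sketch of a file-based pipeline, assuming one JSON object per line is an acceptable output format. The class name `JsonLinesPipeline` and the default path `items.jl` are made up for illustration and do not come from the project in this post.

```python
import json


class JsonLinesPipeline:
    """Hypothetical pipeline that writes each item as one line of JSON."""

    def __init__(self, path="items.jl"):
        # `path` is an assumed default, not a name taken from the article.
        self.path = path

    def open_spider(self, spider):
        # Called once when the spider starts: open the output file.
        self.file = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        # Called once per item: serialize it as a single JSON line.
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        # Return the item so that any later pipeline still receives it.
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes: release the file handle.
        self.file.close()
```

The pipeline is a plain class; Scrapy only looks for these method names, so nothing has to be inherited from the framework.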

Example 1:

The Item definition

    import scrapy


    class ZhihuuserItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        id = scrapy.Field()
        name = scrapy.Field()
        avatar_url = scrapy.Field()
        headline = scrapy.Field()
        description = scrapy.Field()
        url = scrapy.Field()
        url_token = scrapy.Field()
        gender = scrapy.Field()
        cover_url = scrapy.Field()
        type = scrapy.Field()
        badge = scrapy.Field()
        answer_count = scrapy.Field()
        articles_count = scrapy.Field()
        commercial_question = scrapy.Field()
        favorite_count = scrapy.Field()
        favorited_count = scrapy.Field()
        follower_count = scrapy.Field()
        following_columns_count = scrapy.Field()
        following_count = scrapy.Field()
        pins_count = scrapy.Field()
        question_count = scrapy.Field()
        thank_from_count = scrapy.Field()
        thank_to_count = scrapy.Field()
        thanked_count = scrapy.Field()
        vote_from_count = scrapy.Field()
        vote_to_count = scrapy.Field()
        voteup_count = scrapy.Field()
        following_favlists_count = scrapy.Field()
        following_question_count = scrapy.Field()
        following_topic_count = scrapy.Field()
        marked_answers_count = scrapy.Field()
        mutual_followees_count = scrapy.Field()
        participated_live_count = scrapy.Field()
        locations = scrapy.Field()
        educations = scrapy.Field()
        employments = scrapy.Field()

Basic settings for writing to MongoDB

    # MongoDB connection settings
    MONGO_URL = '172.16.5.239'
    MONGO_PORT = 27017
    MONGO_DB = 'zhihuuser'

    # When set to False, Scrapy does not read the disallow list in each
    # site's root directory (for example www.baidu.com/robots.txt),
    # so nothing is excluded from the crawl.
    ROBOTSTXT_OBEY = False

    # Enable the pipeline that performs the write
    ITEM_PIPELINES = {
        'zhihuuser.pipelines.MongoDBPipeline': 300,
    }
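The number next to each entry in `ITEM_PIPELINES` decides the order in which pipelines run: lower values go first, and values are conventionally kept in the 0 to 1000 range. The sketch below only illustrates that ordering with plain Python. `CleanupPipeline` is a hypothetical second pipeline that does not exist in this project.

```python
# Hypothetical settings with two pipelines. Only MongoDBPipeline comes
# from the article; CleanupPipeline is an assumed name for illustration.
ITEM_PIPELINES = {
    "zhihuuser.pipelines.MongoDBPipeline": 300,
    "zhihuuser.pipelines.CleanupPipeline": 100,
}

# Pipelines run in ascending order of their value, so sorting by value
# reproduces the order in which each item would pass through them.
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)

for path in run_order:
    print(path)
```

With these values an item would reach the cleanup step before it is written to MongoDB, which is usually what you want when a later pipeline stores the result.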

pipelines.py:

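1. First, read the database address, port, and database name from the settings file (the database is created automatically if it does not exist).

2. With that information in hand, connect to the database.

3. Write the data to the database.

4. Close the database connection.

Note: opening and closing the connection each happen only once per spider run, while the write runs once for every item that is processed.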
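The call order described in the steps above can be simulated without Scrapy or MongoDB. This is only a sketch of the sequence, not Scrapy's actual engine code: `FakeSettings`, `FakeCrawler`, `RecordingPipeline`, and `run` are all hypothetical helpers written for this illustration.

```python
class FakeSettings:
    """Stand-in for crawler.settings that supports only get()."""

    def __init__(self, values):
        self._values = values

    def get(self, key, default=None):
        return self._values.get(key, default)


class FakeCrawler:
    """Stand-in for the crawler object passed to from_crawler."""

    def __init__(self, values):
        self.settings = FakeSettings(values)


class RecordingPipeline:
    """Records each hook call instead of talking to a database."""

    def __init__(self, db_name):
        self.db_name = db_name
        self.calls = []

    @classmethod
    def from_crawler(cls, crawler):
        # Step 1: read configuration from the settings.
        return cls(db_name=crawler.settings.get("MONGO_DB"))

    def open_spider(self, spider):
        # Step 2: runs once, where a real pipeline would connect.
        self.calls.append("open_spider")

    def process_item(self, item, spider):
        # Step 3: runs once per item, where a real pipeline would write.
        self.calls.append("process_item")
        return item

    def close_spider(self, spider):
        # Step 4: runs once, where a real pipeline would disconnect.
        self.calls.append("close_spider")


def run(pipeline_cls, settings, items):
    """Drive the hooks in the order the four steps describe."""
    pipeline = pipeline_cls.from_crawler(FakeCrawler(settings))
    pipeline.open_spider(spider=None)
    for item in items:
        pipeline.process_item(item, spider=None)
    pipeline.close_spider(spider=None)
    return pipeline


pipeline = run(
    RecordingPipeline,
    {"MONGO_DB": "zhihuuser"},
    [{"url_token": "a"}, {"url_token": "b"}, {"url_token": "c"}],
)
print(pipeline.calls)
```

Three items produce three `process_item` entries between a single `open_spider` and a single `close_spider`, which matches the note above.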

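    import pymongo


    class MongoDBPipeline(object):
        """
        Write scraped items to MongoDB.
        """

        def __init__(self, mongourl, mongoport, mongodb):
            """
            Store the MongoDB address, port, and database name.

            :param mongourl: MongoDB host address
            :param mongoport: MongoDB port
            :param mongodb: database name
            """
            self.mongourl = mongourl
            self.mongoport = mongoport
            self.mongodb = mongodb

        @classmethod
        def from_crawler(cls, crawler):
            """
            Read the MongoDB address, port, and database name from settings.

            :param crawler: the running crawler, which exposes the settings
            :return: a pipeline instance built from those settings
            """
            return cls(
                mongourl=crawler.settings.get("MONGO_URL"),
                mongoport=crawler.settings.get("MONGO_PORT"),
                mongodb=crawler.settings.get("MONGO_DB"),
            )

        def open_spider(self, spider):
            """
            Connect to MongoDB. Called once when the spider opens.

            :param spider: the spider being opened
            """
            self.client = pymongo.MongoClient(self.mongourl, self.mongoport)
            self.db = self.client[self.mongodb]

        def process_item(self, item, spider):
            """
            Write the item to the database. Called once per item.

            :param item: the scraped item
            :param spider: the spider that produced the item
            :return: the item, so that later pipelines can process it
            """
            name = item.__class__.__name__
            # Alternative: insert into a collection named after the item class.
            # self.db[name].insert_one(dict(item))
            # Upsert keyed on url_token, so a user crawled twice is updated
            # rather than duplicated. update_one replaces Collection.update,
            # which was removed in PyMongo 4.
            self.db['user'].update_one(
                {'url_token': item['url_token']},
                {'$set': dict(item)},
                upsert=True,
            )
            return item

        def close_spider(self, spider):
            """
            Close the database connection. Called once when the spider closes.

            :param spider: the spider being closed
            """
            self.client.close()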
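The write in `process_item` is an upsert keyed on `url_token`: if a document with that token already exists it is updated, otherwise a new one is inserted. The sketch below imitates that behavior with an in-memory list so it runs without MongoDB. `InMemoryCollection` is a hypothetical stand-in whose `update_one` is loosely modeled on PyMongo's method of the same name and handles only `$set`. The sample users are made up.

```python
class InMemoryCollection:
    """Tiny stand-in for a MongoDB collection; handles only $set upserts."""

    def __init__(self):
        self.docs = []

    def update_one(self, filter, update, upsert=False):
        # Update the first document whose fields all match the filter.
        for doc in self.docs:
            if all(doc.get(key) == value for key, value in filter.items()):
                doc.update(update["$set"])
                return
        if upsert:
            # No match: insert the filter fields merged with the $set fields.
            self.docs.append({**filter, **update["$set"]})


users = InMemoryCollection()

# Made-up items; the third one is the first user crawled a second time.
items = [
    {"url_token": "alice", "follower_count": 10},
    {"url_token": "bob", "follower_count": 3},
    {"url_token": "alice", "follower_count": 12},
]

for item in items:
    users.update_one({"url_token": item["url_token"]}, {"$set": item}, upsert=True)

print(len(users.docs))
```

A plain insert would have stored three documents here. With the upsert, crawling the same user again refreshes the existing record, which matters for a crawler that may reach one profile through several follower lists.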
