Study notes, "Getting Started with PyTorch": the complete model training routine (CIFAR10 model)

Posted by 我是NGL


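Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers the study notes "Getting Started with PyTorch": the complete model training routine (CIFAR10 model). We hope it is useful to you.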


Prepare the datasets (training and test)

- Training dataset

train_data = torchvision.datasets.CIFAR10("dataset2", train=True, transform=torchvision.transforms.ToTensor(),download=True) # class:CIFAR10

- Test dataset

test_data = torchvision.datasets.CIFAR10("dataset2", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)
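To see what `transforms.ToTensor()` gives the network, here is a minimal sketch. It does not download anything. Instead it fakes one raw CIFAR10-style image (32×32 RGB, `uint8`, height-width-channel layout) with random pixels and applies roughly the same conversion by hand. The random image is an assumption for illustration. The real transform is applied to the PIL images stored in the dataset.

```python
import torch

torch.manual_seed(0)

# Hypothetical stand-in for one raw CIFAR10 image: 32x32 pixels, 3 channels, uint8, HWC layout
raw = torch.randint(0, 256, (32, 32, 3), dtype=torch.uint8)

# Roughly what torchvision.transforms.ToTensor() does for such an image:
# move channels first (HWC -> CHW) and scale integers in [0, 255] to floats in [0.0, 1.0]
img = raw.permute(2, 0, 1).float() / 255.0

print(img.shape, img.dtype)  # torch.Size([3, 32, 32]) torch.float32
```

On the real dataset, `train_data[0]` returns a pair `(img, label)`. Here `img` has this `[3, 32, 32]` shape and `label` is an integer class index from 0 to 9.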

Check the dataset sizes with len()

train_data_size = len(train_data)# 50000

test_data_size = len(test_data)#10000

Load the datasets with DataLoader()

train_dataloader = DataLoader(train_data, batch_size=64)  # len(train_dataloader) == 782 batches

test_dataloader = DataLoader(test_data, batch_size=64)
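The 782 is the number of batches per epoch. With the default `drop_last=False`, a `DataLoader` yields ⌈N / batch_size⌉ batches, and the last one may be smaller. The sketch below checks that arithmetic for both splits. The helper `num_batches` is hypothetical and not part of PyTorch.

```python
import math


def num_batches(n_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Batches per pass over a map-style dataset, mirroring DataLoader's len()."""
    if drop_last:
        return n_samples // batch_size
    return math.ceil(n_samples / batch_size)


train_batches = num_batches(50000, 64)  # 782
test_batches = num_batches(10000, 64)   # 157

# With drop_last=False the final batch holds whatever is left over
last_train_batch = 50000 - (train_batches - 1) * 64  # 16 images
last_test_batch = 10000 - (test_batches - 1) * 64    # 16 images

print(train_batches, test_batches, last_train_batch, last_test_batch)  # 782 157 16 16
```

Both loaders here are built without `shuffle=True`, so the training images arrive in the same order every epoch. Passing `shuffle=True` for the training loader is a common choice.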

Build the neural network

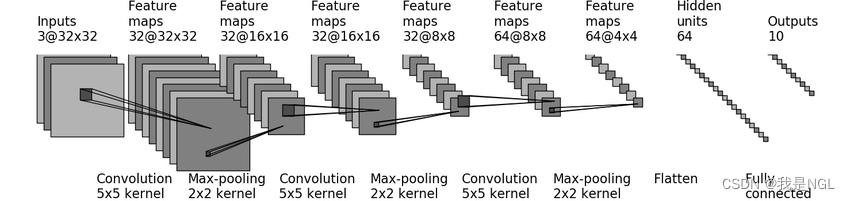

First look at the CIFAR10 model structure, then build the network to match it.

- Option 1: import it from an external file, for example my_first_model.py:

from my_first_model import *

# my_first_model.py
import torch
from torch import nn
from torch.nn import Sequential


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.model1 = Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64*4*4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


if __name__ == '__main__':
    # Quick shape check with a dummy batch of 64 images
    net = Net()
    input = torch.ones(64, 3, 32, 32)
    output = net(input)
    print(output.shape)  # torch.Size([64, 10])

# Back in the training script
net = Net()

- Option 2: define the class directly inside the training script. That works too.
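The `64*4*4` input size of the first `Linear` layer follows from the output-size formula out = ⌊(in + 2·padding − kernel) / stride⌋ + 1. Each `Conv2d(in, out, 5, 1, 2)` (kernel 5, stride 1, padding 2) keeps the spatial size. Each `MaxPool2d(2)` halves it, because its stride defaults to the kernel size. The size therefore goes 32 → 16 → 8 → 4. The sketch below walks through the three conv/pool pairs. The helper `out_size` is hypothetical.

```python
def out_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of Conv2d / MaxPool2d along one dimension (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


size, channels = 32, 3  # CIFAR10 input: 3 x 32 x 32
for out_channels in (32, 32, 64):  # the three Conv2d layers in Net
    size = out_size(size, kernel=5, stride=1, padding=2)  # Conv2d(in, out, 5, 1, 2): size unchanged
    channels = out_channels
    size = out_size(size, kernel=2, stride=2)  # MaxPool2d(2): size halved
    print(channels, size)

flat_features = channels * size * size
print(flat_features)  # 1024 == 64*4*4
```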

Create the loss function. This is a classification problem, so use cross-entropy.

loss_fn = nn.CrossEntropyLoss()
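`nn.CrossEntropyLoss` expects raw, unnormalized scores (logits) of shape `[batch, classes]` and integer class indices as targets. It applies log-softmax internally and, by default, averages the negative log-likelihood over the batch. This is why `Net` ends in a plain `Linear(64, 10)` with no softmax. A minimal check of that equivalence on made-up logits:

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
logits = torch.randn(4, 10)           # made-up raw scores: batch of 4 images, 10 classes
targets = torch.tensor([3, 0, 9, 1])  # class indices, like CIFAR10 labels

loss_fn = nn.CrossEntropyLoss()
loss = loss_fn(logits, targets)

# Same value by hand: mean over the batch of -log(softmax(logits))[target]
manual = -F.log_softmax(logits, dim=1)[torch.arange(4), targets].mean()

print(torch.allclose(loss, manual))  # True
```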

Create the optimizer

learning_rate = 0.01

optimizer = torch.optim.SGD(net.parameters(), lr=learning_rate)
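With no momentum or weight decay, `torch.optim.SGD` updates every parameter as w ← w − lr · ∂loss/∂w. The tiny sketch below confirms that one `step()` does exactly that. It uses a hypothetical one-layer model, not the CIFAR10 `Net`.

```python
import torch
from torch import nn

torch.manual_seed(0)
layer = nn.Linear(3, 1)  # hypothetical toy model
learning_rate = 0.01
optimizer = torch.optim.SGD(layer.parameters(), lr=learning_rate)

x = torch.randn(5, 3)
loss = layer(x).pow(2).mean()  # arbitrary scalar loss for illustration

optimizer.zero_grad()
loss.backward()
w_before = layer.weight.detach().clone()
grad = layer.weight.grad.detach().clone()
optimizer.step()

# Plain SGD: new weight = old weight - lr * gradient
expected = w_before - learning_rate * grad
print(torch.allclose(layer.weight.detach(), expected))  # True
```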

Set some parameters for training the network

- Number of training steps so far:

total_train_step = 0

- Number of test rounds so far:

total_test_step = 0

- Number of training epochs:

epoch = 10

- Add TensorBoard to plot the curves:

writer = SummaryWriter("train_cifar10_logs")
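`total_train_step` is never reset between epochs, so it counts batches across the whole run. The `% 100` check in the training loop therefore logs the training loss at global steps 100, 200, 300, and so on. The pure-Python simulation below tracks both counters for `epoch = 10`, assuming the 782 batches per epoch that follow from the dataset sizes.

```python
import math

steps_per_epoch = math.ceil(50000 / 64)  # 782 training batches per epoch
epoch = 10

total_train_step = 0
total_test_step = 0
logged_steps = []  # global steps at which train_loss would be written

for i in range(epoch):
    for _ in range(steps_per_epoch):
        total_train_step += 1
        if total_train_step % 100 == 0:
            logged_steps.append(total_train_step)
    total_test_step += 1  # one test pass per epoch

print(total_train_step, total_test_step, len(logged_steps))  # 7820 10 78
print(logged_steps[:3], logged_steps[-1])                     # [100, 200, 300] 7800
```

In TensorBoard, the `train_loss` curve gets 78 points over the 10 epochs. `test_loss` and `test_accuracy` get one point per epoch, at x-axis values 0 to 9.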

Record the start time

start_time = time.time()

Enter the training loop

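- Outer loop: one pass per epoch

for i in range(epoch):

- Inner loop: in each epoch, iterate over the whole train_dataloader

    net.train()  # training mode (only matters for layers such as Dropout/BatchNorm)
    # Training step begins
    for data in train_dataloader:
        imgs, targets = data  # each data is a list: [imgs, targets]
        outputs = net(imgs)
        # Loss
        loss = loss_fn(outputs, targets)
        # Optimize the model
        optimizer.zero_grad()  # first clear the gradients left over from the previous step
        loss.backward()        # backpropagation: compute the gradients
        optimizer.step()       # Performs a single optimization step (parameter update)
        # One step done: it trained on one batch of 64 images, because batch_size = 64
        # (the last batch of each epoch has only 16)
        total_train_step += 1
        # Print progress every 100 steps
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(f"Training time: {end_time - start_time}")
            print(f"Training step: {total_train_step}, Loss: {loss.item()}")
            writer.add_scalar("train_loss", loss.item(), total_train_step)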
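The order inside the inner loop matters. `zero_grad()` clears gradients, which PyTorch would otherwise accumulate across steps. `backward()` fills `.grad` for every parameter, and `step()` applies the update. Below is a self-contained sketch of the same loop on hypothetical toy data, so it runs in seconds without CIFAR10. Each sample is a random 8-feature vector whose label is the index of the largest of its first three features, and a single `Linear` layer stands in for `Net`.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical toy data standing in for CIFAR10: 256 samples, 8 features, 3 classes
X = torch.randn(256, 8)
y = X[:, :3].argmax(dim=1)  # a label a linear model can learn
train_dataloader = DataLoader(TensorDataset(X, y), batch_size=64)

net = nn.Linear(8, 3)  # stand-in for Net
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)


def full_loss() -> float:
    """Loss over all toy samples, without tracking gradients."""
    with torch.no_grad():
        return loss_fn(net(X), y).item()


initial_loss = full_loss()
total_train_step = 0
for i in range(20):
    net.train()
    for imgs, targets in train_dataloader:
        outputs = net(imgs)
        loss = loss_fn(outputs, targets)
        optimizer.zero_grad()  # drop gradients from the previous step
        loss.backward()        # compute gradients of the loss w.r.t. every parameter
        optimizer.step()       # update the parameters in place
        total_train_step += 1
final_loss = full_loss()

print(total_train_step)  # 80 (4 batches x 20 epochs)
print(initial_loss, final_loss)
```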

Next comes the test step (still inside the epoch loop):
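The test step starts with `net.eval()`. `net.eval()` and `net.train()` only affect layers whose behavior depends on the mode, such as `Dropout` and `BatchNorm`. `Net` contains neither, so for this model the call has no numerical effect. It is still a good habit, and training code usually switches back with `net.train()` before the next epoch's training loop. The sketch below shows the difference on a standalone `nn.Dropout` layer, which is not part of `Net`.

```python
import torch
from torch import nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()  # training mode: randomly zero elements, scale the rest by 1 / (1 - p) = 2
y_train = drop(x)

drop.eval()   # evaluation mode: Dropout passes the input through unchanged
y_eval = drop(x)

print(sorted(set(y_train.tolist())))  # [0.0, 2.0]
print(torch.equal(y_eval, x))         # True
```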

    net.eval()  # switch to evaluation mode
    total_test_loss = 0  # total loss over the test set
    total_accuracy = 0  # number of correct predictions
    with torch.no_grad():  # disable gradient computation
        for data in test_dataloader:  # iterate over the test DataLoader
            imgs, targets = data  # get the images and targets
            outputs = net(imgs)  # forward pass through the network
            loss = loss_fn(outputs, targets)  # loss
            total_test_loss += loss.item()
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy += accuracy  # number of predictions that match the targets
    print(f"Loss on the whole test set: {total_test_loss}")
    print(f"Accuracy on the whole test set: {total_accuracy / test_data_size}")
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step += 1
    torch.save(net, f"net_{i}")  # save the model after each epoch
    print("Model saved")

writer.close()  # close the writer
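`outputs.argmax(1)` takes, for each image, the class with the highest score along dimension 1 (the class scores). Comparing the result with `targets` gives a boolean tensor, and its `sum()` is the number of correct predictions in the batch. Note also that `total_test_loss` adds up per-batch mean losses, so it grows with the number of batches (157 here). Dividing it by `len(test_dataloader)` would give the average loss per batch. A small worked example with made-up scores for 4 images and 3 classes:

```python
import torch
from torch import nn

outputs = torch.tensor([[0.1, 2.0, -1.0],
                        [1.5, 0.2, 0.3],
                        [0.0, 0.1, 3.0],
                        [2.0, 1.0, 0.5]])  # made-up scores: 4 images x 3 classes
targets = torch.tensor([1, 0, 1, 1])

preds = outputs.argmax(1)                  # tensor([1, 0, 2, 0])
correct = (preds == targets).sum().item()  # 2 correct predictions
accuracy = correct / len(targets)          # 0.5

# Inside torch.no_grad(), model outputs do not track gradients
net = nn.Linear(3, 3)
with torch.no_grad():
    out = net(torch.randn(2, 3))

print(preds.tolist(), correct, accuracy, out.requires_grad)  # [1, 0, 2, 0] 2 0.5 False
```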

Full code:

# my_first_model.py
# @TIME: 2023/1/25 20:01
# @AUTHOR: 我是NGL
import torch
from torch import nn
from torch.nn import Sequential


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.model1 = Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64*4*4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


if __name__ == '__main__':
    # Quick shape check with a dummy batch of 64 images
    net = Net()
    input = torch.ones(64, 3, 32, 32)
    output = net(input)
    print(output.shape)  # torch.Size([64, 10])
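As a sanity check on the architecture, you can count `Net`'s trainable parameters. A `Conv2d` has in·out·k·k weights plus out biases, and a `Linear` has in·out weights plus out biases. The sketch below repeats the `Net` definition so it runs on its own, and compares the `numel()` total with the hand calculation.

```python
import torch
from torch import nn


class Net(nn.Module):
    # Same layers as Net in my_first_model.py
    def __init__(self):
        super().__init__()
        self.model1 = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2), nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2), nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2), nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        return self.model1(x)


net = Net()
counted = sum(p.numel() for p in net.parameters() if p.requires_grad)

by_hand = (
    (3 * 32 * 5 * 5 + 32)      # conv1: 2432
    + (32 * 32 * 5 * 5 + 32)   # conv2: 25632
    + (32 * 64 * 5 * 5 + 64)   # conv3: 51264
    + (64 * 4 * 4 * 64 + 64)   # linear1: 65600
    + (64 * 10 + 10)           # linear2: 650
)
output_shape = net(torch.ones(64, 3, 32, 32)).shape

print(counted, by_hand)  # 145578 145578
print(output_shape)      # torch.Size([64, 10])
```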

# Training script (imports Net from my_first_model.py)
# @TIME: 2023/1/25 19:31
# @AUTHOR: 我是NGL
import time

import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

from my_first_model import *  # provides Net

# Prepare the datasets (training and test)
train_data = torchvision.datasets.CIFAR10("dataset2", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("dataset2", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset lengths
train_data_size = len(train_data)
test_data_size = len(test_data)
print(f"Training set size: {train_data_size}")
print(f"Test set size: {test_data_size}")

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Create the network model
net = Net()

# Loss function: cross-entropy for a classification problem
loss_fn = nn.CrossEntropyLoss()

# Optimizer
learning_rate = 0.01
optimizer = torch.optim.SGD(net.parameters(), lr=learning_rate)

# Some training parameters
# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of training epochs
epoch = 10

# TensorBoard
writer = SummaryWriter("train_cifar10_logs")

# Start time
start_time = time.time()

for i in range(epoch):
    print(f"--------------- Epoch {i + 1} training starts ---------------")

    # Training step begins
    net.train()
    for data in train_dataloader:
        imgs, targets = data
        outputs = net(imgs)
        # Loss
        loss = loss_fn(outputs, targets)
        # Optimize the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(f"Training time: {end_time - start_time}")
            print(f"Training step: {total_train_step}, Loss: {loss.item()}")
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Test step begins
    net.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = net(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss += loss.item()
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy += accuracy

    print(f"Loss on the whole test set: {total_test_loss}")
    print(f"Accuracy on the whole test set: {total_accuracy / test_data_size}")
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step += 1

    torch.save(net, f"net_{i}")
    print("Model saved")

writer.close()
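`torch.save(net, path)` pickles the whole module. Loading it later with `torch.load` therefore needs the `Net` class to be importable (here via `from my_first_model import *`). In PyTorch 2.6 and later, `torch.load` also defaults to `weights_only=True`, so loading a fully pickled module may require `weights_only=False`. The PyTorch documentation recommends saving the `state_dict()` instead. Below is a minimal sketch of that alternative. It writes to an in-memory buffer instead of a file and uses a small hypothetical model in place of `Net`.

```python
import io

import torch
from torch import nn


def make_model() -> nn.Module:
    # Hypothetical small stand-in for Net; any nn.Module works the same way
    return nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))


torch.manual_seed(0)
net = make_model()

buffer = io.BytesIO()  # in-memory file; a path such as "net_state.pth" works the same way
torch.save(net.state_dict(), buffer)  # save only the parameters
buffer.seek(0)

restored = make_model()  # rebuild the architecture first
restored.load_state_dict(torch.load(buffer))  # then load the saved parameters
restored.eval()

x = torch.ones(1, 3, 32, 32)
with torch.no_grad():
    same = torch.equal(net(x), restored(x))
print(same)  # True
```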

Source: credit to 土堆 (Tudui). If you are interested, his PyTorch tutorial videos are well worth watching.

https://www.bilibili.com/video/BV1hE411t7RN?p=1&vd_source=4b9bc16687589a734dd252399f19f55b
