Atlas Single-Node Installation

Posted by 想学习安全的小白


I. Prepare the virtual machine

- Update the system:

yum -y update

- Set the hostname:

hostnamectl set-hostname atlas

- Stop and disable the firewall:

systemctl stop firewalld.service

systemctl disable firewalld.service

- Reboot:

reboot

II. Install the JDK

- Uninstall OpenJDK:

rpm -e --nodeps java-1.7.0-openjdk

rpm -e --nodeps java-1.7.0-openjdk-headless

rpm -e --nodeps java-1.8.0-openjdk

rpm -e --nodeps java-1.8.0-openjdk-headless

- Extract the JDK and rename the directory:

tar -xzvf jdk-8u161-linux-x64.tar.gz -C /home/atlas/

mv /home/atlas/jdk1.8.0_161/ /home/atlas/jdk1.8

- Configure environment variables: add the two export lines to the file, then reload the profile:

vim /etc/profile.d/my_env.sh

export JAVA_HOME=/home/atlas/jdk1.8

export PATH=$PATH:$JAVA_HOME/bin

source /etc/profile
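The same file, /etc/profile.d/my_env.sh, is edited again for Hadoop, Hive, Kafka and HBase later on, so it is easy to end up with duplicate export lines. As a minimal sketch, assuming you prefer to append from a script rather than edit in vim, a small helper can add a line only when it is missing. The function name add_env_line and the ENV_FILE variable are illustrative, not part of the original steps.

```shell
#!/bin/sh
# add_env_line FILE LINE: append LINE to FILE only if it is not already there.
# (Hypothetical helper, not part of the original tutorial.)
add_env_line() {
    file=$1
    line=$2
    touch "$file"
    # -x: match the whole line, -F: treat LINE as a fixed string, -q: quiet
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Demo against a scratch file; set ENV_FILE=/etc/profile.d/my_env.sh for real use.
ENV_FILE=${ENV_FILE:-$(mktemp)}
add_env_line "$ENV_FILE" 'export JAVA_HOME=/home/atlas/jdk1.8'
add_env_line "$ENV_FILE" 'export PATH=$PATH:$JAVA_HOME/bin'
add_env_line "$ENV_FILE" 'export JAVA_HOME=/home/atlas/jdk1.8'   # second call is a no-op
cat "$ENV_FILE"
```

Running the script twice leaves the file unchanged, which makes it safe to repeat after a failed install step.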

III. Configure passwordless SSH login

- Generate a key pair:

ssh-keygen -t rsa

- Change to the .ssh directory:

cd /root/.ssh

- Authorize the key for passwordless login:

cat id_rsa.pub >> authorized_keys

chmod 600 ./authorized_keys
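Before moving on, it can help to confirm that the key setup took effect, because start-dfs.sh and start-yarn.sh log in over SSH. The sketch below is one possible check, assuming GNU stat (as shipped with CentOS) and an SSH server listening on localhost. The helper names perms_ok and can_login are illustrative.

```shell
#!/bin/sh
# perms_ok FILE: succeed only if FILE exists and its mode is exactly 600.
# Assumes GNU stat, which supports -c '%a' (octal permission bits).
perms_ok() {
    [ -f "$1" ] && [ "$(stat -c '%a' "$1")" = "600" ]
}

# can_login HOST: succeed if key-based login works without a prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
can_login() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

if perms_ok "$HOME/.ssh/authorized_keys"; then
    echo "authorized_keys permissions look right"
else
    echo "authorized_keys is missing or not mode 600"
fi
# Then try: can_login localhost && echo "passwordless login works"
```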

IV. Configure Hadoop 2.7.2

- Extract the package and rename the directory:

tar -xzvf hadoop-2.7.2.tar.gz -C /home/atlas/

mv /home/atlas/hadoop-2.7.2/ /home/atlas/hadoop

- Configure environment variables:

vim /etc/profile.d/my_env.sh

export HADOOP_HOME=/home/atlas/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

source /etc/profile

- Edit core-site.xml:

vim /home/atlas/hadoop/etc/hadoop/core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/atlas/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>atguigu</value>

</property>

<property>

<name>hadoop.proxyuser.atguigu.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.atguigu.groups</name>

<value>*</value>

</property>


</configuration>

- Edit hdfs-site.xml:

vim /home/atlas/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/atlas/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/atlas/hadoop/tmp/dfs/data</value>

</property>

</configuration>

- Edit yarn-site.xml:

vim /home/atlas/hadoop/etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

- Create mapred-site.xml from the template and edit it:

cp /home/atlas/hadoop/etc/hadoop/mapred-site.xml.template /home/atlas/hadoop/etc/hadoop/mapred-site.xml

vim /home/atlas/hadoop/etc/hadoop/mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- Format the NameNode (first start only) and start Hadoop:

hdfs namenode -format

start-dfs.sh

start-yarn.sh
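After start-dfs.sh and start-yarn.sh, a single node should be running five Hadoop daemons: NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. The following sketch reads jps output and lists any that are missing. The function name missing_daemons is illustrative, and the canned input only demonstrates the format.

```shell
#!/bin/sh
# missing_daemons: read `jps` output on stdin and print every expected
# Hadoop daemon that does not appear in it. (Hypothetical helper.)
missing_daemons() {
    jps_output=$(cat)
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # jps prints "<pid> <MainClass>"; compare the second field exactly
        # so that NameNode does not match SecondaryNameNode.
        printf '%s\n' "$jps_output" \
            | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' \
            || echo "$daemon"
    done
}

# Real use, once the start scripts have run:
#   jps | missing_daemons
# Demo with canned output in which the NodeManager is absent:
printf '%s\n' '2101 NameNode' '2230 DataNode' '2410 SecondaryNameNode' \
    '2570 ResourceManager' '2990 Jps' | missing_daemons
```

An empty result means all five daemons are up. Anything printed is worth checking in the logs under /home/atlas/hadoop/logs.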

V. Install MySQL

- Remove the MariaDB libraries that ship with the system:

rpm -qa|grep mariadb

rpm -e --nodeps mariadb-libs

- Extract the bundle:

tar -xvf mysql-5.7.28-1.el7.x86_64.rpm-bundle.tar

- Install MySQL, in this order:

rpm -ivh mysql-community-common-5.7.28-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-5.7.28-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-compat-5.7.28-1.el7.x86_64.rpm

rpm -ivh mysql-community-client-5.7.28-1.el7.x86_64.rpm

rpm -ivh mysql-community-server-5.7.28-1.el7.x86_64.rpm

- Initialize the database:

mysqld --initialize --user=mysql

- Look up the temporary password generated for the root user:

cat /var/log/mysqld.log

- Start the MySQL service:

systemctl start mysqld

- Log in to MySQL and enter the temporary password when prompted:

mysql -uroot -p

- Change the password:

set password = password("new_password");

- Allow the root user in the mysql.user table to connect from any IP address:

update mysql.user set host='%' where user='root';

flush privileges;
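The temporary password is buried in /var/log/mysqld.log among other startup messages. As a small sketch, assuming the MySQL 5.7 log format in which the line contains the words "temporary password" and ends with the password itself, it can be pulled out with awk. The function name temp_root_password is illustrative, and the sample log line below is made up.

```shell
#!/bin/sh
# temp_root_password LOGFILE: print the most recent temporary root password
# found in LOGFILE. (Hypothetical helper.)
temp_root_password() {
    # The password is the last whitespace-separated field on the matching line.
    awk '/temporary password/ { pw = $NF } END { if (pw != "") print pw }' "$1"
}

# Demo with a sample line (timestamp and password are invented):
sample=$(mktemp)
echo '2020-01-01T00:00:00.000000Z 1 [Note] A temporary password is generated for root@localhost: Abc!123xyz' > "$sample"
temp_root_password "$sample"
rm -f "$sample"
```

On the real machine the call would be temp_root_password /var/log/mysqld.log.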

VI. Install Hive

- Extract the package and rename the directory:

tar -xzvf apache-hive-3.1.2-bin.tar.gz -C /home/atlas/

mv /home/atlas/apache-hive-3.1.2-bin/ /home/atlas/hive

- Configure environment variables for Hive:

vim /etc/profile.d/my_env.sh

export HIVE_HOME=/home/atlas/hive

export PATH=$PATH:$HIVE_HOME/bin

source /etc/profile

- Copy the MySQL JDBC driver into Hive's lib directory:

cp /home/atlas/rar/3_mysql/mysql-connector-java-5.1.37.jar /home/atlas/hive/lib/

- Edit hive-site.xml:

vim /home/atlas/hive/conf/hive-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- JDBC connection URL -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/metastore?useSSL=false&amp;useUnicode=true&amp;characterEncoding=UTF-8</value>

</property>

<!-- JDBC driver class -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<!-- JDBC connection username -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<!-- JDBC connection password (use the MySQL root password you set) -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>970725</value>

</property>

<!-- Hive metastore schema version verification -->

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<!-- Metastore event notification API authorization -->

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

</configuration>
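One detail in hive-site.xml is easy to get wrong: a JDBC URL with several query parameters contains literal & characters, and inside an XML value each of them has to be written as &amp;amp; or the file will not parse. The sketch below shows one way to escape a URL before pasting it in. The function name xml_escape is illustrative.

```shell
#!/bin/sh
# xml_escape STRING: escape the characters that are special in XML text.
# '&' is handled first so the entities produced for '<' and '>' are not
# escaped a second time. (Hypothetical helper.)
xml_escape() {
    printf '%s\n' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

xml_escape 'jdbc:mysql://localhost:3306/metastore?useSSL=false&useUnicode=true&characterEncoding=UTF-8'
```

The printed string is what belongs between the value tags of javax.jdo.option.ConnectionURL.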

- Create hive-env.sh from the template and edit it:

mv /home/atlas/hive/conf/hive-env.sh.template /home/atlas/hive/conf/hive-env.sh

vim /home/atlas/hive/conf/hive-env.sh

Uncomment the line #export HADOOP_HEAPSIZE=1024

- Create hive-log4j2.properties from the template and edit it:

mv /home/atlas/hive/conf/hive-log4j2.properties.template /home/atlas/hive/conf/hive-log4j2.properties

vim /home/atlas/hive/conf/hive-log4j2.properties

Set property.hive.log.dir = /home/atlas/hive/logs

- Log in to MySQL:

mysql -uroot -p

- Create the Hive metastore database, then exit:

create database metastore;

- Initialize the Hive metastore schema:

schematool -initSchema -dbType mysql -verbose

- Set the character encoding of the metastore tables:

mysql -uroot -p

use metastore

alter table COLUMNS_V2 modify column COMMENT varchar(256) character set utf8;

alter table TABLE_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table PARTITION_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table PARTITION_KEYS modify column PKEY_COMMENT varchar(4000) character set utf8;

alter table INDEX_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table TBLS modify column view_expanded_text mediumtext character set utf8;

alter table TBLS modify column view_original_text mediumtext character set utf8;

VII. Install ZooKeeper

- Extract the package and rename the directory:

tar -xzvf apache-zookeeper-3.5.7-bin.tar.gz -C /home/atlas/

mv /home/atlas/apache-zookeeper-3.5.7-bin/ /home/atlas/zookeeper

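- Create the zkData directory:

mkdir -p /home/atlas/zookeeper/zkData

- Create the myid file and write 1 into it:

vim /home/atlas/zookeeper/zkData/myid

- Rename zoo_sample.cfg to zoo.cfg:

mv /home/atlas/zookeeper/conf/zoo_sample.cfg /home/atlas/zookeeper/conf/zoo.cfg

- Edit zoo.cfg:

# Change

dataDir=/home/atlas/zookeeper/zkData

# Append to the end of the file. These are cluster entries: every hostname must resolve and a majority of the listed servers must be running. Omit them to run ZooKeeper standalone on a single node.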

#######################cluster##########################

server.1=hadoop01:2888:3888

server.2=hadoop02:2888:3888

server.3=hadoop03:2888:3888
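Whether ZooKeeper starts standalone or waits for a quorum depends on the server.N entries in zoo.cfg. With three servers listed, two of them must be running before the ensemble answers requests, which matters on a single machine. The sketch below counts the entries and reports the majority needed. The function name zk_mode is illustrative.

```shell
#!/bin/sh
# zk_mode CFG: print "standalone" if CFG lists no server.N entries, otherwise
# print how many of the listed servers must be up to form a quorum.
# (Hypothetical helper.)
zk_mode() {
    # grep -c exits non-zero when the count is 0, hence the "|| true".
    n=$(grep -c '^server\.[0-9][0-9]*=' "$1" || true)
    if [ "$n" -eq 0 ]; then
        echo "standalone"
    else
        echo "quorum needs $((n / 2 + 1)) of $n servers"
    fi
}

# Demo with the entries shown above written to a scratch file:
cfg=$(mktemp)
printf '%s\n' 'dataDir=/home/atlas/zookeeper/zkData' \
    'server.1=hadoop01:2888:3888' \
    'server.2=hadoop02:2888:3888' \
    'server.3=hadoop03:2888:3888' > "$cfg"
zk_mode "$cfg"
rm -f "$cfg"
```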

- Start ZooKeeper:

/home/atlas/zookeeper/bin/zkServer.sh start

- Stop ZooKeeper:

/home/atlas/zookeeper/bin/zkServer.sh stop

- Check its status:

/home/atlas/zookeeper/bin/zkServer.sh status

VIII. Install Kafka

- Extract the package and rename the directory:

tar -xzvf kafka_2.11-2.4.1.tgz -C /home/atlas/

mv /home/atlas/kafka_2.11-2.4.1/ /home/atlas/kafka

- Create the logs directory:

mkdir -p /home/atlas/kafka/logs

- Edit server.properties:

vim /home/atlas/kafka/config/server.properties

# Enable topic deletion; add this line after broker.id=0

delete.topic.enable=true

# Change the directory where Kafka stores its log (message data) files

log.dirs=/home/atlas/kafka/data

# Change the ZooKeeper connection address

zookeeper.connect=localhost:2181/kafka

- Configure the Kafka environment variables:

vim /etc/profile.d/my_env.sh

export KAFKA_HOME=/home/atlas/kafka

export PATH=$PATH:$KAFKA_HOME/bin

- Start Kafka:

/home/atlas/kafka/bin/kafka-server-start.sh -daemon /home/atlas/kafka/config/server.properties

- Stop Kafka:

/home/atlas/kafka/bin/kafka-server-stop.sh

IX. Install HBase

- Extract the package and rename the directory:

tar -xzvf hbase-2.0.5-bin.tar.gz -C /home/atlas/

mv /home/atlas/hbase-2.0.5/ /home/atlas/hbase

- Configure environment variables:

vim /etc/profile.d/my_env.sh

export HBASE_HOME=/home/atlas/hbase

export PATH=$PATH:$HBASE_HOME/bin

- Edit hbase-env.sh:

vim /home/atlas/hbase/conf/hbase-env.sh

# Change

export HBASE_MANAGES_ZK=false # was true

- Edit hbase-site.xml, adding these properties inside the configuration element:

vim /home/atlas/hbase/conf/hbase-site.xml

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/HBase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>localhost</value>

</property>

- Start HBase:

/home/atlas/hbase/bin/start-hbase.sh

- Stop HBase:

/home/atlas/hbase/bin/stop-hbase.sh

X. Install Solr

- Extract the package and rename the directory:

tar -xzvf /home/atlas/rar/solr-7.7.3.tgz -C /home/atlas/

mv /home/atlas/solr-7.7.3/ /home/atlas/solr

- Create the solr user:

useradd solr

- Set its password:

echo solr | passwd --stdin solr

- Make the solr user the owner of the Solr directory:

chown -R solr:solr /home/atlas/solr

- Edit /home/atlas/solr/bin/solr.in.sh:

vim /home/atlas/solr/bin/solr.in.sh

ZK_HOST="localhost:2181"

- Start Solr:

sudo -i -u solr /home/atlas/solr/bin/solr start

XI. Install Atlas

1. Upload and extract the package

- Extract apache-atlas-2.1.0-server.tar.gz and rename the directory to atlas:

tar -xzvf /home/atlas/rar/9_atlas/apache-atlas-2.1.0-server.tar.gz -C /home/atlas/

mv /home/atlas/apache-atlas-2.1.0/ /home/atlas/atlas

2. Integrate Atlas with HBase

- Edit atlas/conf/atlas-application.properties:

vim /home/atlas/atlas/conf/atlas-application.properties

atlas.graph.storage.hostname=localhost:2181

- Edit atlas/conf/atlas-env.sh:

vim /home/atlas/atlas/conf/atlas-env.sh

# Append to the end of the file

export HBASE_CONF_DIR=/home/atlas/hbase/conf

3. Integrate Atlas with Solr

- Edit atlas/conf/atlas-application.properties:

vim /home/atlas/atlas/conf/atlas-application.properties

# Comment out the Solr cloud mode settings below

#Solr cloud mode properties

#atlas.graph.index.search.solr.mode=cloud

#atlas.graph.index.search.solr.zookeeper-url=

#atlas.graph.index.search.solr.zookeeper-connect-timeout=60000

#atlas.graph.index.search.solr.zookeeper-session-timeout=60000

#atlas.graph.index.search.solr.wait-searcher=true

#Solr http mode properties

atlas.graph.index.search.solr.mode=http

atlas.graph.index.search.solr.http-urls=http://localhost:8983/solr

- Copy the Solr configuration shipped with Atlas:

cp -rf /home/atlas/atlas/conf/solr /home/atlas/solr/atlas_conf

- Create the index collection:

sudo -i -u solr /home/atlas/solr/bin/solr create -c vertex_index -d /home/atlas/solr/atlas_conf
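The command above creates only vertex_index. Atlas's own installation notes list three index collections (vertex_index, edge_index and fulltext_index), so the other two may need to be created the same way. The following is a dry-run sketch under that assumption. It only prints the commands, and the function name solr_create_cmds and the two variables are illustrative.

```shell
#!/bin/sh
SOLR_BIN=/home/atlas/solr/bin/solr
CONF_DIR=/home/atlas/solr/atlas_conf

# solr_create_cmds: print one `solr create` command per index collection.
# (Hypothetical helper; the collection names follow the Atlas documentation.)
solr_create_cmds() {
    for index in vertex_index edge_index fulltext_index; do
        echo "sudo -i -u solr $SOLR_BIN create -c $index -d $CONF_DIR"
    done
}

# Dry run: nothing is executed here. Pipe the output to `sh` to run it.
solr_create_cmds
```

Printing first makes it easy to review the three commands before anything touches the Solr instance.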

4. Integrate Atlas with Kafka

- Edit atlas/conf/atlas-application.properties:

vim /home/atlas/atlas/conf/atlas-application.properties

atlas.notification.embedded=false

atlas.kafka.data=/home/atlas/kafka/data

atlas.kafka.zookeeper.connect=localhost:2181/kafka

atlas.kafka.bootstrap.servers=localhost:9092

5. Configure the Atlas server

- Edit atlas/conf/atlas-application.properties:

vim /home/atlas/atlas/conf/atlas-application.properties

atlas.server.run.setup.on.start=false

- Edit atlas-log4j.xml:

vim /home/atlas/atlas/conf/atlas-log4j.xml

# Uncomment the following block

<appender name="perf_appender" class="org.apache.log4j.DailyRollingFileAppender">

<param name="file" value="${atlas.log.dir}/atlas_perf.log" />

<param name="datePattern" value="'.'yyyy-MM-dd" />

<param name="append" value="true" />

<layout class="org.apache.log4j.PatternLayout">

<param name="ConversionPattern" value="%d|%t|%m%n" />

</layout>

</appender>

<logger name="org.apache.atlas.perf" additivity="false">

<level value="debug" />

<appender-ref ref="perf_appender" />

</logger>

6. Integrate Atlas with Hive

- Edit atlas/conf/atlas-application.properties:

vim /home/atlas/atlas/conf/atlas-application.properties

# Append to the end of the file

######### Hive Hook Configs #######

atlas.hook.hive.synchronous=false

atlas.hook.hive.numRetries=3

atlas.hook.hive.queueSize=10000

atlas.cluster.name=primary

- Edit hive-site.xml:

vim /home/atlas/hive/conf/hive-site.xml

# Add inside the configuration element

<property>

<name>hive.exec.post.hooks</name>

<value>org.apache.atlas.hive.hook.HiveHook</value>

</property>

7. Install the Hive Hook

- Extract the Hive Hook package:

tar -zxvf apache-atlas-2.1.0-hive-hook.tar.gz

- Copy the Hive Hook files and dependencies into the Atlas installation directory:

cp -r apache-atlas-hive-hook-2.1.0/* /home/atlas/atlas/

- Edit hive/conf/hive-env.sh:

vim /home/atlas/hive/conf/hive-env.sh

export HIVE_AUX_JARS_PATH=/home/atlas/atlas/hook/hive

- Copy the Atlas configuration file /home/atlas/atlas/conf/atlas-application.properties to /home/atlas/hive/conf:

cp /home/atlas/atlas/conf/atlas-application.properties /home/atlas/hive/conf/

XII. Start Atlas

1. Start the prerequisite services

- Start Hadoop:

start-all.sh

- Start ZooKeeper:

/home/atlas/zookeeper/bin/zkServer.sh start

- Start Kafka:

/home/atlas/kafka/bin/kafka-server-start.sh -daemon /home/atlas/kafka/config/server.properties

- Start HBase:

/home/atlas/hbase/bin/start-hbase.sh

- Start Solr:

sudo -i -u solr /home/atlas/solr/bin/solr start

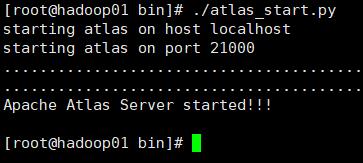

3. Start the Atlas service

- Change to the Atlas bin directory:

cd /home/atlas/atlas/bin

- Run the startup script, then wait about 2 minutes for the service to come up:

./atlas_start.py

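- Open port 21000 on hadoop01 in a browser (substitute this machine's hostname or IP address if it is not named hadoop01)

- Log in with the default account (username: admin, password: admin)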
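Atlas takes a while to initialize, so instead of waiting a fixed two minutes you can poll until it answers. The sketch below is a generic retry helper. The name wait_until is illustrative, and the curl call in the comment assumes Atlas's admin version endpoint at /api/atlas/admin/version with the default credentials.

```shell
#!/bin/sh
# wait_until TRIES DELAY CMD...: run CMD up to TRIES times, sleeping DELAY
# seconds between attempts. Succeeds as soon as CMD succeeds and fails if it
# never does. (Hypothetical helper.)
wait_until() {
    tries=$1
    delay=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        tries=$((tries - 1))
        if [ "$tries" -gt 0 ]; then
            sleep "$delay"
        fi
    done
    return 1
}

# Poll Atlas for up to about 2 minutes (24 tries, 5 seconds apart):
#   wait_until 24 5 curl -sf -u admin:admin http://localhost:21000/api/atlas/admin/version
# Demo with a command that succeeds immediately:
wait_until 3 0 true && echo "ready"
```

The same helper works for the prerequisite services, for example by passing the zkServer.sh status command shown earlier.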
