Notes on Multivariate Time Series Classification with LSTM + FCN

Posted by 彭祥.


Long Short Term Memory Fully Convolutional Network (LSTM-FCN) and Attention LSTM-FCN (ALSTM-FCN) have been successful in classifying univariate time series. However, they have never been applied to a multivariate time series classification problem. The models we propose, Multivariate LSTM-FCN (MLSTM-FCN) and Multivariate Attention LSTM-FCN (MALSTM-FCN), convert their respective univariate models into multivariate variants. We extend the squeeze-and-excite block to the case of 1D sequence models and augment the fully convolutional blocks of the LSTM-FCN and ALSTM-FCN models to enhance classification accuracy.

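In short: LSTM-FCN and ALSTM-FCN work well for univariate time series classification but had not yet been applied to multivariate problems. MLSTM-FCN and MALSTM-FCN are their multivariate counterparts. The squeeze-and-excite block is extended to 1D sequence models and added to the fully convolutional blocks of LSTM-FCN and ALSTM-FCN to improve classification accuracy.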

As the datasets now consist of multivariate time series, we can define a time series dataset as a tensor of shape (N, Q, M), where N is the number of samples in the dataset, Q is the maximum number of time steps amongst all variables and M is the number of variables processed per time step. Therefore a univariate time series dataset is a special case of the above definition, where M is 1. The alteration required to the input of the LSTM-FCN and ALSTM-FCN models is to accept M inputs per time step, rather than a single input per time step.

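Since the dataset now consists of multivariate time series, it can be represented as a tensor of shape (N, Q, M), where N is the number of samples in the dataset, Q is the maximum number of time steps across all variables, and M is the number of variables per time step.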
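The (N, Q, M) layout can be sketched with NumPy. This is a minimal illustration, not code from the paper: the helper name `pad_to_tensor` and the choice to zero-pad shorter series at the end are assumptions, since the excerpt does not say how series shorter than Q are handled.

```python
import numpy as np

def pad_to_tensor(samples, pad_value=0.0):
    """Stack variable-length multivariate series into an (N, Q, M) array.

    Each sample has shape (T_i, M). Samples shorter than the longest one are
    padded at the end with pad_value (an assumption made for this sketch).
    """
    n = len(samples)
    q = max(s.shape[0] for s in samples)   # Q: longest series in the dataset
    m = samples[0].shape[1]                # M: variables per time step
    out = np.full((n, q, m), pad_value, dtype=np.float32)
    for i, s in enumerate(samples):
        if s.shape[1] != m:
            raise ValueError("all samples must have the same number of variables")
        out[i, : s.shape[0], :] = s
    return out

# Three hypothetical samples with 5, 3 and 4 time steps, 2 variables each.
samples = [np.ones((5, 2)), np.ones((3, 2)), np.ones((4, 2))]
x = pad_to_tensor(samples)
print(x.shape)  # (3, 5, 2)

# A univariate dataset uses the same layout with M = 1.
x_uni = pad_to_tensor([np.ones((5, 1)), np.ones((2, 1))])
print(x_uni.shape)  # (2, 5, 1)
```

The model then reads M values per time step instead of one, which is the only change the input side needs.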

Below is an introduction to the model.

Similar to LSTM-FCN and ALSTM-FCN, the proposed models comprise a fully convolutional block and an LSTM block, as depicted in Fig. 1. The fully convolutional block contains three temporal convolutional blocks, used as a feature extractor, which is replicated from the original fully convolutional block by Wang et al. [34]. The convolutional blocks contain a convolutional layer with a number of filters (128, 256, and 128) and a kernel size of 8, 5, and 3 respectively. Each convolutional layer is succeeded by batch normalization, with a momentum of 0.99 and epsilon of 0.001. The batch normalization layer is succeeded by the ReLU activation function. In addition, the first two convolutional blocks conclude with a squeeze-and-excite block, which sets the proposed model apart from LSTM-FCN and ALSTM-FCN. Fig. 2 summarizes the process of how the squeeze-and-excite block is computed in our architecture. For all squeeze and excitation blocks, we set the reduction ratio r to 16. The final temporal convolutional block is followed by a global average pooling layer.

The squeeze-and-excite block is an addition to the FCN block which adaptively recalibrates the input feature maps. Due to the reduction ratio r set to 16, the number of parameters required to learn these self-attention maps is reduced such that the overall model size increases by just 3-10%.

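Like LSTM-FCN and ALSTM-FCN, the model consists of a fully convolutional block and an LSTM block, as shown in the figure below.

The fully convolutional block contains three temporal convolutional blocks that act as a feature extractor; the design is taken from the original fully convolutional block of Wang et al. [34]. The three convolutional layers use 128, 256, and 128 filters with kernel sizes 8, 5, and 3 respectively. Each convolutional layer is followed by batch normalization and then a ReLU activation. The first two convolutional blocks also end with a squeeze-and-excite block, which is what distinguishes this model from LSTM-FCN and ALSTM-FCN.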
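The convolution, batch normalization, ReLU sequence of the three temporal blocks can be sketched in NumPy to make the tensor shapes concrete. This is only a rough sketch under several assumptions that the excerpt does not state: "same" zero padding, random placeholder weights, and batch normalization computed from batch statistics without the learnable scale/shift or the running averages that the momentum of 0.99 controls. The squeeze-and-excite step is left out here, and the function names are hypothetical.

```python
import numpy as np

def conv1d_same(x, w, b):
    """1D convolution over time with 'same' zero padding (assumed).

    x: (Q, C_in), w: (k, C_in, C_out), b: (C_out,)  ->  (Q, C_out)
    """
    k = w.shape[0]
    left = (k - 1) // 2
    right = k - 1 - left
    xp = np.pad(x, ((left, right), (0, 0)))
    q = x.shape[0]
    out = np.empty((q, w.shape[2]))
    for t in range(q):
        window = xp[t : t + k]                               # (k, C_in)
        out[t] = np.tensordot(window, w, axes=([0, 1], [0, 1])) + b
    return out

def batch_norm(x, eps=1e-3):
    """Per-channel normalization with batch statistics; eps = 0.001 as in the text."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def fcn_features(x, rng):
    """x: (N, Q, M) -> (N, 128) after three conv blocks and global average pooling."""
    c_in = x.shape[2]
    for filters, k in [(128, 8), (256, 5), (128, 3)]:
        w = rng.normal(scale=0.1, size=(k, c_in, filters))  # placeholder weights
        b = np.zeros(filters)
        x = np.stack([conv1d_same(s, w, b) for s in x])      # convolution
        x = np.maximum(batch_norm(x), 0.0)                   # batch norm, then ReLU
        c_in = filters
    return x.mean(axis=1)                                    # average over time

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 20, 3))   # hypothetical batch: N=4, Q=20, M=3
feats = fcn_features(x, rng)
print(feats.shape)  # (4, 128)
```

Global average pooling removes the time axis, so the output size depends only on the filter count of the last block, not on Q.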

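The figure below summarizes how the squeeze-and-excite block is computed in this architecture. The reduction ratio r is set to 16 for every squeeze-and-excite block, and the last temporal convolutional block is followed by a global average pooling layer.

The squeeze-and-excite block is an addition to the FCN block that adaptively recalibrates the weights of the feature maps. Because the reduction ratio is 16, fewer parameters are needed to learn these self-attention maps, so the overall model size grows by only 3-10%.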

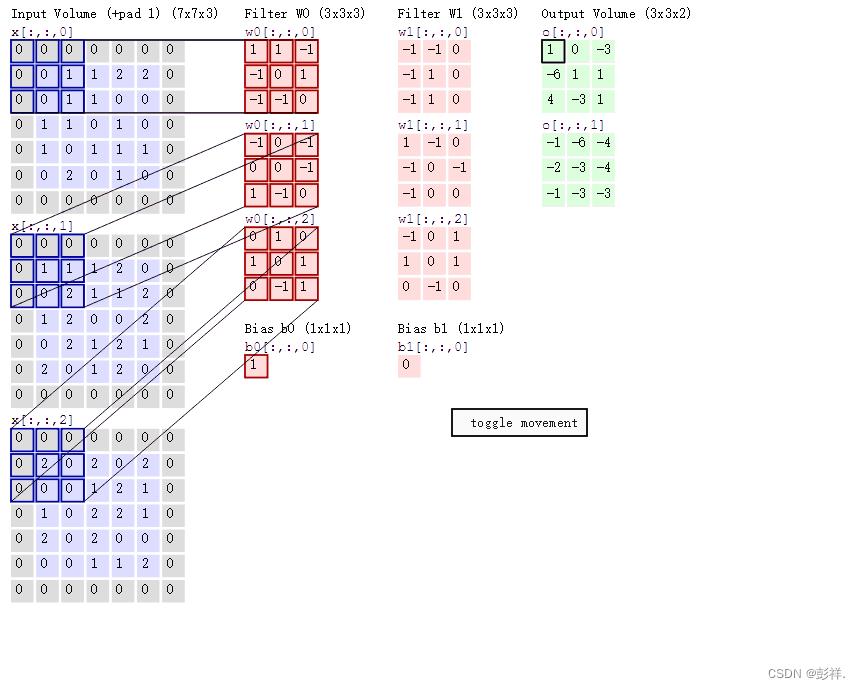
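A squeeze-and-excite block on a 1D feature map can be sketched as follows. The sketch follows the usual squeeze-and-excite formulation (average over time, a bottleneck layer with ReLU, a sigmoid gate per channel) and is not taken from the paper's code. The bias-free dense layers, the random placeholder weights, and the function name `squeeze_excite_1d` are assumptions.

```python
import numpy as np

def squeeze_excite_1d(x, w1, w2):
    """x: (N, Q, C); w1: (C, C // r); w2: (C // r, C)."""
    z = x.mean(axis=1)                       # squeeze: average over time -> (N, C)
    h = np.maximum(z @ w1, 0.0)              # bottleneck + ReLU -> (N, C // r)
    s = 1.0 / (1.0 + np.exp(-(h @ w2)))      # sigmoid gate per channel -> (N, C)
    return x * s[:, None, :]                 # rescale every time step

rng = np.random.default_rng(0)
n, q, c, r = 4, 20, 128, 16                  # r = 16 as in the text
x = rng.normal(size=(n, q, c))
w1 = rng.normal(scale=0.1, size=(c, c // r))
w2 = rng.normal(scale=0.1, size=(c // r, c))

y = squeeze_excite_1d(x, w1, w2)
print(y.shape)            # (4, 20, 128)
print(w1.size + w2.size)  # 2048
```

With C = 128 and r = 16 the two bottleneck matrices hold 2 * 128 * 8 = 2048 weights, which is small next to the convolutional layers and illustrates why a larger r keeps the added model size low.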

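A filter is the same thing as a convolution kernel. A convolutional layer can have several kernels, each extracting different information, and the kernel size is the filter size.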
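To make the relation between filters and kernel size concrete, the parameter count of a 1D convolutional layer can be worked out directly. This is a small arithmetic sketch: the function name `conv1d_params` and the choice of M = 3 input variables are illustrative assumptions, and a bias per filter is assumed.

```python
def conv1d_params(kernel_size, in_channels, filters, bias=True):
    """Weights have shape (kernel_size, in_channels, filters), plus one bias per filter."""
    return kernel_size * in_channels * filters + (filters if bias else 0)

m = 3  # hypothetical number of input variables
layers = [(8, m, 128), (5, 128, 256), (3, 256, 128)]  # (kernel size, inputs, filters)
for k, c_in, f in layers:
    print((k, c_in, f), conv1d_params(k, c_in, f))
# (8, 3, 128) 3200
# (5, 128, 256) 164096
# (3, 256, 128) 98432
```

Each filter spans all input channels over `kernel_size` consecutive time steps, so the number of filters sets the number of output channels while the kernel size sets how much temporal context each filter sees.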

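ReLU activation function

The rectified linear unit (ReLU), also called the linear rectification function, is an activation function commonly used in artificial neural networks. The term usually refers to the ramp function f(x) = max(0, x) and its variants, all of which are nonlinear.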
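The ramp function and one of its variants fit in a few lines of Python. Leaky ReLU is shown only as an example of a variant, and its slope `alpha = 0.01` is an illustrative value, not something taken from the paper.

```python
def relu(x):
    """Ramp function: passes positive values through and clips negatives to zero."""
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    """Variant that keeps a small slope for negative inputs (alpha is illustrative)."""
    return x if x > 0 else alpha * x

print([relu(v) for v in (-2.0, -0.5, 0.0, 1.5)])  # [0.0, 0.0, 0.0, 1.5]
print(leaky_relu(-2.0))                           # -0.02
```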

This adaptive rescaling of the filter maps is of utmost importance to the improved performance of the MLSTM-FCN model compared to LSTM-FCN, as it incorporates learned self-attention to the inter-correlations between multiple variables at each time step, which was inadequate with the LSTM-FCN.

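Put differently, the adaptive rescaling of the filter maps is the key to MLSTM-FCN's improvement over LSTM-FCN: it adds learned self-attention over the inter-correlations between the variables at each time step, which LSTM-FCN captured inadequately.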
