The default HDFS block size

Posted by 闭关苦炼内功


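I was asked what the default block size of Hadoop's HDFS is.

Without even thinking, I answered 64 MB.

...

In the spirit of learning, let's go to the official site and find out.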

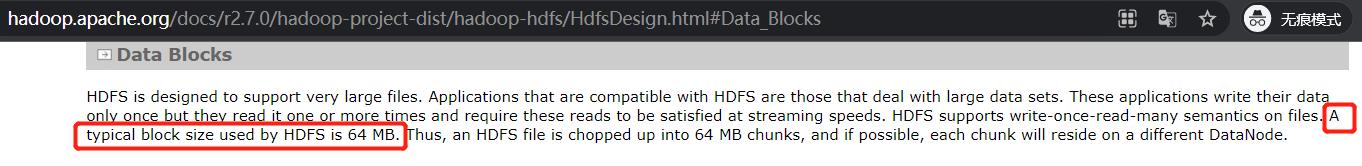

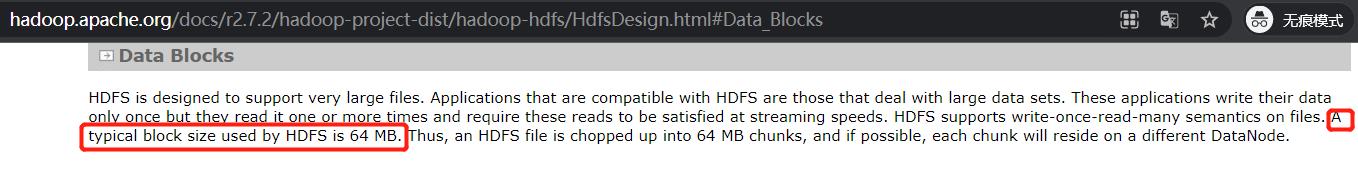

Hadoop 2.7.2

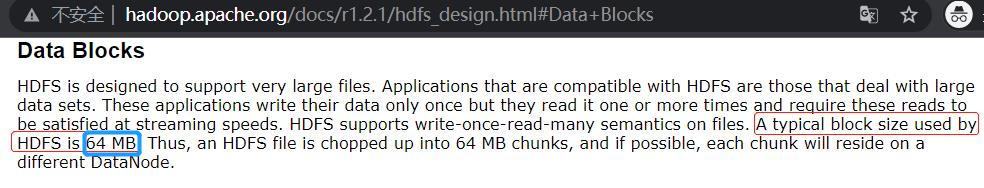

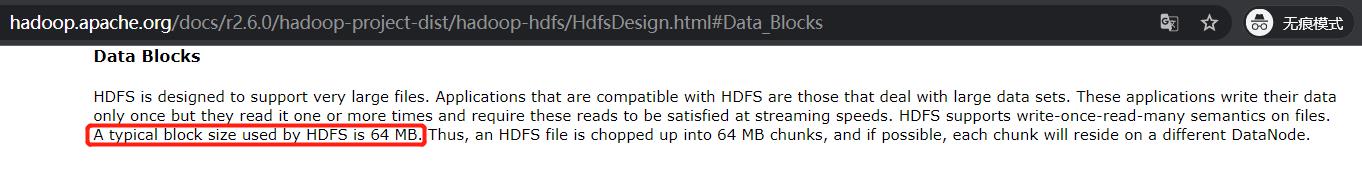

Data Blocks

HDFS is designed to support very large files. Applications that are compatible with HDFS are those that deal with large data sets. These applications write their data only once but they read it one or more times and require these reads to be satisfied at streaming speeds. HDFS supports write-once-read-many semantics on files. A typical block size used by HDFS is 64 MB. Thus, an HDFS file is chopped up into 64 MB chunks, and if possible, each chunk will reside on a different DataNode.

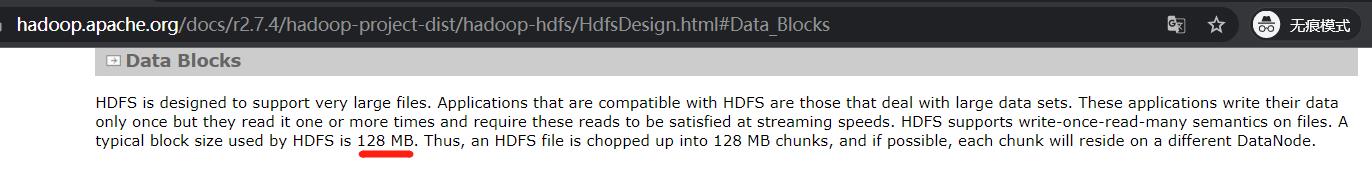

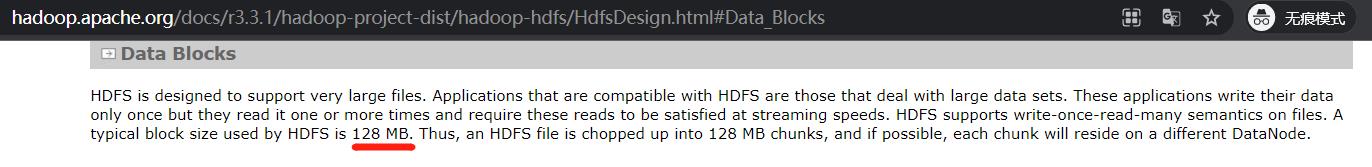

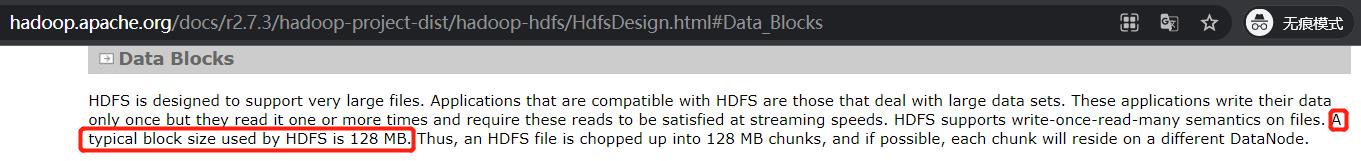

Hadoop 2.7.3

Data Blocks

HDFS is designed to support very large files. Applications that are compatible with HDFS are those that deal with large data sets. These applications write their data only once but they read it one or more times and require these reads to be satisfied at streaming speeds. HDFS supports write-once-read-many semantics on files. A typical block size used by HDFS is 128 MB. Thus, an HDFS file is chopped up into 128 MB chunks, and if possible, each chunk will reside on a different DataNode.
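To make "chopped up into chunks" concrete, here is a minimal sketch of the arithmetic, not HDFS code. The function name `split_into_blocks` and the 300 MB file size are made up for illustration; only the 64 MB and 128 MB block sizes come from the passages quoted above.

```python
MB = 1024 * 1024


def split_into_blocks(file_size, block_size):
    """Return a list of (offset, length) pairs, one per block of the file.

    Illustrative helper only: it mirrors the arithmetic of cutting a file
    into fixed-size blocks, where the last block may be shorter.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    # Compare the two block sizes mentioned in the documentation.
    for block_mb in (64, 128):
        blocks = split_into_blocks(300 * MB, block_mb * MB)
        sizes_mb = [length // MB for _, length in blocks]
        print(f"{block_mb} MB block size: {len(blocks)} blocks, sizes in MB = {sizes_mb}")
```

With a hypothetical 300 MB file, the 64 MB setting gives five blocks and the 128 MB setting gives three; in both cases the last block holds only the remaining 44 MB.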

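So we can draw a conclusion:

- In Hadoop 2.7.2 and earlier, the official HDFS architecture guide describes a typical block size of 64 MB.
- From Hadoop 2.7.3 onward, the same guide describes a typical block size of 128 MB.

Note that what changed between these two releases is the wording of the documentation. The configured default, `dfs.blocksize` in `hdfs-default.xml`, is already 134217728 bytes (128 MB) throughout Hadoop 2.x. The 64 MB default belongs to Hadoop 1.x, so for any 2.x release the answer is 128 MB.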
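Rather than relying on the documentation text, you can check the value a cluster is actually configured with. On a machine with a Hadoop client, `hdfs getconf -confKey dfs.blocksize` prints the effective setting. The sketch below shows, under stated assumptions, how the same property could be read from a configuration file in Python. The helper names `parse_size` and `block_size_from_xml` and the sample XML are hypothetical; the property name `dfs.blocksize` and its size suffixes (`k`, `m`, `g`, and so on, case insensitive) follow the Hadoop 2.x `hdfs-default.xml` description.

```python
import xml.etree.ElementTree as ET

# Binary multipliers for the size suffixes accepted by dfs.blocksize.
SUFFIXES = {
    "k": 1024,
    "m": 1024 ** 2,
    "g": 1024 ** 3,
    "t": 1024 ** 4,
    "p": 1024 ** 5,
    "e": 1024 ** 6,
}


def parse_size(value):
    """Convert a value such as '134217728' or '128m' to a number of bytes."""
    text = value.strip().lower()
    if text and text[-1] in SUFFIXES:
        return int(text[:-1]) * SUFFIXES[text[-1]]
    return int(text)


def block_size_from_xml(xml_text, default=None):
    """Return dfs.blocksize in bytes from Hadoop-style XML, or `default` if unset."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == "dfs.blocksize":
            return parse_size(prop.findtext("value", ""))
    return default


# Hypothetical hdfs-site.xml content, used only for this example.
SAMPLE = """<configuration>
  <property>
    <name>dfs.blocksize</name>
    <value>128m</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    print(block_size_from_xml(SAMPLE))
```

If the property is absent from `hdfs-site.xml`, the cluster falls back to the shipped default, which is why the sketch takes a `default` argument instead of guessing one.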

A small file of a few dozen KB takes up only its actual size when stored in HDFS, not the size of a full block.
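The difference between the two readings is easy to see with numbers. This is a rough sketch under assumptions: the function names are made up, the replication factor of 3 is the usual default for `dfs.replication`, and checksum files and local filesystem overhead on the DataNodes are ignored.

```python
KB = 1024
MB = 1024 * KB


def naive_usage(file_size, block_size, replication=3):
    """Mistaken estimate: treats every block as occupying a full block_size."""
    block_count = -(-file_size // block_size)  # ceiling division
    return block_count * block_size * replication


def actual_usage(file_size, replication=3):
    """Approximate data bytes stored: the real file length times the replica count."""
    return file_size * replication


if __name__ == "__main__":
    size = 50 * KB  # hypothetical small file
    print("naive estimate (MB):", naive_usage(size, 128 * MB) // MB)
    print("actual data (KB):   ", actual_usage(size) // KB)
```

For a hypothetical 50 KB file with a 128 MB block size, the mistaken estimate is 384 MB across three replicas, while the data actually written is about 150 KB.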

See you next time, bye!
