[Survey] A Comprehensive Survey on Graph Neural Networks

Posted by 海轰Pro




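Preface

Hello, friends!

Thank you very much for reading my article. If you find any mistakes, please point them out~

About me ଘ(੭ˊᵕˋ)੭

Nickname: 海轰

Tags: programmer | C++ coder | student

Bio: I got into programming through C, then switched my major to computer science. I have received a National Scholarship and was lucky enough to win some national and provincial prizes in competitions… I have been admitted to graduate school through recommendation.

Learning tips: solid fundamentals + lots of notes + lots of coding + lots of thinking + learn English well!

Only hard work counts 💪

This article is just my own study notes, for building up a body of knowledge and for later review.

Know what, and know why!

Terminology

- GAE: graph autoencoder

Notes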

With multiple graphs, GAEs are able to learn the generative distribution of graphs by encoding graphs into hidden representations and decoding a graph structure given hidden representations. The majority of GAEs for graph generation are designed to solve the molecular graph generation problem, which has a high practical value in drug discovery. These methods either propose a new graph in a sequential manner or in a global manner.

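In other words: given many graphs, a GAE learns the generative distribution of graphs by encoding each graph into a hidden representation and decoding a graph structure from that representation. Most GAEs for graph generation target molecular graph generation, which is highly valuable for drug discovery. They build a new graph either sequentially or globally.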

Sequential approaches generate a graph by proposing nodes and edges step by step.

That is, sequential methods build the graph by adding nodes and edges one step at a time.

DeepGMG (Deep Generative Model of Graphs)

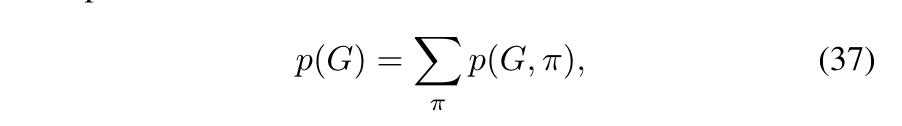

Deep Generative Model of Graphs (DeepGMG) [65] assumes the probability of a graph is the sum over all possible node permutations:

$$p(G) = \sum_{\pi} p(G, \pi)$$

In short, DeepGMG [65] treats the probability of a graph as a sum over all possible node permutations (orderings).

where π denotes a node ordering. It captures the complex joint probability of all nodes and edges in the graph. DeepGMG generates graphs by making a sequence of decisions, namely whether to add a node, which node to add, whether to add an edge, and which node to connect to the new node. The decision process of generating nodes and edges is conditioned on the node states and the graph state of a growing graph updated by a RecGNN. In another work, GraphRNN [66] proposes a graph-level RNN and an edge-level RNN to model the generation process of nodes and edges. The graph-level RNN adds a new node to a node sequence each time, while the edge-level RNN produces a binary sequence indicating connections between the new node and the nodes previously generated in the sequence.

Here π denotes a node ordering. The model captures the complex joint probability of all nodes and edges in the graph.
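Why does DeepGMG need a sum over orderings? The small sketch below (my own illustration, not from the survey) enumerates every node ordering π of a toy graph and collects the distinct adjacency matrices those orderings produce. Many orderings describe the same graph, so p(G) has to add up p(G, π) over all of them. The 4-node path graph and the helper names `permuted_adjacency` / `count_orderings` are hypothetical.

```python
from itertools import permutations

def permuted_adjacency(edges, n, order):
    """Adjacency matrix (tuple of tuples) of the graph when nodes are listed in `order`."""
    pos = {node: i for i, node in enumerate(order)}  # original node -> new index
    adj = [[0] * n for _ in range(n)]
    for u, v in edges:
        adj[pos[u]][pos[v]] = adj[pos[v]][pos[u]] = 1  # undirected edge
    return tuple(tuple(row) for row in adj)

def count_orderings(edges, n):
    """Return (number of orderings, number of distinct adjacency matrices they yield)."""
    orders = list(permutations(range(n)))
    distinct = {permuted_adjacency(edges, n, pi) for pi in orders}
    return len(orders), len(distinct)

# Hypothetical toy graph: a path 0-1-2-3
path_edges = [(0, 1), (1, 2), (2, 3)]
print(count_orderings(path_edges, 4))  # (24, 12)
```

For the path, 24 orderings collapse into 12 distinct matrices, because an ordering and its reversal give the same matrix. A model that only scored one fixed ordering would therefore not assign a probability to the graph itself.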

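DeepGMG builds a graph through a sequence of decisions: whether to add a node, which node to add, whether to add an edge, and which node to connect to the new node.

Each of these node and edge decisions is conditioned on the node states and the graph state of the growing graph, which are updated by a RecGNN.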
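To make the order of decisions concrete, here is a toy sketch of the loop. It is a simplification under a big assumption: DeepGMG conditions every decision on RecGNN node/graph states, while this sketch uses fixed, hypothetical probabilities (`p_add_node`, `p_add_edge`) and uniform choices, so only the control flow matches. It also accumulates the log-probability of the sampled decision sequence, i.e. log p(G, π) for the ordering in which nodes were added. The node types "C", "N", "O" are illustrative only.

```python
import math
import random

def generate_graph(rng, node_types=("C", "N", "O"), p_add_node=0.8,
                   p_add_edge=0.5, max_nodes=6):
    """Toy DeepGMG-style decision loop with FIXED (hypothetical) probabilities.

    Returns node types, edge list and log p(G, pi) of the sampled decisions.
    Stopping at max_nodes, or when no candidates remain, is forced (prob. 1).
    """
    nodes, edges, log_p = [], [], 0.0
    while len(nodes) < max_nodes:
        # Decision 1: add a node?
        if rng.random() >= p_add_node:
            log_p += math.log(1 - p_add_node)
            break
        log_p += math.log(p_add_node)
        # Decision 2: which node (here: which node type) to add, uniform
        nodes.append(rng.choice(node_types))
        log_p += math.log(1 / len(node_types))
        new = len(nodes) - 1
        candidates = list(range(new))  # existing nodes not yet linked to `new`
        while candidates:
            # Decision 3: add an edge to the new node?
            if rng.random() >= p_add_edge:
                log_p += math.log(1 - p_add_edge)
                break
            log_p += math.log(p_add_edge)
            # Decision 4: which existing node to connect to the new node
            target = rng.choice(candidates)
            log_p += math.log(1 / len(candidates))
            candidates.remove(target)  # avoid duplicate edges
            edges.append((target, new))
    return nodes, edges, log_p

nodes, edges, log_p = generate_graph(random.Random(0))
```

Removing a chosen target from `candidates` is my own choice to prevent duplicate edges. In the real model a learned module scores the candidates instead.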

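In another work, GraphRNN [66] models the generation of nodes and edges with a graph-level RNN and an edge-level RNN:

- the graph-level RNN adds one new node to the node sequence at each step

- the edge-level RNN produces a binary sequence indicating which previously generated nodes the new node connects to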
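The two RNNs can be understood through the data they emit. Below is a minimal sketch (no neural networks, helper names hypothetical) of the sequence representation: for a given node ordering, step i of the graph-level sequence adds one node, and its binary vector records edges to the nodes generated before it. That vector is what the edge-level RNN would output bit by bit. Decoding the sequence gives back the adjacency matrix in the new ordering.

```python
def graph_to_sequence(adj, order):
    """Encode an undirected 0/1 adjacency matrix under node ordering `order`.

    Entry i is the binary vector [edge to order[0], ..., edge to order[i-1]],
    i.e. the connections of the i-th added node to previously added nodes.
    """
    return [[adj[order[i]][order[j]] for j in range(i)] for i in range(len(order))]

def sequence_to_graph(seq):
    """Rebuild the adjacency matrix (in the new ordering) from the binary vectors."""
    n = len(seq)
    adj = [[0] * n for _ in range(n)]
    for i, s_i in enumerate(seq):
        for j, bit in enumerate(s_i):
            adj[i][j] = adj[j][i] = bit
    return adj

# Hypothetical 4-node star graph centred on node 0
star = [[0, 1, 1, 1],
        [1, 0, 0, 0],
        [1, 0, 0, 0],
        [1, 0, 0, 0]]
print(graph_to_sequence(star, [0, 1, 2, 3]))  # [[], [1], [1, 0], [1, 0, 0]]
```

The same graph gives a different sequence under another ordering (e.g. `[3, 2, 1, 0]` yields `[[], [0], [0, 0], [1, 1, 1]]`). This is again why the node ordering matters for sequential generators.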

Global approaches output a graph all at once.

That is, global methods emit the entire graph in a single step.
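For contrast with the step-by-step methods, here is a sketch of "all at once" decoding. The notes do not say which decoder global methods use, so this assumes an inner-product decoder, P(A_ij = 1) = sigmoid(z_i · z_j), as used by graph autoencoders. It is not necessarily how specific global generators work. The latent vectors and the 0.5 threshold are hypothetical.

```python
import math

def decode_all_at_once(z, threshold=0.5):
    """One-shot decoding: every edge probability is computed from latent node
    vectors in one pass; no edge depends on previously generated edges.

    Assumes an inner-product decoder: P(A_ij = 1) = sigmoid(z_i . z_j).
    """
    n = len(z)
    probs = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:  # no self-loops
                dot = sum(a * b for a, b in zip(z[i], z[j]))
                probs[i][j] = 1.0 / (1.0 + math.exp(-dot))
    adj = [[1 if p > threshold else 0 for p in row] for row in probs]
    return probs, adj

# Hypothetical 2-D latent vectors for three nodes
latent = [[2.0, 0.0], [1.5, 0.5], [-2.0, 0.0]]
probs, adj = decode_all_at_once(latent)
print(adj)  # [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
```

Nodes 0 and 1 have similar latent vectors (dot product 3.0), so they are linked. Node 2 points the other way and stays isolated.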

Spatial-temporal graph neural networks (STGNNs)

Graphs in many real-world applications are dynamic both in terms of graph structures and graph inputs. Spatial-temporal graph neural networks (STGNNs) occupy important positions in capturing the dynamicity of graphs.

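In many real-world applications, graphs are dynamic in both their structure and their inputs.

STGNNs play an important role in capturing this dynamic behavior of graphs.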

Methods under this category aim to model the dynamic node inputs while assuming interdependency between connected nodes. For example, a traffic network consists of speed sensors placed on roads where edge weights are determined by the distance between pairs of sensors. As the traffic condition of one road may depend on its adjacent roads' conditions, it is necessary to consider spatial dependency when performing traffic speed forecasting. As a solution, STGNNs capture spatial and temporal dependencies of a graph simultaneously. The task of STGNNs can be forecasting future node values or labels, or predicting spatial-temporal graph labels. STGNNs follow two directions, RNN-based methods and CNN-based methods.

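Methods in this category model dynamic node inputs while assuming that connected nodes depend on each other.

For example, a traffic network consists of speed sensors placed on roads, and the edge weights are determined by the distance between each pair of sensors. Because the traffic on one road can depend on the conditions of its adjacent roads, traffic speed forecasting must take spatial dependency into account.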
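The notes only say that edge weights come from sensor distances, not how. The sketch below assumes a thresholded Gaussian kernel, a choice common in traffic-forecasting work: w_ij = exp(-d_ij² / σ²), set to 0 when it falls below a cutoff ε so that only nearby sensors are linked. The distances, σ and ε are hypothetical values.

```python
import math

def distance_to_weights(dist, sigma, epsilon):
    """Turn pairwise road distances between sensors into edge weights.

    Assumed scheme (thresholded Gaussian kernel): w_ij = exp(-d_ij^2 / sigma^2),
    set to 0 when it falls below `epsilon`, so distant sensors are not linked.
    """
    n = len(dist)
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # no self-loops
            weight = math.exp(-(dist[i][j] ** 2) / sigma ** 2)
            w[i][j] = weight if weight >= epsilon else 0.0
    return w

# Hypothetical distances (km) between three sensors along one road
d = [[0.0, 1.0, 5.0],
     [1.0, 0.0, 4.0],
     [5.0, 4.0, 0.0]]
weights = distance_to_weights(d, sigma=2.0, epsilon=0.1)
```

With σ = 2, sensors 1 km apart get weight exp(-0.25) ≈ 0.78, while the 4 km and 5 km pairs fall below the cutoff and are dropped. This gives the sparse, distance-aware graph on which spatial dependency is then modeled.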

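As a solution, STGNNs capture the spatial and temporal dependencies of a graph at the same time.

The task of an STGNN can be forecasting future node values or labels, or predicting spatial-temporal graph labels. STGNNs follow two directions: RNN-based methods and CNN-based methods.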

Most RNN-based approaches capture spatial-temporal dependencies by filtering inputs and hidden states passed to a recurrent unit using graph convolutions [48], [71], [72].

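That is, most RNN-based methods capture spatial-temporal dependencies by filtering both the inputs and the hidden states with graph convolutions [48], [71], [72] before they are passed to the recurrent unit.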
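A minimal sketch of this idea, assuming a plain recurrent update H(t) = tanh(Gconv(X(t), A; W) + Gconv(H(t−1), A; U) + b). The graph convolution here is a hypothetical stand-in: each node averages its own and its neighbors' features (row-normalized A + I), then multiplies by a weight matrix. Real models use learned, more elaborate convolutions and gated units.

```python
import math

def matmul(a, b):
    """Plain list-of-lists matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def gconv(x, adj, weight):
    """Hypothetical stand-in for Gconv(X, A; W): average each node's features over
    itself and its neighbours (row-normalised A + I), then apply W."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    a_hat = [[v / sum(row) for v in row] for row in a_hat]
    return matmul(matmul(a_hat, x), weight)

def gconv_rnn_step(x_t, h_prev, adj, w, u, b):
    """H(t) = tanh(Gconv(X(t), A; W) + Gconv(H(t-1), A; U) + b): both the input
    and the previous hidden state are graph-filtered before the recurrent update."""
    gx = gconv(x_t, adj, w)
    gh = gconv(h_prev, adj, u)
    return [[math.tanh(gx[i][k] + gh[i][k] + b[k]) for k in range(len(b))]
            for i in range(len(adj))]

# Hypothetical 3-sensor path 0-1-2, one input feature (e.g. speed), hidden size 2
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
w = [[1.0, -1.0]]                 # input -> hidden weights (1 x 2)
u = [[0.5, 0.0], [0.0, 0.5]]      # hidden -> hidden weights (2 x 2)
b = [0.0, 0.0]
h0 = [[0.0, 0.0] for _ in range(3)]
h1 = gconv_rnn_step([[1.0], [0.0], [0.0]], h0, adj, w, u, b)
h2 = gconv_rnn_step([[0.0], [0.0], [0.0]], h1, adj, w, u, b)
```

A signal observed only at sensor 0 reaches sensor 1 after one step (one hop per graph convolution) but sensor 2 only after the second step, through the recurrent hidden state. This is how spatial and temporal dependencies get combined.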

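Summary

I started reading papers for certain reasons, and it was a bit hard at first.

The difficulty was mainly unfamiliar technical vocabulary, unfamiliar concepts, and shaky fundamentals.

This survey took me quite a while to read; everyday chores kept getting in the way, so progress was slow.

Still, I got something out of it: I am no longer so intimidated by papers written entirely in English.

I now have a basic, surface-level understanding of this area and roughly know what it is about.

I hope to read more papers and keep learning from here on.

Keep going!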

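Closing remarks

Notes:

- Reference: "A Comprehensive Survey on Graph Neural Networks"

This article is only a personal study note, recording a journey from 0 to 1.

I hope it helps you a little. If you spot any mistakes, corrections are welcome.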
