Survey: A Comprehensive Survey on Graph Neural Networks

Posted 海轰Pro


Contents

- Preface

- Terminology

- Notes

- INTRODUCTION

- BACKGROUND & DEFINITION

- CATEGORIZATION AND FRAMEWORKS

- Closing remarks

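Preface

Hello, friends!

Thank you very much for reading 海轰's article. If you find any mistakes in it, please feel free to point them out~

About me ଘ(੭ˊᵕˋ)੭

Nickname: 海轰

Tags: programmer | C++ user | student

Bio: I got into programming through C and later switched to a computer science major. I have received a National Scholarship and was lucky enough to win some national and provincial awards in competitions. I have secured a recommended (exam-free) place in a graduate program.

Study tips: solid fundamentals + lots of notes + lots of coding + lots of thinking + good English!

Hard work is the only way 💪

This article is only my own study notes, used for building up my knowledge and for review.

Know what it is, and know why it is so!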

Terminology (English / Chinese)

- graph neural networks 图神经网络

- graph convolutional networks 图卷积神经网络

- graph representation learning 图表示学习

- graph autoencoder 图自动编码器

- network embedding 网络嵌入

- pattern recognition 模式识别

- data mining 数据挖掘

- object detection 目标检测

- speech recognition 语音识别

- molecule 分子

- classification 分类

- clustering 聚类

- Spatial-Temporal Graph 时空图

- eigenvalue decomposition 特征值分解

Notes

INTRODUCTION

We propose a new taxonomy to divide the state-of-the-art graph neural networks into four categories:

- recurrent graph neural networks (RecGNNs)

- convolutional graph neural networks (ConvGNNs)

- graph autoencoders (GAEs)

- spatial-temporal graph neural networks (STGNNs)

Taking image analysis as an example, an image can be represented as a regular grid in Euclidean space. A convolutional neural network (CNN) is able to exploit the shift-invariance, local connectivity, and compositionality of image data [9]. As a result, CNNs can extract local meaningful features that are shared with the entire data sets for various image analysis tasks.

Furthermore, a core assumption of existing machine learning algorithms is that instances are independent of each other. This assumption no longer holds for graph data because each instance (node) is related to others by links of various types, such as citations, friendships, and interactions.

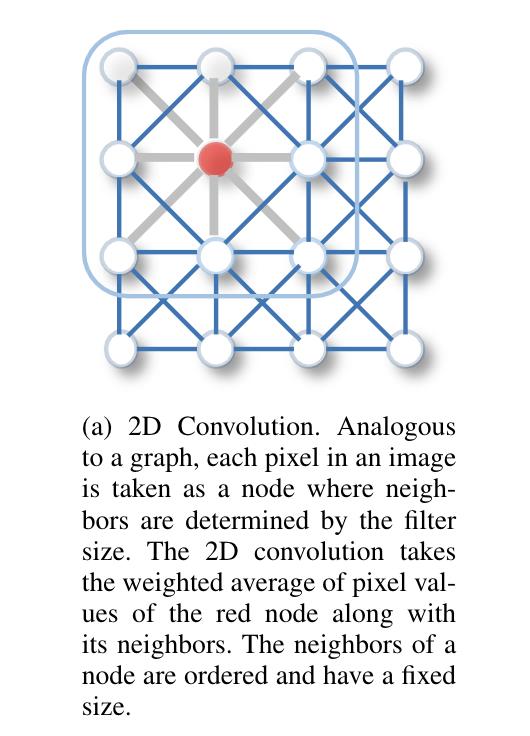

As illustrated in Figure 1, an image can be considered as a special case of graphs where pixels are connected by adjacent pixels. Similar to 2D convolution, one may perform graph convolutions by taking the weighted average of a node's neighborhood information.

2D Convolution. Analogous to a graph, each pixel in an image is taken as a node where neighbors are determined by the filter size. The 2D convolution takes the weighted average of pixel values of the red node along with its neighbors. The neighbors of a node are ordered and have a fixed size.

Graph Convolution. To get a hidden representation of the red node, one simple solution of the graph convolutional operation is to take the average value of the node features of the red node along with its neighbors. Different from image data, the neighbors of a node are unordered and variable in size.
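
The "simple solution" in the Graph Convolution caption, averaging the red node's features with those of its neighbors, can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the toy graph, the node names, and the function name `mean_graph_convolution` are made up here, and no learned weights are involved.

```python
def mean_graph_convolution(features, neighbors):
    """Return, for every node, the average of its own feature vector and
    the feature vectors of its neighbors (no learned weights)."""
    hidden = {}
    for node, own in features.items():
        # Neighbors form an unordered set of variable size, unlike pixels.
        group = [own] + [features[n] for n in neighbors[node]]
        hidden[node] = [sum(vec[d] for vec in group) / len(group)
                        for d in range(len(own))]
    return hidden


# Hypothetical toy graph: "red" has three neighbors, the others have one.
features = {"red": [1.0, 0.0], "a": [3.0, 2.0], "b": [5.0, 4.0], "c": [7.0, 6.0]}
neighbors = {"red": {"a", "b", "c"}, "a": {"red"}, "b": {"red"}, "c": {"red"}}

hidden = mean_graph_convolution(features, neighbors)
print(hidden["red"])  # [4.0, 3.0]
print(hidden["a"])    # [2.0, 1.0]
```

Note that the same function handles a node with three neighbors and a node with one neighbor, which is exactly the "unordered and variable in size" point made in the caption.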

Takeaway: traditional machine learning methods work well on data in Euclidean space, but more and more data now lives in non-Euclidean space, where those methods perform poorly. This calls for a new family of methods, GNNs, in which instances are no longer isolated and independent but are linked to one another in different ways.

BACKGROUND & DEFINITION

Network embedding

Network embedding aims at representing network nodes as low-dimensional vector representations, preserving both network topology structure and node content information, so that any subsequent graph analytics task such as classification, clustering, and recommendation can be easily performed using simple off-the-shelf machine learning algorithms (e.g., support vector machines for classification).

The main distinction between GNNs and network embedding

The main distinction between GNNs and network embedding is that GNNs are a group of neural network models which are designed for various tasks while network embedding covers various kinds of methods targeting the same task. Therefore, GNNs can address the network embedding problem through a graph autoencoder framework. On the other hand, network embedding contains other non-deep learning methods such as matrix factorization [33], [34] and random walks [35].

Graph kernel methods

Graph kernels are historically dominant techniques to solve the problem of graph classification [36], [37], [38]. These methods employ a kernel function to measure the similarity between pairs of graphs so that kernel-based algorithms like support vector machines can be used for supervised learning on graphs.
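
To make "a kernel function to measure the similarity between pairs of graphs" concrete, here is a deliberately crude example: the inner product of two degree histograms. This particular kernel is my own choice for illustration and is not one of the methods cited in the survey; the toy graphs and function names are hypothetical.

```python
from collections import Counter


def degree_histogram(adjacency):
    """Map each degree value to the number of nodes having that degree."""
    return Counter(len(nbrs) for nbrs in adjacency.values())


def degree_kernel(graph_a, graph_b):
    """Inner product of the two degree histograms (a valid, if crude, kernel)."""
    hist_a, hist_b = degree_histogram(graph_a), degree_histogram(graph_b)
    return sum(count * hist_b[degree] for degree, count in hist_a.items())


# Hypothetical toy graphs stored as adjacency lists.
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
path = {0: [1], 1: [0, 2], 2: [1]}
star = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}

print(degree_kernel(triangle, triangle))  # 9
print(degree_kernel(triangle, path))      # 3
print(degree_kernel(triangle, star))      # 0
```

Evaluating such a function on every pair of training graphs gives a kernel matrix, which is what a kernel-based classifier such as a support vector machine consumes.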

The notations used in this paper

Spatial-Temporal Graph

A spatial-temporal graph is an attributed graph where the node attributes change dynamically over time.

Definitions

CATEGORIZATION AND FRAMEWORKS

Recurrent graph neural networks (RecGNNs)

RecGNNs aim to learn node representations with recurrent neural architectures. They assume a node in a graph constantly exchanges information/messages with its neighbors until a stable equilibrium is reached.
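
The idea of exchanging messages "until a stable equilibrium is reached" can be sketched as a fixed-point iteration. The update rule below (input plus a damped mean of the neighbors' states) is an assumption chosen so that the iteration is guaranteed to converge; a real RecGNN would use a learned recurrent function instead, and the names `propagate_until_stable` and `damping` are hypothetical.

```python
def propagate_until_stable(inputs, neighbors, damping=0.5, tol=1e-9, max_iters=1000):
    """Repeat h_v <- x_v + damping * mean(h_u for u in N(v)) until the
    largest per-node change drops below tol. Returns (states, iterations)."""
    state = {node: 0.0 for node in inputs}
    for iteration in range(1, max_iters + 1):
        new_state = {}
        for node, x in inputs.items():
            nbrs = neighbors[node]
            message = sum(state[n] for n in nbrs) / len(nbrs) if nbrs else 0.0
            new_state[node] = x + damping * message
        change = max(abs(new_state[n] - state[n]) for n in inputs)
        state = new_state
        if change < tol:
            return state, iteration
    return state, max_iters


# Hypothetical path graph 0 - 1 - 2 with scalar input features.
inputs = {0: 1.0, 1: 0.0, 2: 1.0}
neighbors = {0: [1], 1: [0, 2], 2: [1]}
state, iterations = propagate_until_stable(inputs, neighbors)
# Solving the fixed-point equations by hand gives 4/3 for nodes 0 and 2
# and 2/3 for node 1, which the iteration approaches.
print(state, iterations)
```

Because the damping factor is below 1, every round shrinks the remaining error, so the states settle instead of oscillating.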

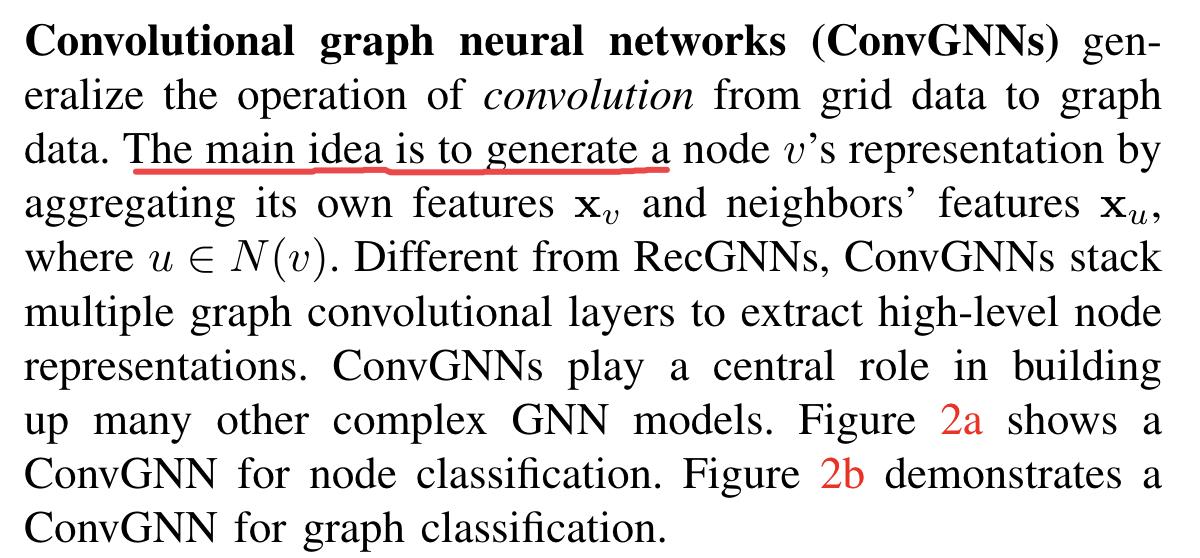

Convolutional graph neural networks (ConvGNNs)

A ConvGNN for node classification

A ConvGNN with multiple graph convolutional layers. A graph convolutional layer encapsulates each node's hidden representation by aggregating feature information from its neighbors. After feature aggregation, a non-linear transformation is applied to the resulting outputs. By stacking multiple layers, the final hidden representation of each node receives messages from a further neighborhood.
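
The last sentence of the caption, that stacking layers lets each node receive messages from a further neighborhood, is easy to check on a toy graph. In this sketch each layer is a plain mean aggregation followed by ReLU, with learned weights left out for brevity; the path graph and the helper names are assumptions made for illustration.

```python
def relu(value):
    return max(0.0, value)


def conv_layer(hidden, neighbors):
    """One layer: average each node with its neighbors, then apply ReLU."""
    out = {}
    for node in hidden:
        group = [hidden[node]] + [hidden[n] for n in neighbors[node]]
        out[node] = relu(sum(group) / len(group))
    return out


def receptive_nodes(hidden):
    """Nodes whose representation has been influenced by the signal."""
    return sorted(node for node, value in hidden.items() if value > 0.0)


# Hypothetical path graph 0 - 1 - 2 - 3 with a signal placed only on node 3.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
hidden = {0: 0.0, 1: 0.0, 2: 0.0, 3: 1.0}

reach = []
for _ in range(3):
    hidden = conv_layer(hidden, neighbors)
    reach.append(receptive_nodes(hidden))
print(reach)  # [[2, 3], [1, 2, 3], [0, 1, 2, 3]]
```

After one layer only the direct neighbor of node 3 has seen the signal; it takes three layers for it to reach node 0, three hops away.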

A ConvGNN for graph classification

A ConvGNN with pooling and readout layers for graph classification [21]. A graph convolutional layer is followed by a pooling layer to coarsen a graph into sub-graphs so that node representations on coarsened graphs represent higher graph-level representations. A readout layer summarizes the final graph representation by taking the sum/mean of hidden representations of sub-graphs.
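
The pooling and readout steps in this caption can be sketched as follows. The cluster assignment is hard-coded here as an assumption (in practice it is computed by the pooling method), and `pool` and `readout` are hypothetical helper names rather than functions from any library.

```python
def mean_vectors(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def pool(hidden, clusters):
    """Coarsen the graph: each cluster of nodes becomes one super-node
    whose representation is the mean of its members."""
    return [mean_vectors([hidden[n] for n in members]) for members in clusters]


def readout(vectors, mode="mean"):
    """Collapse a set of representations into one graph-level vector."""
    if mode == "sum":
        return [sum(column) for column in zip(*vectors)]
    return mean_vectors(vectors)


# Hypothetical node representations produced by a graph convolutional layer.
hidden = {0: [1.0, 2.0], 1: [3.0, 4.0], 2: [5.0, 6.0], 3: [7.0, 8.0]}
clusters = [[0, 1], [2, 3]]  # assumed cluster assignment

coarse = pool(hidden, clusters)
print(coarse)                   # [[2.0, 3.0], [6.0, 7.0]]
print(readout(coarse, "mean"))  # [4.0, 5.0]
print(readout(coarse, "sum"))   # [8.0, 10.0]
```

Whatever the number of nodes in the input graph, the readout always returns a single fixed-length vector, which is what a downstream classifier needs.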

Graph autoencoders (GAEs)

GAEs are unsupervised learning frameworks which encode nodes/graphs into a latent vector space and reconstruct graph data from the encoded information. GAEs are used to learn network embeddings and graph generative distributions.

- For network embedding, GAEs learn latent node representations through reconstructing graph structural information such as the graph adjacency matrix.

- For graph generation, some methods generate nodes and edges of a graph step by step while other methods output a graph all at once.

A GAE for network embedding [61]. The encoder uses graph convolutional layers to get a network embedding for each node. The decoder computes the pair-wise distance given network embeddings. After applying a non-linear activation function, the decoder reconstructs the graph adjacency matrix. The network is trained by minimizing the discrepancy between the real adjacency matrix and the reconstructed adjacency matrix.
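
The decoder half of this caption can be sketched as below. Two assumptions are made that the caption does not spell out: the pair-wise score is taken to be the inner product of two embeddings, and the "discrepancy" is measured with binary cross-entropy. The embeddings are typed in by hand rather than produced by an encoder.

```python
import math


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def decode(embeddings):
    """Reconstruct adjacency probabilities as sigmoid(z_i . z_j)."""
    n = len(embeddings)
    return [[sigmoid(sum(a * b for a, b in zip(embeddings[i], embeddings[j])))
             for j in range(n)] for i in range(n)]


def reconstruction_loss(adjacency, reconstructed):
    """Mean binary cross-entropy between real and reconstructed entries."""
    total, count = 0.0, 0
    for real_row, prob_row in zip(adjacency, reconstructed):
        for real, prob in zip(real_row, prob_row):
            total -= real * math.log(prob) + (1 - real) * math.log(1 - prob)
            count += 1
    return total / count


# Hypothetical graph: nodes 0 and 1 are linked (self-loops included), node 2 is isolated.
adjacency = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]
good = [[2.0, 0.0], [2.0, 0.0], [0.0, 2.0]]  # embeddings that mirror the structure
bad = [[2.0, 0.0], [0.0, 2.0], [2.0, 0.0]]   # embeddings that contradict it

loss_good = reconstruction_loss(adjacency, decode(good))
loss_bad = reconstruction_loss(adjacency, decode(bad))
print(loss_good < loss_bad)  # True
```

Training a GAE amounts to adjusting the encoder so that its embeddings move from the "bad" kind toward the "good" kind, that is, toward a lower reconstruction loss.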

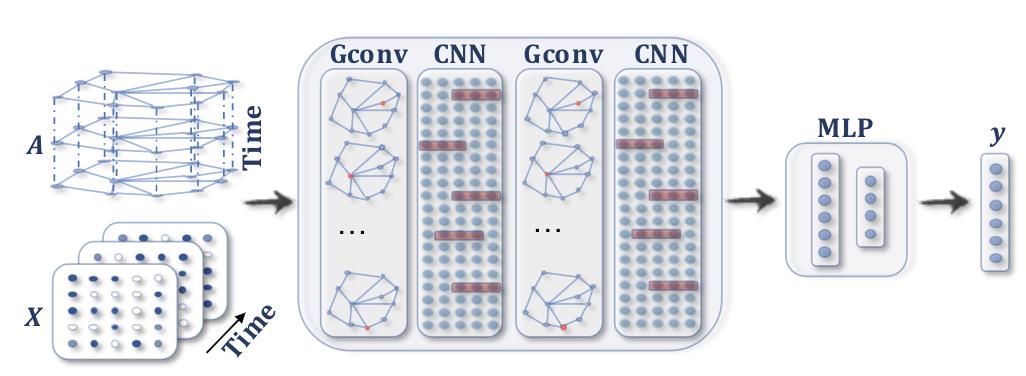

Spatial-temporal graph neural networks (STGNNs)

STGNNs aim to learn hidden patterns from spatial-temporal graphs, which become increasingly important in a variety of applications such as traffic speed forecasting [72], driver maneuver anticipation [73], and human action recognition [75]. The key idea of STGNNs is to consider spatial dependency and temporal dependency at the same time. Many current approaches integrate graph convolutions to capture spatial dependency with RNNs or CNNs to model the temporal dependency. Figure 2 shows an STGNN for spatial-temporal graph forecasting.

An STGNN for spatial-temporal graph forecasting [74]. A graph convolutional layer is followed by a 1D-CNN layer. The graph convolutional layer operates on A and X(t) to capture the spatial dependency, while the 1D-CNN layer slides over X along the time axis to capture the temporal dependency. The output layer is a linear transformation, generating a prediction for each node, such as its future value at the next time step.
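
The three stages in this caption (graph convolution on A and X(t), a 1D-CNN along the time axis, a linear output layer) can be chained in a small sketch. Everything concrete here is assumed for illustration: scalar node features, mean aggregation as the graph convolution, a fixed two-tap temporal kernel instead of learned filters, and the function names.

```python
def spatial_step(adjacency, x_t):
    """Graph convolution at one time step: mean over each node and its neighbors."""
    out = []
    for i, row in enumerate(adjacency):
        group = [x_t[i]] + [x_t[j] for j, linked in enumerate(row) if linked]
        out.append(sum(group) / len(group))
    return out


def temporal_conv(series, kernel):
    """1D convolution (no padding) sliding along the time axis of one node."""
    width = len(kernel)
    return [sum(k * series[t + d] for d, k in enumerate(kernel))
            for t in range(len(series) - width + 1)]


def stgnn_forecast(adjacency, x, kernel, weight=1.0, bias=0.0):
    """Spatial graph convolution per time step, then a temporal 1D-CNN per
    node, then a linear output layer applied to the last temporal feature."""
    spatial = [spatial_step(adjacency, x_t) for x_t in x]  # indexed [time][node]
    per_node = list(zip(*spatial))                         # indexed [node][time]
    temporal = [temporal_conv(list(series), kernel) for series in per_node]
    return [weight * features[-1] + bias for features in temporal]


# Hypothetical data: 3 sensors on a path graph, 4 time steps of scalar readings.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
X = [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [3.0, 3.0, 3.0], [4.0, 4.0, 4.0]]
prediction = stgnn_forecast(A, X, kernel=[-1.0, 2.0])  # linear-extrapolation kernel
print(prediction)  # [5.0, 5.0, 5.0]
```

The kernel [-1, 2] simply extrapolates the last two values linearly, so readings that grow by 1 per step yield a forecast of 5 for the next step. A trained model would learn its temporal filters from data.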

Frameworks

With the graph structure and node content information as inputs, the outputs of GNNs can focus on different graph analytics tasks with one of the following mechanisms:

Node-level

Node-level outputs relate to node regression and node classification tasks. RecGNNs and ConvGNNs can extract high-level node representations by information propagation/graph convolution. With a multi-layer perceptron or a softmax layer as the output layer, GNNs are able to perform node-level tasks in an end-to-end manner.

Edge-level

Edge-level outputs relate to the edge classification and link prediction tasks. With two nodes' hidden representations from GNNs as inputs, a similarity function or a neural network can be utilized to predict the label/connection strength of an edge.
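
As a small illustration of the edge-level mechanism, the sketch below scores candidate edges with cosine similarity between the two endpoints' hidden representations. Cosine similarity is just one possible "similarity function", chosen here as an assumption; the hidden vectors and the helper name `rank_candidate_links` are made up.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_candidate_links(hidden, candidates):
    """Score each candidate edge by the similarity of its endpoints'
    hidden representations and sort from strongest to weakest."""
    scored = [(cosine_similarity(hidden[u], hidden[v]), (u, v)) for u, v in candidates]
    return [edge for score, edge in sorted(scored, reverse=True)]


# Hypothetical hidden representations coming out of a GNN.
hidden = {"a": [1.0, 0.0], "b": [2.0, 0.0], "c": [0.0, 1.0], "d": [1.0, 1.0]}
ranked = rank_candidate_links(hidden, [("a", "c"), ("a", "b"), ("a", "d")])
print(ranked)  # [('a', 'b'), ('a', 'd'), ('a', 'c')]
```

For link prediction one would keep the top-ranked pairs; for edge classification the score would instead be fed to a classifier.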

Graph-level

Graph-level outputs relate to the graph classification task. To obtain a compact representation on the graph level, GNNs are often combined with pooling and readout operations.

Training Frameworks

Many GNNs (e.g., ConvGNNs) can be trained in a (semi-)supervised or purely unsupervised way within an end-to-end learning framework, depending on the learning tasks and label information available at hand.

Semi-supervised learning for node-level classification

Given a single network with partial nodes being labeled and others remaining unlabeled, ConvGNNs can learn a robust model that effectively identifies the class labels for the unlabeled nodes [22]. To this end, an end-to-end framework can be built by stacking a couple of graph convolutional layers followed by a softmax layer for multi-class classification.
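
What makes this setting "semi-supervised" is that the loss is computed only on the labeled nodes, while predictions are made for every node. A minimal sketch of the softmax layer and such a masked loss is shown below; the per-node scores are typed in by hand as a stand-in for the output of the stacked graph convolutional layers, and the function names are hypothetical.

```python
import math


def softmax(logits):
    shifted = [value - max(logits) for value in logits]  # for numerical stability
    exps = [math.exp(value) for value in shifted]
    total = sum(exps)
    return [value / total for value in exps]


def masked_cross_entropy(logits, labels):
    """Average cross-entropy over labeled nodes; nodes labeled None are skipped."""
    losses = [-math.log(softmax(logits[n])[label])
              for n, label in labels.items() if label is not None]
    return sum(losses) / len(losses)


def predict(logits):
    """Class with the highest score for every node, labeled or not."""
    return {n: max(range(len(row)), key=row.__getitem__) for n, row in logits.items()}


# Hypothetical per-node class scores from the graph convolutional layers.
logits = {0: [2.0, 0.0], 1: [0.0, 2.0], 2: [1.5, 0.5], 3: [0.2, 1.8]}
labels = {0: 0, 1: 1, 2: None, 3: None}  # only nodes 0 and 1 are labeled

loss = masked_cross_entropy(logits, labels)
predictions = predict(logits)
print(predictions)  # {0: 0, 1: 1, 2: 0, 3: 1}
```

The unlabeled nodes 2 and 3 contribute nothing to the loss directly, but in a real model their features still influence training because the graph convolutions mix them into the labeled nodes' representations.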

Supervised learning for graph-level classification

Graph-level classification aims to predict the class label(s) for an entire graph [52], [54], [78], [79]. The end-to-end learning for this task can be realized with a combination of graph convolutional layers, graph pooling layers, and/or readout layers.

- Graph convolutional layers are responsible for extracting high-level node representations.

- Graph pooling layers play the role of down-sampling, which coarsens each graph into a sub-structure each time.

- A readout layer collapses node representations of each graph into a graph representation.

By applying a multi-layer perceptron and a softmax layer to graph representations, we can build an end-to-end framework for graph classification. An example is given in Fig 2b.

Unsupervised learning for graph embedding

When no class labels are available in graphs, we can learn the graph embedding in a purely unsupervised way in an end-to-end framework. These algorithms exploit edge-level information in two ways.

- One simple way is to adopt an autoencoder framework where the encoder employs graph convolutional layers to embed the graph into the latent representation upon which a decoder is used to reconstruct the graph structure [61], [62].

- Another popular way is to utilize the negative sampling approach which samples a portion of node pairs as negative pairs while existing node pairs with links in the graphs are positive pairs. Then a logistic regression layer is applied to distinguish between positive and negative pairs.
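
The negative sampling approach in the second bullet can be sketched as follows. The assumptions are mine: unlinked pairs are sampled uniformly with a fixed seed, the "logistic regression layer" is reduced to a sigmoid over the inner product of two node embeddings, and the embeddings are typed in by hand instead of being learned.

```python
import itertools
import math
import random


def sample_negative_pairs(num_nodes, edges, count, seed=0):
    """Draw `count` node pairs that are not linked in the graph."""
    linked = {frozenset(edge) for edge in edges}
    non_edges = [pair for pair in itertools.combinations(range(num_nodes), 2)
                 if frozenset(pair) not in linked]
    return random.Random(seed).sample(non_edges, count)


def pair_probability(embeddings, pair):
    """Logistic function applied to the inner product of two node embeddings."""
    u, v = pair
    score = sum(a * b for a, b in zip(embeddings[u], embeddings[v]))
    return 1.0 / (1.0 + math.exp(-score))


def negative_sampling_loss(embeddings, positives, negatives):
    """Logistic loss: positive pairs should score near 1, negative pairs near 0."""
    loss = -sum(math.log(pair_probability(embeddings, p)) for p in positives)
    loss -= sum(math.log(1.0 - pair_probability(embeddings, p)) for p in negatives)
    return loss / (len(positives) + len(negatives))


# Hypothetical graph with two linked pairs and hand-written embeddings.
edges = [(0, 1), (2, 3)]
embeddings = [[1.0, -1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, 1.0]]

negatives = sample_negative_pairs(4, edges, count=2)
loss = negative_sampling_loss(embeddings, edges, negatives)
print(negatives, loss)
```

Sampling only a portion of the unlinked pairs keeps the cost manageable on large graphs, where the number of non-edges grows roughly with the square of the number of nodes.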

Summary of RecGNNs and ConvGNNs.

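Closing remarks

Notes:

- Reference: "A Comprehensive Survey on Graph Neural Networks"

This article is only a set of study notes, recording the journey from 0 to 1.

I hope it helps you a little. If you spot any mistakes, please point them out.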
