Running the Hadoop WordCount Example on Windows

Posted by yjyyjy



1. Download the WordCount jar file

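Download the folder and put it in a directory of your own (this post uses C:/Learning/lessons/Hadoop-class/hadoopMapRedSimple): https://github.com/yjy24/bigdata_learning/blob/master/hadoopMapRedSimple.zip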

2. Start Hadoop

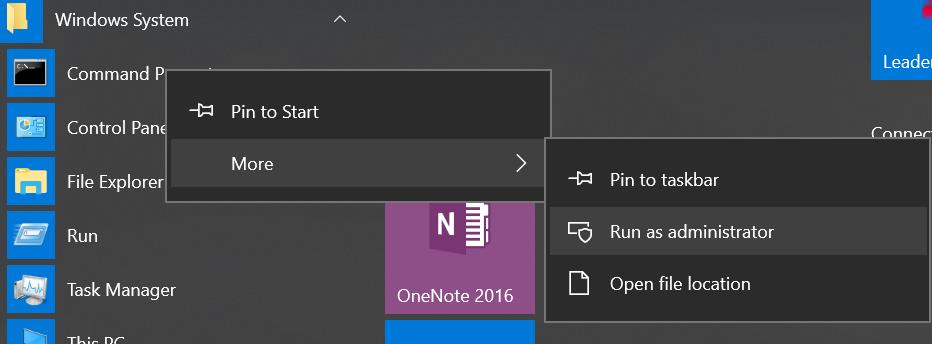

- Run Windows cmd as Administrator.

- Start all Hadoop services. If you have not set up a Hadoop environment yet, see: https://www.cnblogs.com/yjyyjy/p/12731968.html

C:\WINDOWS\system32>start-all.cmd

This script is Deprecated. Instead use start-dfs.cmd and start-yarn.cmd

starting yarn daemons

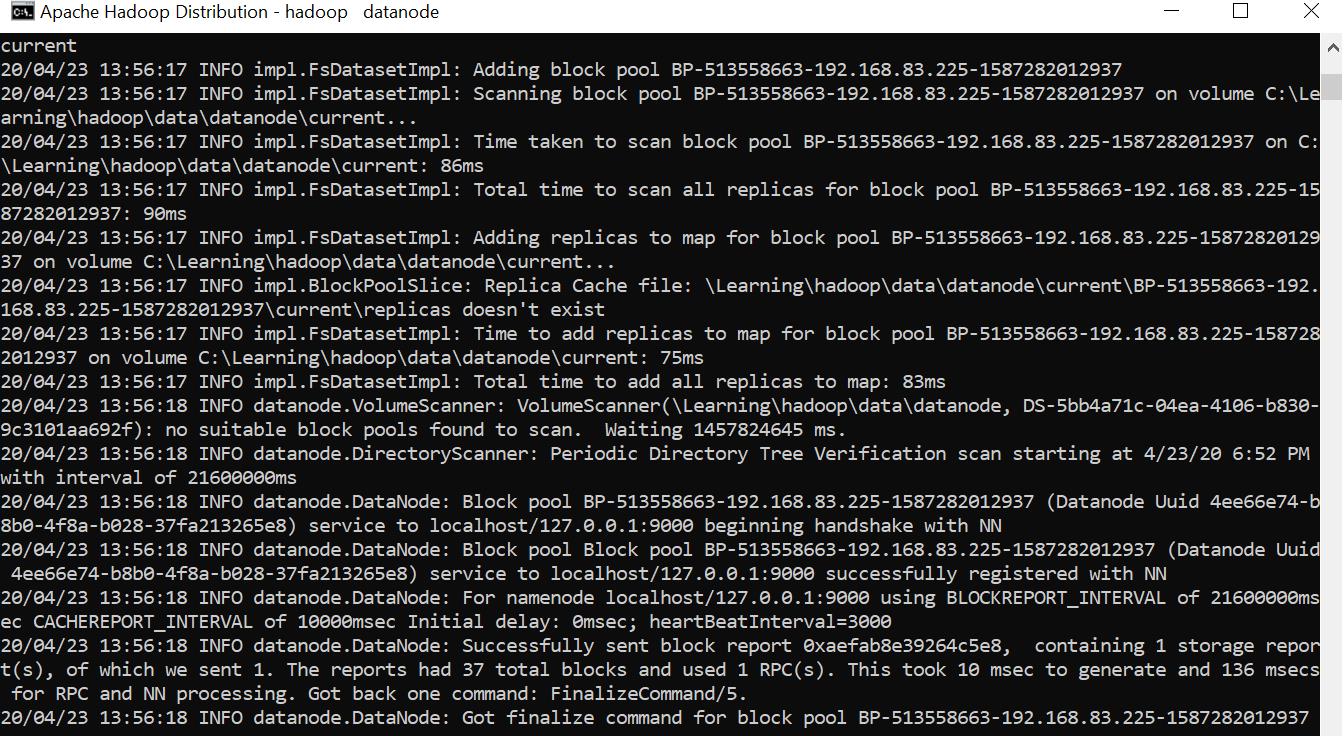

DataNode log:

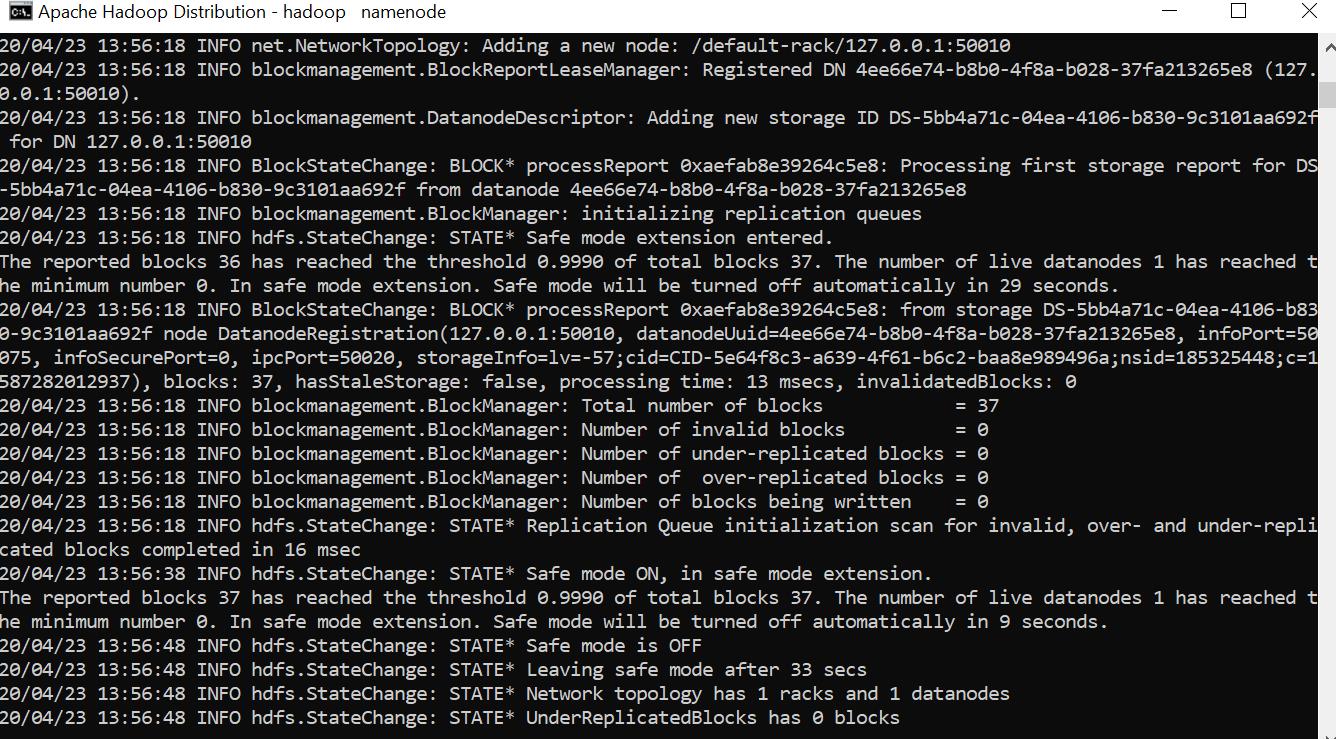

NameNode log:

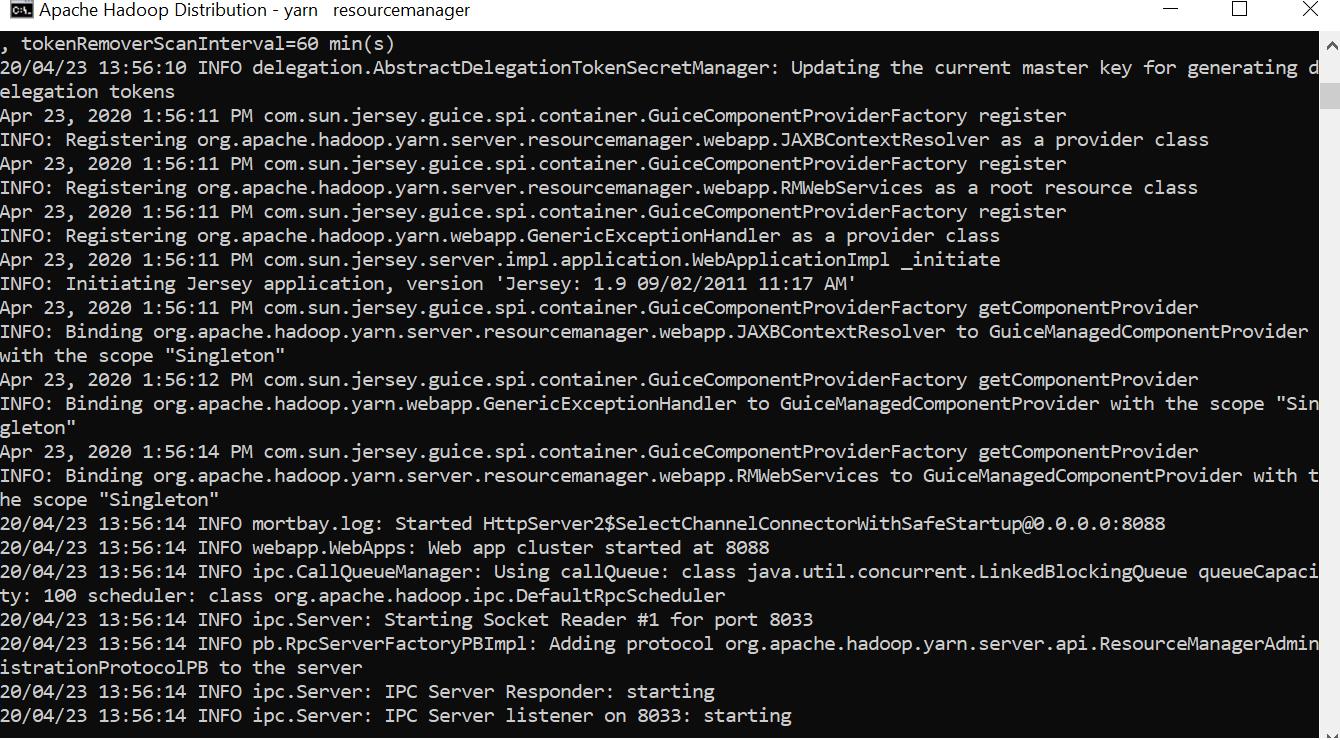

YARN ResourceManager log:

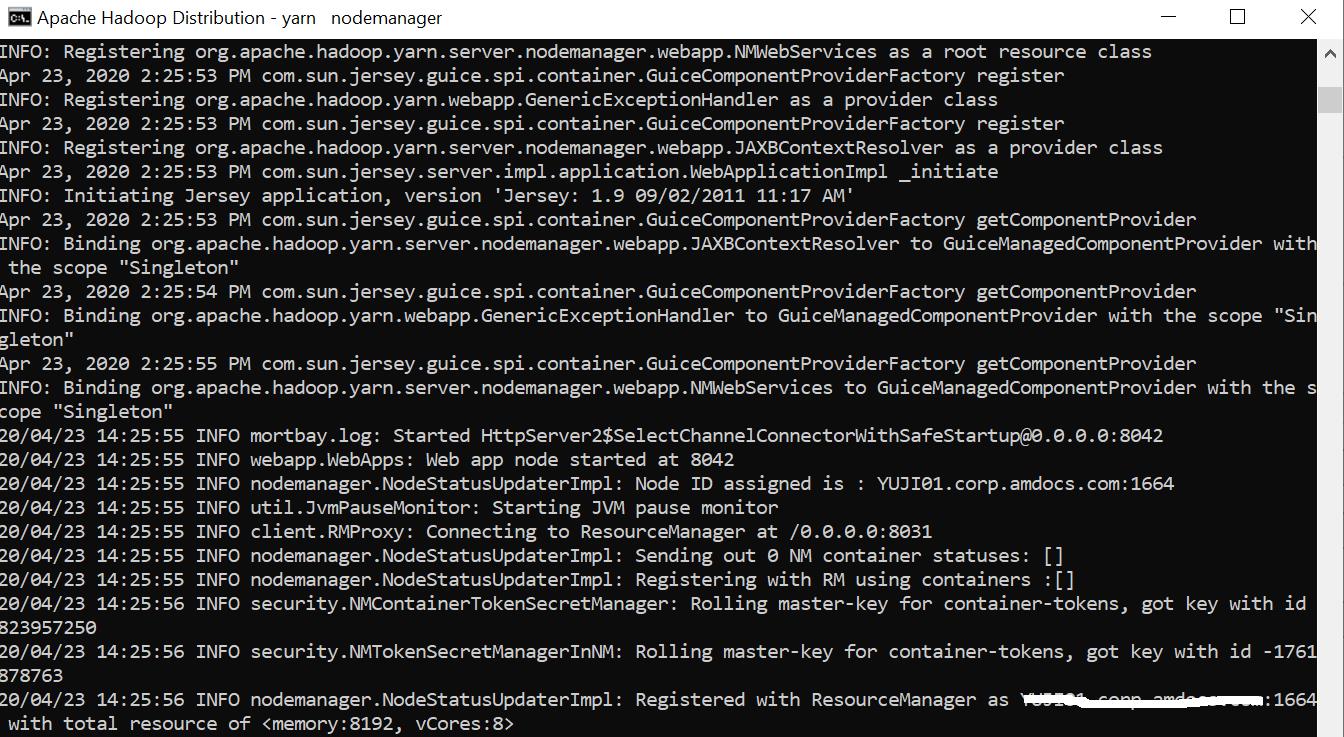

YARN NodeManager log:
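Once start-all.cmd has returned, one quick sanity check is whether the daemons are actually listening. The Python sketch below only probes TCP ports; it assumes the ResourceManager RPC port 8032 and the YARN web UI port 8088, both taken from the job log later in this post. Ports for the other daemons depend on your Hadoop version and configuration, so the port table is an assumption you should adjust.

```python
import socket
from typing import Dict, Mapping

# Assumed ports, taken from this post's job log: ResourceManager RPC (8032)
# and the YARN web UI in the tracking URL (8088). Adjust for your setup.
DEFAULT_PORTS: Mapping[str, int] = {"ResourceManager RPC": 8032, "YARN web UI": 8088}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_daemons(host: str = "localhost",
                  ports: Mapping[str, int] = DEFAULT_PORTS) -> Dict[str, bool]:
    """Map each service name to whether its port accepts connections."""
    return {name: port_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    for name, up in check_daemons().items():
        print(f"{name}: {'up' if up else 'not reachable'}")
```

A port that accepts connections only shows that something is listening; the daemon logs shown above remain the authoritative place to look for startup errors.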

3. Run the program

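- Create the HDFS input directory. If the NameNode is still in safe mode right after startup, the directory cannot be created; turn safe mode off and try again. (hadoop dfsadmin still works but is deprecated, as the warning shows; the current form is hdfs dfsadmin -safemode leave.)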

C:\WINDOWS\system32>hadoop fs -mkdir /input_dirs

mkdir: Cannot create directory /input_dirs. Name node is in safe mode.

C:\WINDOWS\system32>hadoop dfsadmin -safemode leave

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Safe mode is OFF

C:\WINDOWS\system32>hadoop fs -mkdir /input_dirs
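Forcing safe mode off is fine for a local test. Alternatively, hdfs dfsadmin -safemode get reports the current state, and hdfs dfsadmin -safemode wait blocks until the NameNode leaves safe mode on its own. The Python sketch below wraps the get variant. It assumes the hdfs launcher is on PATH and that its output contains "Safe mode is ON" or "Safe mode is OFF", as in the transcript above. The helper names are hypothetical.

```python
import shutil
import subprocess


def safe_mode_is_on(report: str) -> bool:
    """Interpret the text printed by `hdfs dfsadmin -safemode get`.

    Assumes a line such as "Safe mode is OFF"; HA setups may append the
    NameNode address, so a substring check is used.
    """
    return "Safe mode is ON" in report


def query_safe_mode() -> bool:
    """Run `hdfs dfsadmin -safemode get` and report whether safe mode is on."""
    # shutil.which honours PATHEXT on Windows, so it also finds hdfs.cmd.
    hdfs = shutil.which("hdfs")
    if hdfs is None:
        raise FileNotFoundError("the hdfs command is not on PATH")
    result = subprocess.run(
        [hdfs, "dfsadmin", "-safemode", "get"],
        capture_output=True, text=True, check=True,
    )
    return safe_mode_is_on(result.stdout)
```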

- Copy the input file into the HDFS directory

C:\WINDOWS\system32>hadoop fs -put C:/Learning/lessons/Hadoop-class/hadoopMapRedSimple/inputfile.txt /input_dirs

- Print the file's contents to verify that it is in place

C:\WINDOWS\system32>hadoop dfs -cat /input_dirs/inputfile.txt

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

23 23 27 43 24 25 26 26 26 26 25 26 25 26 27 28 28 28 30 31 31 31 30 30 30 29 31 32 32 32 33 34 35 36 36 34 34 34 34 39 38 39 39 39 41 42 43 40 39 38 38 40 38 39 39 39 39 41 41 41 28 40 39 39 45 23 23 27 43 24 25 26 26 26 26 25 26 25 26 27 28 28 28 30 31 31 31 30 30 30 29 31 32 32 32 33 34 35 36 36 34 34 34 34 39 38 39 39 39 41 42 43 40 39 38 38 40 38 39 39 39 39 41 41 41 28 40 39 39 45 23 23 27 43 24 25 26 26 26 26 25 26 25 26 27 28 28 28 30 31 31 31 30 30 30 29 31 32 32 32 33 34 35 36 36 34 34 34 34 39 38 39 39 39 41 42 43 40 39 38 38 40 38 39 39 39 39 41 41 41 28 40 39 39 45 23 23 27 43 24 25 26 26 26 26 25 26 25 26 27 28 28 28 30 31 31 31 30 30 30 29 31 32 32 32 33 34 35 36 36 34 34 34 34 39 38 39 39 39 41 42 43 40 39 38 38 40 38 39 39 39 39 41 41 41 28 40 39 39 45 23 23 27 43 24 25 26 26 26 26 25 26 25 26 27 28 28 28 30 31 31 31 30 30 30 29 31 32 32 32 33 34 35 36 36 34 34 34 34 39 38 39 39 39 41 42 43 40 39 38 38 40 38 39 39 39 39 41 41 41 28 40 39 39 45 23 23 27 43 24 25 26 26 26 26 25 26 25 26 27 28 28 28 30 31 31 31 30 30 30 29 31 32 32 32 33 34 35 36 36 34 34 34 34 39 38 39 39 39 41 42 43 40 39 38 38 40 38 39 39 39 39 41 41 41 28 40 39 39 45

- Run WordCount

C:\WINDOWS\system32>hadoop jar C:/Learning/lessons/Hadoop-class/hadoopMapRedSimple/MapReduceClient.jar wordcount /input_dirs /output_dirs

20/04/23 14:49:52 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

20/04/23 14:49:55 INFO input.FileInputFormat: Total input files to process : 1

20/04/23 14:49:55 INFO mapreduce.JobSubmitter: number of splits:1

20/04/23 14:49:56 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

20/04/23 14:49:56 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1587642121010_0001

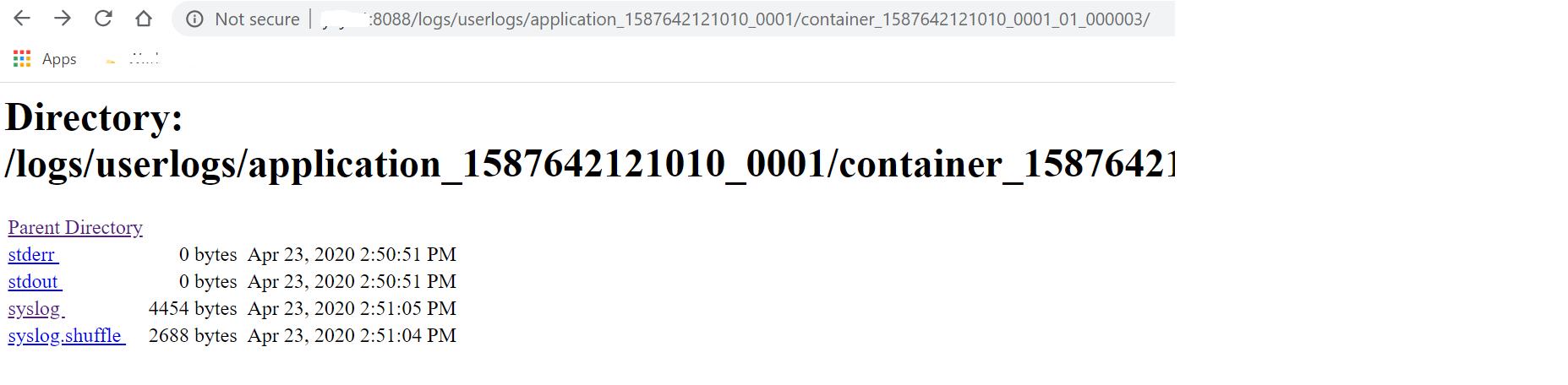

20/04/23 14:49:58 INFO impl.YarnClientImpl: Submitted application application_1587642121010_0001

20/04/23 14:49:58 INFO mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1587642121010_0001/

20/04/23 14:49:58 INFO mapreduce.Job: Running job: job_1587642121010_0001

20/04/23 14:50:28 INFO mapreduce.Job: Job job_1587642121010_0001 running in uber mode : false

20/04/23 14:50:28 INFO mapreduce.Job: map 0% reduce 0%

20/04/23 14:50:47 INFO mapreduce.Job: map 100% reduce 0%

20/04/23 14:51:07 INFO mapreduce.Job: map 100% reduce 100%

20/04/23 14:51:08 INFO mapreduce.Job: Job job_1587642121010_0001 completed successfully

20/04/23 14:51:08 INFO mapreduce.Job: Counters: 49

......
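What the job computes can be mimicked in memory to make the data flow concrete. The Python sketch below is not Hadoop's API, and its function names are hypothetical. It assumes the jar's wordcount behaves like Hadoop's stock WordCount example, which tokenizes each line with StringTokenizer (splitting on whitespace), emits (word, 1) in the map phase, groups by key during the shuffle, and sums each group in the reduce phase. With a single reducer the output keys come out in sorted order, which the sketch imitates by sorting string keys.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (token, 1) for every whitespace-separated token."""
    for line in lines:
        for token in line.split():
            yield token, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group the emitted values by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> List[Tuple[str, int]]:
    """Reducer: sum each key's values, emitting keys in sorted string order."""
    return [(key, sum(values)) for key, values in sorted(groups.items())]


def word_count(lines: Iterable[str]) -> List[Tuple[str, int]]:
    return reduce_phase(shuffle(map_phase(lines)))


if __name__ == "__main__":
    # Tab-separated output, like the job's part files.
    for word, count in word_count(["23 23 27 43 24", "25 26 26"]):
        print(f"{word}\t{count}")
```

Feeding it the contents of inputfile.txt (the 65-number sequence shown above, repeated six times) reproduces the per-number counts the job writes to /output_dirs.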

- View the results: how many times each number appears in the file

C:\WINDOWS\system32>hadoop dfs -cat /output_dirs/*

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

| Number | Count |
|---|---|
| 23 | 12 |
| 24 | 6 |
| 25 | 18 |
| 26 | 36 |
| 27 | 12 |
| 28 | 24 |
| 29 | 6 |
| 30 | 24 |
| 31 | 24 |
| 32 | 18 |
| 33 | 6 |
| 34 | 30 |
| 35 | 6 |
| 36 | 12 |
| 38 | 24 |
| 39 | 66 |
| 40 | 18 |
| 41 | 24 |
| 42 | 6 |
| 43 | 12 |
| 45 | 6 |
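To post-process the result on the client side, one option is to redirect the output of hadoop fs -cat /output_dirs/* into a local file and parse it. The Python sketch below assumes the default TextOutputFormat layout of one key, a tab, and a count per line; the helper names are hypothetical. Lines without a tab, such as the DEPRECATED warning, are skipped. For this run, the most frequent number is 39, with 66 occurrences.

```python
from typing import Dict, List, Tuple


def parse_wordcount_output(text: str, sep: str = "\t") -> Dict[str, int]:
    """Parse `key<sep>count` lines (assumed TextOutputFormat default: a tab)."""
    counts: Dict[str, int] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and shell noise such as the DEPRECATED warning.
        if not line or sep not in line:
            continue
        key, value = line.rsplit(sep, 1)
        counts[key] = int(value)
    return counts


def most_common(counts: Dict[str, int], n: int = 3) -> List[Tuple[str, int]]:
    """Return the n most frequent keys, highest count first (ties by key)."""
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:n]
```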
