Day 155 Study Log (Kubernetes emptyDir, NFS, PV and PVC, Cluster Resource Monitoring)

Posted doudoutj


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and covers Day 155 Study Log (Kubernetes emptyDir, NFS, PV and PVC, cluster resource monitoring). We hope you find it useful.

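Files on a container's disk are ephemeral, which causes problems for important applications running in containers. First, when a container crashes, kubelet restarts it, but the files in the container are lost: the container starts again in a clean state (the image's original state). Second, when several containers run in the same Pod, they often need to share files. The Kubernetes Volume abstraction solves both problems.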

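A Kubernetes volume has an explicit lifetime: the same as the Pod that encloses it. As a result, a volume outlives any individual container in the Pod, and data is preserved when a container restarts. When the Pod ceases to exist, the volume goes away too (this applies to ephemeral types such as emptyDir; data on network storage such as NFS, covered below, outlives the Pod). More importantly, Kubernetes supports many types of volumes, and a Pod can use any number of them at the same time.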

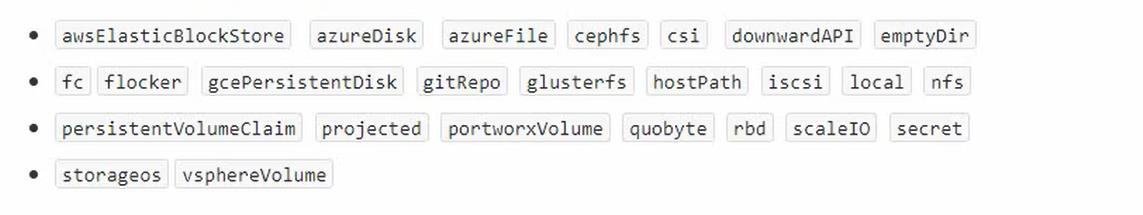

Kubernetes supports the following types of volumes:

emptyDir

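An emptyDir volume is created when a Pod is assigned to a node, and it exists as long as that Pod keeps running on that node. As the name says, it starts out empty. All containers in the Pod can read and write the same files in the emptyDir volume, even though each container may mount it at the same or at a different path. When the Pod is removed from the node for any reason, the data in the emptyDir is deleted permanently.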

Note: a container crash does not remove the Pod from the node, so data in an emptyDir volume survives container crashes.
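To make the file-sharing behaviour concrete, here is a minimal sketch, written as a shell function that prints a Pod manifest, in which two containers mount the same emptyDir at different paths. The Pod name `emptydir-demo`, the container names and the `busybox` image are hypothetical choices for illustration, not part of the original setup, and the nodes must be able to pull that image.

```shell
#!/usr/bin/env bash
# Sketch: print a Pod manifest where two containers share one emptyDir volume.
# Names (emptydir-demo, writer, reader, shared) and the busybox image are
# illustrative assumptions.
shared_emptydir_pod() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo
spec:
  containers:
  - name: writer
    image: busybox
    # appends a timestamp to the shared file every 5 seconds
    command: ["sh", "-c", "while true; do date >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: shared
      mountPath: /data
  - name: reader
    image: busybox
    # sees the same file, mounted under a different path
    command: ["sh", "-c", "touch /shared/out.txt; tail -f /shared/out.txt"]
    volumeMounts:
    - name: shared
      mountPath: /shared
  volumes:
  - name: shared
    emptyDir: {}
EOF
}
```

Usage, assuming a working cluster: `shared_emptydir_pod | kubectl apply -f -`, then `kubectl logs emptydir-demo -c reader` should show the timestamps written by the `writer` container, because both paths point at the same emptyDir.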

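Uses of emptyDir:

- Scratch space, e.g. for a disk-based merge sort

- Checkpointing a long computation so it can recover from crashes

- Holding files that a content-manager container fetches while a web server container serves the data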

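Note: the image here has to be picked from the output of `docker images` on a node, because the images were pulled from a private registry. I couldn't find a suitable one, so the steps below ran into errors.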

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - image: registry.aliyuncs.com/google_containers/pause
    name: test-container
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  volumes:
  - name: cache-volume
    emptyDir: {}
```

```shell
[root@master volume]# vim em.yaml
[root@master volume]# kubectl apply -f em.yaml
pod/test-pd configured
[root@master volume]# kubectl get pod
NAME                     READY   STATUS      RESTARTS   AGE
ds-test-r7ct2            1/1     Running     0          8m20s
ds-test-sp7z4            1/1     Running     0          8m20s
ds-test-wm84n            1/1     Running     0          8m21s
hello-1623503580-5qx6h   0/1     Completed   0          2m24s
hello-1623503640-4xdsv   0/1     Completed   0          84s
hello-1623503700-kpwz6   0/1     Completed   0          23s
test-pd                  1/1     Running     0          6m29s
```

The problem:

```shell
[root@master volume]# kubectl exec test-pd -it -- /bin/sh
OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: "/bin/sh": stat /bin/sh: no such file or directory: unknown
command terminated with exit code 126
[root@master volume]# kubectl exec -it test-pd /bin/sh
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: "/bin/sh": stat /bin/sh: no such file or directory: unknown
command terminated with exit code 126
[root@master volume]# kubectl exec test-pd -it -- /bin/sh
OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: "/bin/sh": stat /bin/sh: no such file or directory: unknown
command terminated with exit code 126
[root@master volume]#
```
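The failure comes from the image, not from the volume: the `pause` image is built from scratch and contains essentially just the `/pause` binary, so there is no `/bin/sh` to exec into (the `-- /bin/sh` form itself is the correct, non-deprecated syntax). Below is a sketch of a `test-pd` variant that can be exec'd into, assuming the nodes can pull `busybox`; any pullable image that ships a shell would work the same way, and the `sleep` command only keeps the container alive.

```shell
#!/usr/bin/env bash
# Sketch: the same test-pd Pod, but with an image that contains /bin/sh.
# busybox and "sleep 3600" are assumptions, not from the original post.
test_pd_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - image: busybox
    name: test-container
    command: ["sleep", "3600"]
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  volumes:
  - name: cache-volume
    emptyDir: {}
EOF
}

# Typical usage (requires a cluster):
#   kubectl delete pod test-pd                # a Pod's container command can't be changed in place
#   test_pd_manifest | kubectl apply -f -
#   kubectl exec -it test-pd -- /bin/sh
#   kubectl exec test-pd -- sh -c 'echo hello > /cache/a.txt && cat /cache/a.txt'
```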

nfs

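An emptyDir volume is node-local storage: once the Pod is deleted or recreated, its data is gone. To keep data across Pod restarts, it has to be stored persistently.

1. NFS network storage

With NFS, the data is still there after the Pod restarts.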

Step 1: Set up a dedicated server as the NFS server

(1) Install NFS

```shell
[root@kuangshen ~]# yum install -y nfs-utils
```

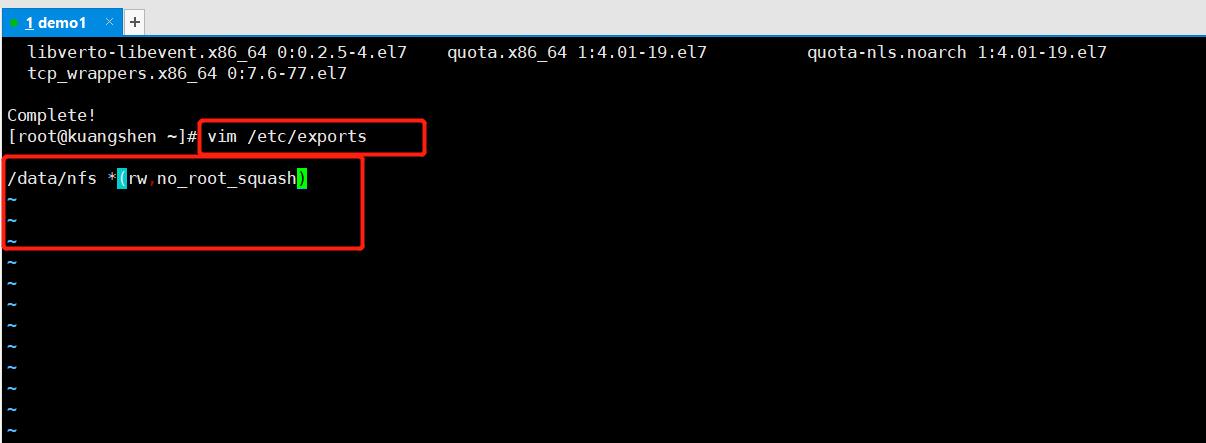

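(2) Configure the export path: run `vim /etc/exports` and add the line below (the variant shown in the screenshot works too).

```shell
# /data/nfs: exported directory; *: any client may connect; rw: read/write;
# sync: the server commits writes to disk before replying;
# no_root_squash: root on the client keeps root privileges on the share
# (the default, root_squash, maps client root to the anonymous user)
/data/nfs *(rw,no_root_squash,sync)
```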

(3) Create the export directory

```shell
[root@kuangshen ~]# vim /etc/exports
[root@kuangshen ~]# mkdir /data
[root@kuangshen ~]# cd /data
[root@kuangshen data]# mkdir nfs
[root@kuangshen data]# ls
nfs
```

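Step 2: Install NFS on the Kubernetes worker nodes (kubelet mounts NFS volumes by running `mount -t nfs` on the node itself, so the client utilities must be installed there)

```shell
[root@node01 ~]# yum install -y nfs-utils
[root@node02 ~]# yum install -y nfs-utils
[root@node03 ~]# yum install -y nfs-utils
```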

Step 3: Start the NFS service on the NFS server

```shell
[root@kuangshen data]# systemctl start nfs
[root@kuangshen data]# ps -ef | grep nfs
root     32603     2  0 19:31 ?        00:00:00 [nfsd4_callbacks]
root     32609     2  0 19:31 ?        00:00:00 [nfsd]
root     32610     2  0 19:31 ?        00:00:00 [nfsd]
root     32611     2  0 19:31 ?        00:00:00 [nfsd]
root     32612     2  0 19:31 ?        00:00:00 [nfsd]
root     32613     2  0 19:31 ?        00:00:00 [nfsd]
root     32614     2  0 19:31 ?        00:00:00 [nfsd]
root     32615     2  0 19:31 ?        00:00:00 [nfsd]
root     32616     2  0 19:31 ?        00:00:00 [nfsd]
root     32719 30878  0 19:32 pts/0    00:00:00 grep --color=auto nfs
```
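Once the service is running, it is worth confirming that the export is actually published before moving on to the cluster. A minimal sketch: the helper names `exports_line` and `verify_export` are hypothetical, while `exportfs -ra` (re-read `/etc/exports` and re-export everything) and `showmount -e` (list exports) are standard nfs-utils commands.

```shell
#!/usr/bin/env bash
# Sketch: build an /etc/exports entry and check that it is published.
# Helper names are hypothetical; run verify_export on the NFS server as root.

# exports_line DIR CLIENTS OPTIONS -> prints one /etc/exports entry
exports_line() {
  local dir="$1" clients="$2" opts="$3"
  printf '%s %s(%s)\n' "$dir" "$clients" "$opts"
}

# verify_export DIR: re-export /etc/exports, then look for DIR in showmount's list
verify_export() {
  local dir="$1"
  exportfs -ra || return 1                      # apply edits to /etc/exports
  showmount -e localhost | grep -q "^$dir "     # lines look like "/data/nfs *"
}
```

For example, `exports_line /data/nfs '*' rw,no_root_squash,sync >> /etc/exports` followed by `verify_export /data/nfs`. Replacing `*` with the nodes' subnet limits who can mount the share.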

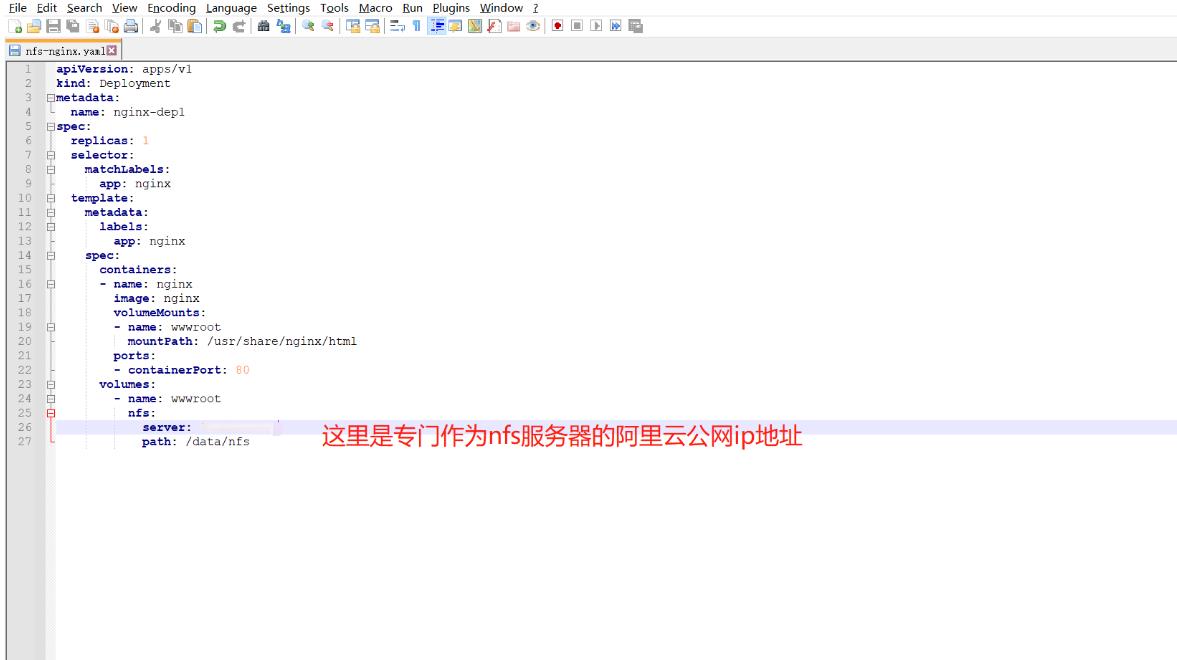

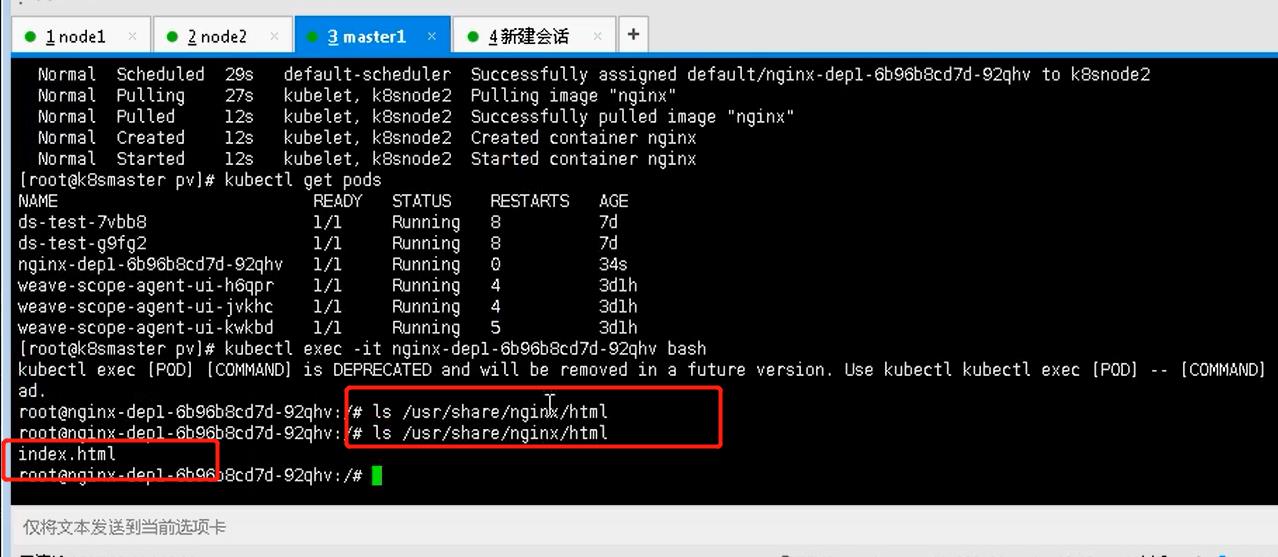

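Step 4: Deploy an application in the Kubernetes cluster that uses NFS as persistent network storage

```shell
[root@master pv]# vim nfs-nginx.yaml   # paste in the contents of nfs-nginx.yaml shown below
[root@master pv]# kubectl apply -f nfs-nginx.yaml
deployment.apps/nginx-dep1 created
```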

```yaml
# nfs-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: html
        nfs:
          server: <NFS server IP>   # replace with the NFS server's IP address
          path: /data/nfs
```

```shell
[root@master pv]# kubectl get pods
NAME                          READY   STATUS              RESTARTS   AGE
ds-test-489bb                 1/1     Running             0          2d
ds-test-4rvcr                 1/1     Running             0          2d
ds-test-nx6kt                 1/1     Running             0          2d
hello-1623412080-9hq98        0/1     Completed           0          3m3s
hello-1623412140-dgssl        0/1     Completed           0          2m3s
hello-1623412200-9mqss        0/1     Completed           0          63s
hello-1623412260-ffkxx        0/1     ContainerCreating   0          12s
nginx-dep1-7d6c86b544-gc4cr   0/1     ContainerCreating   0          8m35s
```

Errors shown in the Pod's events:

```shell
[root@master pv]# kubectl describe pod nginx-dep1-7d6c86b544-gc4cr
Warning  FailedMount  23s (x2 over 4m41s)  kubelet  MountVolume.SetUp failed for volume "wwwroot" : mount failed: exit status 32
Mounting command: mount
Mounting arguments: -t nfs 8.140.99.18:/data/nfs /var/lib/kubelet/pods/b1e30a93-e417-486f-925d-8a079d7ff06d/volumes/kubernetes.io~nfs/wwwroot
Output: mount.nfs: Connection timed out
Warning  FailedMount  7s (x4 over 6m56s)  kubelet  Unable to attach or mount volumes: unmounted volumes=[wwwroot], unattached volumes=[wwwroot default-token-8vnf8]: timed out waiting for the condition
```

I haven't been able to solve this problem yet.
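`mount.nfs: Connection timed out` generally means the node never got an answer from the NFS server, so a firewall (firewalld on the server, or a cloud security group in front of a public address like the one in the log) is a common suspect, though not the only possible cause. A sketch for checking reachability from a worker node, assuming bash with `/dev/tcp` support and coreutils `timeout`; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: from a worker node, test whether the NFS server's ports accept TCP connections.
# Relies on bash's /dev/tcp redirection; function names are hypothetical.

# port_open HOST PORT [SECONDS]: succeeds if a TCP connection opens within the timeout
port_open() {
  local host="$1" port="$2" secs="${3:-3}"
  timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# check_nfs_server HOST: report on nfsd (2049) and rpcbind (111, used by NFSv3/showmount)
check_nfs_server() {
  local host="$1" rc=0 port
  for port in 2049 111; do
    if port_open "$host" "$port"; then
      echo "$host:$port reachable"
    else
      echo "$host:$port NOT reachable (check firewalld / security group)"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, run `check_nfs_server <NFS server IP>` on node02. If 2049 is unreachable, opening it on the server (e.g. `firewall-cmd --permanent --add-service=nfs --add-service=rpc-bind --add-service=mountd` then `firewall-cmd --reload`) or in the cloud security group is the next thing to try; a manual `mount -t nfs <NFS server IP>:/data/nfs /mnt` on the node reproduces what kubelet does.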

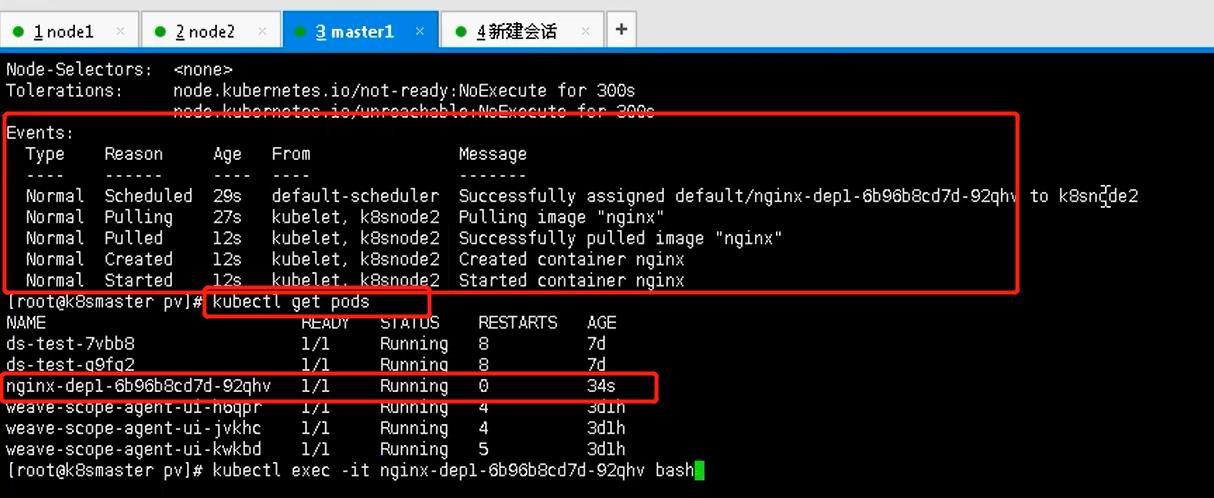

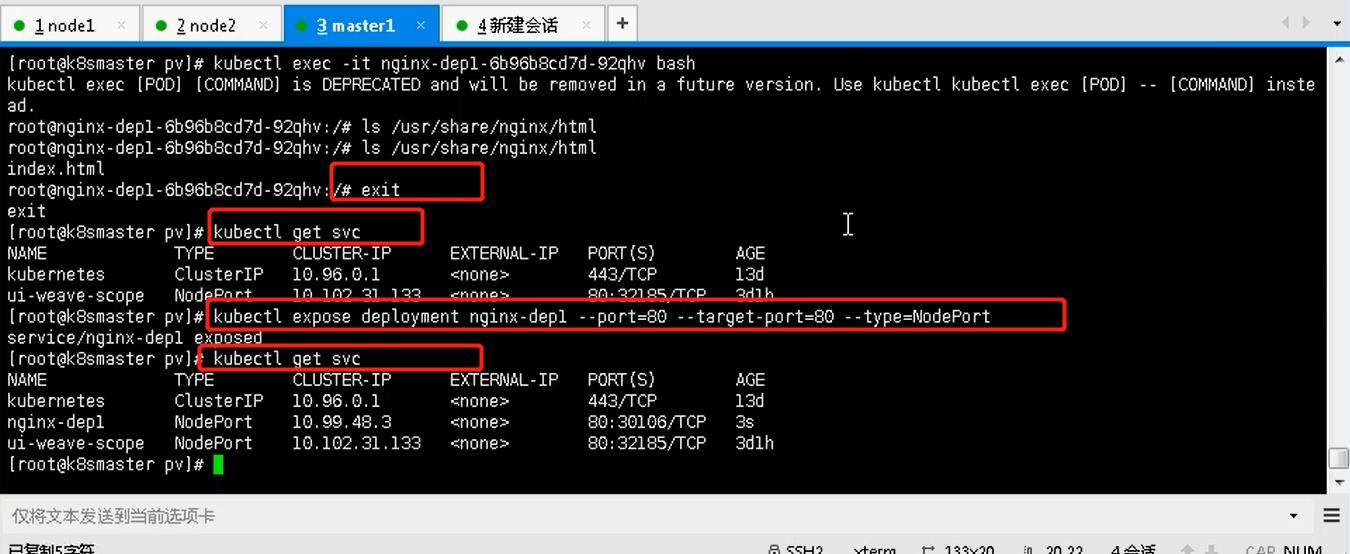

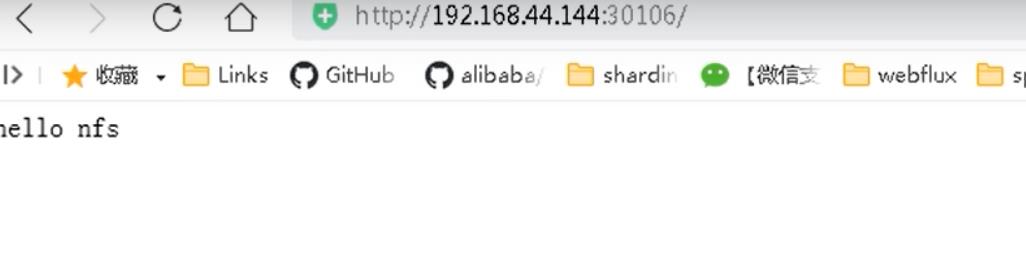

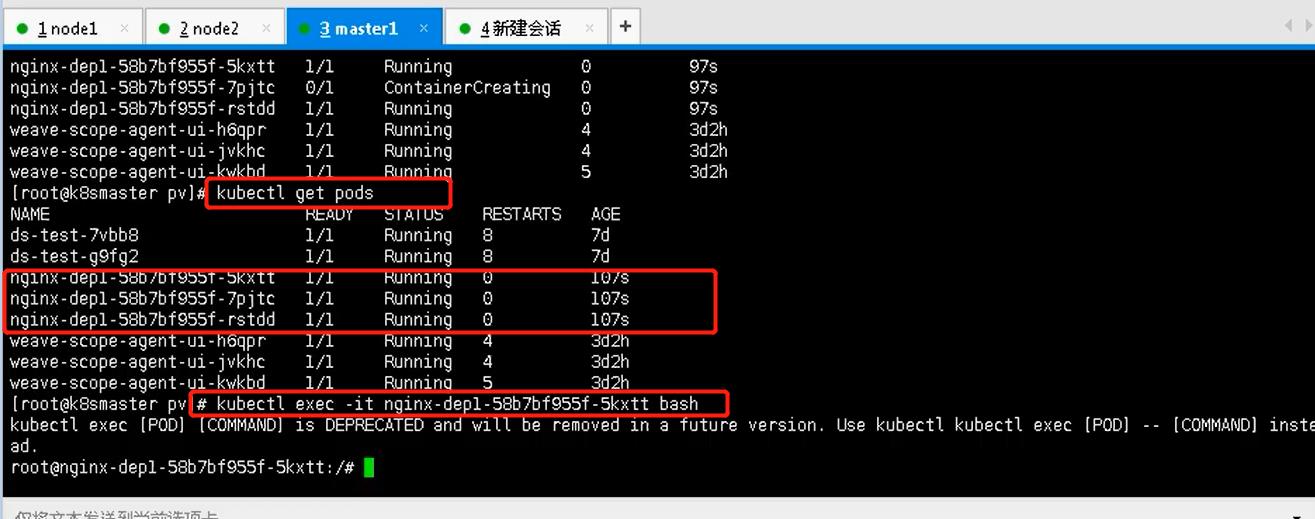

The screenshot below shows what a successful run looks like:

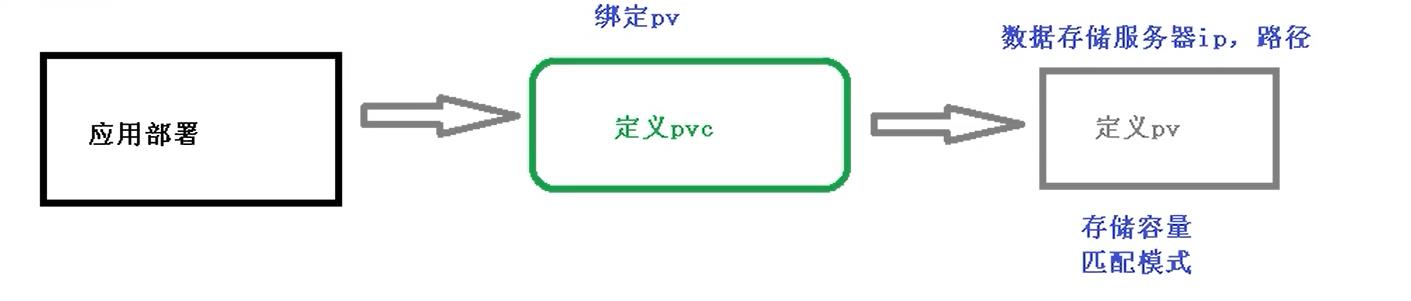

PV and PVC

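1. PV (PersistentVolume): persistent storage. It abstracts a storage resource and exposes it for others to use (the producer).

2. PVC (PersistentVolumeClaim): used to request storage without caring about the implementation details (the consumer).

3. Implementation flow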

pvc.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: wwwroot
        persistentVolumeClaim:
          claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```

pv.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  nfs:
    path: /data/nfs
    server: <NFS server IP>   # replace with the NFS server's IP address
```

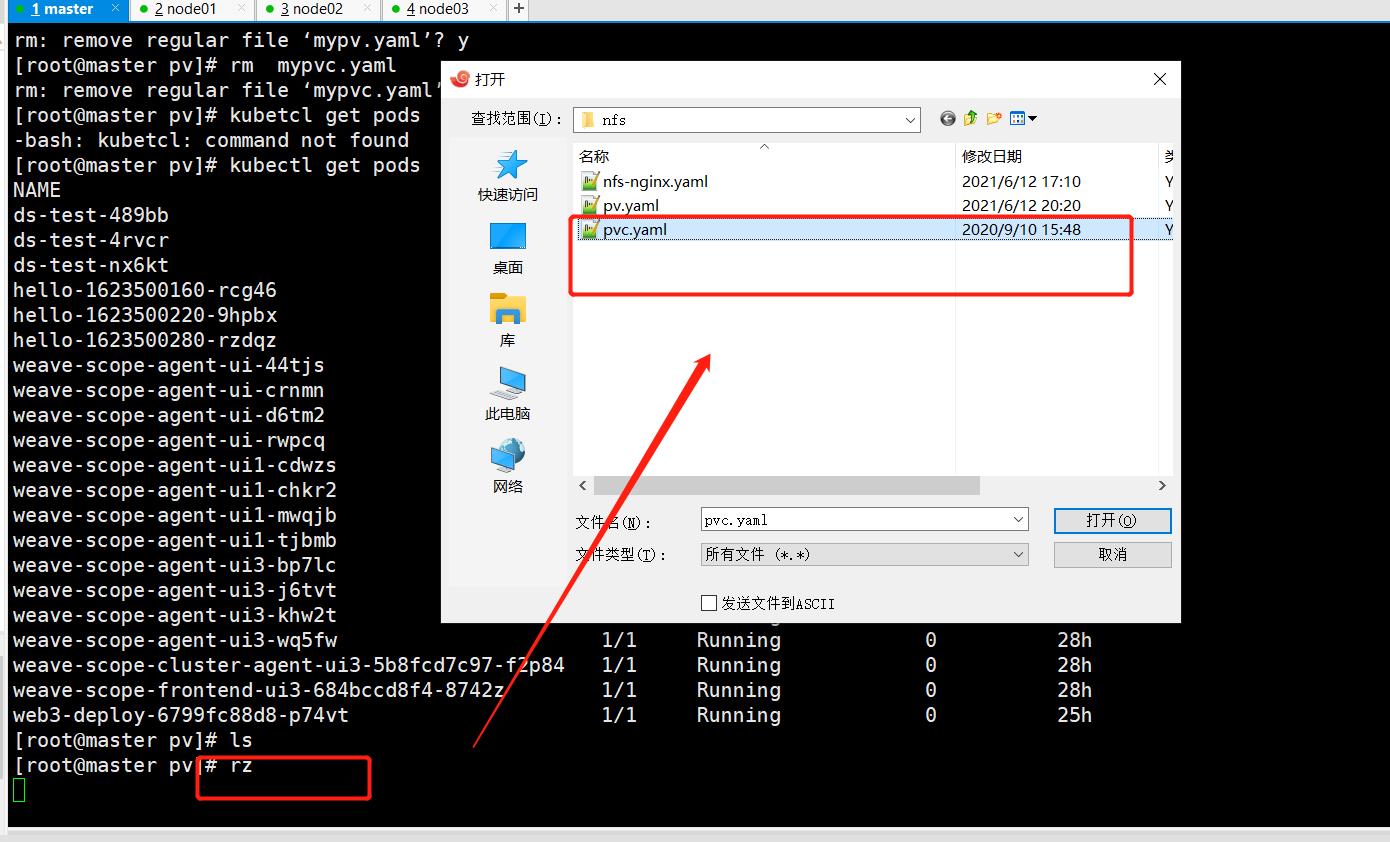

[root@master pv]# rz

[root@master pv]# ls

pvc.yaml pv.yaml

[root@master pv]# rz

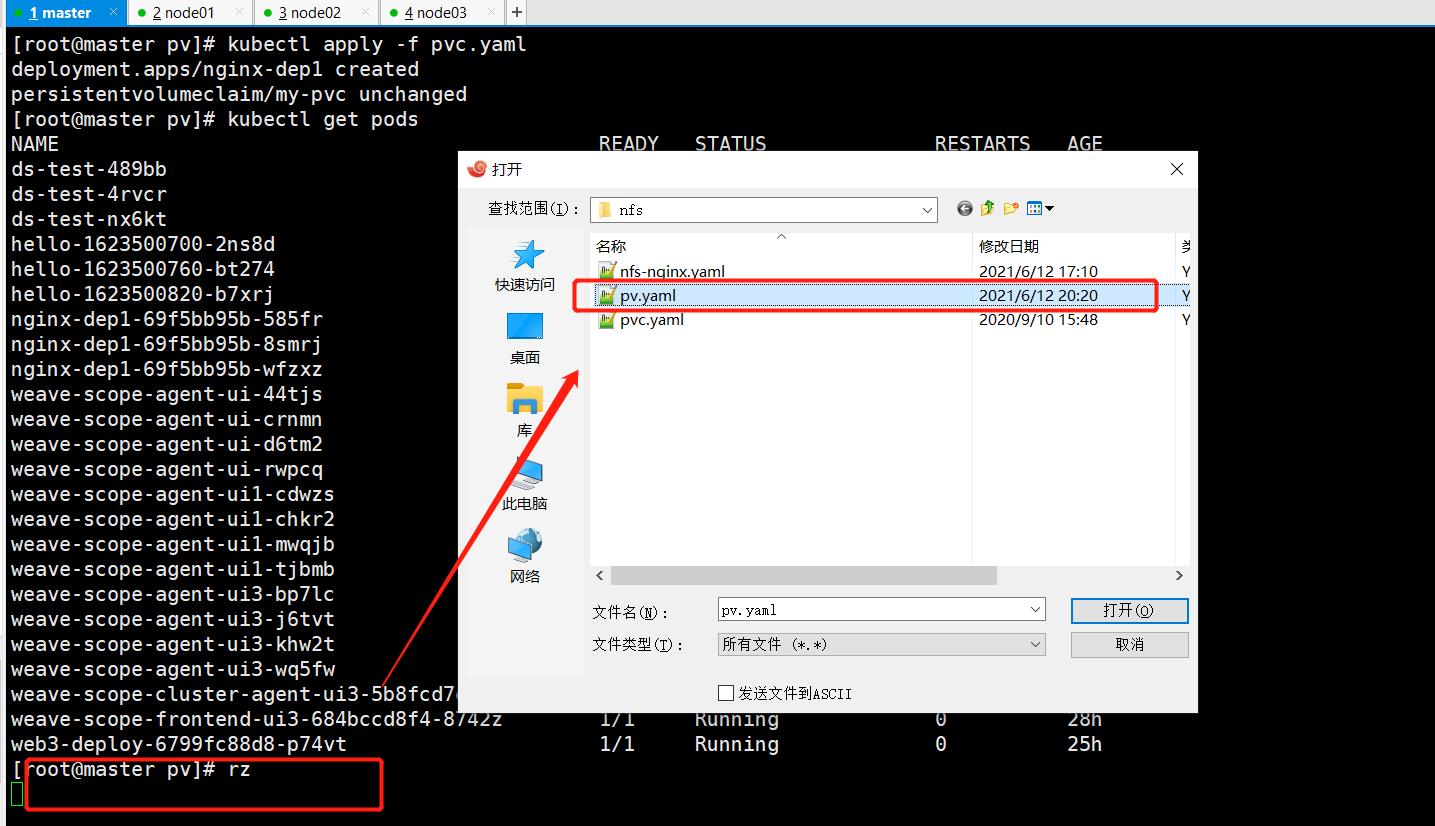

[root@master pv]# kubectl apply -f pv.yaml

persistentvolume/my-pv unchanged

```shell
[root@master pv]# kubectl get pv,pvc
NAME                     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
persistentvolume/my-pv   5Gi        RWX            Retain           Bound    default/my-pvc                           3h4m

NAME                            STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/my-pvc    Bound     my-pv    5Gi        RWX                           3h6m
persistentvolumeclaim/myclaim   Pending                                      nfs            20m
```
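Why `my-pvc` is Bound while `myclaim` stays Pending comes down to how claims are matched to volumes. The sketch below is a deliberately simplified model of that matching, not the real controller, which also checks volumeMode, label selectors and the PV's phase, and prefers the smallest PV that fits. The function name is hypothetical; sizes are whole Gi and access modes use the short forms shown by kubectl.

```shell
#!/usr/bin/env bash
# Simplified model of PV/PVC matching (hypothetical helper, not the real binder).
# pv_fits_pvc PV_GI PV_MODES PV_CLASS PVC_GI PVC_MODES PVC_CLASS
#   modes are comma-separated short forms (RWO, ROX, RWX); "" means no storage class
pv_fits_pvc() {
  local pv_gi="$1" pv_modes="$2" pv_class="$3"
  local pvc_gi="$4" pvc_modes="$5" pvc_class="$6" mode
  [ "$pv_class" = "$pvc_class" ] || return 1   # storageClassName must match
  [ "$pv_gi" -ge "$pvc_gi" ] || return 1       # capacity must cover the request
  for mode in ${pvc_modes//,/ }; do            # every requested mode must be offered
    case ",$pv_modes," in
      *",$mode,"*) ;;
      *) return 1 ;;
    esac
  done
}
```

With the objects above, `pv_fits_pvc 5 RWX "" 5 RWX ""` succeeds (my-pv and my-pvc). `myclaim` asks for storage class `nfs`, which my-pv does not have, so it cannot match whatever size it requests; with no other matching PV (and, presumably, no working provisioner for that class) it stays Pending.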

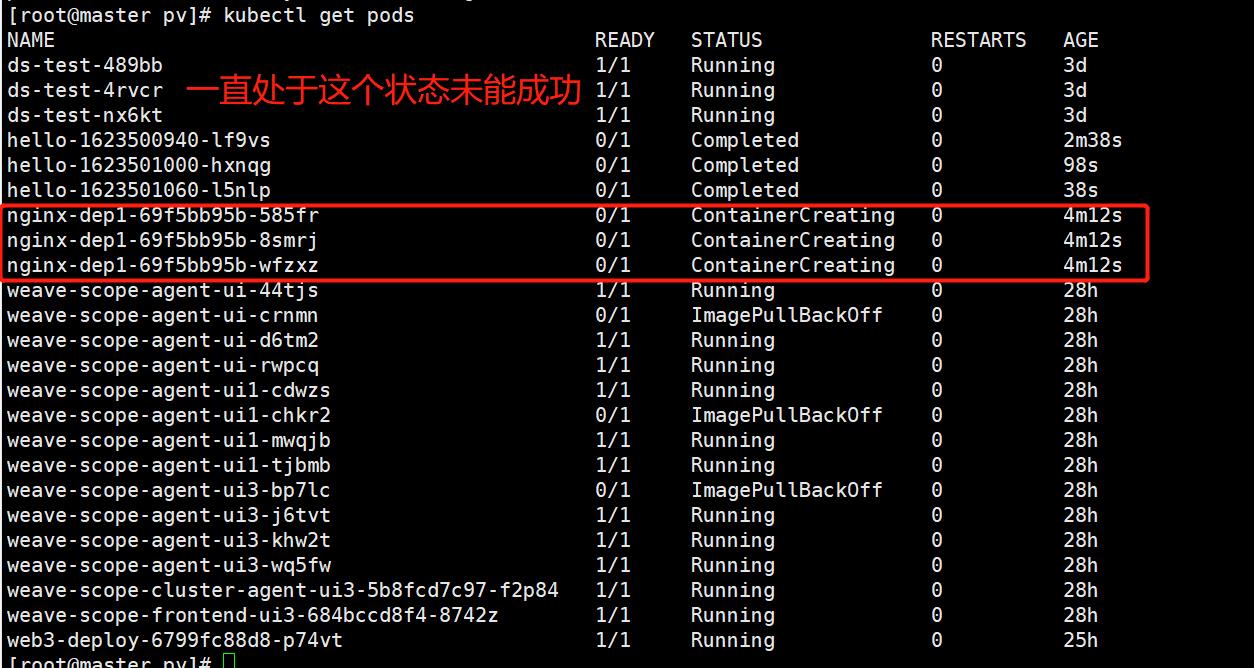

```shell
[root@master pv]# kubectl get pods
NAME                                             READY   STATUS              RESTARTS   AGE
ds-test-489bb                                    1/1     Running             0          3d
ds-test-4rvcr                                    1/1     Running             0          3d
ds-test-nx6kt                                    1/1     Running             0          3d
hello-1623500940-lf9vs                           0/1     Completed           0          2m38s
hello-1623501000-hxnqg                           0/1     Completed           0          98s
hello-1623501060-l5nlp                           0/1     Completed           0          38s
nginx-dep1-69f5bb95b-585fr                       0/1     ContainerCreating   0          4m12s
nginx-dep1-69f5bb95b-8smrj                       0/1     ContainerCreating   0          4m12s
nginx-dep1-69f5bb95b-wfzxz                       0/1     ContainerCreating   0          4m12s
weave-scope-agent-ui-44tjs                       1/1     Running             0          28h
weave-scope-agent-ui-crnmn                       0/1     ImagePullBackOff    0          28h
weave-scope-agent-ui-d6tm2                       1/1     Running             0          28h
weave-scope-agent-ui-rwpcq                       1/1     Running             0          28h
weave-scope-agent-ui1-cdwzs                      1/1     Running             0          28h
weave-scope-agent-ui1-chkr2                      0/1     ImagePullBackOff    0          28h
weave-scope-agent-ui1-mwqjb                      1/1     Running             0          28h
weave-scope-agent-ui1-tjbmb                      1/1     Running             0          28h
weave-scope-agent-ui3-bp7lc                      0/1     ImagePullBackOff    0          28h
weave-scope-agent-ui3-j6tvt                      1/1     Running             0          28h
weave-scope-agent-ui3-khw2t                      1/1     Running             0          28h
weave-scope-agent-ui3-wq5fw                      1/1     Running             0          28h
weave-scope-cluster-agent-ui3-5b8fcd7c97-f2p84   1/1     Running             0          28h
weave-scope-frontend-ui3-684bccd8f4-8742z        1/1     Running             0          28h
web3-deploy-6799fc88d8-p74vt                     1/1     Running             0          25h
[root@master pv]#
```

Error message:

```shell
[root@master pv]# kubectl describe pod nginx-dep1-69f5bb95b-585fr   # show the Pod's details and events
Normal   Scheduled    6m53s  default-scheduler  Successfully assigned default/nginx-dep1-69f5bb95b-585fr to node02
Warning  FailedMount  2m36s  kubelet  MountVolume.SetUp failed for volume "my-pv" : mount failed: exit status 32
Mounting command: mount
Mounting arguments: -t nfs 8.140.99.18:/data/nfs /var/lib/kubelet/pods/10f49896-989e-4075-9b11-f3533640d602/volumes/kubernetes.io~nfs/my-pv
Output: mount.nfs: Connection timed out
Warning  FailedMount  21s (x3 over 4m51s)  kubelet  Unable to attach or mount volumes: unmounted volumes=[wwwroot], unattached volumes=[wwwroot default-token-8vnf8]: timed out waiting for the condition
```

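So far neither the plain NFS volume nor the PV/PVC setup works, and nothing I found online solved it. Both fail with the same `mount.nfs: Connection timed out` on the node, while the PV and PVC themselves show as Bound, so the problem appears to lie in reaching the NFS server rather than in the Kubernetes objects.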

This is what a successful run should look like.

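Extra tip: the command to delete all Pods in the current namespace

```shell
kubectl delete pod --all
```

Pods managed by a Deployment, DaemonSet or Job are recreated by their controller; delete the controller itself to remove them for good.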

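Cluster resource monitoring

1. Metrics to monitor

- Cluster monitoring
  - Node resource utilization
  - Number of nodes
  - Running Pods
- Pod monitoring
  - Container metrics
  - Applications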

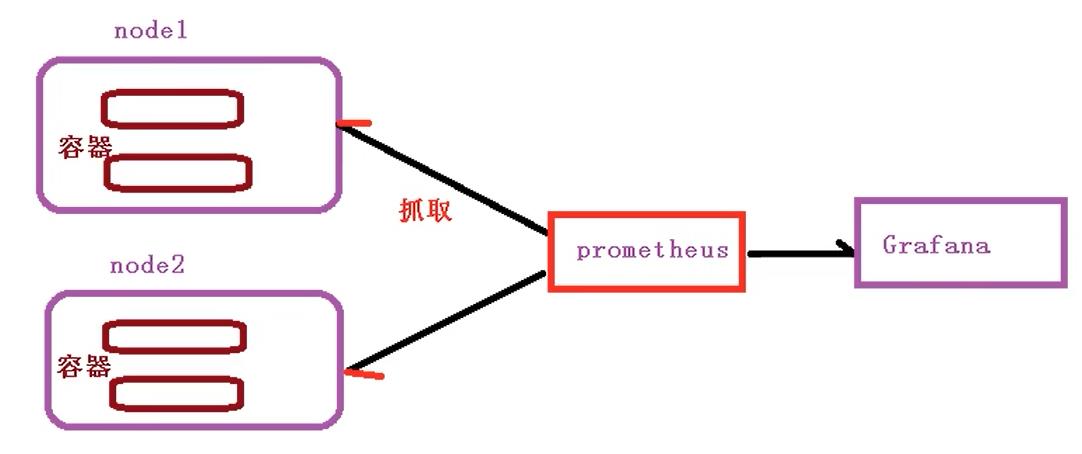

2. Monitoring platform setup

Prometheus + Grafana

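(1) Prometheus

- Open source

- Monitoring, alerting, and a built-in time-series database

- Periodically scrapes the state of monitored components over HTTP

- No complex integration needed: a component only has to expose an HTTP endpoint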
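To illustrate what "scraping over HTTP" returns: a target exposes plain text in the Prometheus exposition format, one `metric{labels} value` sample per line plus `# HELP` / `# TYPE` comment lines. The sketch below pulls one value out of such text; `metric_value` is a hypothetical helper, and it does not handle label values that contain spaces.

```shell
#!/usr/bin/env bash
# Sketch: read Prometheus exposition text on stdin and print the value of the
# first sample whose metric name equals $1 (labels are ignored).
metric_value() {
  awk -v name="$1" '
    /^#/ { next }                          # skip HELP/TYPE comment lines
    NF >= 2 {
      key = $1
      i = index(key, "{")                  # drop a {label=...} suffix, if any
      if (i > 0) key = substr(key, 1, i - 1)
      if (key == name) { print $2; exit }  # first matching sample wins
    }'
}
```

Usage, assuming node_exporter is running on its default port 9100: `curl -s http://<node IP>:9100/metrics | metric_value node_load1`.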

(2) Grafana

- An open-source data analysis and visualization tool

- Supports many data sources

Course followed on Bilibili: k8s教程由浅入深-尚硅谷_哔哩哔哩_bilibili
