Day 153 Study Log (Kubernetes Ingress, Helm)

Posted by doudoutj


Ingress

1. Exposing a port externally and accessing the application via IP + port

- Implemented with a Service of type NodePort

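2. Drawbacks of NodePort

- A port is opened on every node, and the application can be reached through any node via that node's IP plus the exposed port

- This means each port can be used only once: one port per application

- In practice, applications are accessed by domain name, and requests for different domains are routed to different services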

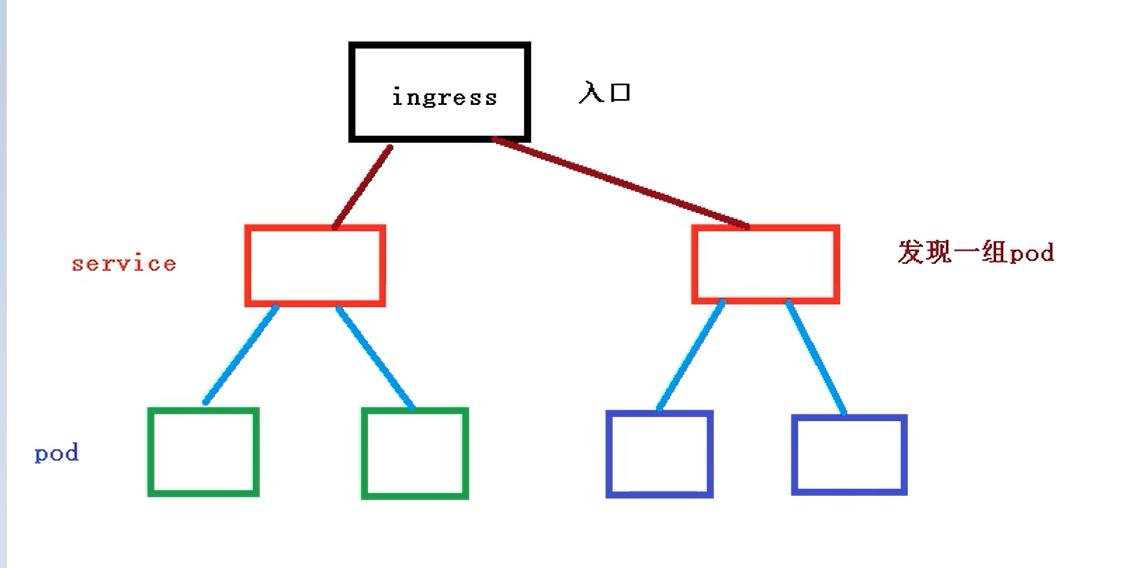
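To make domain-based routing concrete, here is a minimal sketch of a single Ingress that sends two domains to two Services, both through port 80 on the Ingress controller instead of one NodePort per application. The host names (`app1.example.com`, `app2.example.com`) and Service names (`app1-svc`, `app2-svc`) are hypothetical placeholders; the schema is the same `networking.k8s.io/v1` one used by `ingress01.yaml` later in this post.

```yaml
# Hypothetical example: two domains share port 80 on the Ingress controller.
# Host names and Service names are placeholders, not objects from this cluster.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: name-based-hosts
spec:
  rules:
    - host: app1.example.com      # requests whose Host header is app1.example.com...
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: app1-svc    # ...are forwarded to this Service
                port:
                  number: 80
    - host: app2.example.com      # a second domain on the same controller port
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: app2-svc
                port:
                  number: 80
```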

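3. Relationship between Ingress and Pods

- Pods and the Ingress are associated through a Service

- The Ingress acts as the unified entry point, and each Service groups a set of Pods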

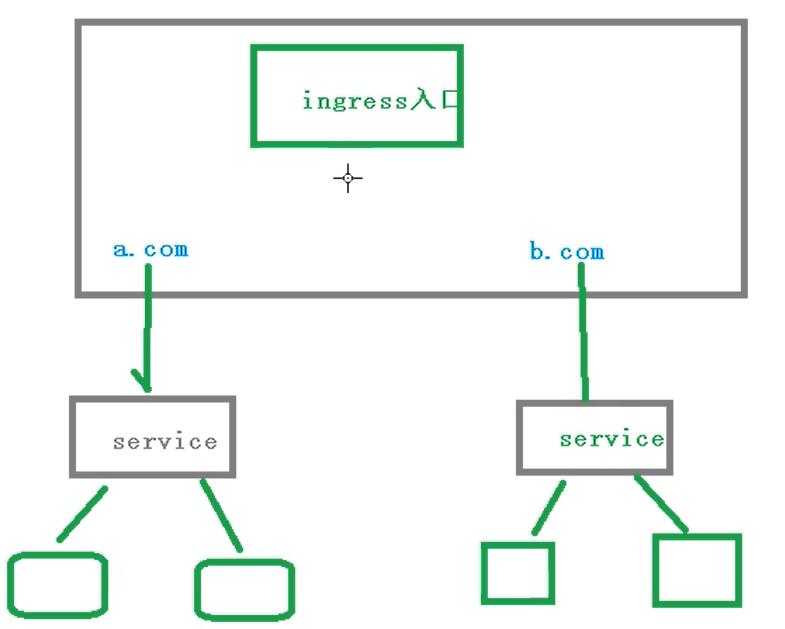
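A sketch of the Service link in that chain: the Service's `selector` matches Pod labels, and an Ingress backend then refers to the Service by name and port. For ingress-nginx a ClusterIP Service is sufficient, because the controller forwards traffic inside the cluster; the NodePort Service used later in this post is only needed for direct IP + port access. The Service name `web3-clusterip` is hypothetical, and the label `app: web3` assumes the Pods were created by `kubectl create deployment web3`, which labels them that way.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web3-clusterip   # hypothetical name, chosen to avoid clashing with the web3 NodePort Service
spec:
  type: ClusterIP        # the default type; reachable only from inside the cluster
  selector:
    app: web3            # every Pod carrying this label becomes an endpoint of the Service
  ports:
    - port: 80           # port the Service listens on (what an Ingress backend refers to)
      targetPort: 80     # container port on the selected Pods
      protocol: TCP
```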

4. Ingress workflow

5. Using Ingress

Step 1: Deploy an Ingress Controller

Step 2: Create Ingress rules

Here we use the officially maintained nginx controller.

6. Exposing an application with Ingress

(1) Create an nginx application and expose its port with NodePort

```shell
[root@master ~]# kubectl create deployment web3 --image=nginx
[root@master ~]# kubectl get pods
NAME                      READY   STATUS              RESTARTS   AGE
ds-test-489bb             1/1     Running             0          21h
ds-test-4rvcr             1/1     Running             0          21h
ds-test-nx6kt             1/1     Running             0          21h
hello-1623317280-jd4r2    0/1     Completed           0          2m47s
hello-1623317340-xdw8p    0/1     Completed           0          107s
hello-1623317400-5p22p    0/1     Completed           0          47s
mypod                     0/1     Completed           0          21h
tomcat-7d987c7694-kw6xs   1/1     Running             0          21h
web-5bb6fd4c98-9zqzg      1/1     Running             0          21h
web-5bb6fd4c98-d7gqr      1/1     Running             0          21h
web3-587cfc4c49-rgzc9     0/1     ContainerCreating   0          15s
[root@master ~]# kubectl get pods
NAME                      READY   STATUS      RESTARTS   AGE
ds-test-489bb             1/1     Running     0          21h
ds-test-4rvcr             1/1     Running     0          21h
ds-test-nx6kt             1/1     Running     0          21h
hello-1623317520-rngkw    0/1     Completed   0          2m36s
hello-1623317580-r4bnc    0/1     Completed   0          95s
hello-1623317640-sjhj7    0/1     Completed   0          35s
mypod                     0/1     Completed   0          21h
tomcat-7d987c7694-kw6xs   1/1     Running     0          21h
web-5bb6fd4c98-9zqzg      1/1     Running     0          21h
web-5bb6fd4c98-d7gqr      1/1     Running     0          21h
web3-587cfc4c49-rgzc9     1/1     Running     0          4m5s
[root@master ~]# kubectl get deploy
NAME     READY   UP-TO-DATE   AVAILABLE   AGE
tomcat   1/1     1            1           3d21h
web      2/2     2            2           26h
web3     1/1     1            1           4m43s
[root@master ~]# kubectl delete deploy web
deployment.apps "web" deleted
[root@master ~]# kubectl get deploy
NAME     READY   UP-TO-DATE   AVAILABLE   AGE
tomcat   1/1     1            1           3d21h
web3     1/1     1            1           5m11s
[root@master ~]# kubectl expose deployment web3 --port=80 --target-port=80 --type=NodePort   # expose the port
service/web3 exposed
[root@master ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP        4d21h
web3         NodePort    10.110.237.168   <none>        80:30038/TCP   12s
```

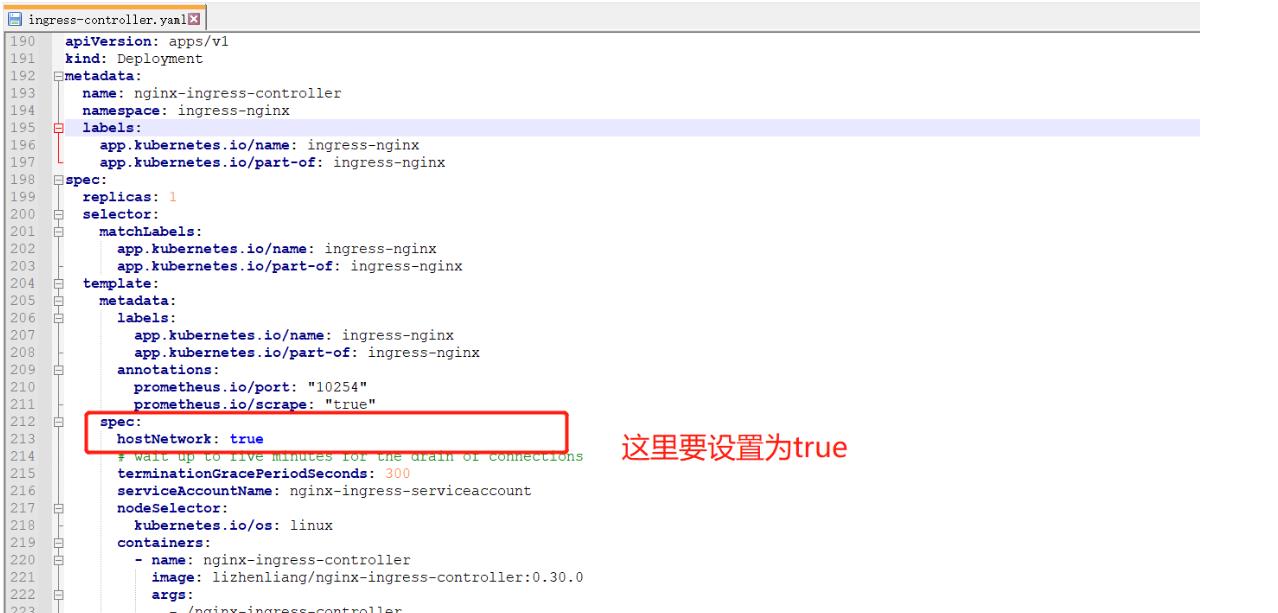

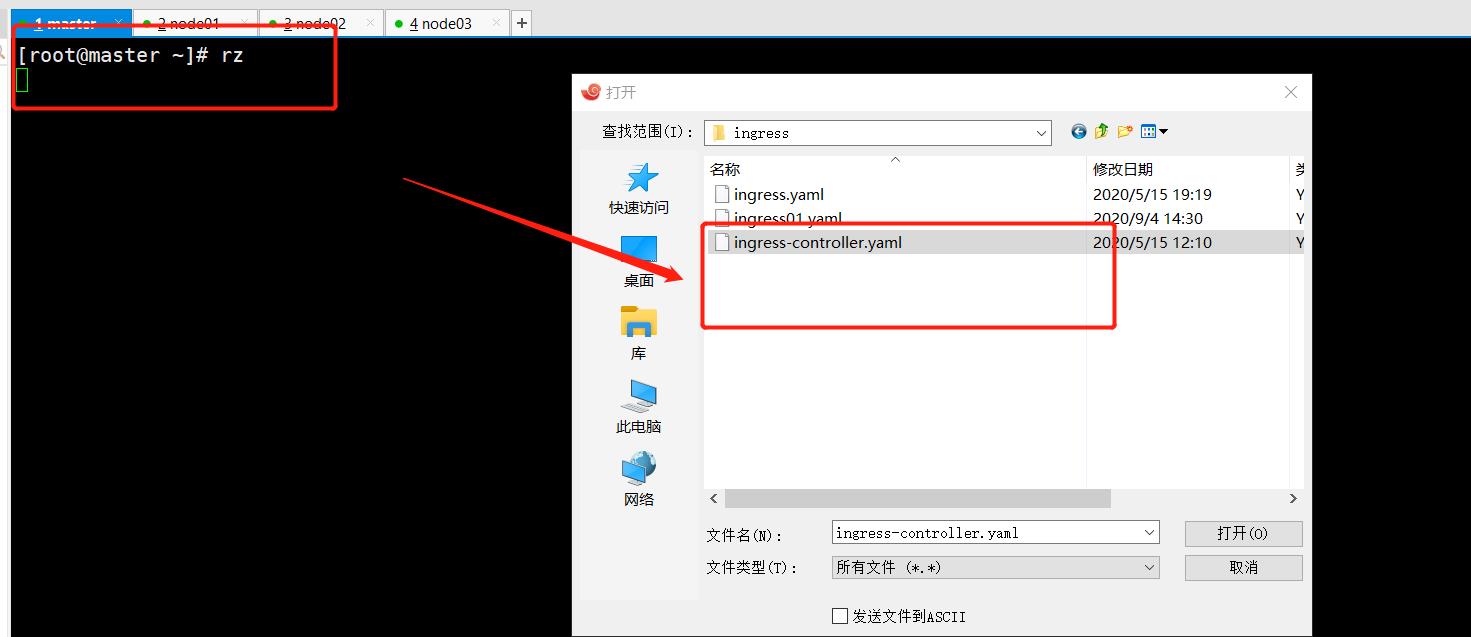
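For reference, a sketch of declarative manifests that should be roughly equivalent to the two imperative commands above (fields that kubectl fills in with defaults, such as the rollout strategy, are omitted). Keeping them in a file and applying them with `kubectl apply -f` makes the setup repeatable. The container name `nginx` mirrors what `kubectl create deployment` derives from the image name.

```yaml
# Roughly what "kubectl create deployment web3 --image=nginx" creates
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web3
  labels:
    app: web3
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web3            # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: web3
    spec:
      containers:
        - name: nginx
          image: nginx
---
# Roughly what "kubectl expose deployment web3 --port=80 --target-port=80 --type=NodePort" creates
apiVersion: v1
kind: Service
metadata:
  name: web3
  labels:
    app: web3
spec:
  type: NodePort
  selector:
    app: web3              # routes to the Pods of the Deployment above
  ports:
    - port: 80             # Service (cluster) port
      targetPort: 80       # container port
      protocol: TCP
      # nodePort: 30038    # optional; if omitted, a port from the node-port range
      #                    # (30000-32767 by default) is allocated automatically
```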

(2) Deploy the Ingress Controller

ingress-controller.yaml:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: lizhenliang/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown

---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container
```

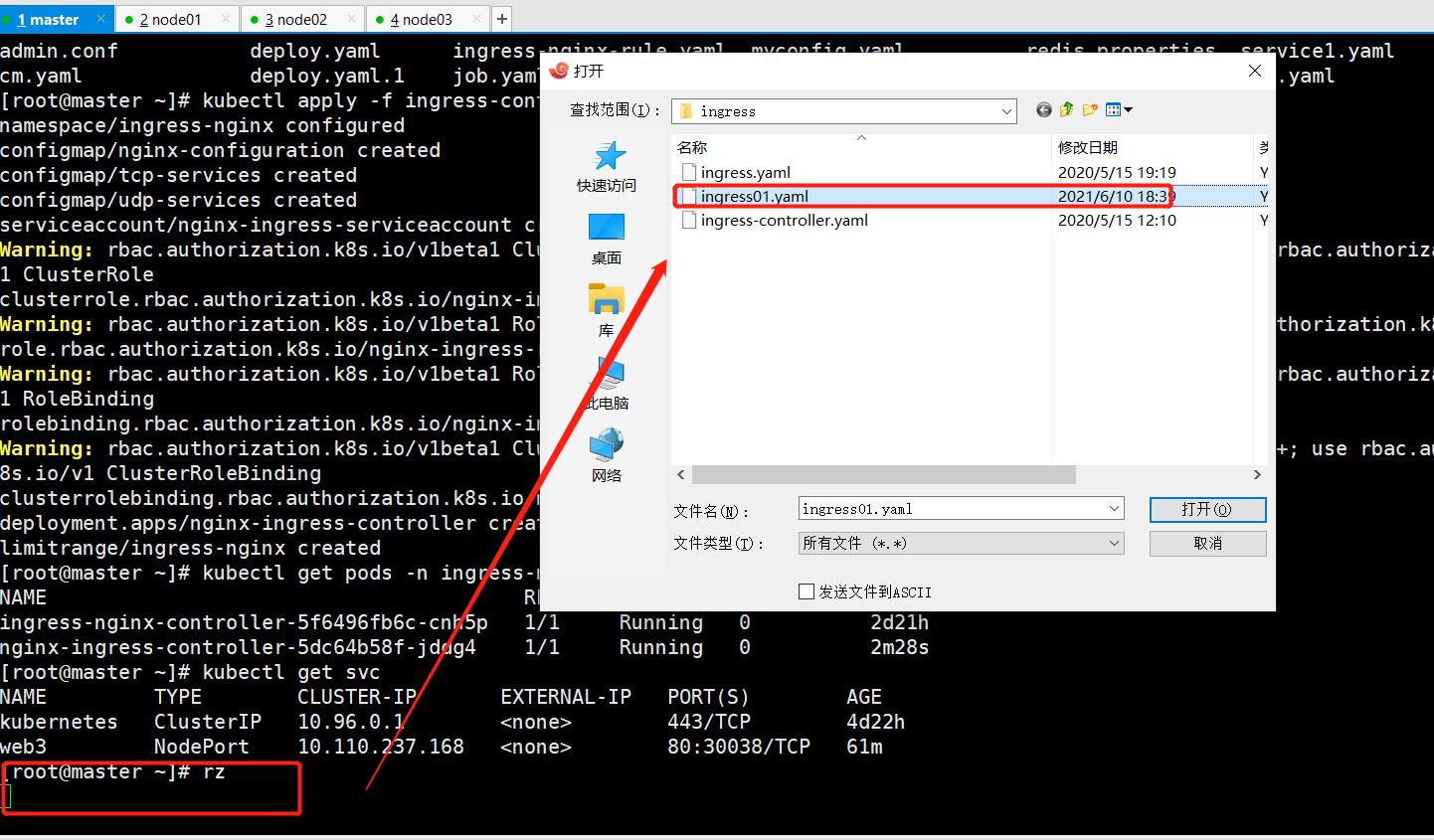

```shell
[root@master ~]# kubectl apply -f ingress-controller.yaml
namespace/ingress-nginx configured
configmap/nginx-configuration created
configmap/tcp-services created
configmap/udp-services created
serviceaccount/nginx-ingress-serviceaccount created
Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
Warning: rbac.authorization.k8s.io/v1beta1 Role is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 Role
role.rbac.authorization.k8s.io/nginx-ingress-role created
Warning: rbac.authorization.k8s.io/v1beta1 RoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 RoleBinding
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
deployment.apps/nginx-ingress-controller created
limitrange/ingress-nginx created
```

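The warnings above go away if `rbac.authorization.k8s.io/v1beta1` is changed to `rbac.authorization.k8s.io/v1` in the manifest. As the warnings state, v1beta1 is deprecated since v1.17 and unavailable from v1.22.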
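For example, here is the ClusterRoleBinding from `ingress-controller.yaml` written against `rbac.authorization.k8s.io/v1`. Only the `apiVersion` line changes, since the RBAC object schema is the same in v1; `roleRef.apiGroup` stays `rbac.authorization.k8s.io` because it names the API group without a version. The ClusterRole, Role and RoleBinding objects need the same one-line change.

```yaml
# Same ClusterRoleBinding as in ingress-controller.yaml; only apiVersion differs
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io   # group name only, no version here
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
```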

(3) Check the Ingress Controller status

```shell
[root@master ~]# kubectl get pods -n ingress-nginx
NAME                                        READY   STATUS    RESTARTS   AGE
ingress-nginx-controller-5f6496fb6c-cnh5p   1/1     Running   0          2d21h
nginx-ingress-controller-5dc64b58f-jddg4    1/1     Running   0          2m28s
```

(4) Create the Ingress rule

ingress01.yaml:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: k8s-ingress
spec:
  rules:
    - host: www.abc.com
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: web
                port:
                  number: 80
```
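The same host can also be split by URL path. In this sketch, `/` still goes to `web` as in `ingress01.yaml`, while `/tomcat` goes to a hypothetical Service `tomcat-svc` on port 8080; this post does not create such a Service, so it is only an assumption for illustration, as is the Ingress name. With `pathType: Prefix`, matching is done on `/`-separated path elements, so `/tomcat` matches `/tomcat` and `/tomcat/index.jsp` but not `/tomcatx`, and when several paths match, the longest one wins. Unless a rewrite annotation is used, ingress-nginx forwards the original path unchanged, so the backend must be able to serve requests under `/tomcat`.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: k8s-ingress-paths        # hypothetical name
spec:
  rules:
    - host: www.abc.com
      http:
        paths:
          - pathType: Prefix
            path: /tomcat        # /tomcat and /tomcat/... (longer match wins over "/")
            backend:
              service:
                name: tomcat-svc # hypothetical Service, not created in this post
                port:
                  number: 8080
          - pathType: Prefix
            path: /              # everything else
            backend:
              service:
                name: web
                port:
                  number: 80
```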

```shell
[root@master ~]# kubectl apply -f ingress01.yaml
ingress.networking.k8s.io/k8s-ingress created
[root@master ~]# kubectl get pods -n ingress-nginx -o wide
NAME                                        READY   STATUS    RESTARTS   AGE    IP               NODE     NOMINATED NODE   READINESS GATES
ingress-nginx-controller-5f6496fb6c-cnh5p   1/1     Running   0          3d     172.31.197.180   node01   <none>           <none>
nginx-ingress-controller-5dc64b58f-jddg4    1/1     Running   0          163m   172.31.197.179   node02   <none>           <none>
[root@master ~]# kubectl get pods -n ingress-nginx
NAME                                        READY   STATUS    RESTARTS   AGE
ingress-nginx-controller-5f6496fb6c-cnh5p   1/1     Running   0          3d
nginx-ingress-controller-5dc64b58f-jddg4    1/1     Running   0          168m
[root@master ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP        5d
web          NodePort    10.111.111.127   <none>        80:32079/TCP   17m
[root@master ~]# kubectl get ingress
NAME          CLASS    HOSTS         ADDRESS          PORTS   AGE
k8s-ingress   <none>   www.abc.com   172.31.197.180   80      7m53s
```

On node01, check that ports 80 and 443 are being listened on:

```shell
[root@node01 ~]# netstat -antp | grep 80
tcp        0      0 0.0.0.0:80        0.0.0.0:*     LISTEN      2734/nginx: master
tcp6       0      0 :::80             :::*          LISTEN      2734/nginx: master
[root@node01 ~]# netstat -antp | grep 443
tcp        0      0 0.0.0.0:443       0.0.0.0:*     LISTEN      2734/nginx: master
tcp6       0      0 :::8443           :::*          LISTEN      2670/nginx-ingress-
tcp6       0      0 :::443            :::*          LISTEN      2734/nginx: master
```

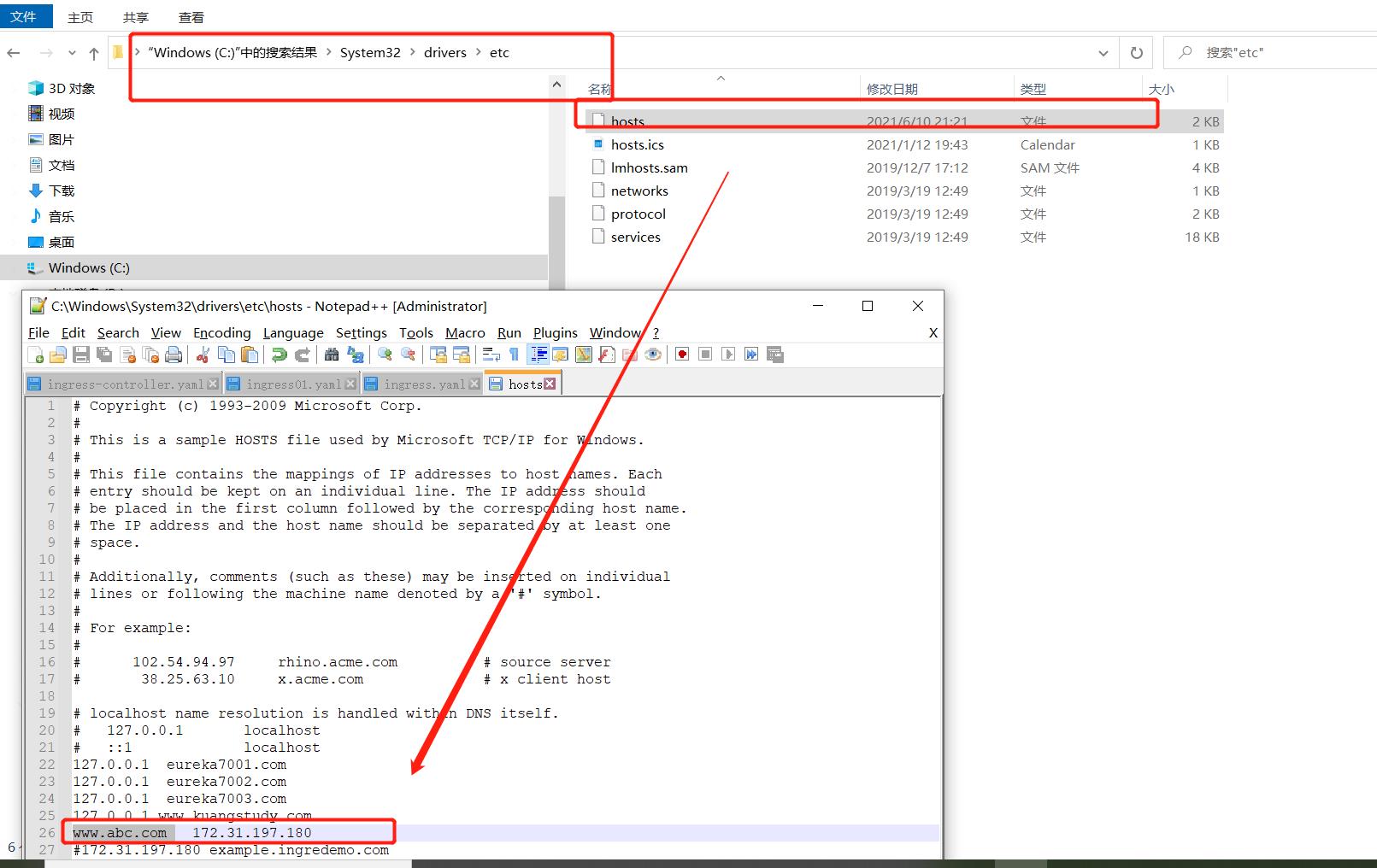

(5) Add a domain-name mapping to the Windows `hosts` file (`C:\Windows\System32\drivers\etc\hosts`)

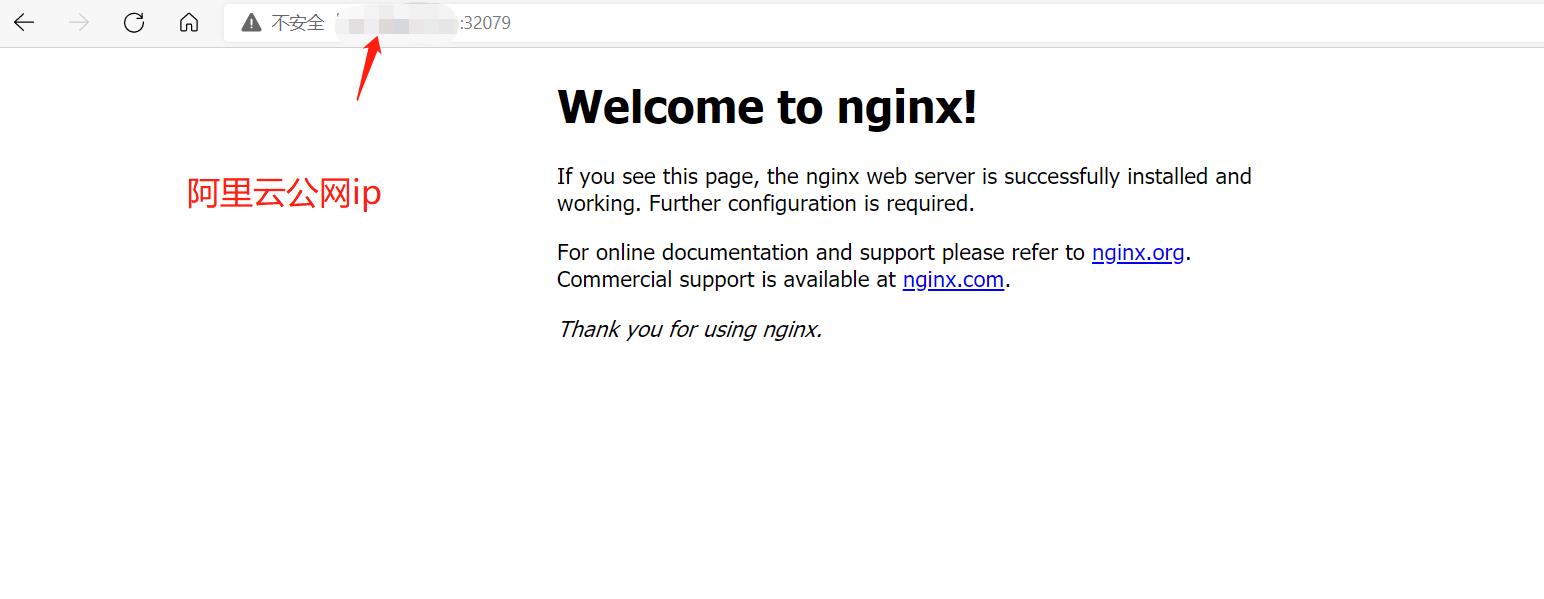

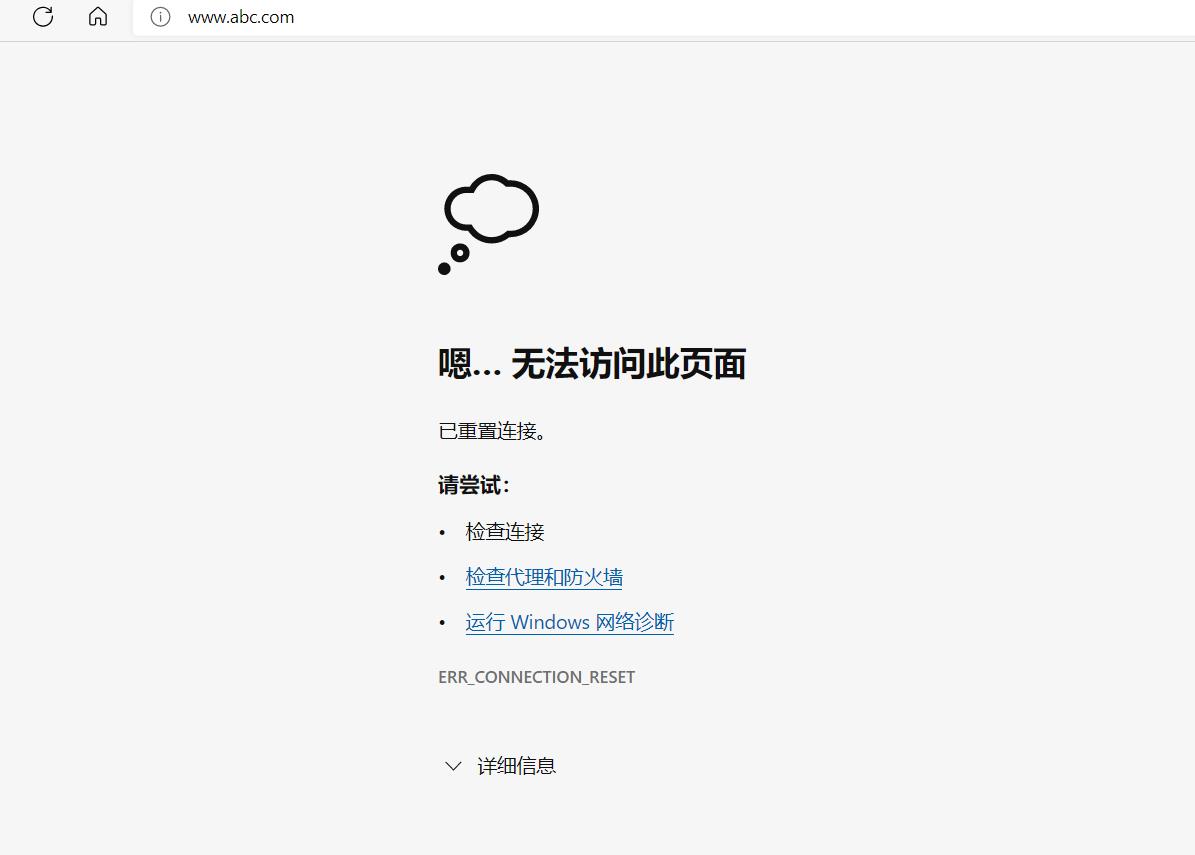

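Problem encountered: the application still could not be reached through the domain name. I tried several fixes found online without success, but it could be reached through the node's Alibaba Cloud public IP plus the exposed NodePort.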

Helm

1. Why Helm

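Applications on K8s are made up of specific resource definitions, such as Deployments and Services, each saved in its own file or combined into one configuration file, and then deployed with `kubectl apply -f`. If an application consists of only one or a few such services, this approach is sufficient. A complex application, however, such as one built on a microservice architecture, may consist of ten or even dozens of services, each with resource files like these. Updating or rolling back the application then means modifying and maintaining a large number of resource files, and this way of organizing and managing applications becomes inadequate. And because there is no version management or control over released applications, maintaining and updating applications on Kubernetes faces several challenges, mainly: (1) how to manage these services as a whole; (2) how to reuse these resource files efficiently; (3) there is no application-level version management.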

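Basic process of deploying an application the old way:

- Write YAML files
  - Deployment
  - Service
  - Ingress (domain-name access)

- This approach works well for a single application or one made up of only a few services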

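Drawback: a microservice project, for example, may have dozens of services, each with its own set of YAML files. That means maintaining a large number of YAML files, which makes version management especially inconvenient.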

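2. What problems does Helm solve?

(1) Helm manages these YAML files as a whole.

(2) It makes YAML files efficiently reusable.

(3) It provides application-level version management.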
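As a rough illustration of points (2) and (3), here is a sketch of a chart template (a hypothetical file `templates/deployment.yaml` inside a chart). Instead of hard-coding names and images, it reads them from the release and from values; `image.repository` and `image.tag` are hypothetical keys chosen for this example. Helm renders the `{{ ... }}` expressions (Go templates) before sending the manifest to the cluster, so one file can be installed repeatedly with different settings, and each install is tracked as a versioned release that `helm upgrade` and `helm rollback` operate on.

```yaml
# templates/deployment.yaml in a hypothetical chart; Helm fills in {{ ... }} at install time
apiVersion: apps/v1
kind: Deployment
metadata:
  name: "{{ .Release.Name }}-web"     # release name keeps each install's objects distinct
spec:
  replicas: 1                         # could also be read from values
  selector:
    matchLabels:
      app: "{{ .Release.Name }}-web"
  template:
    metadata:
      labels:
        app: "{{ .Release.Name }}-web"
    spec:
      containers:
        - name: web
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"   # supplied by values.yaml or --set
          ports:
            - containerPort: 80
```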

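3. Introduction to Helm

Helm is a package manager for Kubernetes. Like the package managers on Linux (yum, apt, and so on), it makes it easy to deploy previously packaged YAML files to Kubernetes.

Helm has three important concepts:

(1) helm: a command-line client, used mainly to create, package, publish, and manage Kubernetes application charts.

(2) Chart: an application description; a collection of files that describe the related k8s resources.

(3) Release: a deployed instance of a chart. Each time Helm runs a chart, a corresponding release is produced, and it creates the actual running resource objects in k8s.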

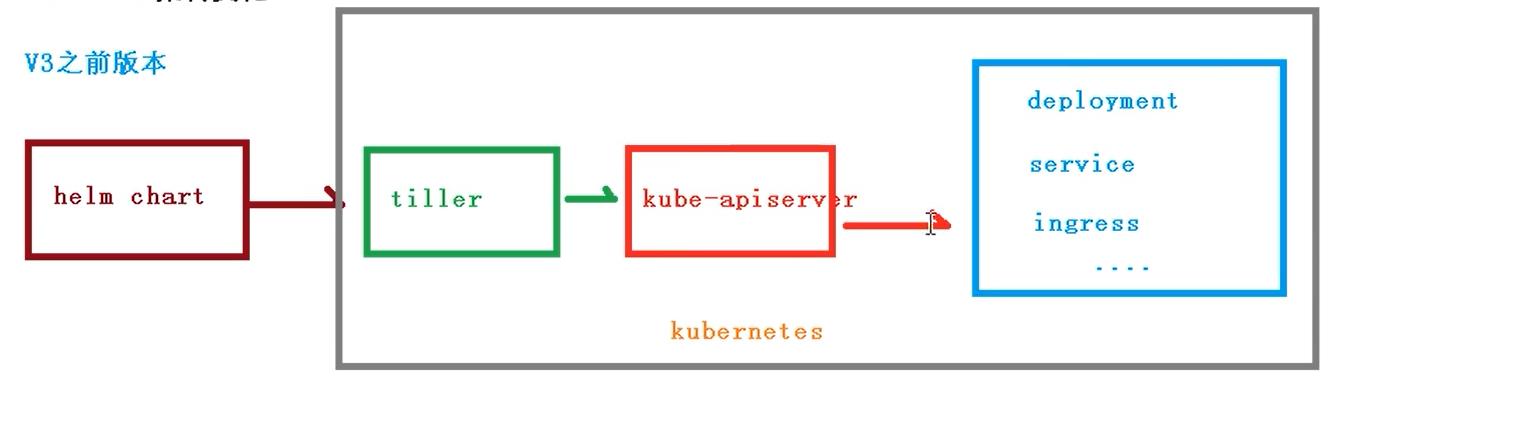

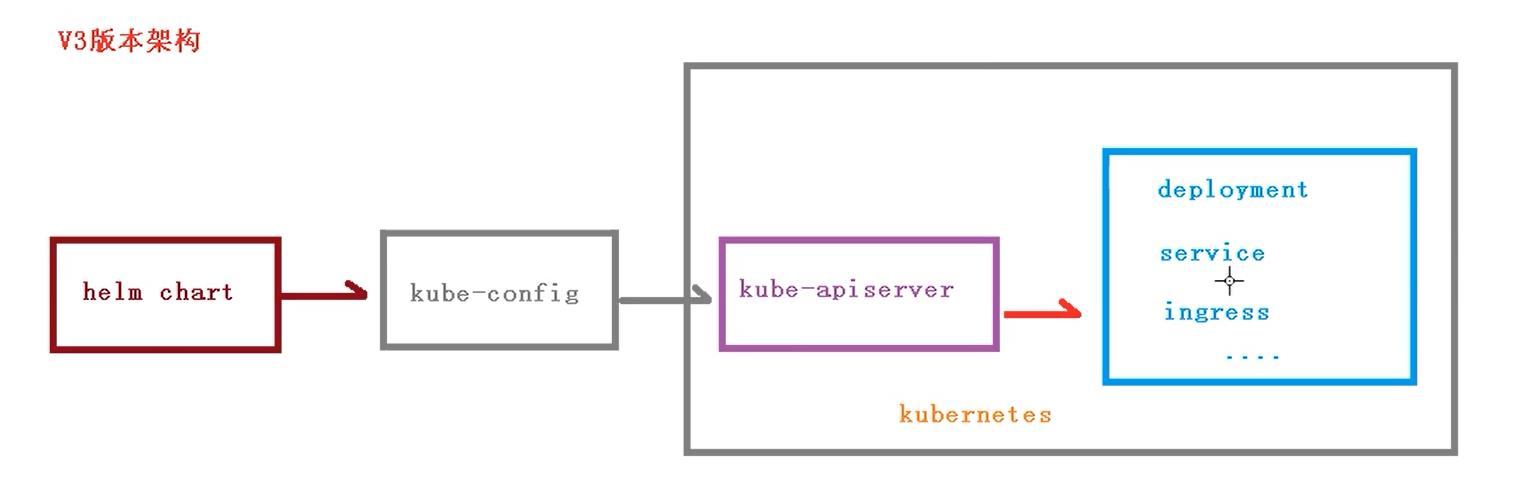
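To make chart and release more concrete, here is a sketch of the two metadata files at the root of a minimal Helm 3 chart, for a hypothetical chart named `mychart` with illustrative field values. `Chart.yaml` uses `apiVersion: v2`, the chart API version introduced with Helm 3; `values.yaml` holds the defaults that templates read through `.Values`. Installing this one chart twice under different release names, for example `helm install web1 ./mychart` and `helm install web2 ./mychart`, would produce two independent releases. The two files are shown in one block, separated by `---`, only for brevity.

```yaml
# mychart/Chart.yaml: chart metadata (hypothetical chart)
apiVersion: v2            # chart API version used by Helm 3
name: mychart
description: A minimal example chart
type: application
version: 0.1.0            # version of the chart package itself
appVersion: "1.21"        # version of the packaged application (informational)
---
# mychart/values.yaml: default values, readable in templates as .Values.image.repository etc.
image:
  repository: nginx
  tag: "1.21"
```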

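4. Changes in Helm v3

On November 13, 2019, the Helm team released the first stable version of Helm v3.

The main changes in this version:

Architecture changes:

1. The most obvious change is the removal of Tiller

2. Release names can be reused in different namespaces

3. Charts can be pushed to Docker image registries

4. Chart values are validated with JSON Schema

5. Others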

5. Helm architecture changes

Official website: Helm (https://helm.sh)

6. Installing Helm

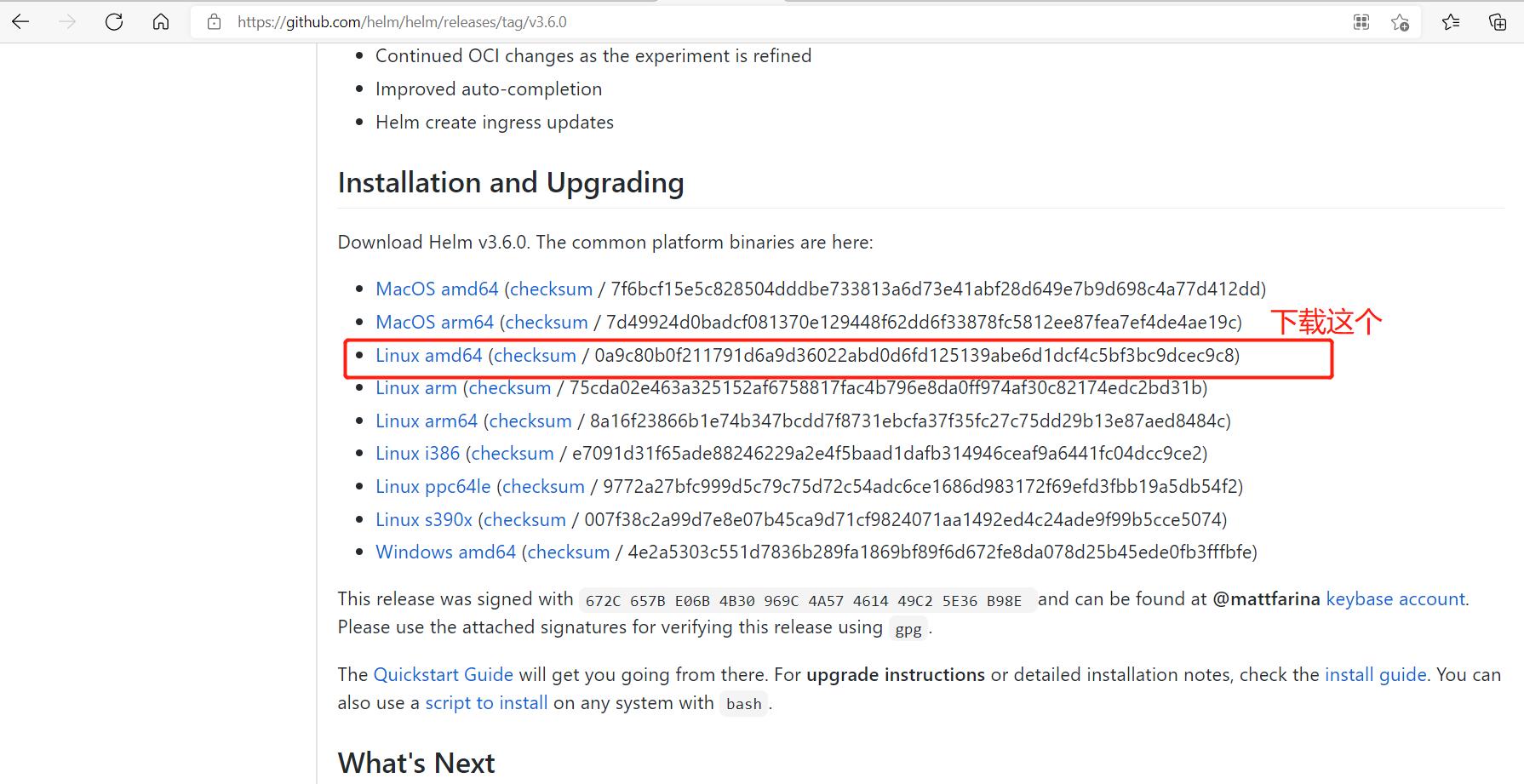

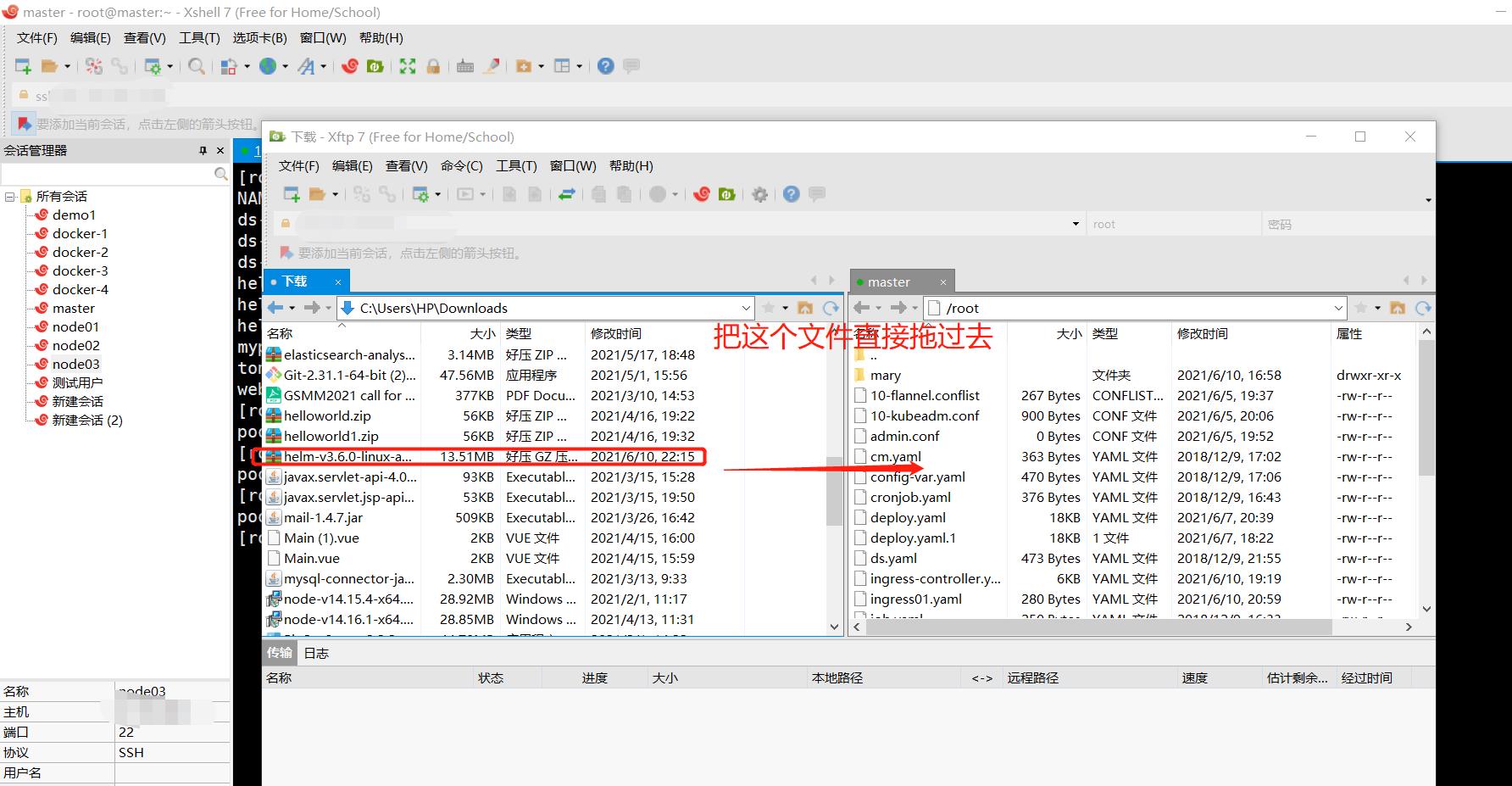

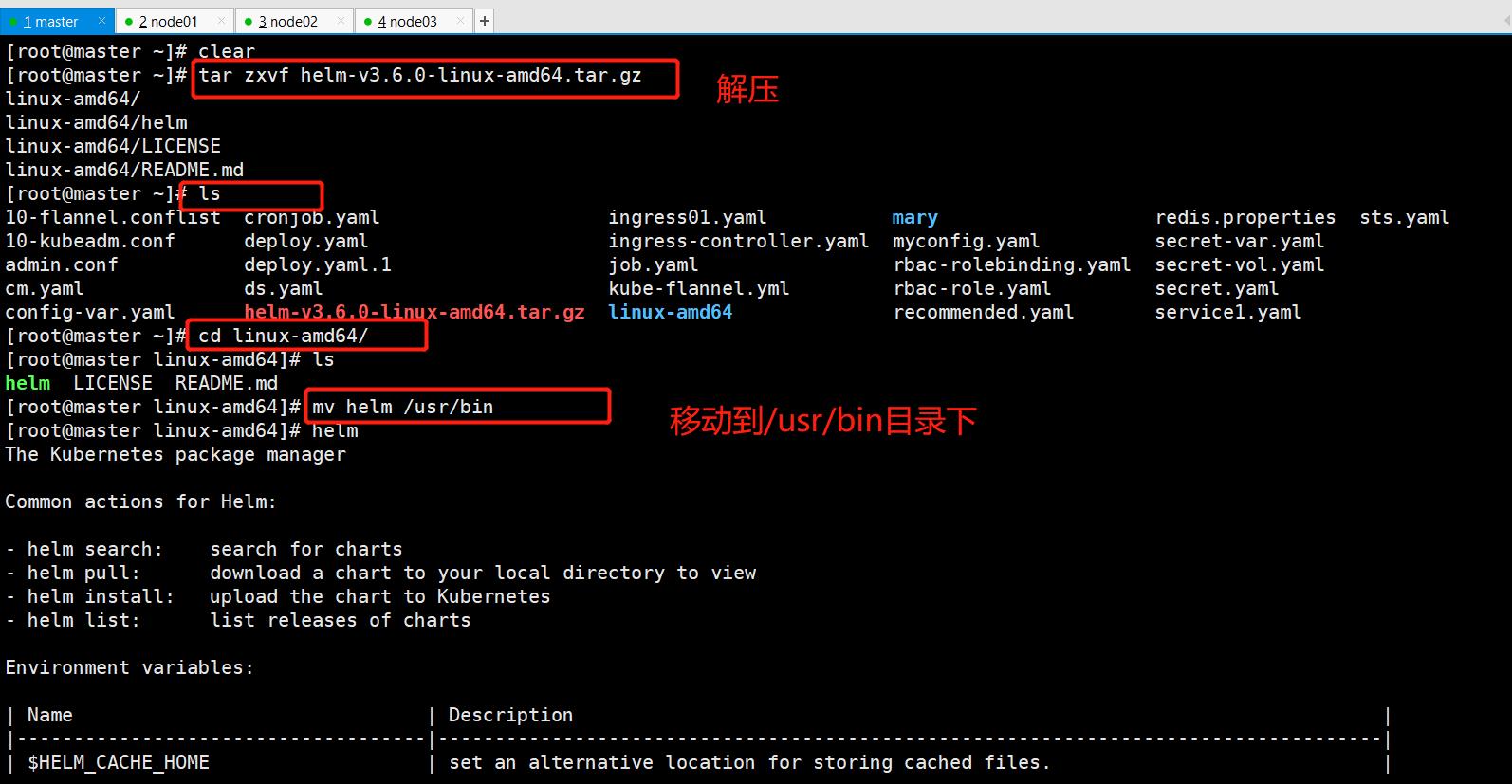

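Step 1: Download the Helm release archive and upload it to the Linux machine.

Download page: Release Helm v3.6.0 · helm/helm · GitHub (https://github.com/helm/helm/releases/tag/v3.6.0)

Step 2: Extract the archive and move the extracted `helm` binary into `/usr/bin`.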

```shell
[root@master ~]# tar zxvf helm-v3.6.0-linux-amd64.tar.gz
linux-amd64/
linux-amd64/helm
linux-amd64/LICENSE
linux-amd64/README.md
[root@master ~]# ls
10-flannel.conflist  cronjob.yaml                    ingress01.yaml           mary                   redis.properties  sts.yaml
10-kubeadm.conf      deploy.yaml                     ingress-controller.yaml  myconfig.yaml          secret-var.yaml
admin.conf           deploy.yaml.1                   job.yaml                 rbac-rolebinding.yaml  secret-vol.yaml
cm.yaml              ds.yaml                         kube-flannel.yml         rbac-role.yaml         secret.yaml
config-var.yaml      helm-v3.6.0-linux-amd64.tar.gz  linux-amd64              recommended.yaml       service1.yaml
[root@master ~]# cd linux-amd64/
[root@master linux-amd64]# ls
helm  LICENSE  README.md
[root@master linux-amd64]# mv helm /usr/bin
[root@master linux-amd64]# helm    # prints the list of available commands
```

7. Configuring Helm repositories

(1) Add a repository

`helm repo add <repo-name> <repo-url>`

```shell
# Add the Microsoft (Azure China mirror) repository
[root@master linux-amd64]# helm repo add stable http://mirror.azure.cn/kubernetes/charts/
"stable" has been added to your repositories
[root@master linux-amd64]# helm repo list    # list configured repositories
NAME    URL
stable  http://mirror.azure.cn/kubernetes/charts/
# Add the Alibaba Cloud repository
[root@master linux-amd64]# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
"aliyun" has been added to your repositories
[root@master linux-amd64]# helm repo list
NAME    URL
stable  http://mirror.azure.cn/kubernetes/charts/
aliyun  https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
[root@master linux-amd64]# helm repo update    # refresh the local chart index
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "aliyun" chart repository
...Successfully got an update from the "stable" chart repository
Update Complete. ⎈Happy Helming!⎈
[root@master linux-amd64]# helm repo list
NAME    URL
stable  http://mirror.azure.cn/kubernetes/charts/
aliyun  https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
# Remove a repository
[root@master linux-amd64]# helm repo remove aliyun
"aliyun" has been removed from your repositories
[root@master linux-amd64]# helm repo list
NAME    URL
stable  http://mirror.azure.cn/kubernetes/charts/
[root@master linux-amd64]# helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "stable" chart repository
Update Complete. ⎈Happy Helming!⎈
```
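`helm repo add` and `helm repo remove` record repositories in a local file. On Linux this is typically `~/.config/helm/repositories.yaml`; running `helm env` and checking `HELM_REPOSITORY_CONFIG` should confirm the path on a given machine. Below is an abridged sketch of what the file might contain after the commands above. Other top-level and per-repository fields (credentials, TLS settings) are deliberately omitted because they vary between Helm versions, and the file is meant to be managed through `helm repo` rather than edited by hand.

```yaml
# ~/.config/helm/repositories.yaml (abridged sketch; typical location on Linux)
repositories:
  - name: stable                                   # name given to "helm repo add"
    url: http://mirror.azure.cn/kubernetes/charts/ # URL given to "helm repo add"
```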

Bilibili course followed: k8s教程由浅入深-尚硅谷_哔哩哔哩_bilibili (the Atguigu Kubernetes tutorial, from basics to advanced)
