TensorFlow + Keras: Implementing a Conditional GAN (CGAN) with a DCGAN Architecture, Using the MNIST and fashion_mnist Datasets as Examples

Posted Better Bench



1 Introduction

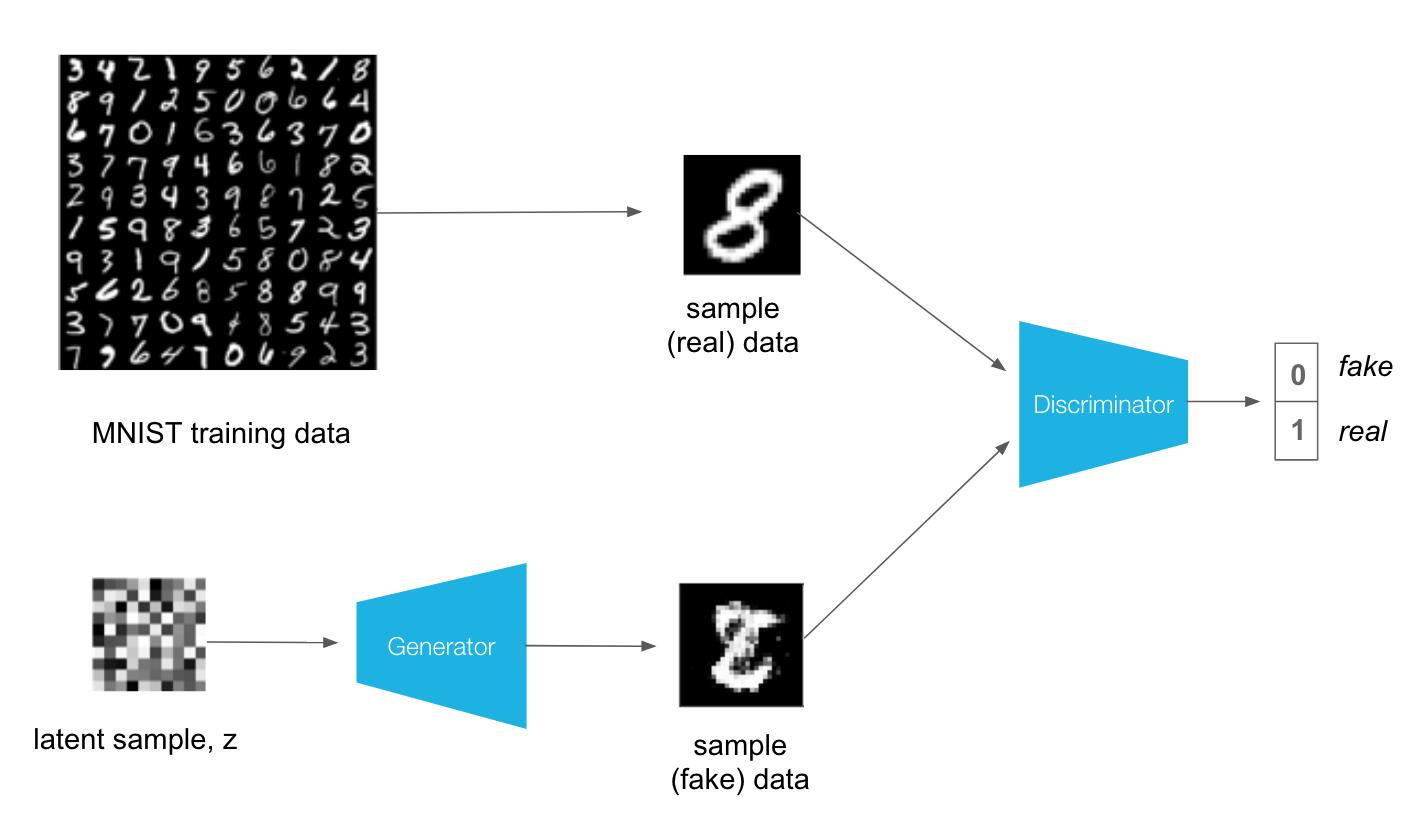

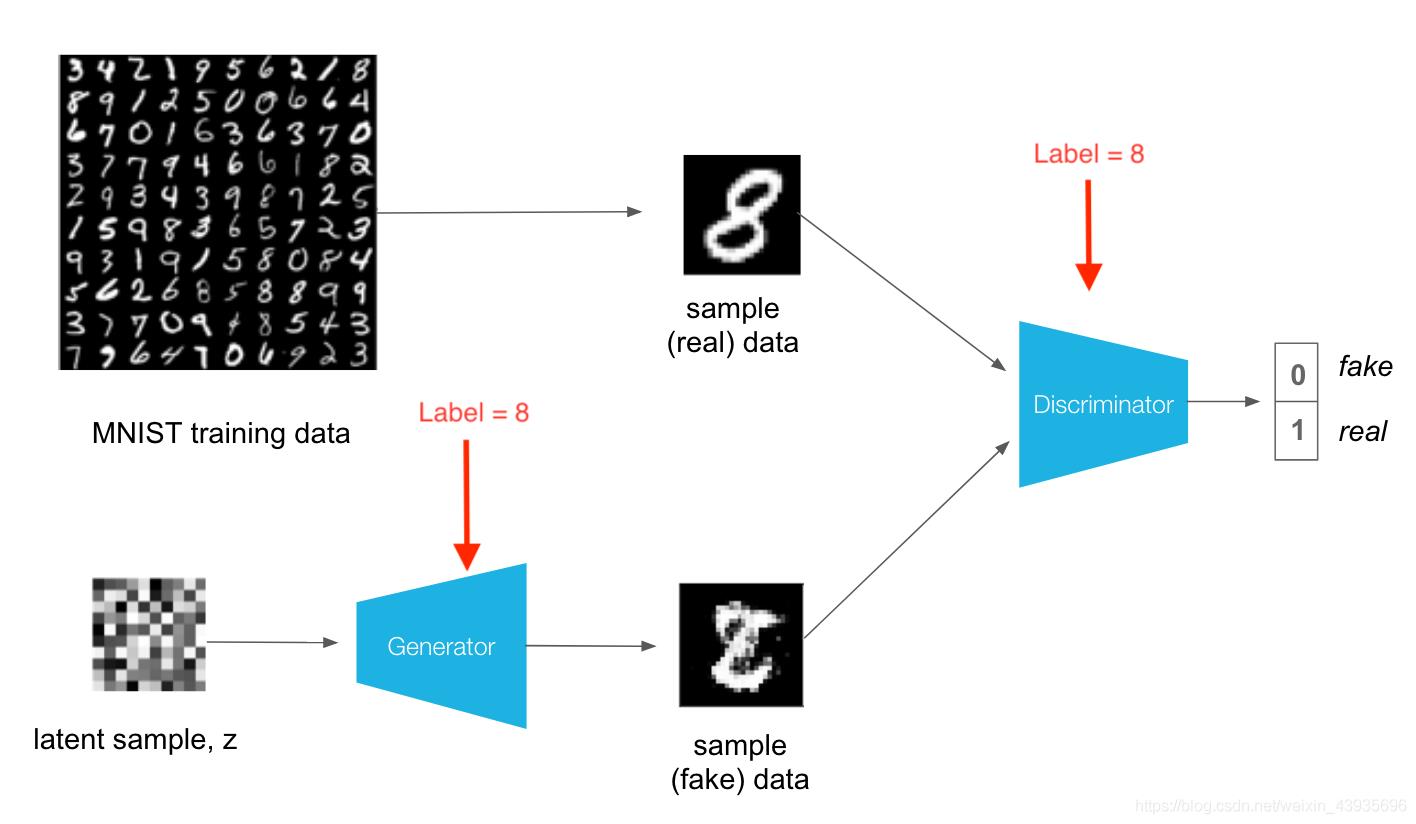

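A Conditional Generative Adversarial Net (CGAN) is an extension of the GAN.

As the figures illustrate, take the MNIST dataset as an example:

- In a GAN, training feeds a randomly initialized noise vector to the generator, which competes against a discriminator that sees the original images. The result is a network that generates new images resembling the originals.

- In a Conditional GAN, training also feeds in the label, which tells the network that the image to generate is, say, the digit 8 rather than any other digit.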
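For reference, the difference between the two setups can also be written as a training objective. The formulation below follows the original CGAN paper by Mirza and Osindero and is not stated in this post; here $x$ is a real image, $z$ the noise vector, and $y$ the label.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x \mid y)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z \mid y))\big)\big]
```

Removing the conditioning on $y$ from both $D$ and $G$ gives back the standard GAN objective.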

2 Implementation

Main.py

The condition, i.e. the conditional input, is the label.

import numpy as np
import tensorflow as tf
from tensorflow.keras.datasets import fashion_mnist, mnist

import utils
from models import build_discriminator_model, build_generator_model

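# Dimension of the noise vector fed to the generator
noise_dim = 100

# Learning rate
learning_rate = 1e-4

# Cross-entropy is used for the Generator and Discriminator losses.
# from_logits=True because the discriminator's final Dense(1) has no sigmoid.
cross_entropy = tf.keras.losses.BinaryCrossentropy(from_logits=True)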

# Choose which dataset to use
dataset = 'fashion_mnist'

# elif keeps the 'mnist' choice from falling through to the error branch
if dataset == 'mnist':
    (X_train, y_train), (X_test, y_test) = mnist.load_data()
elif dataset == 'fashion_mnist':
    (X_train, y_train), (X_test, y_test) = fashion_mnist.load_data()
else:
    raise RuntimeError('Dataset not found')

# Normalize the data
X_train, X_test = utils.normalize(X_train, X_test)

# Initialize G and D
discriminator = build_discriminator_model()
generator = build_generator_model()

# Data normalization helper. Main.py calls it as utils.normalize, so this
# definition presumably lives in utils.py.
def normalize(train, test):
    # Convert from integers to floats
    train_norm = train.astype('float32')
    test_norm = test.astype('float32')
    # Normalize to the range 0-1
    train_norm = train_norm / 255.0
    test_norm = test_norm / 255.0
    # Return the normalized images
    return train_norm, test_norm
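The generator defined later in this post ends in a tanh activation, whose outputs lie in [-1, 1], while the helper above scales the real images to [0, 1]. One common way to make the two ranges agree is to scale the real images to [-1, 1] instead. The sketch below shows that variant under this assumption; `normalize_tanh` is a hypothetical name and not part of the original code.

```python
import numpy as np

def normalize_tanh(train, test):
    """Scale uint8 images from [0, 255] to [-1, 1] (hypothetical variant)."""
    train_norm = (train.astype("float32") - 127.5) / 127.5
    test_norm = (test.astype("float32") - 127.5) / 127.5
    return train_norm, test_norm

# Stand-in data with the same dtype and layout as the MNIST arrays
fake_train = np.array([[[0, 255], [128, 64]]], dtype=np.uint8)
fake_test = np.zeros((1, 2, 2), dtype=np.uint8)

train_scaled, test_scaled = normalize_tanh(fake_train, fake_test)
print(train_scaled.min(), train_scaled.max())
```

Whether this change improves the results here is not something the post reports; it only removes the mismatch between the two value ranges.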

# Optimizers for the generator and the discriminator
generator_optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)
discriminator_optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)

# Discriminator loss: real images should score 1, generated images 0
def discriminator_loss(real_output, fake_output):
    real_loss = cross_entropy(tf.ones_like(real_output), real_output)
    fake_loss = cross_entropy(tf.zeros_like(fake_output), fake_output)
    total_loss = real_loss + fake_loss
    return total_loss

# Generator loss: the generator wants its images to be scored as real (1)
def generator_loss(fake_output):
    return cross_entropy(tf.ones_like(fake_output), fake_output)
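To make the two loss functions above concrete, here is a minimal NumPy sketch of the same arithmetic. It assumes the usual numerically stable form of binary cross-entropy on logits, averaged over the batch. The names `bce_from_logits`, `discriminator_loss_np` and `generator_loss_np` are hypothetical and only mirror the Keras versions for illustration.

```python
import numpy as np

def bce_from_logits(targets, logits):
    """Mean binary cross-entropy computed from raw logits (numerically stable)."""
    logits = np.asarray(logits, dtype=np.float64)
    targets = np.asarray(targets, dtype=np.float64)
    # max(x, 0) - x * t + log(1 + exp(-|x|)) equals
    # -[t * log(sigmoid(x)) + (1 - t) * log(1 - sigmoid(x))]
    per_sample = (np.maximum(logits, 0) - logits * targets
                  + np.log1p(np.exp(-np.abs(logits))))
    return per_sample.mean()

def discriminator_loss_np(real_logits, fake_logits):
    real_loss = bce_from_logits(np.ones_like(real_logits), real_logits)
    fake_loss = bce_from_logits(np.zeros_like(fake_logits), fake_logits)
    return real_loss + fake_loss

def generator_loss_np(fake_logits):
    # Fakes are labelled 1: the generator is rewarded when D is fooled
    return bce_from_logits(np.ones_like(fake_logits), fake_logits)

# A discriminator that is confident and correct on this batch
real_logits = np.array([3.0, 2.0])
fake_logits = np.array([-3.0, -2.0])
d_loss = discriminator_loss_np(real_logits, fake_logits)
g_loss = generator_loss_np(fake_logits)
print(d_loss, g_loss)
```

When the discriminator separates the two batches well, its own loss is small and the generator's loss is large, which is the tension the training loop tries to balance.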

# Save the models
def save_models(epochs, learning_rate):
    generator.save(f'generator-epochs-{epochs}-learning_rate-{learning_rate}.h5')
    discriminator.save(f'discriminator-epochs-{epochs}-learning_rate-{learning_rate}.h5')

# Training step. It runs eagerly (no @tf.function): the NumPy sampling below
# would be frozen at trace time inside a traced graph.
def train_step(batch_size=512):
    # Draw random indices to sample a batch from the training data
    idx = np.random.randint(0, X_train.shape[0], batch_size)
    # Randomly sampled training batch
    Xtrain, labels = X_train[idx], y_train[idx]

    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        # Random noise vectors, one per image in the batch
        z = np.random.normal(0, 1, size=(batch_size, noise_dim)).astype('float32')
        # Pass the noise through the generator to produce images, with the
        # label as the condition (the label is embedded inside the generator)
        generated_images = generator([z, labels], training=True)
        real_output = discriminator([Xtrain, labels], training=True)
        fake_output = discriminator([generated_images, labels], training=True)
        gen_loss = generator_loss(fake_output)
        disc_loss = discriminator_loss(real_output, fake_output)

    # Print the G and D losses
    tf.print('Generator loss:', gen_loss, 'Discriminator loss:', disc_loss)

    gradients_of_generator = gen_tape.gradient(gen_loss, generator.trainable_variables)
    gradients_of_discriminator = disc_tape.gradient(disc_loss, discriminator.trainable_variables)

    # Apply the gradients
    generator_optimizer.apply_gradients(zip(gradients_of_generator, generator.trainable_variables))
    discriminator_optimizer.apply_gradients(zip(gradients_of_discriminator, discriminator.trainable_variables))
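The step above draws its indices with `np.random.randint`, which samples with replacement, so a batch can contain the same image twice and a single call does not sweep the whole dataset. If a full pass over the data per epoch is wanted, one option is to shuffle the indices once and slice them into batches. A minimal NumPy sketch of that idea follows; `iterate_minibatches` is a hypothetical helper, not part of the original code.

```python
import numpy as np

def iterate_minibatches(images, labels, batch_size, rng):
    """Yield (images, labels) batches that together cover the data exactly once."""
    order = rng.permutation(images.shape[0])
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        yield images[idx], labels[idx]

rng = np.random.default_rng(0)
# Small stand-in arrays shaped like (N, 28, 28) images and (N,) labels
images = np.zeros((10, 28, 28), dtype=np.float32)
labels = np.arange(10)

seen = []
batch_sizes = []
for batch_images, batch_labels in iterate_minibatches(images, labels, 4, rng):
    seen.extend(batch_labels.tolist())
    batch_sizes.append(batch_images.shape[0])
print(sorted(seen), batch_sizes)
```

Using it with the training code would require a variant of `train_step` that receives the batch as arguments instead of sampling it internally.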

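if __name__ == "__main__":
    epochs = 100
    for epoch in range(1, epochs + 1):
        print(f'Epoch {epoch}/{epochs}')
        # Each "epoch" here is a single training step on one random batch
        train_step()
        # Save every 500 epochs and also after the last one; with epochs = 100
        # the modulo test alone would never fire
        if epoch % 500 == 0 or epoch == epochs:
            save_models(epoch, learning_rate)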
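Once training has finished, the point of the conditional setup is that a chosen class can be requested from the generator. The sketch below shows one way to do that, assuming the generator is called as `generator([z, labels], training=False)` and outputs values in [-1, 1]. `sample_class` is a hypothetical helper, and `fake_generator` is only a stand-in so the example runs without a trained model.

```python
import numpy as np

def sample_class(generator, label, n, noise_dim=100, rng=None):
    """Generate n images of one class and map them from [-1, 1] to uint8."""
    if rng is None:
        rng = np.random.default_rng()
    z = rng.normal(0.0, 1.0, size=(n, noise_dim)).astype("float32")
    # One class id per sample, shaped (n, 1) like the label input
    labels = np.full((n, 1), label, dtype="int32")
    images = np.asarray(generator([z, labels], training=False))
    # Rescale the tanh range [-1, 1] to [0, 255] for display
    pixels = np.clip((images + 1.0) * 127.5, 0, 255)
    return pixels.astype("uint8")

def fake_generator(inputs, training=False):
    """Stand-in for the trained model: returns all -1 "images"."""
    z, labels = inputs
    return -np.ones((z.shape[0], 28, 28, 1), dtype="float32")

digits = sample_class(fake_generator, label=8, n=4, rng=np.random.default_rng(0))
print(digits.shape, digits.dtype)
```

With a trained model, the Keras generator itself, or one reloaded with `tf.keras.models.load_model`, would take the place of `fake_generator`.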

models.py

The model uses a deep convolutional GAN (DCGAN) architecture.

import numpy as np
import tensorflow as tf
from tensorflow.keras import layers
from tensorflow.keras.models import Model

WIDTH, HEIGHT = 28, 28
num_classes = 10
img_channel = 1
img_shape = (WIDTH, HEIGHT, img_channel)
noise_dim = 100

def build_generator_model():
    model = tf.keras.Sequential()
    # Project the conditioned noise vector and reshape it to a 7x7x256 feature map
    model.add(layers.Dense(7*7*256, use_bias=False, input_shape=(noise_dim,)))
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())
    model.add(layers.Reshape((7, 7, 256)))

    # 7x7 -> 7x7
    model.add(layers.Conv2DTranspose(128, (1, 1), strides=(1, 1), padding='same', use_bias=False))
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())

    # 7x7 -> 14x14
    model.add(layers.Conv2DTranspose(64, (3, 3), strides=(2, 2), padding='same', use_bias=False))
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())

    # 14x14 -> 28x28; tanh keeps the pixel values in [-1, 1]
    model.add(layers.Conv2DTranspose(1, (5, 5), strides=(2, 2), padding='same', use_bias=False, activation='tanh'))

    z = layers.Input(shape=(noise_dim,))
    label = layers.Input(shape=(1,))
    # Embed the label as a noise_dim vector and merge it with the noise
    # by element-wise multiplication
    label_embedding = layers.Embedding(num_classes, noise_dim)(label)
    label_embedding = layers.Flatten()(label_embedding)
    joined = layers.multiply([z, label_embedding])
    img = model(joined)
    return Model([z, label], img)
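The conditioning step in the generator above (embedding lookup, flatten, element-wise multiply) can be illustrated with plain NumPy. This is only a sketch of the arithmetic: `embedding_table` stands in for the weights that the Keras `Embedding` layer would learn, and its random values are an assumption for illustration.

```python
import numpy as np

num_classes, noise_dim = 10, 100
rng = np.random.default_rng(0)

# Stand-in for the Embedding layer's weight matrix: one row per class
embedding_table = rng.normal(size=(num_classes, noise_dim)).astype("float32")

# Batch of noise vectors and one class id per sample
z = rng.normal(size=(4, noise_dim)).astype("float32")
labels = np.array([8, 8, 3, 0])

# Lookup gives shape (4, 100); the product has the same shape as the noise
label_embedding = embedding_table[labels]
joined = z * label_embedding

print(joined.shape)
```

The first two samples share the class 8 embedding but use different noise, so the generator receives two different inputs that both carry the same class information.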

def build_discriminator_model():
    model = tf.keras.Sequential()
    # Two input channels: the image plus the label embedding reshaped to an image
    model.add(layers.Conv2D(64, (5, 5), strides=(2, 2), padding='same',
                            input_shape=[28, 28, 2]))
    model.add(layers.LeakyReLU())
    model.add(layers.Dropout(0.3))

    model.add(layers.Conv2D(128, (5, 5), strides=(2, 2), padding='same'))
    model.add(layers.LeakyReLU())
    model.add(layers.Dropout(0.3))

    model.add(layers.Flatten())
    # Single logit with no sigmoid, matching from_logits=True in the loss
    model.add(layers.Dense(1))

    img = layers.Input(shape=img_shape)
    label = layers.Input(shape=(1,))
    # Embed the label as a vector with one value per pixel, reshape it to
    # the image shape and stack it on the image as an extra channel
    label_embedding = layers.Embedding(input_dim=num_classes, output_dim=int(np.prod(img_shape)))(label)
    label_embedding = layers.Flatten()(label_embedding)
    label_embedding = layers.Reshape(img_shape)(label_embedding)
    concat = layers.Concatenate(axis=-1)([img, label_embedding])
    prediction = model(concat)
    return Model([img, label], prediction)
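The layer settings in the two networks above determine the spatial sizes at each stage. With `padding='same'`, a strided `Conv2D` produces `ceil(size / stride)` and a `Conv2DTranspose` produces `size * stride`. The short sketch below traces those sizes; the helper names are hypothetical.

```python
import math

def conv_same_out(size, stride):
    """Output size of Conv2D with padding='same'."""
    return math.ceil(size / stride)

def conv_transpose_same_out(size, stride):
    """Output size of Conv2DTranspose with padding='same'."""
    return size * stride

# Generator: 7x7 feature map upsampled by strides 1, 2, 2
g_sizes = [7]
for stride in (1, 2, 2):
    g_sizes.append(conv_transpose_same_out(g_sizes[-1], stride))

# Discriminator: 28x28 input downsampled by strides 2, 2
d_sizes = [28]
for stride in (2, 2):
    d_sizes.append(conv_same_out(d_sizes[-1], stride))

# Number of features entering the final Dense(1) layer (128 filters)
flattened = d_sizes[-1] * d_sizes[-1] * 128
print(g_sizes, d_sizes, flattened)
```

The generator ends at 28, which matches `WIDTH` and `HEIGHT`, so the generated images can be fed to the discriminator alongside the real ones.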
