Writing an HDFS Java Client

Posted by 张曼


Foreword: This article was compiled by the editors of 小常识网 (cha138.com). It mainly covers writing an HDFS Java client, and we hope it is of some reference value to you.

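Summary: The textbook I studied earlier only showed the key lines of an HDFS Java client, never a complete program. Enough small talk; on to the main text.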

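1. Developing the Hadoop Java client on Linux is recommended.

2. Eclipse, IntelliJ IDEA, or another IDE can be used; IDEA is currently the more popular choice.

3. After creating the project you need to add a lot of jars to the build path; the way to add them differs slightly between Windows and Linux.

4. When you run the code you may hit problems such as the HDFS path not being recognized (a "Wrong FS" error) and permission errors.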
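Point 3 mentions adding many jars. Before writing any HDFS code, a quick way to confirm the build path is complete is to try loading a few core Hadoop classes by name. The following is a minimal JDK-only sketch (Java 9+ for `List.of`); the class `HadoopClasspathCheck` is hypothetical, and which jar provides each Hadoop class depends on the Hadoop version.

```java
import java.util.List;

/**
 * Hypothetical helper: reports whether a few core Hadoop client classes
 * can be loaded, i.e. whether the needed jars are on the classpath.
 */
public class HadoopClasspathCheck {

    // Returns true if the named class can be found by this class's loader.
    // initialize=false: only locate the class, do not run its static initializers.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, HadoopClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        List<String> required = List.of(
                "org.apache.hadoop.conf.Configuration",          // hadoop-common
                "org.apache.hadoop.fs.FileSystem",               // hadoop-common
                "org.apache.hadoop.hdfs.DistributedFileSystem"); // HDFS jars, not hadoop-common
        for (String name : required) {
            System.out.println(name + (isOnClasspath(name) ? " : found" : " : MISSING"));
        }
    }
}
```

If `DistributedFileSystem` is reported missing while the other two are found, the HDFS jars were most likely left out of the build path.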

Details:

1. First, test downloading a file from HDFS:

The download code (it downloads hdfs://localhost:9000/jdk-7u65-linux-i586.tar.gz to the local file /opt/download/doload.tgz):

```java
package cn.qlq.hdfs;

import java.io.FileOutputStream;
import java.io.IOException;

import org.apache.commons.compress.utils.IOUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HdfsUtil {

    public static void main(String[] args) throws IOException {
        // download a file from HDFS to the local disk
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(conf);
        Path path = new Path("hdfs://localhost:9000/jdk-7u65-linux-i586.tar.gz");
        // try-with-resources closes both streams once the copy finishes
        try (FSDataInputStream input = fs.open(path);
             FileOutputStream output = new FileOutputStream("/opt/download/doload.tgz")) {
            IOUtils.copy(input, output);
        }
    }
}
```
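The download code copies bytes with `IOUtils.copy` from commons-compress. Roughly, such a helper reads the input into a buffer and writes it out until end of stream; as far as I know it does not close either stream, so the caller is responsible for closing them (try-with-resources is the usual way). Below is a JDK-only sketch of that loop; `StreamCopy` is a hypothetical name, not the library class.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical stand-in for a buffered stream copy such as IOUtils.copy. */
public class StreamCopy {

    // Copies everything from in to out through a fixed-size buffer and returns
    // the number of bytes copied. It does NOT close either stream.
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[8192];
        long total = 0;
        int n;
        while ((n = in.read(buffer)) != -1) {
            out.write(buffer, 0, n);
            total += n;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        // try-with-resources closes both streams even if copy throws
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long copied = copy(in, out);
            System.out.println(copied + " bytes: " + out.toString("UTF-8")); // 10 bytes: hello hdfs
        }
    }
}
```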

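Running it directly fails with the error below.

The cause: with no configuration on the classpath, fs.defaultFS falls back to its default value file:///, so FileSystem.get(conf) returns the local file system, and the local file system does not accept a path such as hdfs://localhost:9000/jdk-7u65-linux-i586.tar.gz (hence "expected: file:///").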

```
log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).
log4j:WARN Please initialize the log4j system properly.
log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
Exception in thread "main" java.lang.IllegalArgumentException: Wrong FS: hdfs://localhost:9000/jdk-7u65-linux-i586.tar.gz, expected: file:///
	at org.apache.hadoop.fs.FileSystem.checkPath(FileSystem.java:643)
	at org.apache.hadoop.fs.RawLocalFileSystem.pathToFile(RawLocalFileSystem.java:79)
	at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:506)
	at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:724)
	at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:501)
	at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:397)
	at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSInputChecker.<init>(ChecksumFileSystem.java:137)
	at org.apache.hadoop.fs.ChecksumFileSystem.open(ChecksumFileSystem.java:339)
	at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:764)
	at cn.qlq.hdfs.HdfsUtil.main(HdfsUtil.java:21)
```
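The "Wrong FS" message comes from a check that compares the path's scheme and authority with those of the file system instance. The following is a simplified, hypothetical re-creation of that idea using only `java.net.URI`; it is not Hadoop's code (the real `FileSystem.checkPath` also handles default ports and other cases), and `WrongFsDemo` is an illustrative name.

```java
import java.net.URI;
import java.util.Objects;

/**
 * Simplified, hypothetical version of the check behind
 * "Wrong FS: ..., expected: ...". Not Hadoop's implementation.
 */
public class WrongFsDemo {

    // Accept a path with no scheme (resolved against the default FS),
    // or one whose scheme and authority match the file system's URI.
    static void checkPath(URI fsUri, URI path) {
        String scheme = path.getScheme();
        if (scheme == null) {
            return;
        }
        boolean sameScheme = scheme.equalsIgnoreCase(fsUri.getScheme());
        boolean sameAuthority = Objects.equals(lower(path.getAuthority()), lower(fsUri.getAuthority()));
        if (!sameScheme || !sameAuthority) {
            throw new IllegalArgumentException("Wrong FS: " + path + ", expected: " + fsUri);
        }
    }

    private static String lower(String s) {
        return s == null ? null : s.toLowerCase();
    }

    public static void main(String[] args) {
        URI file = URI.create("hdfs://localhost:9000/jdk-7u65-linux-i586.tar.gz");
        // No core-site.xml on the classpath: the default file system is file:///
        try {
            checkPath(URI.create("file:///"), file);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());
        }
        // With fs.defaultFS = hdfs://localhost:9000 the same path is accepted
        checkPath(URI.create("hdfs://localhost:9000"), file);
        System.out.println("accepted");
    }
}
```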

Solutions:

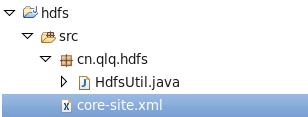

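- Option 1: Copy core-site.xml from the etc directory of the Hadoop installation (etc/hadoop in Hadoop 2.x) into the src directory of the Eclipse project, so that it ends up on the classpath where `new Configuration()` loads it. The error then goes away.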

Result:

```
[root@localhost download]# ll
total 140224
-rw-r--r--. 1 root root 143588167 Apr 20 05:55 doload.tgz
[root@localhost download]# pwd
/opt/download
```
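Option 1 works only if core-site.xml really lands on the runtime classpath, since Hadoop's `Configuration` looks it up as a classpath resource. A quick way to check is to ask the class loader for the resource by name. This is a minimal JDK-only sketch; `CoreSiteLookup` is a hypothetical name, and preferring the thread context class loader is an assumption modeled on common practice.

```java
import java.net.URL;

/** Hypothetical check: is core-site.xml visible on the classpath? */
public class CoreSiteLookup {

    // Looks a resource up by name, preferring the thread context class loader.
    static URL find(String resourceName) {
        ClassLoader cl = Thread.currentThread().getContextClassLoader();
        if (cl == null) {
            cl = CoreSiteLookup.class.getClassLoader();
        }
        return cl.getResource(resourceName);
    }

    public static void main(String[] args) {
        URL url = find("core-site.xml");
        System.out.println(url == null
                ? "core-site.xml NOT on classpath: fs.defaultFS stays at its default file:///"
                : "core-site.xml found at " + url);
    }
}
```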

- Option 2: Set the value directly in the code

First, look at the contents of core-site.xml (the file that defines fs.defaultFS):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/hadoop/hadoop-2.4.1/data/</value>
    </property>
</configuration>
```
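The file above is a flat list of `<property>` entries, each with a `<name>` and a `<value>`. To see what a given site file actually sets for fs.defaultFS, it can be read with the JDK's DOM parser. A minimal sketch; `SiteXmlReader` is hypothetical, returns the first match, and ignores things Hadoop's real `Configuration` handles, such as later resources overriding earlier ones and `final` properties.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

/** Hypothetical reader for the <property><name>/<value> pairs of a *-site.xml file. */
public class SiteXmlReader {

    // Returns the <value> of the first <property> whose <name> equals key, or null if absent.
    static String readProperty(InputStream xml, String key)
            throws IOException, SAXException, ParserConfigurationException {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(xml);
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() == 0 || values.getLength() == 0) {
                continue; // skip malformed entries
            }
            if (names.item(0).getTextContent().trim().equals(key)) {
                return values.item(0).getTextContent().trim();
            }
        }
        return null;
    }

    public static void main(String[] args) throws Exception {
        String xml = "<configuration>"
                + "<property><name>fs.defaultFS</name><value>hdfs://localhost:9000</value></property>"
                + "<property><name>hadoop.tmp.dir</name><value>/opt/hadoop/hadoop-2.4.1/data/</value></property>"
                + "</configuration>";
        InputStream in = new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8));
        System.out.println(readProperty(in, "fs.defaultFS")); // hdfs://localhost:9000
    }
}
```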

Change the code to:

```java
public static void main(String[] args) throws IOException {
    // download a file from HDFS to the local disk
    Configuration conf = new Configuration();
    // point the default file system at the NameNode (overrides any value read from core-site.xml)
    conf.set("fs.defaultFS", "hdfs://localhost:9000");
    FileSystem fs = FileSystem.get(conf);
    Path path = new Path("hdfs://localhost:9000/jdk-7u65-linux-i586.tar.gz");
    // try-with-resources closes both streams once the copy finishes
    try (FSDataInputStream input = fs.open(path);
         FileOutputStream output = new FileOutputStream("/opt/download/doload.tgz")) {
        IOUtils.copy(input, output);
    }
}
```
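Option 2 works because a value set in code takes precedence over values loaded from the site files, which in turn take precedence over the built-in default (fs.defaultFS defaults to file:///). That layering can be modeled with `java.util.Properties`, whose constructor accepts a table of defaults. A minimal sketch; `LayeredConfDemo` and `layer` are hypothetical and only model the precedence, not Hadoop's `Configuration` class.

```java
import java.util.Properties;

/**
 * Hypothetical model of layered configuration: built-in defaults, then
 * values read from a site file, then values set in code. Later layers win.
 */
public class LayeredConfDemo {

    // Builds a table whose lookups fall back to `lower`, with `upper`'s entries shadowing it.
    static Properties layer(Properties lower, Properties upper) {
        Properties merged = new Properties(lower);
        for (String key : upper.stringPropertyNames()) {
            merged.setProperty(key, upper.getProperty(key));
        }
        return merged;
    }

    public static void main(String[] args) {
        Properties defaults = new Properties();
        defaults.setProperty("fs.defaultFS", "file:///"); // Hadoop's built-in default value

        Properties noSiteFile = new Properties();          // core-site.xml not on the classpath
        Properties conf = layer(defaults, noSiteFile);
        System.out.println(conf.getProperty("fs.defaultFS")); // file:///

        conf.setProperty("fs.defaultFS", "hdfs://localhost:9000"); // plays the role of conf.set(...)
        System.out.println(conf.getProperty("fs.defaultFS")); // hdfs://localhost:9000
    }
}
```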

2. The following code demonstrates the basic HDFS operations:

```java
package cn.qlq.hdfs;

import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.commons.compress.utils.IOUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class HdfsUtil {

    private FileSystem fs = null;

    @Before
    public void before() throws IOException, InterruptedException, URISyntaxException {
        // Reads the xxx-site.xml config files on the classpath, parses them and stores the values in conf
        Configuration conf = new Configuration();
        // Values can also be set manually in code; they override the values read from the config files
        conf.set("fs.defaultFS", "hdfs://localhost:9000/");
        // Based on the configuration, get a client instance for the concrete file system, acting as user "root"
        fs = FileSystem.get(new URI("hdfs://localhost:9000/"), conf, "root");
    }

    @After
    public void after() throws IOException {
        // release the client after each test
        if (fs != null) {
            fs.close();
        }
    }

    /**
     * Upload a file (low-level stream version).
     */
    @Test
    public void upload() throws IOException {
        Path dst = new Path("hdfs://localhost:9000/aa/qingshu.txt");
        // the HDFS output stream must be closed, otherwise the data may not be fully written
        try (FSDataOutputStream os = fs.create(dst);
             FileInputStream is = new FileInputStream("/opt/download/haha.txt")) {
            IOUtils.copy(is, os);
        }
    }

    /**
     * Upload a file (convenience method).
     */
    @Test
    public void upload2() throws IOException {
        fs.copyFromLocalFile(new Path("/opt/download/haha.txt"), new Path("hdfs://localhost:9000/aa/qingshu2.txt"));
    }

    /**
     * Download a file (low-level stream version).
     */
    @Test
    public void download() throws IOException {
        Path path = new Path("hdfs://localhost:9000/jdk-7u65-linux-i586.tar.gz");
        try (FSDataInputStream input = fs.open(path);
             FileOutputStream output = new FileOutputStream("/opt/download/doload.tgz")) {
            IOUtils.copy(input, output);
        }
    }

    /**
     * Download a file (convenience method).
     */
    @Test
    public void download2() throws IOException {
        fs.copyToLocalFile(new Path("hdfs://localhost:9000/aa/qingshu2.txt"), new Path("/opt/download/haha2.txt"));
    }

    /**
     * List file information.
     */
    @Test
    public void listFiles() throws IOException {
        // listFiles lists files only (not directories) and supports recursive traversal
        RemoteIterator<LocatedFileStatus> files = fs.listFiles(new Path("/"), true);
        while (files.hasNext()) {
            LocatedFileStatus file = files.next();
            Path filePath = file.getPath();
            String fileName = filePath.getName();
            System.out.println(fileName);
        }
        System.out.println("---------------------------------");
        // listStatus lists both files and directories, but has no built-in recursion
        FileStatus[] listStatus = fs.listStatus(new Path("/"));
        for (FileStatus status : listStatus) {
            String name = status.getPath().getName();
            System.out.println(name + (status.isDirectory() ? " is dir" : " is file"));
        }
    }

    /**
     * Create a directory (missing parent directories are created too).
     */
    @Test
    public void mkdir() throws IOException {
        fs.mkdirs(new Path("/aaa/bbb/ccc"));
    }

    /**
     * Delete a file or directory (true = delete recursively).
     */
    @Test
    public void rm() throws IOException {
        fs.delete(new Path("/aa"), true);
    }
}
```
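The difference between `listFiles(path, true)` and `listStatus(path)` in the test class, together with `mkdirs` and recursive `delete`, has a close analogue on the local disk in `java.nio.file`. The sketch below is that analogy only; it does not talk to HDFS, `ListingDemo` and its methods are hypothetical names, and it needs Java 11+ for `Files.writeString`.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/**
 * Local-disk analogy (java.nio, not HDFS) for the two listing styles:
 * recursive files-only, like fs.listFiles(path, true), and one level of
 * files plus directories, like fs.listStatus(path).
 */
public class ListingDemo {

    // Recursive; reports regular files only (directories are walked, not reported).
    static List<String> listFilesRecursive(Path root) throws IOException {
        try (Stream<Path> s = Files.walk(root)) {
            return s.filter(Files::isRegularFile)
                    .map(p -> p.getFileName().toString())
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    // One level only; reports both files and directories.
    static List<String> listStatus(Path dir) throws IOException {
        try (Stream<Path> s = Files.list(dir)) {
            return s.map(p -> p.getFileName() + (Files.isDirectory(p) ? " is dir" : " is file"))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    // Recursive delete, deepest entries first (like fs.delete(path, true)).
    static void deleteRecursive(Path root) throws IOException {
        try (Stream<Path> s = Files.walk(root)) {
            for (Path p : s.sorted(Comparator.reverseOrder()).collect(Collectors.toList())) {
                Files.delete(p);
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Path root = Files.createTempDirectory("listing-demo");
        Files.createDirectories(root.resolve("aaa/bbb/ccc")); // like fs.mkdirs
        Files.writeString(root.resolve("top.txt"), "x");
        Files.writeString(root.resolve("aaa/bbb/deep.txt"), "y");

        System.out.println(listFilesRecursive(root)); // [deep.txt, top.txt]
        System.out.println(listStatus(root));         // [aaa is dir, top.txt is file]

        deleteRecursive(root);
    }
}
```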
