Java Client Code for HDFS Operations (Listing and Creating Files)

Posted by Mr.Zhao


1. Java code for uploading a file to HDFS (put):

    package Hdfs;

    import java.io.FileInputStream;
    import java.io.FileNotFoundException;
    import java.io.IOException;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    public class FileSystemTest {
        public static void main(String[] args) throws Exception {
            // Note: the Hadoop user must be one that has permission on the cluster file system.
            System.setProperty("HADOOP_USER_NAME", "root");
            System.setProperty("HADOOP_USER_PASSWORD", "neusoft");
            FileSystem fileSystem = FileSystem.newInstance(new URI("hdfs://neusoft-master:9000"), new Configuration());
            // ls(fileSystem);  // list the root directory (extracted method)
            put(fileSystem);    // upload a file
        }

        private static void put(FileSystem fileSystem) throws IOException,
                FileNotFoundException {
            // put: create the target file in HDFS and upload the image
            // C:\\Users\\SimonsZhao\\Desktop\\234967-13112015163685.jpg
            FSDataOutputStream out = fileSystem.create(new Path("/234967-13112015163685.jpg"));
            // Copy the local input stream into the HDFS output stream;
            // the final argument (true) closes both streams when the copy ends.
            IOUtils.copyBytes(new FileInputStream("C:\\Users\\SimonsZhao\\Desktop\\234967-13112015163685.jpg"),
                    out, 1024, true);
        }

        private static void ls(FileSystem fileSystem) throws Exception {
            // Equivalent of the ls command
            FileStatus[] listStatus = fileSystem.listStatus(new Path("/"));
            for (FileStatus fileStatus : listStatus) {
                System.out.println(fileStatus);
            }
        }
    }
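The upload itself is done by `IOUtils.copyBytes(in, out, 1024, true)`: the input stream is copied to the output stream through a 1024-byte buffer, and because the last argument is `true`, both streams are closed afterwards. A minimal JDK-only sketch of that behaviour follows. It is not Hadoop's implementation; the class name `StreamCopySketch` and the method `copyAndClose` are made up for illustration, and in-memory streams stand in for the local file and the HDFS file.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

class StreamCopySketch {
    // Copies everything from in to out through a buffer of bufferSize bytes,
    // then closes both streams (the close=true case). Returns the bytes copied.
    static long copyAndClose(InputStream in, OutputStream out, int bufferSize) throws IOException {
        long total = 0;
        try (InputStream input = in; OutputStream output = out) {
            byte[] buffer = new byte[bufferSize];
            int read;
            while ((read = input.read(buffer)) != -1) {
                output.write(buffer, 0, read);
                total += read;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[3000]; // stand-in for the image bytes
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long copied = copyAndClose(new ByteArrayInputStream(data), sink, 1024);
        System.out.println(copied + " bytes copied");
    }
}
```

With a 1024-byte buffer the 3000 bytes are moved in three reads, which is why the buffer size affects only the number of round trips and not the result.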

Verification on the Linux server:

Summary of problems:

(1) Permission problem:

    log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).
    log4j:WARN Please initialize the log4j system properly.
    log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
    Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=SimonsZhao, access=WRITE, inode="/234967-13112015163685.jpg":root:supergroup:-rw-r--r--
        at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:257)
        at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:238)
        at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:151)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:138)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6609)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6591)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPathAccess(FSNamesystem.java:6516)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2751)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2675)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2560)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:593)
        at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.create(AuthorizationProviderProxyClientProtocol.java:111)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:393)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1060)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1707)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
        at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)
        at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)
        at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:1730)
        at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1668)
        at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1593)
        at org.apache.hadoop.hdfs.DistributedFileSystem$6.doCall(DistributedFileSystem.java:397)
        at org.apache.hadoop.hdfs.DistributedFileSystem$6.doCall(DistributedFileSystem.java:393)
        at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
        at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:393)
        at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:337)
        at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:908)
        at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:889)
        at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:786)
        at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:775)
        at Hdfs.FileSystemTest.main(FileSystemTest.java:23)
    Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=SimonsZhao, access=WRITE, inode="/234967-13112015163685.jpg":root:supergroup:-rw-r--r--
        at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:257)
        at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:238)
        at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:151)
        at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:138)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6609)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6591)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPathAccess(FSNamesystem.java:6516)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2751)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2675)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2560)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:593)
        at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.create(AuthorizationProviderProxyClientProtocol.java:111)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:393)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1060)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1707)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

        at org.apache.hadoop.ipc.Client.call(Client.java:1468)
        at org.apache.hadoop.ipc.Client.call(Client.java:1399)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)
        at com.sun.proxy.$Proxy9.create(Unknown Source)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.create(ClientNamenodeProtocolTranslatorPB.java:295)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)
        at com.sun.proxy.$Proxy10.create(Unknown Source)
        at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:1725)
        ... 12 more

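Cause: when the program is run from Windows, the request is submitted as the local Windows account (here my personal account, SimonsZhao), which has no write permission on the cluster file system. The exception message shows this directly: user=SimonsZhao is denied WRITE access to a path owned by root:supergroup.

Solution:

Add the `System.setProperty("HADOOP_USER_NAME", "root");` call shown in the code above as the first line of the main method, so that the client identifies itself as a user that does have permission.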
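Why this works: with simple (non-Kerberos) authentication the client tells the NameNode who it is, and the Hadoop client looks for an override named HADOOP_USER_NAME before falling back to the operating-system login name. The sketch below models that lookup order as I understand it (environment variable, then JVM system property, then OS user). It is a simplification, not Hadoop's code, and the class name `EffectiveUserSketch` and method `resolve` are hypothetical.

```java
class EffectiveUserSketch {
    // Returns the first non-null candidate in the assumed lookup order:
    // environment variable, then JVM system property, then the OS login name.
    static String resolve(String envValue, String propertyValue, String osUser) {
        if (envValue != null) {
            return envValue;
        }
        if (propertyValue != null) {
            return propertyValue;
        }
        return osUser;
    }

    public static void main(String[] args) {
        // The same call the article adds as the first line of main
        System.setProperty("HADOOP_USER_NAME", "root");
        String user = resolve(System.getenv("HADOOP_USER_NAME"),
                System.getProperty("HADOOP_USER_NAME"),
                System.getProperty("user.name"));
        System.out.println("effective user: " + user);
    }
}
```

Without the property (and without the environment variable) the sketch falls through to the OS login name, which corresponds to the SimonsZhao account rejected in the stack trace.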

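(2) Fixing the problem above may also require two further changes (not yet verified): disabling permission checking in hdfs-site.xml, and opening up the permissions on /tmp. Note that on Hadoop 2.x the property is named dfs.permissions.enabled; dfs.permissions is the older name.

    <property>
      <name>dfs.permissions</name>
      <value>false</value>
    </property>

    hdfs dfs -chmod 777 /tmp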
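For readers unfamiliar with octal modes: each digit of 777 encodes read (4), write (2) and execute (1) for the owner, the group and everyone else, which is why /tmp appears as drwxrwxrwx in the directory listing further down. A small illustrative sketch of the conversion (the class name `ModeSketch` and method `toSymbolic` are made up for this example):

```java
class ModeSketch {
    // Converts a three-digit octal mode such as "777" or "644"
    // into the symbolic form printed by ls, e.g. "rwxrwxrwx".
    static String toSymbolic(String octal) {
        StringBuilder sb = new StringBuilder();
        for (char c : octal.toCharArray()) {
            int bits = c - '0';
            sb.append((bits & 4) != 0 ? 'r' : '-');
            sb.append((bits & 2) != 0 ? 'w' : '-');
            sb.append((bits & 1) != 0 ? 'x' : '-');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(toSymbolic("777")); // rwxrwxrwx
        System.out.println(toSymbolic("644")); // rw-r--r--
    }
}
```

Mode 644 corresponds to the -rw-r--r-- shown for the uploaded image: only the owner (root) may write, which is exactly what the AccessControlException reported.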

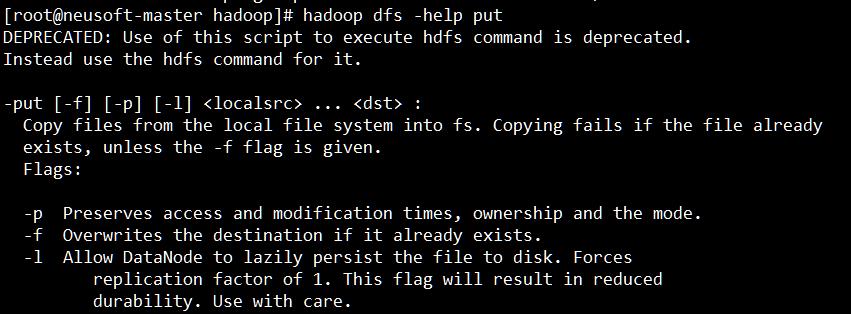

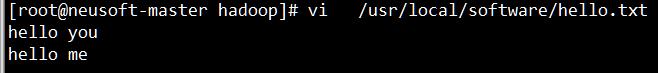

    [root@neusoft-master hadoop]# vi /usr/local/software/hello.txt
    hello you
    hello me
    [root@neusoft-master hadoop]# hadoop dfs -put -p /usr/local/software/hello.txt /hello2
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.

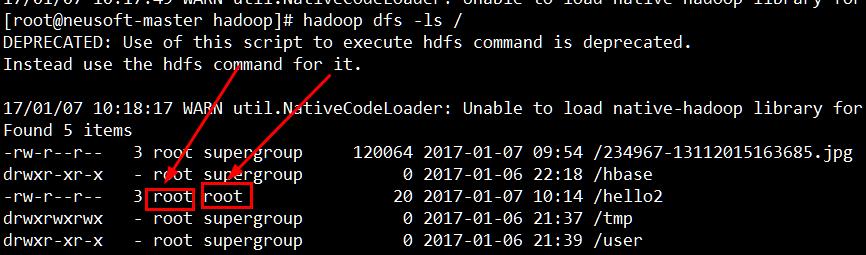

    [root@neusoft-master hadoop]# hadoop dfs -ls /
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    Found 5 items
    -rw-r--r--   3 root supergroup     120064 2017-01-07 09:54 /234967-13112015163685.jpg
    drwxr-xr-x   - root supergroup          0 2017-01-06 22:18 /hbase
    -rw-r--r--   3 root root               20 2017-01-07 10:14 /hello2
    drwxrwxrwx   - root supergroup          0 2017-01-06 21:37 /tmp
    drwxr-xr-x   - root supergroup          0 2017-01-06 21:39 /user

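In the listing above, the timestamp and group of /hello2 differ from those of the other entries: the group is root rather than supergroup, and the time is that of the file that had just been created and uploaded. This is the preserving effect of the -p option, which keeps the source file's times, ownership and permissions.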

    [root@neusoft-master hadoop]# hadoop dfs -cat /hello2
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    17/01/07 10:19:06 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    hello you
    hello me

3. Using Java to download a file from HDFS and save it locally

    package Hdfs;

    import java.io.FileInputStream;
    import java.io.FileNotFoundException;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    public class FileSystemTest {
        public static void main(String[] args) throws Exception {
            // Note: the Hadoop user must be one that has permission on the cluster file system.
            System.setProperty("HADOOP_USER_NAME", "root");
            System.setProperty("HADOOP_USER_PASSWORD", "neusoft");
            FileSystem fileSystem = FileSystem.newInstance(new URI("hdfs://neusoft-master:9000"), new Configuration());
            // ls(fileSystem);   // list the root directory (extracted method)
            // put(fileSystem);  // upload a file
            show(fileSystem);    // download the image uploaded earlier
        }

        private static void show(FileSystem fileSystem) throws IOException,
                FileNotFoundException {
            // Open the file in HDFS and copy it to a local file on the desktop
            FSDataInputStream in = fileSystem.open(new Path("/234967-13112015163685.jpg"));
            IOUtils.copyBytes(in,
                    new FileOutputStream("C:\\Users\\SimonsZhao\\Desktop\\___234967-13112015163685.jpg"), 1024, true);
        }

        private static void put(FileSystem fileSystem) throws IOException,
                FileNotFoundException {
            // put: create the target file in HDFS and upload the image
            // C:\\Users\\SimonsZhao\\Desktop\\234967-13112015163685.jpg
            FSDataOutputStream out = fileSystem.create(new Path("/234967-13112015163685.jpg"));
            // Copy the local input stream into the HDFS output stream
            IOUtils.copyBytes(new FileInputStream("C:\\Users\\SimonsZhao\\Desktop\\234967-13112015163685.jpg"),
                    out, 1024, true);
        }

        private static void ls(FileSystem fileSystem) throws Exception {
            // Equivalent of the ls command
            FileStatus[] listStatus = fileSystem.listStatus(new Path("/"));
            for (FileStatus fileStatus : listStatus) {
                System.out.println(fileStatus);
            }
        }
    }

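The downloaded file appears on the desktop as follows:

Extracting code in Eclipse: select the code and press Alt+Shift+M to extract it into a method.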

4. Deleting the image from the HDFS root directory

    package Hdfs;

    import java.io.FileInputStream;
    import java.io.FileNotFoundException;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    public class FileSystemTest {
        public static void main(String[] args) throws Exception {
            // Note: the Hadoop user must be one that has permission on the cluster file system.
            System.setProperty("HADOOP_USER_NAME", "root");
            System.setProperty("HADOOP_USER_PASSWORD", "neusoft");
            FileSystem fileSystem = FileSystem.newInstance(new URI("hdfs://neusoft-master:9000"), new Configuration());
            // ls(fileSystem);    // list the root directory (extracted method)
            // put(fileSystem);   // upload a file
            // show(fileSystem);  // download the image uploaded earlier
            // Delete the image in the root directory (false = not recursive)
            fileSystem.delete(new Path("/234967-13112015163685.jpg"), false);
        }

        private static void show(FileSystem fileSystem) throws IOException,
                FileNotFoundException {
            // Open the file in HDFS and copy it to a local file on the desktop
            FSDataInputStream in = fileSystem.open(new Path("/234967-13112015163685.jpg"));
            IOUtils.copyBytes(in,
                    new FileOutputStream("C:\\Users\\SimonsZhao\\Desktop\\___234967-13112015163685.jpg"), 1024, true);
        }

        private static void put(FileSystem fileSystem) throws IOException,
                FileNotFoundException {
            // put: create the target file in HDFS and upload the image
            // C:\\Users\\SimonsZhao\\Desktop\\234967-13112015163685.jpg
            FSDataOutputStream out = fileSystem.create(new Path("/234967-13112015163685.jpg"));
            // Copy the local input stream into the HDFS output stream
            IOUtils.copyBytes(new FileInputStream("C:\\Users\\SimonsZhao\\Desktop\\234967-13112015163685.jpg"),
                    out, 1024, true);
        }

        private static void ls(FileSystem fileSystem) throws Exception {
            // Equivalent of the ls command
            FileStatus[] listStatus = fileSystem.listStatus(new Path("/"));
            for (FileStatus fileStatus : listStatus) {
                System.out.println(fileStatus);
            }
        }
    }
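The second argument of `fileSystem.delete(path, false)` is the recursive flag. For a single file it makes no difference, but a non-empty directory can only be removed with `true`. The sketch below is a local-file-system analogy built on `java.nio.file`, not the HDFS implementation; the class name `DeleteSketch` and its `delete` method are hypothetical, and returning `false` for a missing path is an assumption about the semantics being modelled.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class DeleteSketch {
    // Deletes path. With recursive=false a non-empty directory is refused
    // (Files.delete throws DirectoryNotEmptyException); with recursive=true
    // the whole tree is removed, children before parents.
    static boolean delete(Path path, boolean recursive) throws IOException {
        if (!Files.exists(path)) {
            return false; // nothing to delete
        }
        if (recursive && Files.isDirectory(path)) {
            List<Path> entries;
            try (Stream<Path> walk = Files.walk(path)) {
                // Reverse order puts the deepest entries first
                entries = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
            }
            for (Path entry : entries) {
                Files.delete(entry);
            }
            return true;
        }
        Files.delete(path);
        return true;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("delete-sketch");
        Files.createFile(dir.resolve("image.jpg"));
        System.out.println(delete(dir.resolve("image.jpg"), false)); // a single file: no recursion needed
        System.out.println(delete(dir, false));                       // directory is now empty
    }
}
```

Passing `false` when only one file is meant to go, as the article does, is the safer habit: a mistyped path that happens to be a directory will fail instead of silently removing everything beneath it.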
