Hudi Study Notes, Part 3: Working with Hudi from IDEA

Posted NC_NE


I. Development Notes

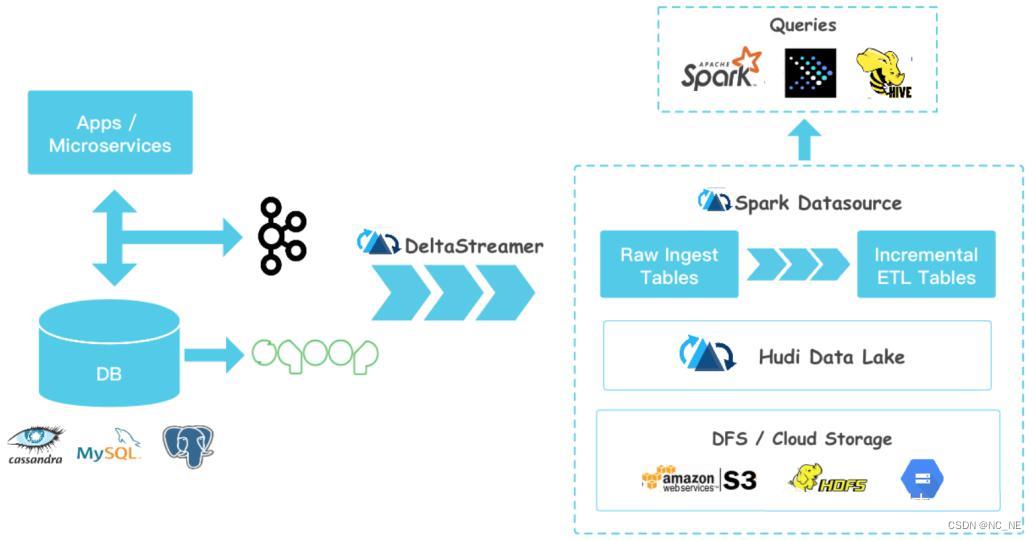

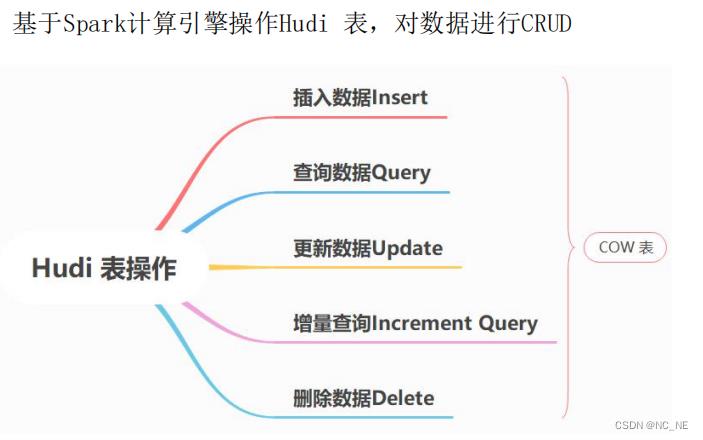

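This post follows on from the previous one, which used spark-shell to work with Hudi. Real development cannot be done only in a shell. Hudi exposes the concept of a Hudi table and supports CRUD operations on it, so Spark can read and write through the Hudi API.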

II. Environment Setup

1. Create a Maven project (any name will do)

2. Add the Hudi and Spark dependencies

<properties>
    <scala.version>2.12.10</scala.version>
    <scala.binary.version>2.12</scala.binary.version>
    <spark.version>3.0.0</spark.version>
    <hadoop.version>2.7.3</hadoop.version>
    <hudi.version>0.9.0</hudi.version>
</properties>

<dependencies>
    <!-- Scala language -->
    <dependency>
        <groupId>org.scala-lang</groupId>
        <artifactId>scala-library</artifactId>
        <version>${scala.version}</version>
    </dependency>
    <!-- Spark Core -->
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <!-- Spark SQL -->
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <!-- Hadoop Client -->
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.codehaus.janino</groupId>
        <artifactId>janino</artifactId>
        <version>3.0.8</version>
    </dependency>
    <!-- hudi-spark3 -->
    <dependency>
        <groupId>org.apache.hudi</groupId>
        <artifactId>hudi-spark3-bundle_2.12</artifactId>
        <version>${hudi.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-avro_2.12</artifactId>
        <version>${spark.version}</version>
    </dependency>
</dependencies>

<build>
    <outputDirectory>target/classes</outputDirectory>
    <testOutputDirectory>target/test-classes</testOutputDirectory>
    <resources>
        <resource>
            <directory>${project.basedir}/src/main/resources</directory>
        </resource>
    </resources>
    <!-- Maven compiler plugins -->
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.0</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
                <encoding>UTF-8</encoding>
            </configuration>
        </plugin>
        <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.0</version>
            <executions>
                <execution>
                    <goals>
                        <goal>compile</goal>
                        <goal>testCompile</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>

3. Copy Hadoop's core-site.xml and hdfs-site.xml into the resources directory
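
Since the two Hadoop files are only picked up if they end up on the classpath, a quick check before running the examples can save some debugging. The following is a minimal sketch in plain Scala; the object name `HadoopConfigCheck` and the method `missingResources` are hypothetical helpers, not part of Hudi or Hadoop.

```scala
object HadoopConfigCheck {

  // Returns the names of the given resources that are NOT visible on the classpath.
  def missingResources(names: Seq[String]): Seq[String] = {
    val loader = getClass.getClassLoader
    names.filter(name => loader.getResource(name) == null)
  }

  def main(args: Array[String]): Unit = {
    val missing = missingResources(Seq("core-site.xml", "hdfs-site.xml"))
    if (missing.isEmpty) println("Hadoop config files found on the classpath")
    else println(s"Missing from classpath: ${missing.mkString(", ")}")
  }
}
```

If either file is reported missing, check that it sits under src/main/resources and that the project has been rebuilt.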

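III. Implementation

1. Test cases

1) Overview

Using the Spark DataSource API, generate simulated Trip ride transaction data, save it to a Hudi table (COW type: Copy on Write), then load the data back from the Hudi table for analysis and queries.

2) Cases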

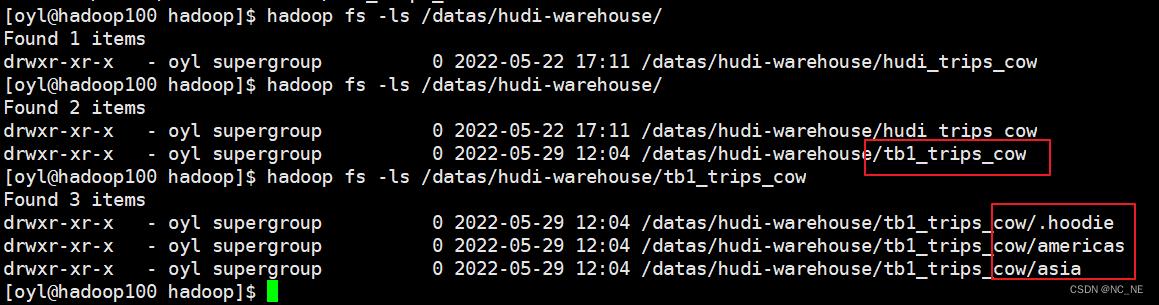

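2. Case 1: Insert data into a Hudi table

Use the official QuickstartUtils to generate 100 simulated Trip ride records, convert them to a DataFrame, and save them to the Hudi table. The code is essentially the same as the spark-shell commands.

1) Code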

package com.ouyangl.hudi.spark.start

import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

/**
 * @author oyl
 * @create 2022-05-29 14:15
 * @Description Official example: generate simulated data and insert it into a Hudi table (COW type)
 */
object insertDatasToHudi {

  def main(args: Array[String]): Unit = {
    // Create the Spark SQL runtime environment
    val conf = new SparkConf().setAppName("insertDatasToHudi").setMaster("local[2]")
    val spark = SparkSession.builder().config(conf)
      // Use Kryo serialization
      .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .getOrCreate()

    // Table name and storage path
    val tableName: String = "tb1_trips_cow"
    val tablePath: String = "/datas/hudi-warehouse/tb1_trips_cow"

    // Implicits for toDS(), and asScala for Java-to-Scala collection conversion
    import spark.implicits._
    import scala.collection.JavaConverters._

    // Step 1: generate simulated ride data
    import org.apache.hudi.QuickstartUtils._
    val generator: DataGenerator = new DataGenerator()
    val insertDatas = convertToStringList(generator.generateInserts(100))
    val insertDF: DataFrame = spark.read.json(
      spark.sparkContext.parallelize(insertDatas.asScala, 2).toDS()
    )
    // insertDF.printSchema()
    // insertDF.show(2)

    // Step 2: write the data to the Hudi table
    import org.apache.hudi.DataSourceWriteOptions._
    import org.apache.hudi.config.HoodieWriteConfig._
    insertDF.write
      .format("hudi")
      .mode(SaveMode.Overwrite)
      .option("hoodie.insert.shuffle.parallelism", "2")
      .option("hoodie.upsert.shuffle.parallelism", "2")
      // Hudi table properties
      .option(PRECOMBINE_FIELD.key(), "ts")
      .option(RECORDKEY_FIELD.key(), "uuid")
      .option(PARTITIONPATH_FIELD.key(), "partitionpath")
      .option(TBL_NAME.key(), tableName)
      .save(tablePath)

    // Shut down
    spark.stop()
  }
}

2) Result

The data has been written to the tb1_trips_cow table.

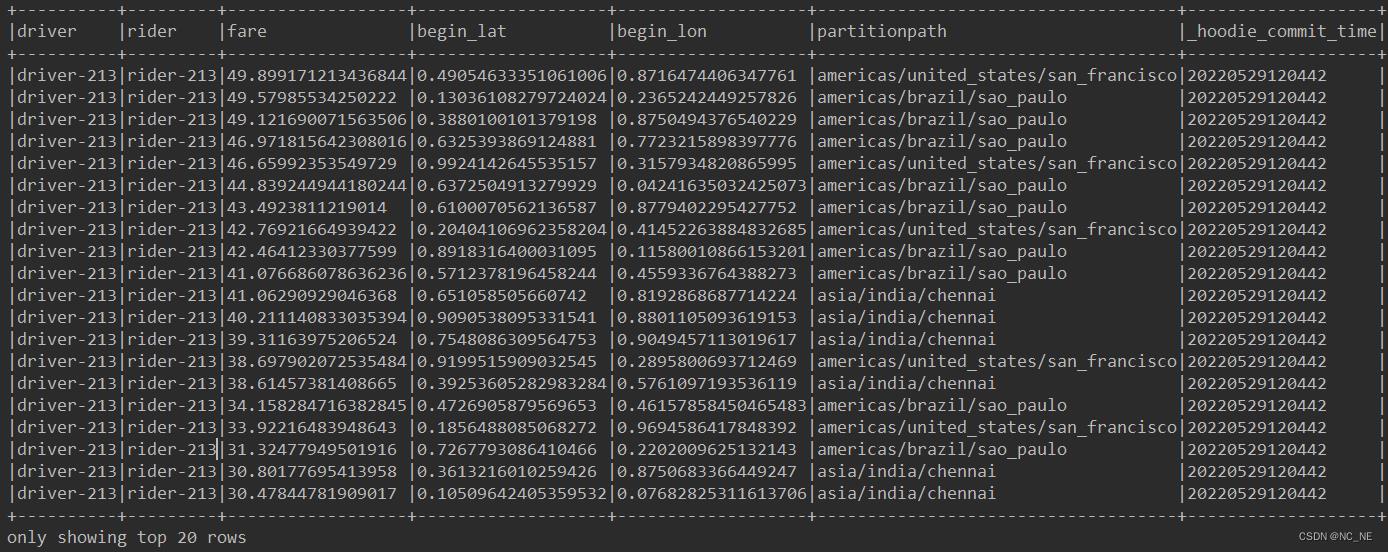
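
The write options used in this case can also be collected in a plain Map and passed in one call. The sketch below assumes the string keys that the `PRECOMBINE_FIELD`, `RECORDKEY_FIELD`, `PARTITIONPATH_FIELD` and `TBL_NAME` constants resolve to in Hudi 0.9.0; the object `HudiWriteOptions` and the method `cowWriteOptions` are hypothetical names introduced for illustration.

```scala
object HudiWriteOptions {

  // Builds the write options with plain string keys (assumed to match Hudi 0.9.0).
  def cowWriteOptions(tableName: String): Map[String, String] = Map(
    "hoodie.insert.shuffle.parallelism" -> "2",
    "hoodie.upsert.shuffle.parallelism" -> "2",
    // Field used to pick a winner among records with the same key
    "hoodie.datasource.write.precombine.field" -> "ts",
    // Field that uniquely identifies a record
    "hoodie.datasource.write.recordkey.field" -> "uuid",
    // Field that determines the partition directory
    "hoodie.datasource.write.partitionpath.field" -> "partitionpath",
    "hoodie.table.name" -> tableName
  )
}
```

With Spark on the classpath, the map could be used as `insertDF.write.format("hudi").options(HudiWriteOptions.cowWriteOptions(tableName)).mode(SaveMode.Overwrite).save(tablePath)`, which keeps the insert, update and delete jobs on the same settings.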

2. Case 2: Query data

1) Snapshot query

package com.ouyangl.hudi.spark.start

import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, SparkSession}

/**
 * @author oyl
 * @create 2022-05-29 14:19
 * @Description Snapshot query using the DataFrame DSL
 */
object queryDataFromHudi {

  def main(args: Array[String]): Unit = {
    // Create the Spark SQL runtime environment
    val conf = new SparkConf().setAppName("queryDataFromHudi").setMaster("local[2]")
    val spark = SparkSession.builder().config(conf)
      // Use Kryo serialization
      .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .getOrCreate()

    // Table name and storage path
    val tableName: String = "tb1_trips_cow"
    val tablePath: String = "/datas/hudi-warehouse/tb1_trips_cow"

    import spark.implicits._

    val df: DataFrame = spark.read.format("hudi").load(tablePath)

    // Keep trips with a fare between 20 and 50, highest fare first
    val value = df.filter($"fare" >= 20 && $"fare" <= 50)
      .select($"driver", $"rider", $"fare", $"begin_lat", $"begin_lon", $"partitionpath", $"_hoodie_commit_time")
      .orderBy($"fare".desc, $"_hoodie_commit_time".desc)

    value.show(20, false)

    spark.stop()
  }
}

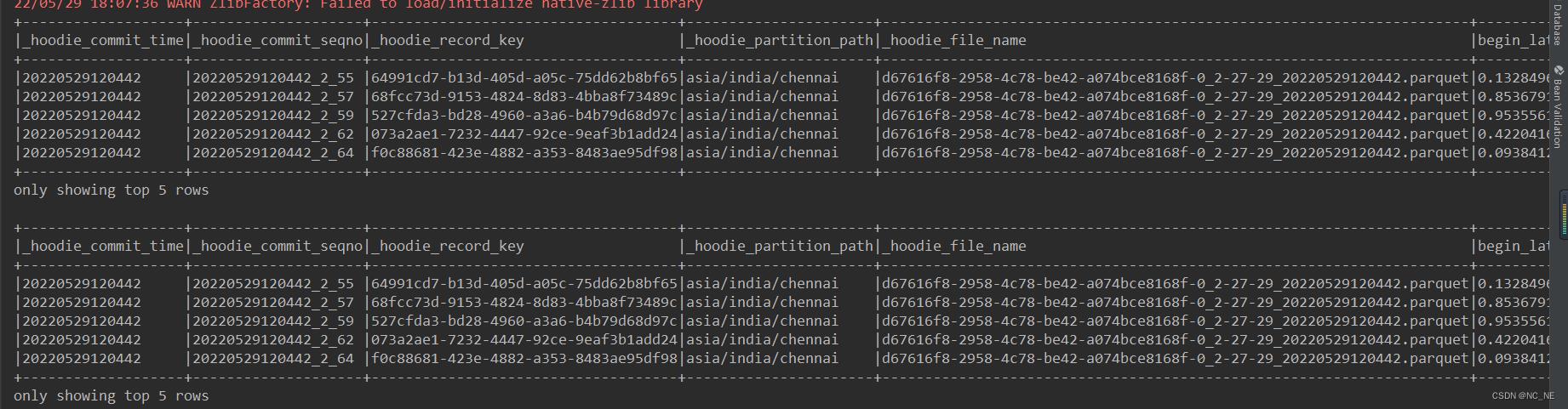

2) Point-in-time query

Queries can be filtered by time by setting the "as.of.instant" option.

package com.ouyangl.hudi.spark.start

import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, SparkSession}

/**
 * @author oyl
 * @create 2022-05-29 17:59
 * @Description Query data as of a point in time; the point in time is a commit time
 */
object queryDataByTime {

  def main(args: Array[String]): Unit = {
    // Create the Spark SQL runtime environment
    val conf = new SparkConf().setAppName("queryDataByTime").setMaster("local[2]")
    val spark = SparkSession.builder().config(conf)
      // Use Kryo serialization
      .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .getOrCreate()

    // Table name and storage path
    val tableName: String = "tb1_trips_cow"
    val tablePath: String = "/datas/hudi-warehouse/tb1_trips_cow"

    import spark.implicits._

    // Option 1: a string in the format yyyyMMddHHmmss
    val df1: DataFrame = spark.read.format("hudi")
      .option("as.of.instant", "20220529120442")
      .load(tablePath)
      .sort($"_hoodie_commit_time".desc)

    df1.show(20, false)

    // Option 2: a string in the format yyyy-MM-dd HH:mm:ss
    val df2: DataFrame = spark.read.format("hudi")
      .option("as.of.instant", "2022-05-29 12:04:42")
      .load(tablePath)
      .sort($"_hoodie_commit_time".desc)

    df2.show(20, false)

    spark.stop()
  }
}
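
The two "as.of.instant" formats used above describe the same moment in two notations. A small helper for converting between them, using only java.time, might look like the sketch below; `InstantFormats`, `toReadable` and `toCompact` are hypothetical names and not part of the Hudi API.

```scala
import java.time.LocalDateTime
import java.time.format.DateTimeFormatter

object InstantFormats {
  // Compact form, as it appears in _hoodie_commit_time
  private val compact = DateTimeFormatter.ofPattern("yyyyMMddHHmmss")
  // Human-readable form
  private val readable = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")

  // yyyyMMddHHmmss -> yyyy-MM-dd HH:mm:ss
  def toReadable(instant: String): String =
    LocalDateTime.parse(instant, compact).format(readable)

  // yyyy-MM-dd HH:mm:ss -> yyyyMMddHHmmss
  def toCompact(timestamp: String): String =
    LocalDateTime.parse(timestamp, readable).format(compact)
}
```

This is handy when a commit time copied from the `_hoodie_commit_time` column needs to be shown to a person, or the other way round.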

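3. Case 3: Update data

The official DataGenerator utility can only generate update records for keys that it previously generated as inserts, so the updates must come from the same DataGenerator object that produced the inserts. The code below therefore generates the inserts and the updates in the same program.

1) Code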

package com.ouyangl.hudi.spark.start

import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

/**
 * @author oyl
 * @create 2022-05-29 18:19
 * @Description Update: generate update records, then save them to the Hudi table
 */
object updateDataToHudi {

  def main(args: Array[String]): Unit = {
    // Create the Spark SQL runtime environment
    val conf = new SparkConf().setAppName("updateDataToHudi").setMaster("local[2]")
    val spark = SparkSession.builder().config(conf)
      // Use Kryo serialization
      .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .getOrCreate()

    // Table name and storage path
    val tableName: String = "tb1_trips_cow"
    val tablePath: String = "/datas/hudi-warehouse/tb1_trips_cow"

    import spark.implicits._
    import scala.collection.JavaConverters._
    import org.apache.hudi.QuickstartUtils._
    import org.apache.hudi.DataSourceWriteOptions._
    import org.apache.hudi.config.HoodieWriteConfig._

    val dataGen: DataGenerator = new DataGenerator()

    // Step 1: generate simulated ride data as inserts
    val inserts = convertToStringList(dataGen.generateInserts(150))
    val insertDF: DataFrame = spark.read.json(
      spark.sparkContext.parallelize(inserts.asScala, 2).toDS()
    )

    // The insert write is left commented out, as in the original post
    // insertDF.write
    //   .mode(SaveMode.Overwrite)
    //   .format("hudi")
    //   .option("hoodie.insert.shuffle.parallelism", "2")
    //   .option("hoodie.upsert.shuffle.parallelism", "2")
    //   // Hudi table properties
    //   .option(PRECOMBINE_FIELD.key(), "ts")
    //   .option(RECORDKEY_FIELD.key(), "uuid")
    //   .option(PARTITIONPATH_FIELD.key(), "partitionpath")
    //   .option(TBL_NAME.key(), tableName)
    //   .save(tablePath)

    // Step 2: generate updates from the same generator and append them
    val updates = convertToStringList(dataGen.generateUpdates(150))
    val updateDF: DataFrame = spark.read.json(
      spark.sparkContext.parallelize(updates.asScala, 2).toDS()
    )

    updateDF.write
      .mode(SaveMode.Append)
      .format("hudi")
      .option("hoodie.insert.shuffle.parallelism", "2")
      .option("hoodie.upsert.shuffle.parallelism", "2")
      // Hudi table properties
      .option(PRECOMBINE_FIELD.key(), "ts")
      .option(RECORDKEY_FIELD.key(), "uuid")
      .option(PARTITIONPATH_FIELD.key(), "partitionpath")
      .option(TBL_NAME.key(), tableName)
      .save(tablePath)

    spark.stop()
  }
}

2) Result

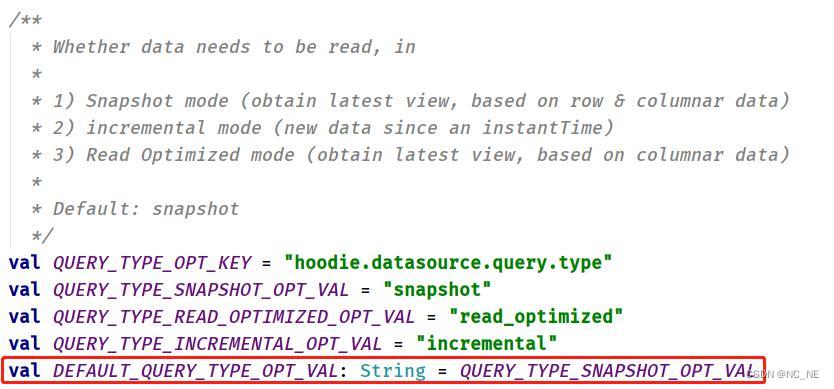
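
To make the roles of the record key ("uuid") and the precombine field ("ts") in the update above more concrete, here is a deliberately simplified model in plain Scala. It is not Hudi's implementation: it ignores partition paths, payload classes and file layout, and `Trip`, `precombine` and `upsert` are hypothetical names. It only illustrates the idea that duplicates within an incoming batch are reduced to the record with the largest precombine value, and that an incoming record replaces the stored record with the same key.

```scala
object UpsertModel {
  // A trimmed-down trip record: key, ordering field, and one payload column
  final case class Trip(uuid: String, ts: Long, fare: Double)

  // Keep one record per key in the incoming batch: the one with the largest ts.
  def precombine(batch: Seq[Trip]): Map[String, Trip] =
    batch.groupBy(_.uuid).map { case (key, rows) => key -> rows.maxBy(_.ts) }

  // Records whose key already exists are replaced; records with new keys are added.
  def upsert(table: Map[String, Trip], batch: Seq[Trip]): Map[String, Trip] =
    table ++ precombine(batch)
}
```

In this model, appending a batch whose keys are all new behaves like a plain insert, which is why the same write path serves both inserts and updates.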

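4. Case 4: Incremental query

1) Query types

A COW table supports two kinds of query: snapshot queries and incremental queries. Snapshot is the default; the hoodie.datasource.query.type option selects the query type.

2) An incremental query needs a begin timestamp: records with instant_time > beginTime are loaded. An end time can also be set, in which case records in the range beginTime < instant_time <= endTime are returned.

3) Code

First load all data from the Hudi table and collect the values of the _hoodie_commit_time field, then pick one of them as the beginTime of the incremental query. Then set the query options and read from the Hudi table incrementally.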

package com.ouyangl.hudi.spark.start

import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

/**
 * @author oyl
 * @create 2022-05-29 21:17
 * @Description Incremental query
 */
object incrementalQueryData {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local[*]").setAppName("incrementalQueryData")
    val spark = SparkSession.builder().config(conf)
      // Use Kryo serialization
      .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .getOrCreate()

    // Table name and storage path
    val tableName: String = "tb1_trips_cow"
    val tablePath: String = "/datas/hudi-warehouse/tb1_trips_cow"

    import spark.implicits._

    // Step 1: load the Hudi table and collect commit times to use as the incremental threshold
    import org.apache.hudi.DataSourceReadOptions._
    spark.read
      .format("hudi")
      .load(tablePath)
      .createOrReplaceTempView("view_temp_hudi_trips")

    val commits: Array[String] = spark
      .sql(
        """
          |select
          |  distinct(_hoodie_commit_time) as commitTime
          |from
          |  view_temp_hudi_trips
          |order by
          |  commitTime DESC
          |""".stripMargin
      )
      .map(row => row.getString(0))
      .take(50)

    // The list is sorted newest first, so the last element is the oldest commit collected
    val beginTime = commits(commits.length - 1) // commit time we are interested in
    println(s"beginTime = $beginTime")

    // Step 2: set the commit time threshold and run the incremental query
    val tripsIncrementalDF = spark.read
      .format("hudi")
      // Query type: incremental
      .option(QUERY_TYPE.key(), QUERY_TYPE_INCREMENTAL_OPT_VAL)
      // Begin time of the incremental read
      .option(BEGIN_INSTANTTIME.key(), beginTime)
      .load(tablePath)

    // Step 3: register the incremental result as a temp view and select fares above 20
    tripsIncrementalDF.createOrReplaceTempView("hudi_trips_incremental")
    spark
      .sql(
        """
          |select
          |  `_hoodie_commit_time`, fare, begin_lon, begin_lat, ts
          |from
          |  hudi_trips_incremental
          |where
          |  fare > 20.0
          |""".stripMargin
      )
      .show(10, truncate = false)

    spark.stop()
  }
}

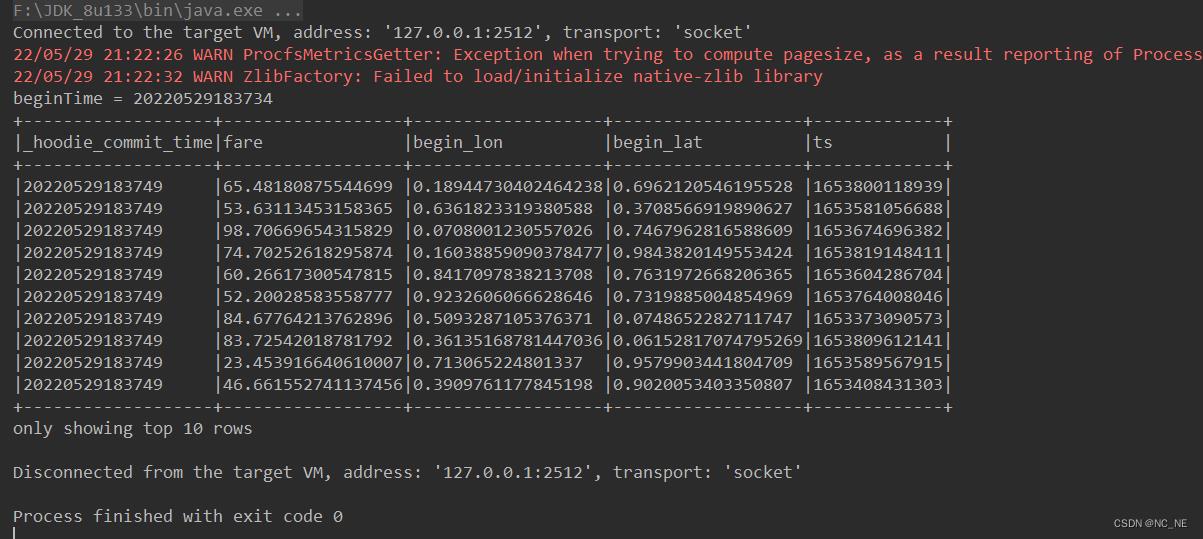

4) Incremental query result

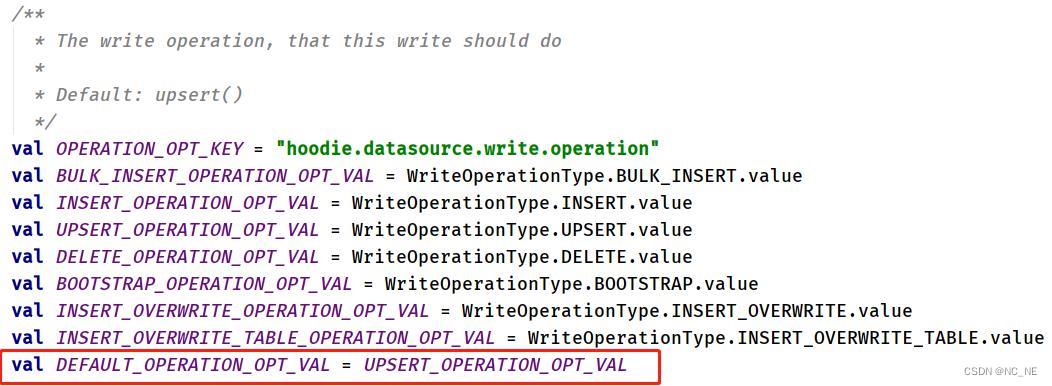
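
The begin/end filtering rule described in this section can be sketched without Spark. The helper below is a plain-Scala illustration, assuming that the begin bound is exclusive and the end bound is inclusive (as Hudi's read option documentation describes it) and that commit times are in the yyyyMMddHHmmss form, which orders correctly as strings. `IncrementalFilter` and `select` are hypothetical names.

```scala
object IncrementalFilter {

  // Keeps the commit times an incremental query would read:
  // strictly after beginTime and, if endTime is given, not after endTime.
  def select(commitTimes: Seq[String],
             beginTime: String,
             endTime: Option[String] = None): Seq[String] =
    commitTimes.filter { t =>
      t > beginTime && endTime.forall(end => t <= end)
    }
}
```

Note that picking the oldest commit as beginTime, as the code in this section does, excludes the records of that oldest commit itself from the result.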

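5. Case 5: Delete data

Use the DataGenerator to build the records to delete from existing data and write them to the Hudi table, with the option hoodie.datasource.write.operation set to delete.

1) Code

Take 50 records from the Hudi table, build delete records from them, and write those to the Hudi table.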

package com.ouyangl.hudi.spark.start

import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

/**
 * @author oyl
 * @create 2022-05-29 21:25
 * @Description Delete data from a Hudi table
 */
object deleteDataFromHudi {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local[*]").setAppName("deleteDataFromHudi")
    val spark: SparkSession = SparkSession.builder().config(conf)
      // Use Kryo serialization
      .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .getOrCreate()

    // Table name and storage path
    val tableName: String = "tb1_trips_cow"
    val tablePath: String = "/datas/hudi-warehouse/tb1_trips_cow"

    import spark.implicits._

    // Step 1: load the Hudi table and count the records
    val tripsDF: DataFrame = spark.read.format("hudi").load(tablePath)
    println(s"Raw Count = ${tripsDF.count()}")

    // Step 2: take a few records from the table and turn them into delete records
    val dataframe = tripsDF.limit(50).select($"uuid", $"partitionpath")
    import org.apache.hudi.QuickstartUtils._

    val dataGenerator = new DataGenerator()
    val deletes = dataGenerator.generateDeletes(dataframe.collectAsList())

    import scala.collection.JavaConverters._
    val deleteDF = spark.read.json(spark.sparkContext.parallelize(deletes.asScala, 2))

    // Step 3: write to the Hudi table with the operation type set to delete
    import org.apache.hudi.DataSourceWriteOptions._
    import org.apache.hudi.config.HoodieWriteConfig._
    deleteDF.write
      .mode(SaveMode.Append)
      .format("hudi")
      .option("hoodie.insert.shuffle.parallelism", "2")
      .option("hoodie.upsert.shuffle.parallelism", "2")
      // Operation type delete; the default is upsert
      .option(OPERATION.key(), "delete")
      .option(PRECOMBINE_FIELD.key(), "ts")
      .option(RECORDKEY_FIELD.key(), "uuid")
      .option(PARTITIONPATH_FIELD.key(), "partitionpath")
      .option(TBL_NAME.key(), tableName)
      .save(tablePath)

    // Step 4: reload the table and check that the count dropped by 50
    val hudiDF: DataFrame = spark.read.format("hudi").load(tablePath)
    println(s"Delete After Count = ${hudiDF.count()}")

    spark.stop()
  }
}

2) Result: the 50 records have been deleted.
