Prometheus cross-cluster scraping

Posted saynaihe


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and covers cross-cluster scraping with Prometheus. We hope you find it useful.

Background

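Well, I didn't want to run lots of Prometheus instances; I wanted just one. The prerequisite, of course, is that cluster A can reach cluster B over the network. With that in place, a single Prometheus can scrape across clusters.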

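About cluster A

For cluster A and its Prometheus installation, see: Installing Prometheus-Operator on Kubernetes 1.20.5

Cluster B

For the cluster B steps, see Yangming's (阳明) article "Monitoring an external Kubernetes cluster with Prometheus".

Create the RBAC objects: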

```shell
cat rbac.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - pods
  - nodes/proxy
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  - "networking.k8s.io"   # since 1.22 Ingress is only served from networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  - nodes/metrics
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: monitoring
```

```shell
kubectl apply -f rbac.yaml
[root@sh-master-01 prometheus]# kubectl get sa -n monitoring
[root@sh-master-01 prometheus]# kubectl get secret -n monitoring
```

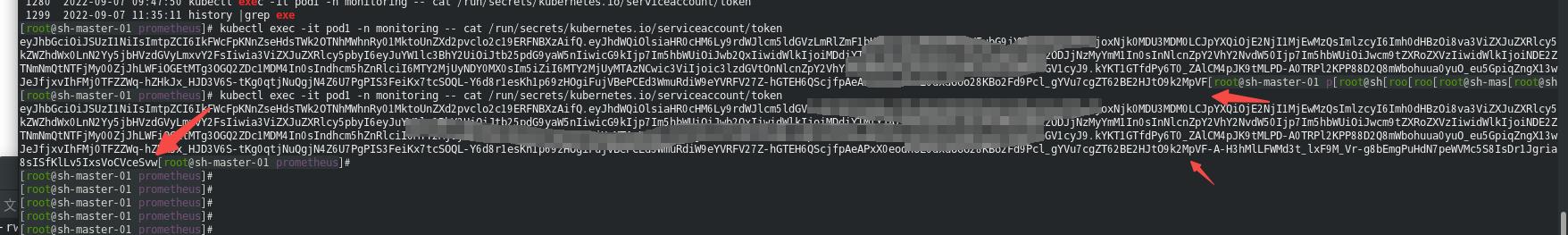
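Before moving on to tokens, it may be worth confirming in cluster B that the ClusterRole really is bound to the ServiceAccount. The sketch below is an addition, not part of the original walkthrough: it asks the apiserver through `kubectl auth can-i` while impersonating `system:serviceaccount:monitoring:prometheus`, which assumes your own kubeconfig user is allowed to impersonate (cluster-admin is). The function name `check_prometheus_rbac` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: run against cluster B after `kubectl apply -f rbac.yaml`.
# It impersonates the prometheus ServiceAccount created above.
SA="system:serviceaccount:monitoring:prometheus"

check_prometheus_rbac() {
  failed=0
  # Each entry is "VERB RESOURCE [extra flags]"; word splitting of $check is intended.
  for check in "list nodes" "list services" "list endpoints" "list pods" \
               "get configmaps" "get nodes --subresource=proxy" "get /metrics"; do
    # --quiet: print nothing and report the answer through the exit code only
    # shellcheck disable=SC2086
    if ! kubectl auth can-i $check --as="$SA" --quiet; then
      echo "missing: $check"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage: `check_prometheus_rbac && echo "RBAC looks fine"`. Any line starting with `missing:` points at a rule in `rbac.yaml` that did not take effect.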

Pay special attention here to the ServiceAccount, its Secret, and the token.

Why is there no Secret? See: https://itnext.io/big-change-in-k8s-1-24-about-serviceaccounts-and-their-secrets-4b909a4af4e0. Right, this changed in 1.24, and my cluster runs 1.25, so:

```shell
[root@sh-master-01 manifests]# kubectl create token prometheus -n monitoring --duration=999999h
```

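Lots of guides online use yq for this:

```shell
# 'yq r' is the old (v3) yq syntax; base64 -D is the macOS flag, GNU/Linux uses -d
kubectl get secret prometheus -n monitoring -o yaml | yq r - data.token | base64 -d
```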
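For what it's worth, kubectl can pull out the field itself with `-o jsonpath`, so yq isn't strictly needed. This only helps where a token Secret exists: before 1.24 one was generated automatically (under a generated name, not necessarily `prometheus`), and on 1.24+ the Kubernetes documentation describes creating one by hand with `type: kubernetes.io/service-account-token` and the annotation `kubernetes.io/service-account.name: prometheus`. The function name and its arguments are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the decoded token stored in a ServiceAccount token Secret.
# Usage: get_sa_secret_token SECRET_NAME NAMESPACE
get_sa_secret_token() {
  # .data.token is base64-encoded; GNU base64 decodes with -d (some macOS versions only accept -D)
  kubectl get secret "$1" -n "$2" -o jsonpath='{.data.token}' | base64 -d
}
```

Usage: `get_sa_secret_token <secret-name> monitoring`, where `<secret-name>` is whatever `kubectl get secret -n monitoring` lists for the prometheus ServiceAccount.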

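But even with yq installed I couldn't get it to work. What now? Fall back to the clumsy way: start a Pod that runs under the prometheus ServiceAccount and read the token mounted into it.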

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  terminationGracePeriodSeconds: 0
  serviceAccount: prometheus
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

```shell
kubectl apply -f pod.yaml -n monitoring
kubectl exec -it pod1 -n monitoring -- cat /var/run/secrets/kubernetes.io/serviceaccount/token
```

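Note that the token comes out different each time I fetch it; I'll look into that later. I'm also not sure whether `kubectl create token prometheus -n monitoring --duration=999999h` alone would be enough (again, see https://itnext.io/big-change-in-k8s-1-24-about-serviceaccounts-and-their-secrets-4b909a4af4e0).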
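On why the token differs: every `kubectl create token` call mints a new signed JWT with its own `iat`/`exp`, and the token read from pod1 is a separate, projected token that the kubelet renews periodically and that is bound to pod1, so deleting pod1 should invalidate it. Also, kubectl documents `--duration` as a *requested* lifetime that the server may shorten or lengthen (the apiserver flag `--service-account-max-token-expiration` caps it). A minimal sketch for checking what you actually got, decoding the JWT payload locally with sed and base64 instead of jq; the function names are hypothetical, and the sed field extraction assumes the compact JSON the apiserver emits.

```shell
#!/bin/sh
# Hypothetical helpers for inspecting a ServiceAccount JWT locally (nothing is sent anywhere).

# jwt_payload TOKEN: print the decoded JSON payload (second dot-separated segment).
jwt_payload() {
  # base64url -> base64: '-' becomes '+', '_' becomes '/'
  seg=$(printf '%s' "$1" | cut -d. -f2 | sed -e 's/-/+/g' -e 's#_#/#g')
  # restore the '=' padding that base64url strips
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# token_lifetime_hours TOKEN: (exp - iat) in whole hours
token_lifetime_hours() {
  payload=$(jwt_payload "$1")
  exp=$(printf '%s' "$payload" | sed -n 's/.*"exp":\([0-9][0-9]*\).*/\1/p')
  iat=$(printf '%s' "$payload" | sed -n 's/.*"iat":\([0-9][0-9]*\).*/\1/p')
  echo $(( (exp - iat) / 3600 ))
}

# token_bound_pod TOKEN: name of the Pod the token is bound to (empty if not pod-bound)
token_bound_pod() {
  jwt_payload "$1" | sed -n 's/.*"pod":{"name":"\([^"]*\)".*/\1/p'
}
```

Usage: `token_lifetime_hours "$(kubectl create token prometheus -n monitoring --duration=999999h)"` shows whether the requested lifetime was honored, and running `token_bound_pod` on the token read from pod1 should print `pod1`.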

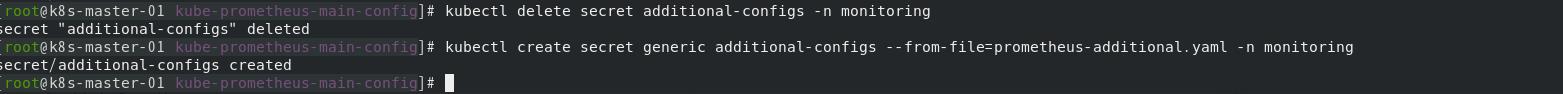

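Regenerate additional-configs on the Prometheus side (cluster A)

In cluster A's Prometheus config directory, edit prometheus-additional.yaml: copy the token and paste it in place of XXXXXXXXXXXXXXXXXXX in every bearer_token field.

Note: cluster B's apiserver address here is 10.0.2.28:6443; change it to match your own environment!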
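The placeholder token appears twice in each of the four jobs, and the apiserver address just as often, so the substitution can be scripted with sed rather than done by hand. A sketch, assuming the file still contains the literal placeholder XXXXXXXXXXXXXXXXXXX and the example address 10.0.2.28:6443; the function names are hypothetical. A JWT only contains letters, digits, `-`, `_` and `.`, so it is safe inside a sed replacement delimited by `|`.

```shell
#!/bin/sh
# Hypothetical helpers; sed -i.bak keeps a backup copy of the file next to it.

# fill_bearer_token FILE TOKEN: replace every placeholder token in FILE
fill_bearer_token() {
  sed -i.bak "s|XXXXXXXXXXXXXXXXXXX|$2|g" "$1"
}

# set_apiserver FILE HOST:PORT: replace the example apiserver address in FILE
set_apiserver() {
  sed -i.bak "s|10\.0\.2\.28:6443|$2|g" "$1"
}
```

Usage: `fill_bearer_token prometheus-additional.yaml "$TOKEN"`, with `$TOKEN` holding the token obtained in cluster B, then `set_apiserver prometheus-additional.yaml <your-apiserver>:6443` if your address differs.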

```yaml
- job_name: 'kubernetes-apiservers-other-cluster'
  kubernetes_sd_configs:
  - role: endpoints
    api_server: https://10.0.2.28:6443
    tls_config:
      insecure_skip_verify: true
    bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  tls_config:
    insecure_skip_verify: true
  bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  scheme: https
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
  - target_label: __address__
    replacement: 10.0.2.28:6443
```

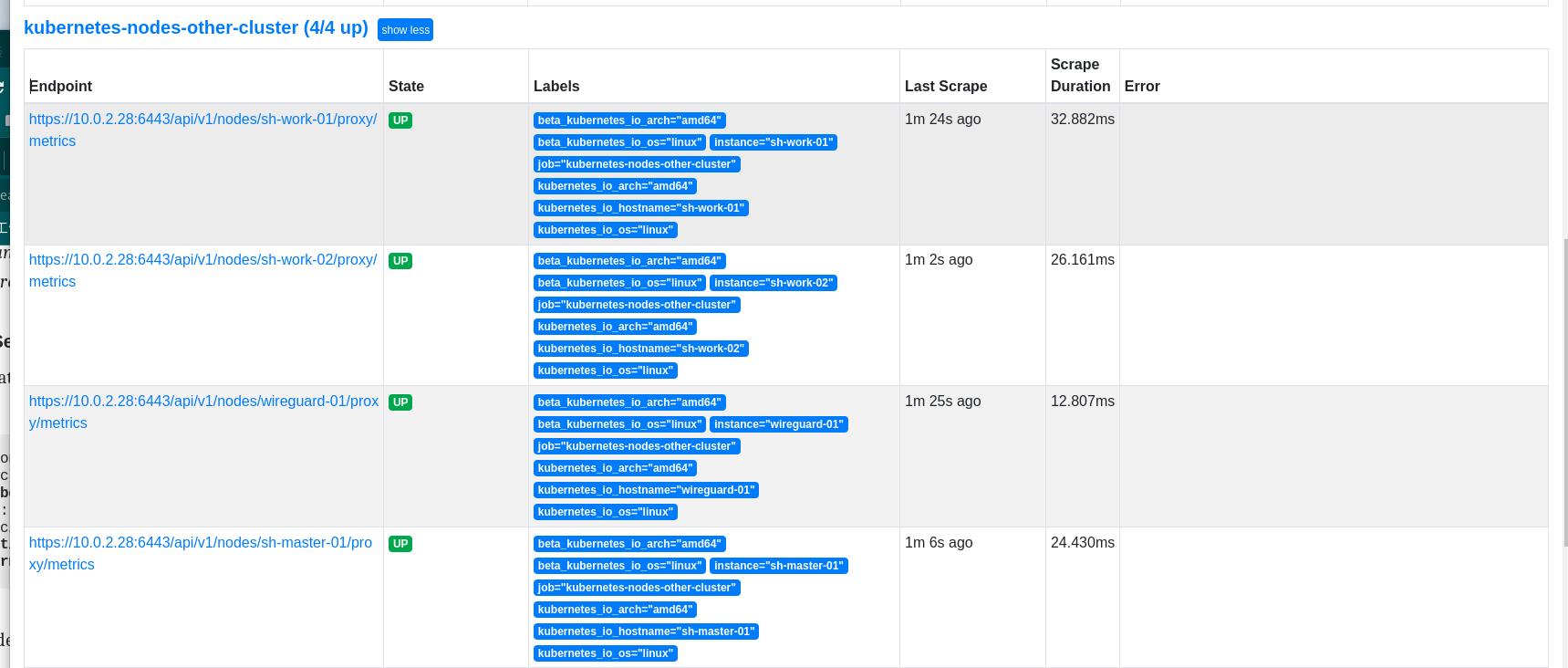
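How the keep rule in this job decides which endpoints survive: Prometheus joins the values of `source_labels` with `;` (the default separator) and keeps the target only if `regex` matches the whole joined string, because relabel regexes are fully anchored. A shell sketch of that decision, with `grep -x` standing in for the anchoring; the function name is hypothetical. Only the `https` port of the `default/kubernetes` endpoints passes, and the next rule then points `__address__` at cluster B's apiserver.

```shell
#!/bin/sh
# keep_apiserver_endpoint NAMESPACE SERVICE PORT_NAME
# Exit status 0 = target kept, 1 = dropped, mimicking:
#   source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
#   action: keep
#   regex: default;kubernetes;https
keep_apiserver_endpoint() {
  # join with ';' and require a full-line (i.e. fully anchored) extended-regex match
  printf '%s;%s;%s\n' "$1" "$2" "$3" | grep -Eqx 'default;kubernetes;https'
}
```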

```yaml
- job_name: 'kubernetes-nodes-other-cluster'
  kubernetes_sd_configs:
  - role: node
    api_server: https://10.0.2.28:6443
    tls_config:
      insecure_skip_verify: true
    bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  tls_config:
    insecure_skip_verify: true
  bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  scheme: https
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: 10.0.2.28:6443
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/$1/proxy/metrics
```

```yaml
- job_name: 'kubernetes-nodes-cadvisor-other-cluster'
  kubernetes_sd_configs:
  - role: node
    api_server: https://10.0.2.28:6443
    tls_config:
      insecure_skip_verify: true
    bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  tls_config:
    insecure_skip_verify: true
  bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  scheme: https
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: 10.0.2.28:6443
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/$1/proxy/metrics/cadvisor
```
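After relabeling, both node jobs send every request to cluster B's apiserver, which proxies it to the kubelet of the node named in the path. To check a target by hand, you can build the same URL and curl it with the token; `-k` mirrors `insecure_skip_verify: true`. A sketch; `node_proxy_url` and the `APISERVER` variable are hypothetical names.

```shell
#!/bin/sh
# Cluster B's apiserver (same value as api_server / replacement in the jobs above).
APISERVER=${APISERVER:-10.0.2.28:6443}

# node_proxy_url NODE [PATH]: the URL the two node jobs end up scraping.
#   PATH=metrics          -> kubernetes-nodes-other-cluster
#   PATH=metrics/cadvisor -> kubernetes-nodes-cadvisor-other-cluster
node_proxy_url() {
  printf 'https://%s/api/v1/nodes/%s/proxy/%s\n' "$APISERVER" "$1" "${2:-metrics}"
}
```

Usage: `curl -sk -H "Authorization: Bearer $TOKEN" "$(node_proxy_url <node-name> metrics/cadvisor)" | head`, taking `<node-name>` from `kubectl get nodes` in cluster B. If this fails, Prometheus will fail the same way.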

```yaml
- job_name: 'kubernetes-state-metrics-other-cluster'
  kubernetes_sd_configs:
  - role: endpoints
    api_server: https://10.0.2.28:6443
    tls_config:
      insecure_skip_verify: true
    bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  tls_config:
    insecure_skip_verify: true
  bearer_token: 'XXXXXXXXXXXXXXXXXXX'
  scheme: https
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_name]
    action: keep
    regex: '^(kube-state-metrics)$'
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__address__]
    action: replace
    target_label: instance
  - target_label: __address__
    replacement: 10.0.2.28:6443
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_pod_name, __meta_kubernetes_pod_container_port_number]
    regex: ([^;]+);([^;]+);([^;]+)
    target_label: __metrics_path__
    replacement: /api/v1/namespaces/$1/pods/http:$2:$3/proxy/metrics
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: service_name
```
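The `__metrics_path__` rule of the kube-state-metrics job is the least obvious one: it joins namespace, pod name and container port with `;`, captures them again with `([^;]+);([^;]+);([^;]+)`, and builds the apiserver's pod-proxy path, so the scrape goes through cluster B's apiserver to port `$3` of that pod over plain http. With the `endpoints` role there is one target per endpoint port, so if the kube-state-metrics Service exposes several ports, each gets its own path. A sed sketch of the rewrite; the function name is hypothetical.

```shell
#!/bin/sh
# ksm_metrics_path NAMESPACE POD PORT: mimic the __metrics_path__ relabel rule above.
ksm_metrics_path() {
  # join with ';' (Prometheus' default separator), then apply the same anchored regex
  printf '%s;%s;%s\n' "$1" "$2" "$3" |
    sed -E 's|^([^;]+);([^;]+);([^;]+)$|/api/v1/namespaces/\1/pods/http:\2:\3/proxy/metrics|'
}
```

If any of the three values is empty the regex does not match; Prometheus then leaves `__metrics_path__` untouched, while this sketch simply prints its joined input unchanged.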

```shell
kubectl delete secret additional-configs -n monitoring
kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring
```
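Deleting and recreating the Secret leaves a short window in which it does not exist. A common alternative is to render the Secret client-side and `kubectl apply` it, which creates or updates it in one step. A sketch, assuming the Secret name `additional-configs` and key `prometheus-additional.yaml` used above (they must match what the Prometheus resource's `additionalScrapeConfigs` references); the function name is hypothetical. The Operator watches that Secret, so the new jobs should show up after a short reload delay.

```shell
#!/bin/sh
# update_additional_configs [FILE]: create or update the additional-configs Secret in place.
update_additional_configs() {
  file=${1:-prometheus-additional.yaml}
  # --dry-run=client only renders the Secret manifest; `apply` then creates or patches it.
  kubectl create secret generic additional-configs \
      --from-file=prometheus-additional.yaml="$file" \
      -n monitoring --dry-run=client -o yaml |
    kubectl apply -f -
}
```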

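A quick look in Grafana: some of the graphs still have problems, which I'm ignoring, haha. I don't feel like digging into whether it's a version issue or something else; for now, this counts as working!

To sum up:

In a real environment I probably wouldn't do it this way. I'd stick with what I did before: one prometheus-operator stack per k8s cluster, all connected to a single Grafana…

What this detour really taught me was the big change in K8s 1.24: "BIG change in K8s 1.24 about ServiceAccounts and their Secrets".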
