Hung-yi Lee (李宏毅), Latest GAN Course (2018), Class 3: Theory behind GAN

Posted by ecoflex


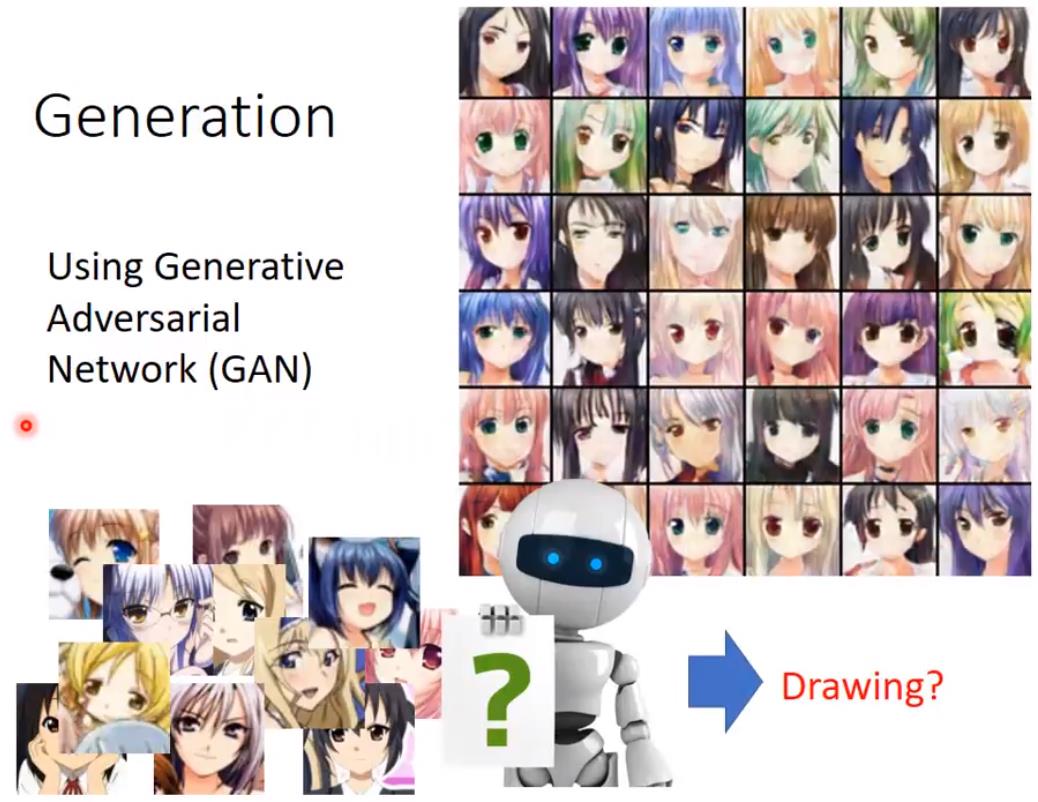

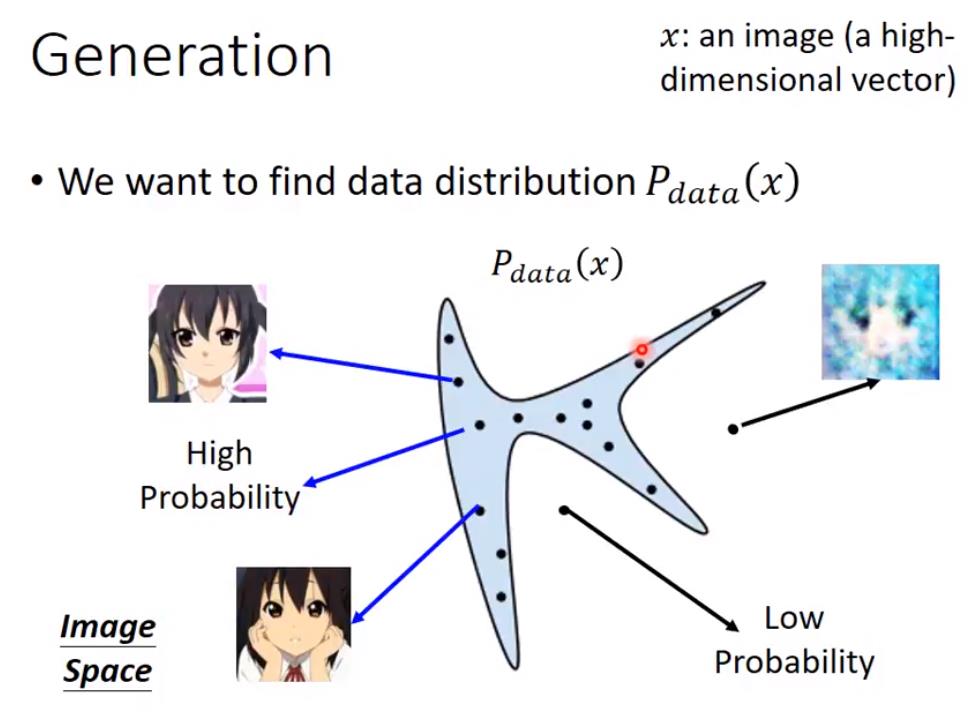

A Gaussian model is too restrictive, and the images it generates are too blurry. Can we use a more general model instead?

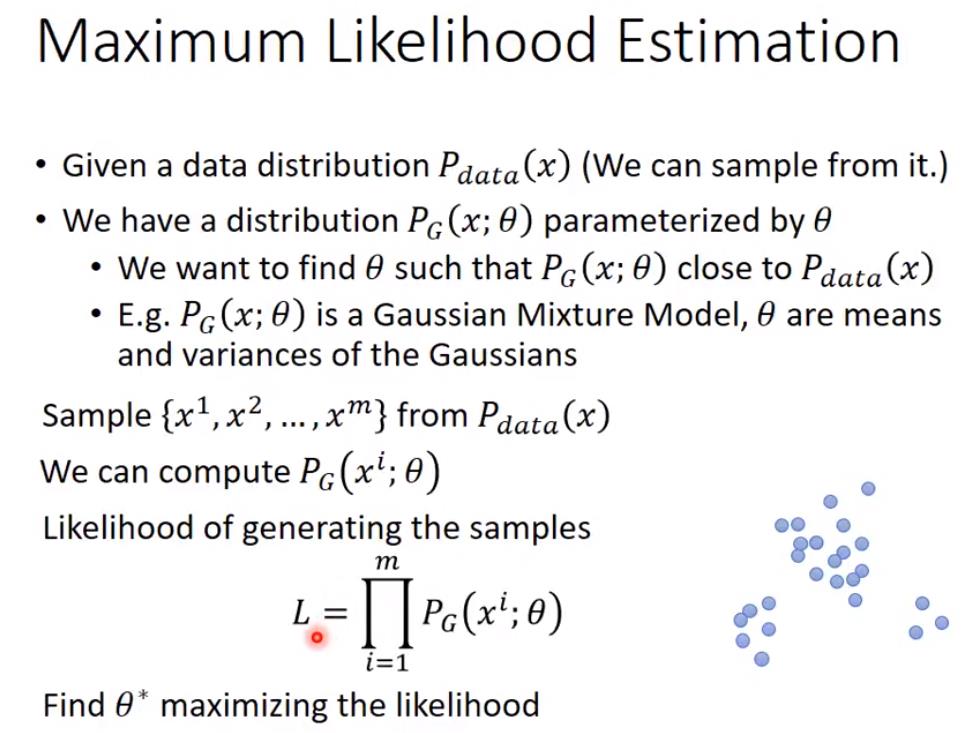

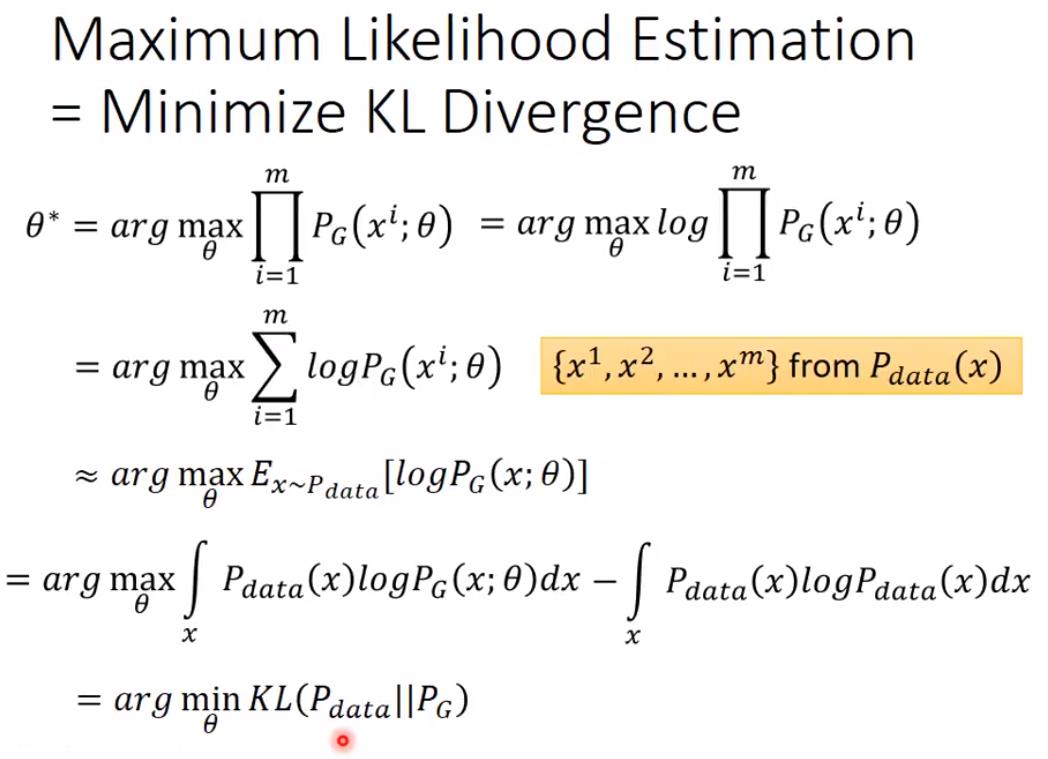

But if PG(x;θ) is defined by a neural network, the likelihood cannot be calculated directly.

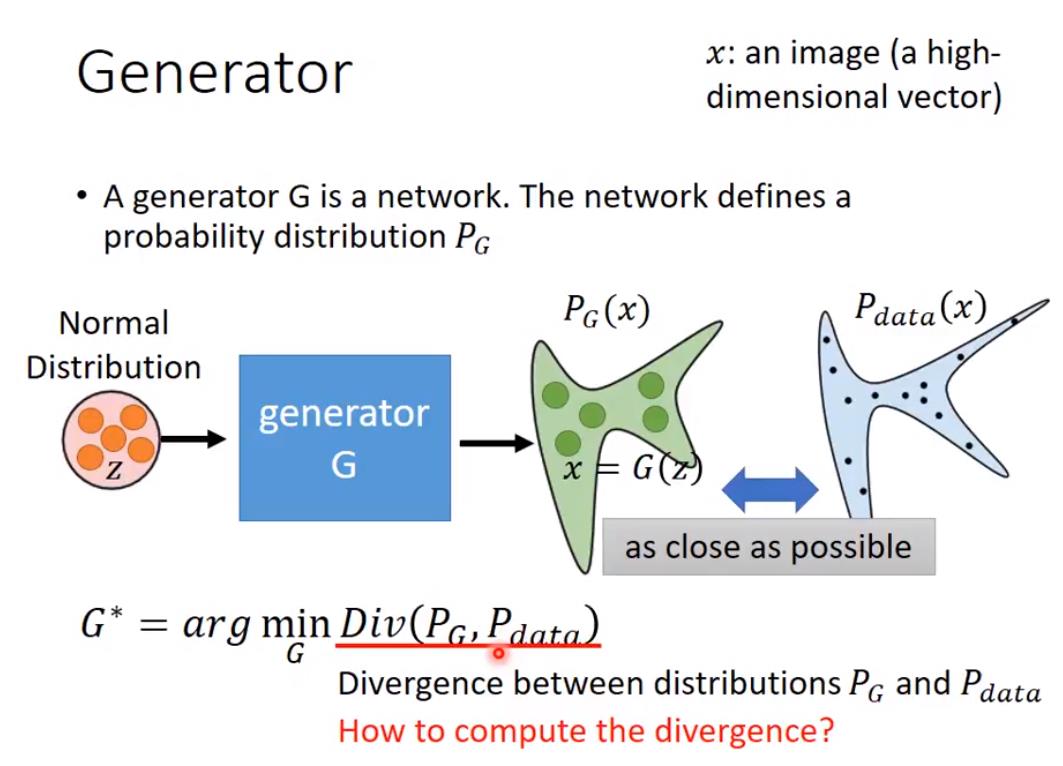

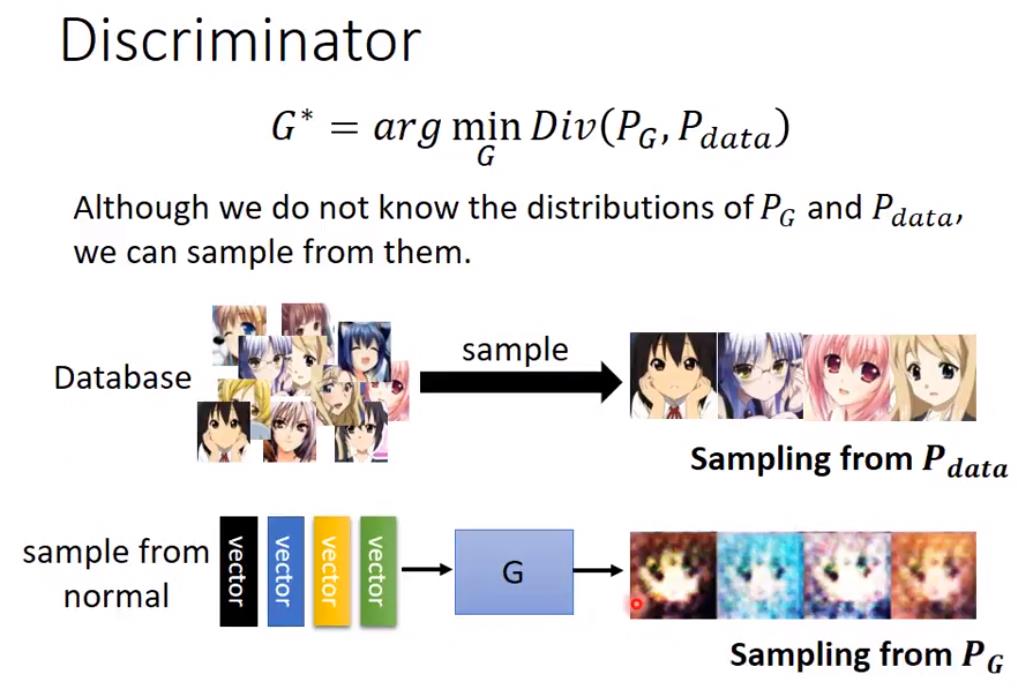

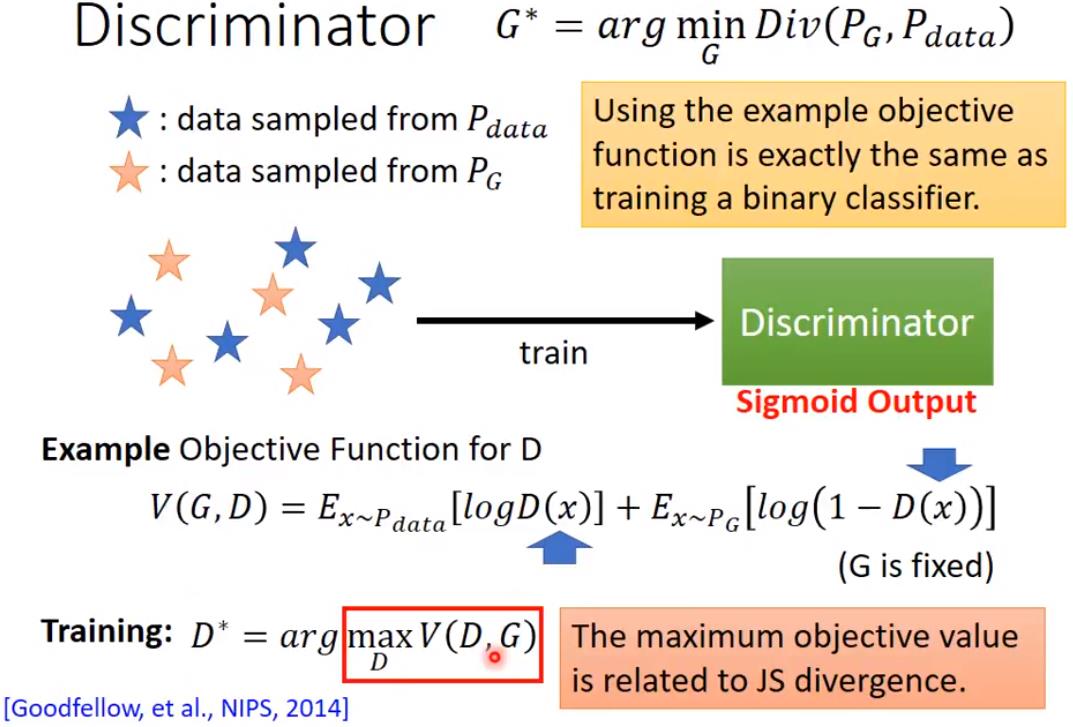

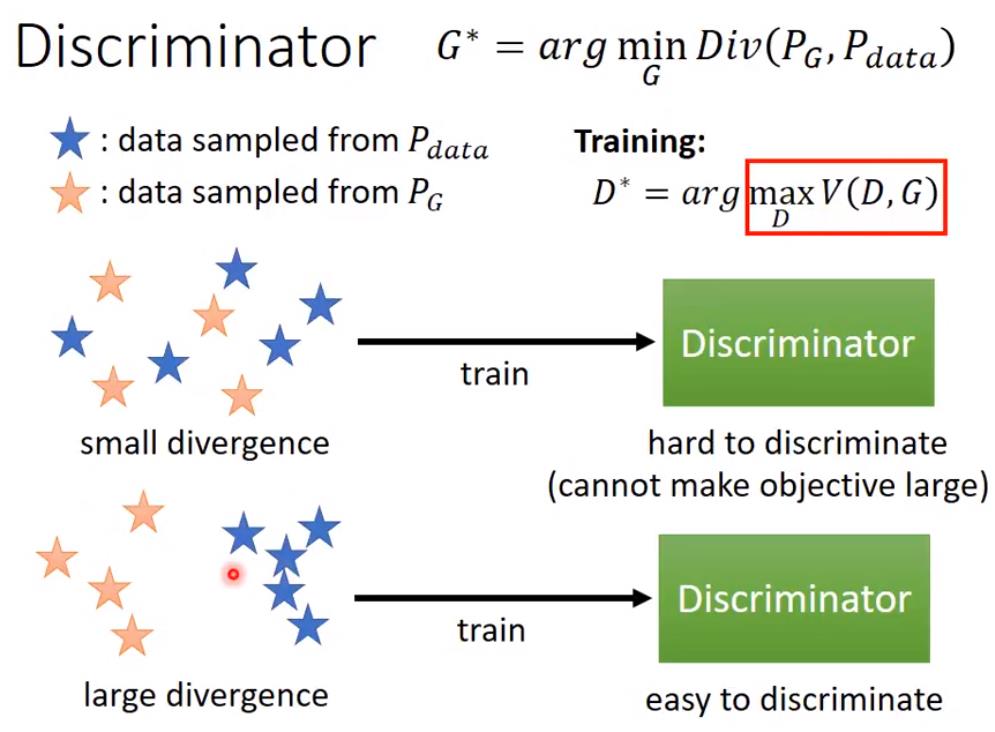

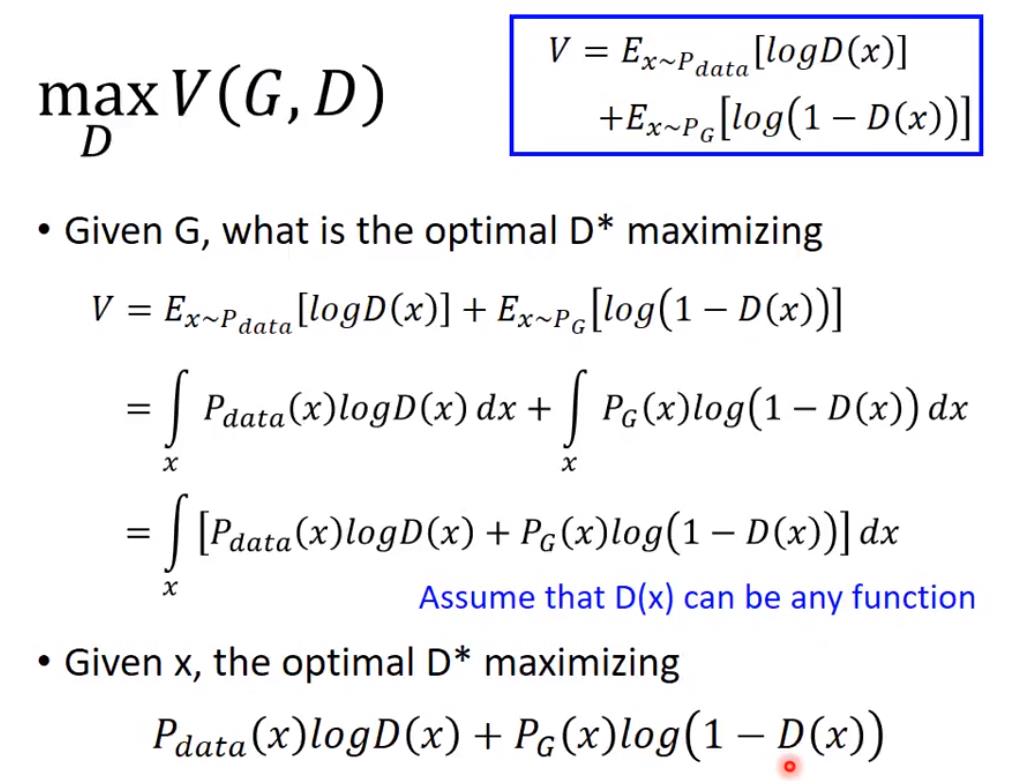

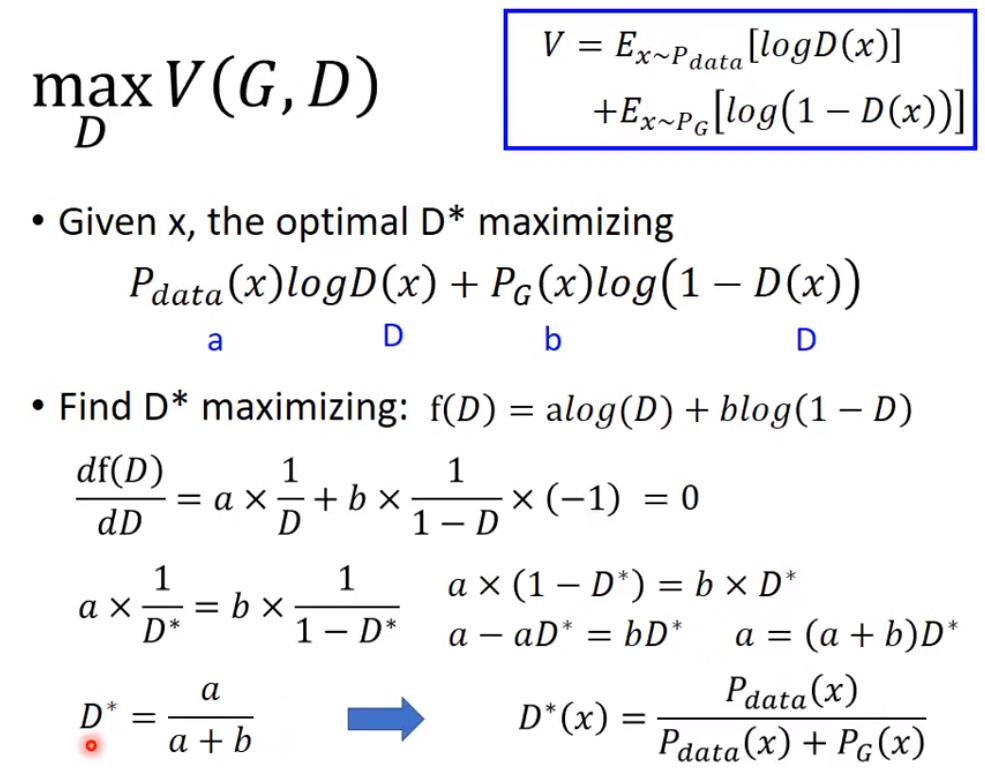

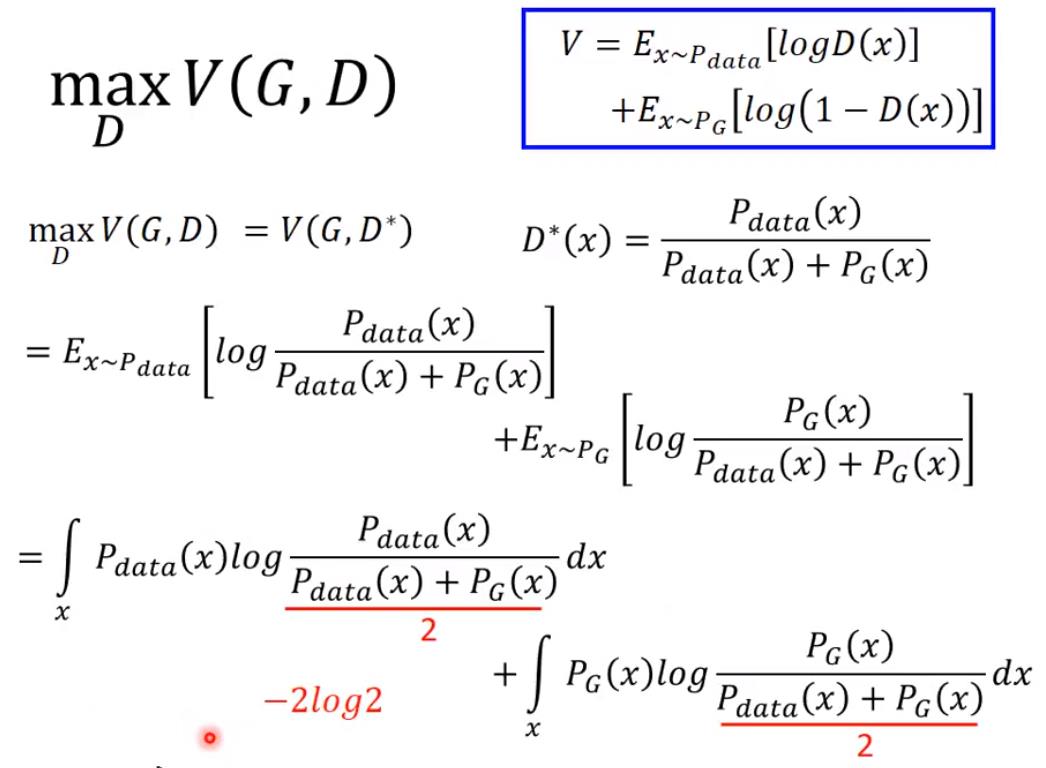

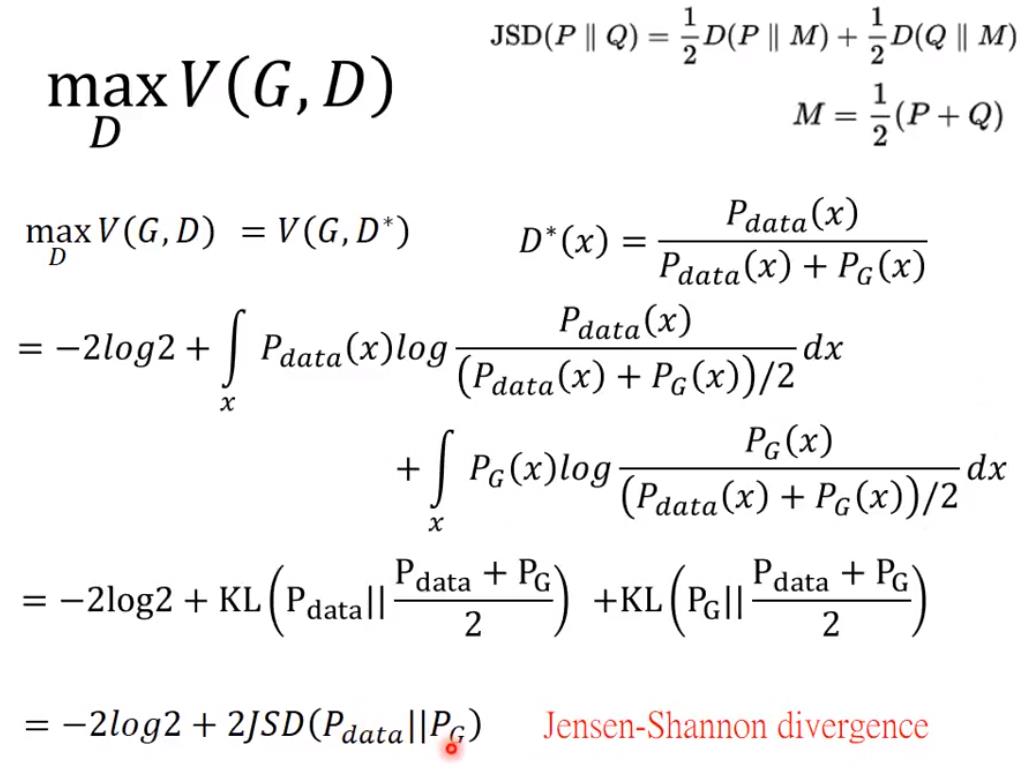

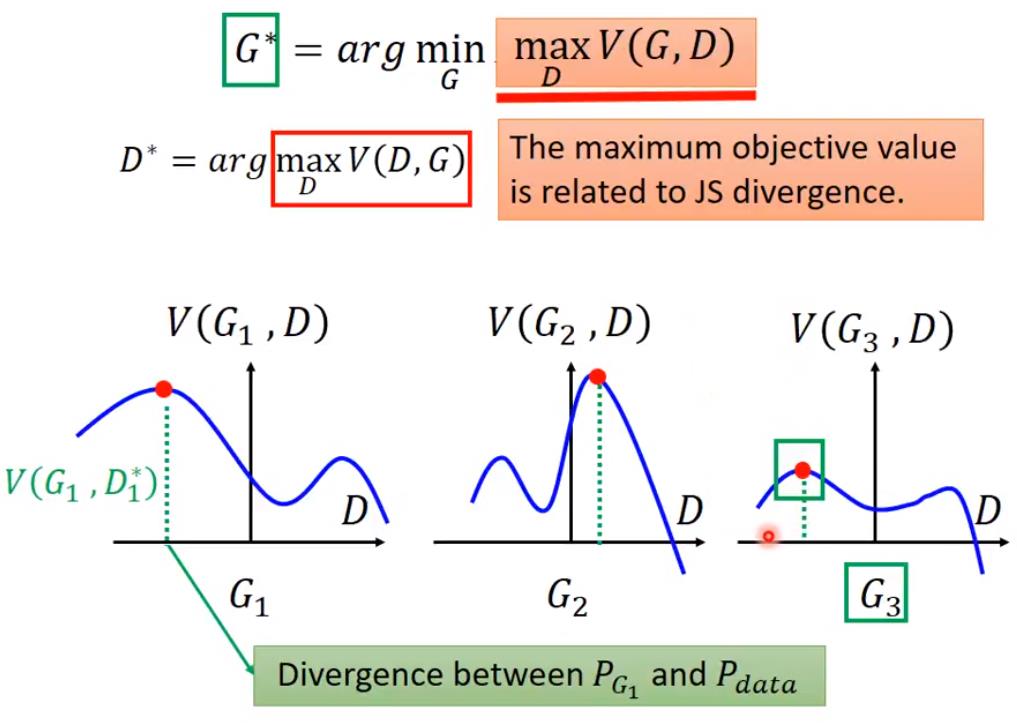

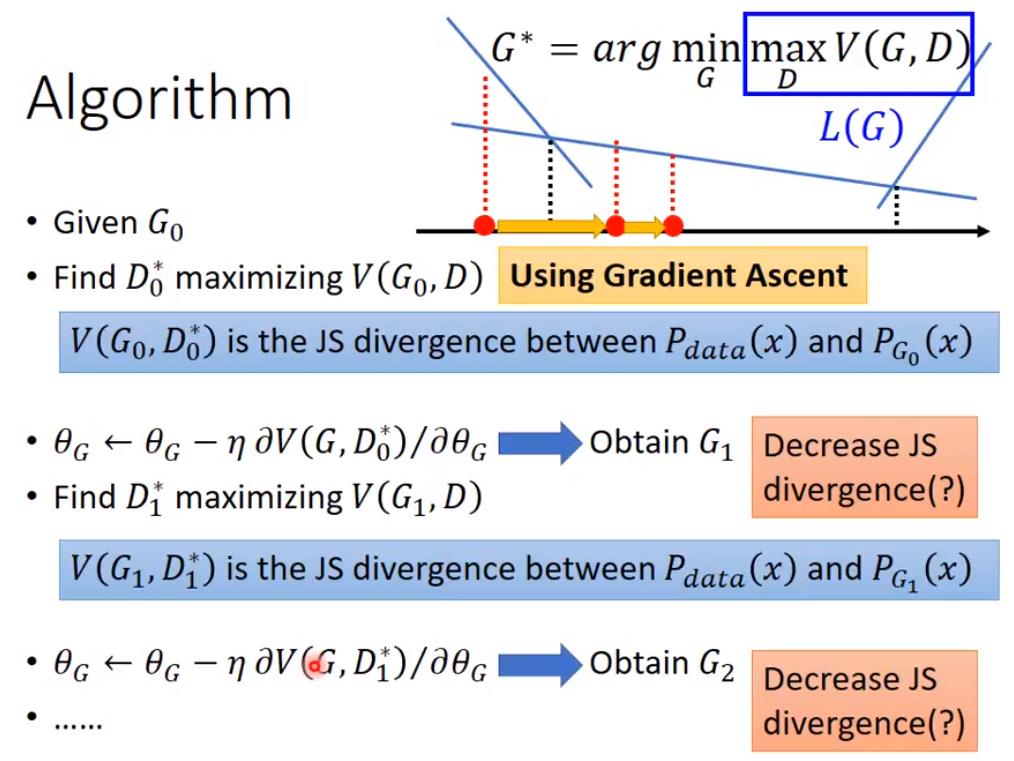

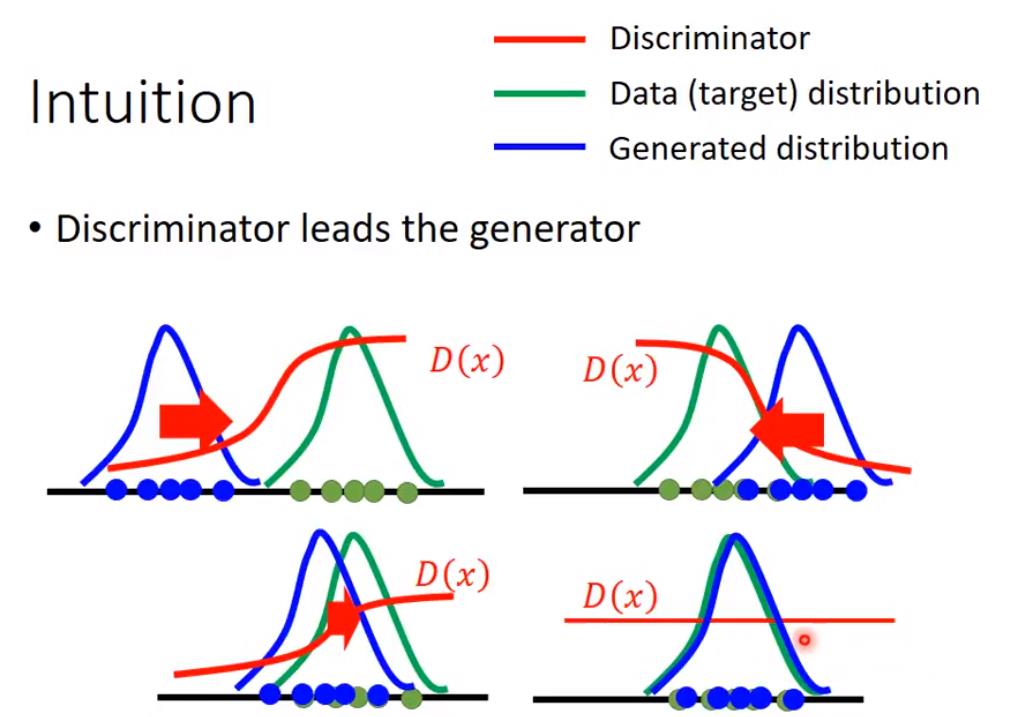

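We do not know the formulation of PG or Pdata, so how can we calculate the divergence between them? Although we cannot write the two distributions down, we can sample from both, and a discriminator trained on those samples measures the divergence.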
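For reference, the objective behind this idea can be written out as below. This is the standard GAN formulation rather than a transcription of the slides, and the symbols $D^*$ (the best discriminator for a fixed generator) and $\mathrm{JSD}$ (the Jensen-Shannon divergence) are notation introduced here.

$$
V(G, D) = \mathbb{E}_{x \sim P_{data}}\left[\log D(x)\right] + \mathbb{E}_{x \sim P_G}\left[\log\left(1 - D(x)\right)\right]
$$

$$
D^*(x) = \frac{P_{data}(x)}{P_{data}(x) + P_G(x)}, \qquad
\max_D V(G, D) = V(G, D^*) = -2\log 2 + 2\,\mathrm{JSD}\left(P_{data} \,\|\, P_G\right)
$$

So the best value the discriminator can reach is, up to a constant and a factor of 2, the JS divergence between Pdata and PG, and both expectations can be estimated from samples alone.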

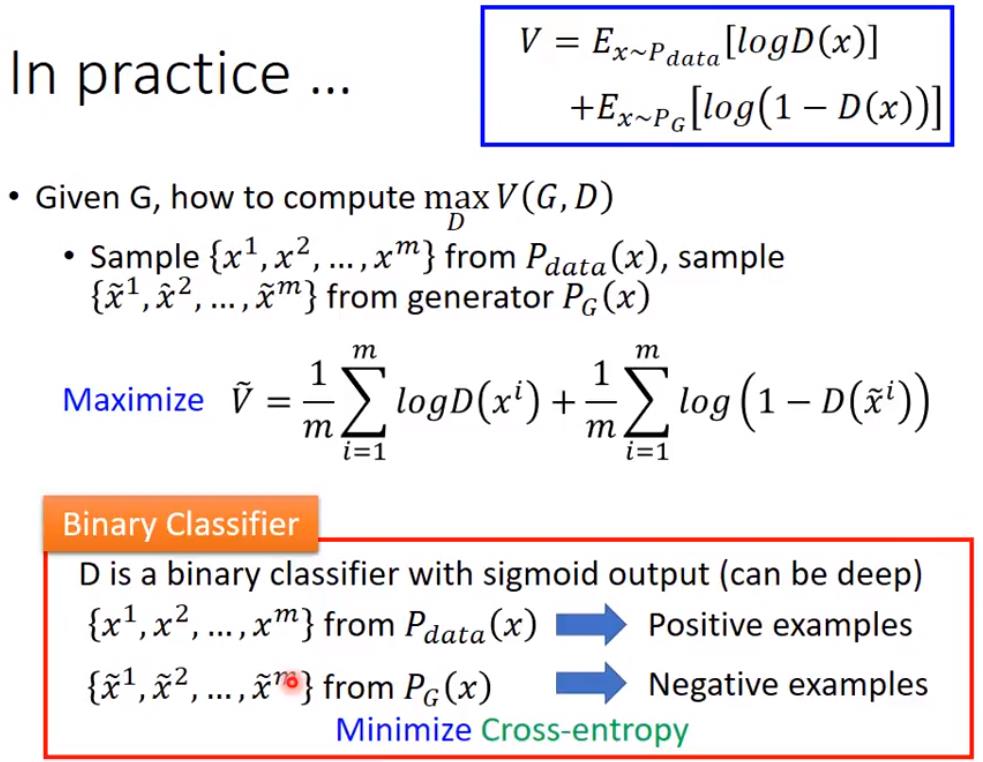

V(G,D) is large when D gives a high output to data from Pdata and a low output to data from PG. Maximizing V(G,D) over D is therefore identical to training a binary classifier.
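The link between the classifier objective and a divergence can be checked numerically on a tiny example. The sketch below assumes the standard form V(G,D) = E_{x~Pdata}[log D(x)] + E_{x~PG}[log(1 - D(x))], which is the negative of a binary cross-entropy loss, and uses two made-up distributions over three points; the function names and the numbers are illustrative assumptions, not part of the lecture.

```python
import math

def value_fn(p_data, p_g, d):
    """V(G, D) on a finite support: sum_x Pdata(x) log D(x) + PG(x) log(1 - D(x))."""
    return sum(pd * math.log(dx) + pg * math.log(1.0 - dx)
               for pd, pg, dx in zip(p_data, p_g, d))

def optimal_discriminator(p_data, p_g):
    """Pointwise maximizer of V for a fixed generator: D*(x) = Pdata / (Pdata + PG)."""
    return [pd / (pd + pg) for pd, pg in zip(p_data, p_g)]

def kl(p, q):
    """KL divergence between two discrete distributions (q must be > 0 where p > 0)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL to the mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Hypothetical distributions over three points (strictly positive, each sums to 1).
p_data = [0.1, 0.4, 0.5]
p_g = [0.3, 0.3, 0.4]

d_star = optimal_discriminator(p_data, p_g)
v_star = value_fn(p_data, p_g, d_star)

# A discriminator that always answers 0.5 cannot tell the two apart.
v_blind = value_fn(p_data, p_g, [0.5, 0.5, 0.5])

print(v_star, -2 * math.log(2) + 2 * js_divergence(p_data, p_g))
print(v_blind, -2 * math.log(2))
```

The two numbers on the first printed line should agree, which is the sense in which the trained discriminator "computes" a divergence. The uninformative discriminator only reaches -2 log 2, the value obtained when the two distributions are identical.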

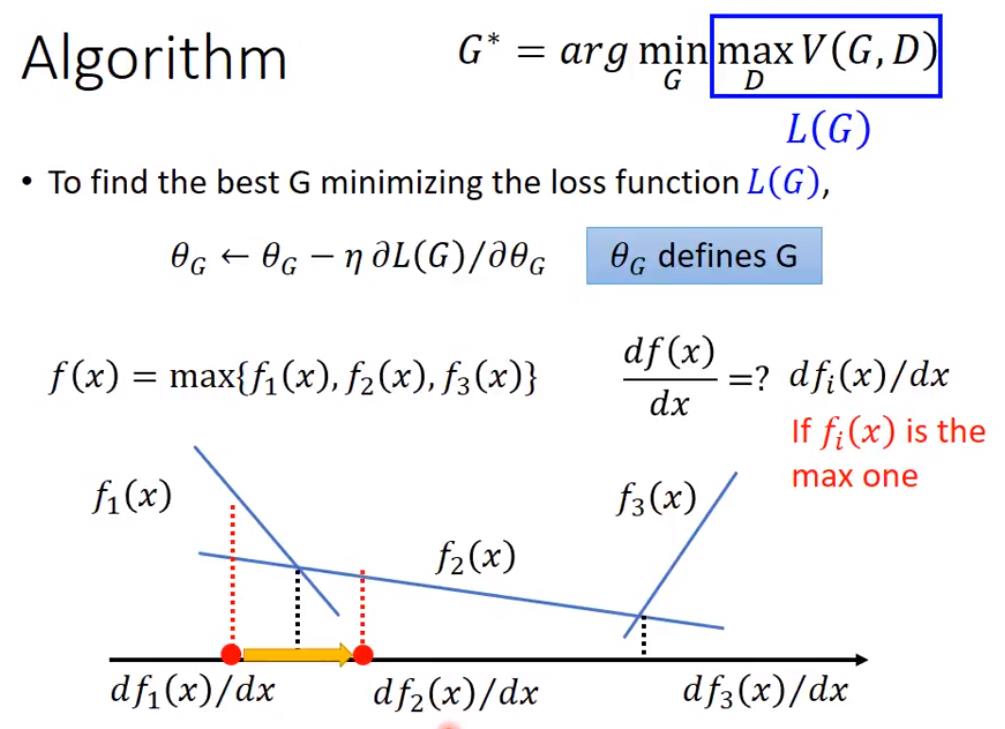

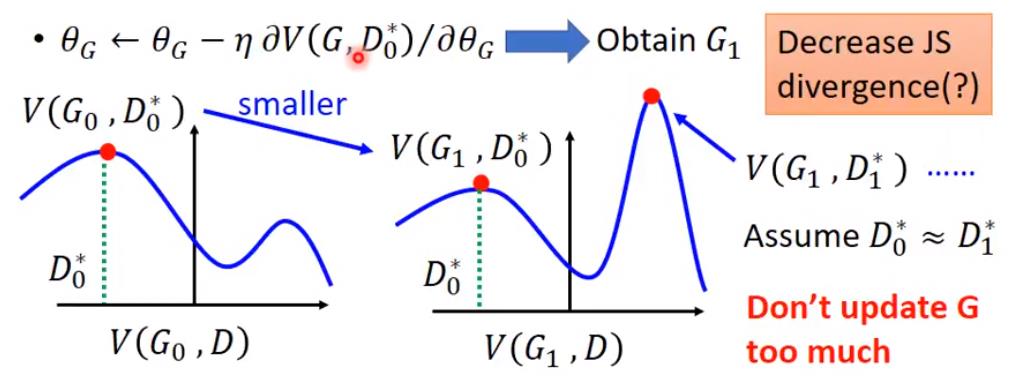

Not a minimum or a saddle point, but a maximum.

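Even though L(G) contains a max operation and so is not differentiable everywhere, its gradient can still be computed: find the D that attains the maximum for the current G, then differentiate V(G,D) with respect to G with that D held fixed.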
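The point about differentiating through a max can be illustrated with a one-dimensional toy problem. In the sketch below, x plays the role of G, the index i plays the role of D, and the three component functions f_i are arbitrary choices made for illustration. Each descent step first finds which component is currently the largest (the analogue of training D) and then follows the gradient of that component only (the analogue of updating G).

```python
# Three hypothetical component functions f_i(x), each paired with its derivative.
COMPONENTS = [
    (lambda x: -2.0 * x,      lambda x: -2.0),
    (lambda x: 0.5 * x + 1.0, lambda x: 0.5),
    (lambda x: 2.0 * x - 4.0, lambda x: 2.0),
]

def max_value_and_grad(x):
    """Return (max_i f_i(x), derivative of the active component, active index)."""
    values = [f(x) for f, _ in COMPONENTS]
    active = max(range(len(values)), key=lambda i: values[i])
    return values[active], COMPONENTS[active][1](x), active

def minimize_max(x, lr=0.1, steps=200):
    """Gradient descent on f(x) = max_i f_i(x), re-selecting the active component each step."""
    for _ in range(steps):
        _, grad, _ = max_value_and_grad(x)
        x -= lr * grad
    return x

x_final = minimize_max(5.0)
print(x_final, max_value_and_grad(x_final))
```

With a fixed step size the iterate does not settle exactly at the kink where two components cross (x = -0.4 for these functions); it keeps hopping around it, because the active component changes from step to step.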

Tip:

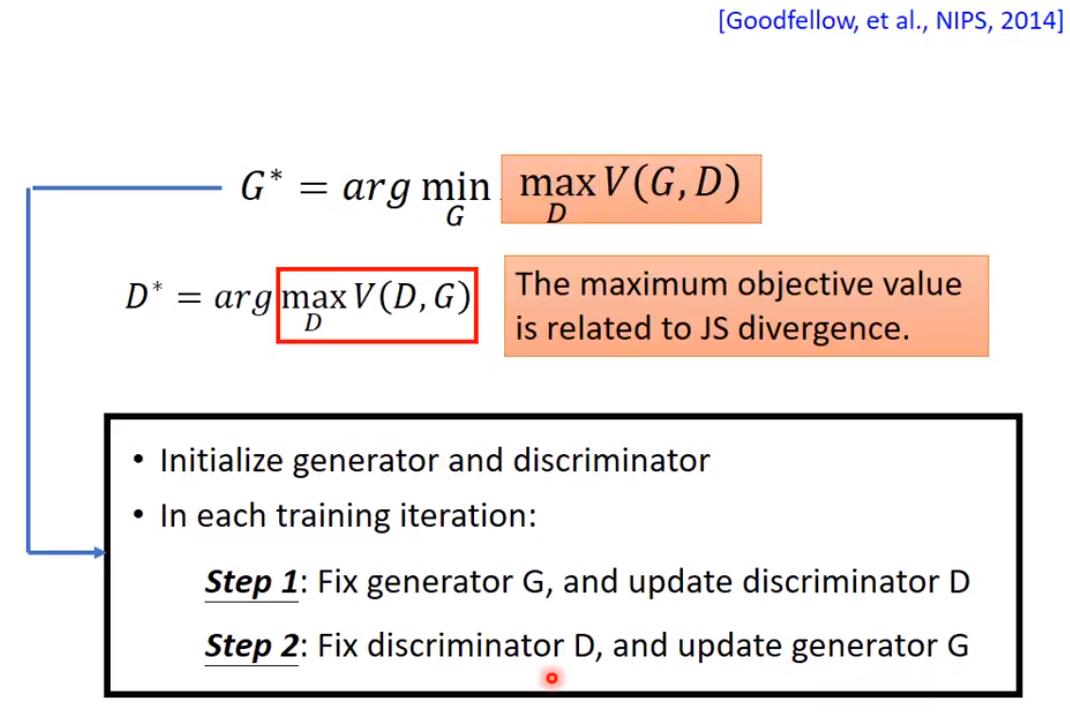

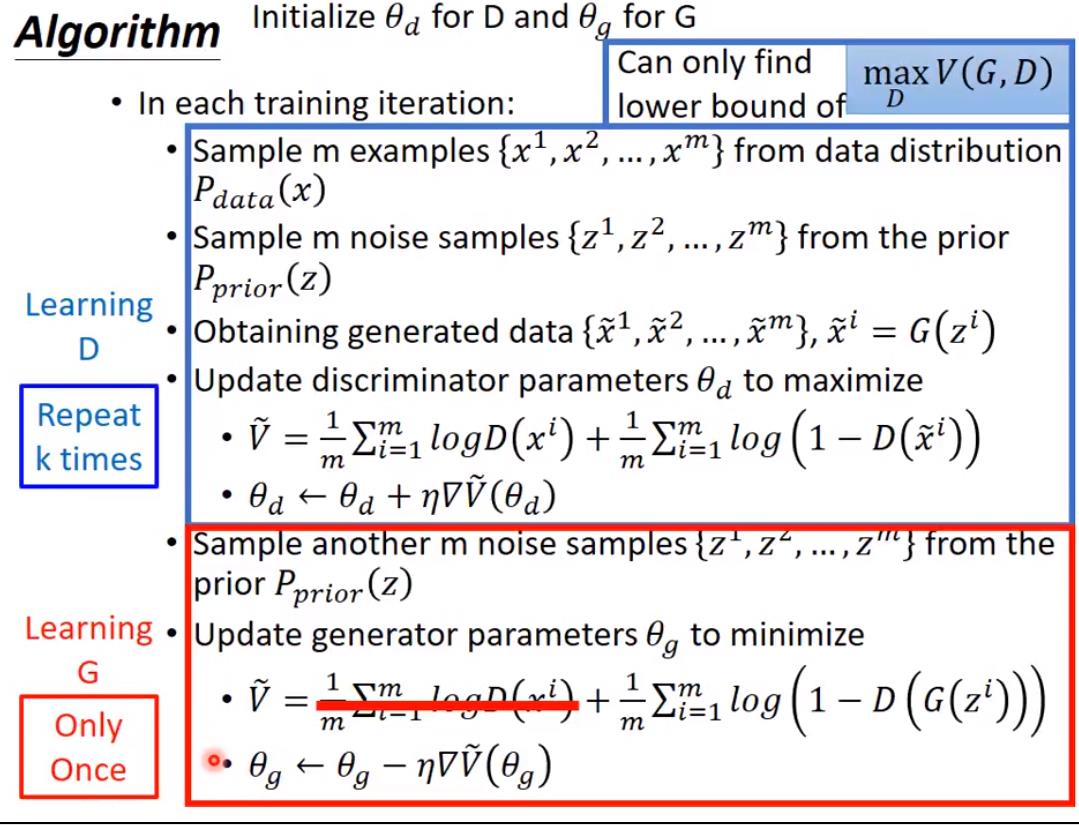

Train D as much as possible

Train G only to a moderate level
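The two tips above can be read as an update schedule: several discriminator updates for every single generator update. The skeleton below is only a sketch of that schedule. The callables `update_discriminator` and `update_generator`, and the default `k=5`, are hypothetical placeholders for whatever model and optimizer code is actually used.

```python
def train_gan(update_discriminator, update_generator, iterations, k=5):
    """Run `iterations` outer steps, each with k discriminator updates and one generator update."""
    for _ in range(iterations):
        # Train D repeatedly so that it approximates max_D V(G, D) for the current G.
        for _ in range(k):
            update_discriminator()
        # Move G only once: a large change to G would invalidate the D just trained.
        update_generator()

# Usage with dummy updates that only count how often they are called.
calls = {"D": 0, "G": 0}

def dummy_d_step():
    calls["D"] += 1

def dummy_g_step():
    calls["G"] += 1

train_gan(dummy_d_step, dummy_g_step, iterations=3, k=5)
print(calls)
```

Keeping the generator step small matters because the discriminator only measures the divergence for the generator it was trained against.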

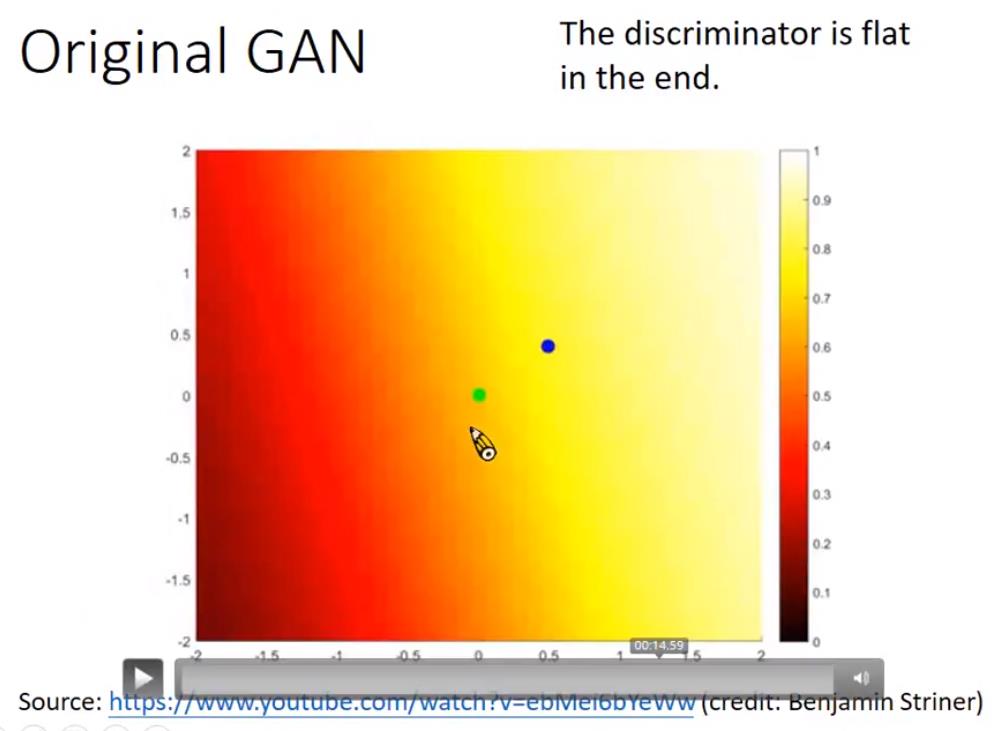

The results are actually similar.

The green points are real data; the blue points come from the generator.

https://www.youtube.com/watch?v=ebMei6bYeWw

Someone might argue that the discriminator should not be initialized from the previous discriminator, but from another perspective doing so also sounds reasonable.

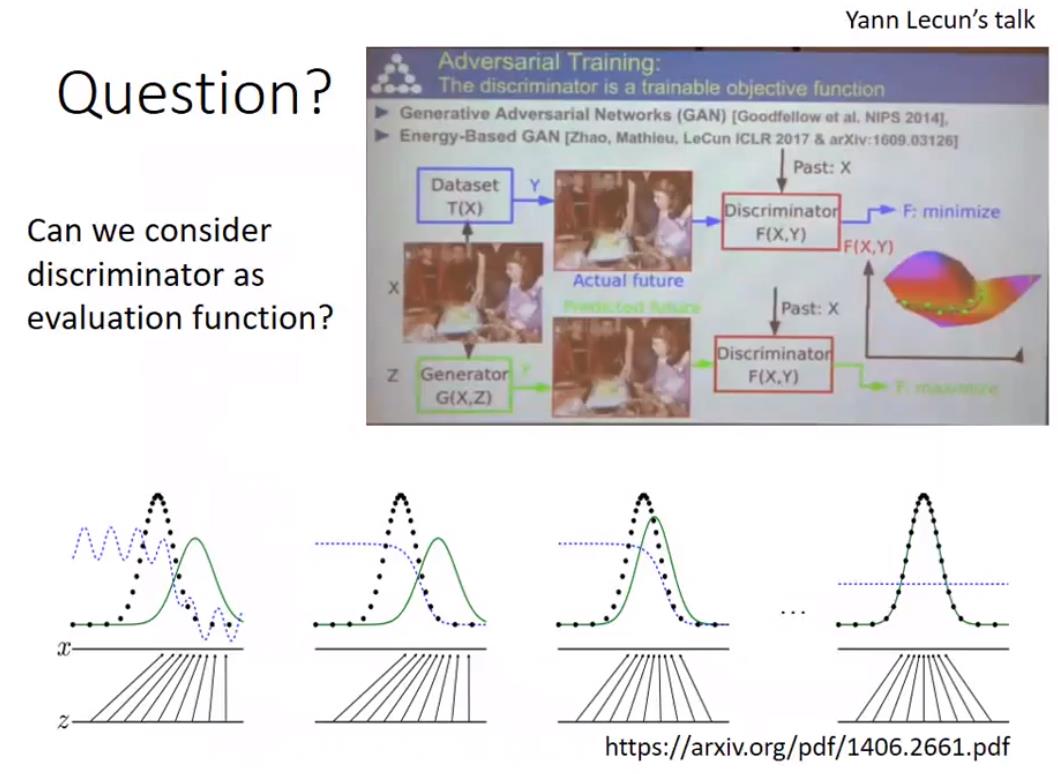

One paper shows that performance improves if the discriminator is trained on samples from both the current and past generators, although this may not sound reasonable.
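One way such mixing could be implemented is a small buffer of previously generated samples. The sketch below is an assumption about how the idea might look in code, not the method of the paper mentioned above; the class name, `capacity`, and `n_past` are all hypothetical.

```python
import random

class GeneratedSampleBuffer:
    """Keeps recent generator outputs so old samples can be mixed into new batches."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity          # assumed to be a positive integer
        self.samples = []
        self.rng = random.Random(seed)

    def add(self, batch):
        """Store a batch, dropping the oldest samples beyond the capacity."""
        self.samples.extend(batch)
        if len(self.samples) > self.capacity:
            self.samples = self.samples[-self.capacity:]

    def mixed_batch(self, current_batch, n_past):
        """Return the current samples plus up to n_past samples from earlier generators."""
        n_past = min(n_past, len(self.samples))
        past = self.rng.sample(self.samples, n_past)
        mixed = list(current_batch) + past
        self.add(current_batch)           # current samples become "past" for later calls
        return mixed

# Strings stand in for generated images; "g0", "g1", "g2" mark the generator version.
buffer = GeneratedSampleBuffer(capacity=4)
first = buffer.mixed_batch(["g0_a", "g0_b"], n_past=2)
second = buffer.mixed_batch(["g1_a", "g1_b"], n_past=2)
third = buffer.mixed_batch(["g2_a", "g2_b", "g2_c"], n_past=1)
print(first, second, third, buffer.samples)
```

The discriminator would then be given `mixed_batch(...)` as its "generated" examples in place of the current batch alone.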
