(Release Customization) Lesson 12: Spark Streaming Source Code Walkthrough of Executor Fault Tolerance

Contents of this lesson:

1. The WAL fault-tolerance mechanism in the Executor

2. Message replay

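Fault tolerance in the Executor is mainly about keeping the received data safe. Why don't we need to worry about fault tolerance for the computation itself?

Because Spark Streaming runs its computation on Spark Core RDDs and inherits their fault tolerance. A lost RDD partition can be recomputed from its lineage, so the computation is reliable by construction.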
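To make the lineage idea concrete, here is a toy model in plain Scala. It does not use Spark's API: `ToyRdd`, `evict` and `computeCount` are hypothetical names, and real Spark recomputes individual partitions rather than a whole dataset. Each dataset remembers how to derive itself from its parent, so a lost result is rebuilt instead of restored from a copy.

```scala
// Toy model of RDD lineage (not Spark's API): a dataset stores *how* to
// compute its data from its parent, so a lost result can be recomputed.
final class ToyRdd[A](compute: () => Vector[A]) {
  private var cached: Option[Vector[A]] = None
  private var computations = 0

  // Number of times this dataset has actually been computed.
  def computeCount: Int = computations

  def collect(): Vector[A] = cached match {
    case Some(data) => data
    case None =>
      computations += 1
      val data = compute()
      cached = Some(data)
      data
  }

  // Simulates losing the in-memory result, e.g. because an executor died.
  def evict(): Unit = { cached = None }

  // The child keeps a reference to this parent: that reference is its lineage.
  def map[B](f: A => B): ToyRdd[B] = new ToyRdd(() => collect().map(f))
}
```

For example, after `doubled = base.map(_ * 2)` is collected and then evicted, collecting it again re-runs only the `map` step, because the parent still holds its data. Only when the parent is lost too does the recomputation reach back to the source.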

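The Executor protects received data in two main ways:

1. Data replicas. There are two options:

a. Rely on the underlying BlockManager, which makes backup copies of each block according to the StorageLevel passed in (the `_2` levels, such as `MEMORY_AND_DISK_SER_2`, keep a second copy on another executor).

b. Use a write-ahead log (WAL).

2. Keep no replica of the received data and instead rely on a data source that retains it, meaning the same source data can be read again (replayed).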
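Option 2 is the "message replay" item from the contents list. The following is a toy sketch that assumes the source keeps every record addressable by offset, similar in spirit to a Kafka partition. `ReplayableSource` and `OffsetTrackingConsumer` are hypothetical names, not Spark or Kafka APIs. The consumer stores no copy of the data. It only remembers how far it has safely processed.

```scala
// Toy replayable source (hypothetical, not a Spark or Kafka API): records stay
// in the source and can be re-read from any offset.
final class ReplayableSource[A](records: Vector[A]) {
  def read(fromOffset: Int, maxRecords: Int): Vector[A] =
    records.slice(fromOffset, fromOffset + maxRecords)
}

// Consumer that keeps no copy of the data, only the offset of the last
// successfully processed batch.
final class OffsetTrackingConsumer[A](source: ReplayableSource[A]) {
  private var committedOffset = 0

  def committed: Int = committedOffset

  // The offset advances only after `process` returns normally, so a failed
  // batch is read again (replayed) on the next call.
  def processNext(batchSize: Int)(process: Vector[A] => Unit): Unit = {
    val batch = source.read(committedOffset, batchSize)
    process(batch) // may throw, simulating a lost executor
    committedOffset += batch.size
  }
}
```

Because the offset is committed only after processing succeeds, a failed batch is never skipped. However, any side effects it produced before failing can happen twice, so this scheme gives at-least-once processing.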

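The downside of fault tolerance: it costs extra time (writing the additional copies) and extra space (storing them).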
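As a rough illustration of the space cost, the sketch below estimates the bytes kept per received block under each replica strategy. `ReplicaCost` and its functions are hypothetical helpers. The estimate ignores serialization overhead, compression and metadata, and the numbers are illustrative, not measurements.

```scala
// Rough per-block space estimate for the two replica strategies (illustrative;
// ignores serialization overhead, compression and metadata).
object ReplicaCost {
  // BlockManager replication: one full copy per replica of the StorageLevel.
  def blockManagerBytes(blockBytes: Long, replicas: Int): Long =
    blockBytes * replicas

  // WAL: copies kept by the BlockManager plus the log file, which the
  // underlying file system (e.g. HDFS) replicates `fsReplication` times.
  def walBytes(blockBytes: Long, localReplicas: Int, fsReplication: Int): Long =
    blockBytes * (localReplicas + fsReplication)
}
```

For a 100 MB block, a `_2` storage level keeps 200 MB. Adding a WAL on HDFS, whose default replication factor is 3, while keeping the `_2` level gives 500 MB. Dropping to a single in-memory copy gives 400 MB. This is why the Spark Streaming programming guide recommends disabling in-memory replication (for example, by using `StorageLevel.MEMORY_AND_DISK_SER`) once the WAL is enabled.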

Let's take a quick look at the source code:

```scala
// ReceiverSupervisorImpl: store the block first, then report its metadata to the driver
/** Store block and report it to driver */
def pushAndReportBlock(
    receivedBlock: ReceivedBlock,
    metadataOption: Option[Any],
    blockIdOption: Option[StreamBlockId]
  ) {
  val blockId = blockIdOption.getOrElse(nextBlockId)
  val time = System.currentTimeMillis
  // Delegates to the configured ReceivedBlockHandler (BlockManager- or WAL-based)
  val blockStoreResult = receivedBlockHandler.storeBlock(blockId, receivedBlock)
  logDebug(s"Pushed block $blockId in ${(System.currentTimeMillis - time)} ms")
  val numRecords = blockStoreResult.numRecords
  val blockInfo = ReceivedBlockInfo(streamId, numRecords, metadataOption, blockStoreResult)
  // Tell the driver-side ReceiverTracker that the block is stored
  trackerEndpoint.askWithRetry[Boolean](AddBlock(blockInfo))
  logDebug(s"Reported block $blockId")
}
```
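The important detail in `pushAndReportBlock` is the ordering: the block is stored before the driver is told about it. The toy sketch below isolates that ordering. `BlockStore`, `BlockTracker`, `Supervisor` and `BlockInfo` are hypothetical stand-ins. They are not Spark's classes, and there is no RPC, retry, or logging.

```scala
import scala.collection.mutable

// Hypothetical stand-ins for the pieces pushAndReportBlock wires together.
final case class BlockInfo(streamId: Int, blockId: Long, numRecords: Option[Long])

trait BlockStore {
  // Persists the records and returns how many were stored, if known.
  def store(blockId: Long, records: Seq[String]): Option[Long]
}

// Driver-side registry of blocks that receivers have reported.
final class BlockTracker {
  private val blocks = mutable.ArrayBuffer.empty[BlockInfo]
  def addBlock(info: BlockInfo): Boolean = { blocks += info; true }
  def reported: List[BlockInfo] = blocks.toList
}

final class Supervisor(streamId: Int, store: BlockStore, tracker: BlockTracker) {
  private var nextBlockId = 0L

  def pushAndReport(records: Seq[String]): Unit = {
    val blockId = nextBlockId
    nextBlockId += 1
    // 1. Store first: if this throws, the driver never hears about the block.
    val numRecords = store.store(blockId, records)
    // 2. Report only after the store succeeded.
    tracker.addBlock(BlockInfo(streamId, blockId, numRecords))
  }
}
```

With this ordering, every block the driver schedules work for has already been stored (and, with the WAL handler, logged). If storing fails, the driver simply never learns about that block.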

```scala
// ReceiverSupervisorImpl: choose how received blocks are made fault tolerant
private val receivedBlockHandler: ReceivedBlockHandler = {
  // true when spark.streaming.receiver.writeAheadLog.enable is set to true
  if (WriteAheadLogUtils.enableReceiverLog(env.conf)) {
    if (checkpointDirOption.isEmpty) {
      throw new SparkException(
        "Cannot enable receiver write-ahead log without checkpoint directory set. " +
          "Please use streamingContext.checkpoint() to set the checkpoint directory. " +
          "See documentation for more details.")
    }
    new WriteAheadLogBasedBlockHandler(env.blockManager, receiver.streamId,
      receiver.storageLevel, env.conf, hadoopConf, checkpointDirOption.get) // fault tolerance via the WAL
  } else {
    new BlockManagerBasedBlockHandler(env.blockManager, receiver.storageLevel) // fault tolerance via BlockManager replication
  }
}
```
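The selection above can be mimicked with a small, self-contained sketch. It shows why the WAL handler needs a checkpoint directory: the log must live somewhere that survives the executor. All names here (`BlockHandler`, `MemoryBlockHandler`, `WalBlockHandler`, `BlockHandlers.create`, the file name `receiver-wal.log`) are hypothetical. The log is a local text file that assumes records contain no tabs or newlines. It also writes sequentially, whereas Spark's `WriteAheadLogBasedBlockHandler` writes to the BlockManager and to the log in parallel and waits for both.

```scala
import java.nio.charset.StandardCharsets.UTF_8
import java.nio.file.{Files, Path, StandardOpenOption}
import scala.collection.mutable

// Hypothetical, simplified stand-ins for the two handlers chosen above.
trait BlockHandler {
  def storeBlock(blockId: String, records: Seq[String]): Unit
}

// BlockManager-style strategy (simplified): the block lives only in executor memory.
class MemoryBlockHandler extends BlockHandler {
  val memory: mutable.Map[String, Seq[String]] = mutable.Map.empty
  def storeBlock(blockId: String, records: Seq[String]): Unit = {
    memory(blockId) = records
  }
}

// WAL strategy (simplified): append the block to a log file in the checkpoint
// directory, then keep it in memory. Assumes records contain no tabs/newlines.
final class WalBlockHandler(checkpointDir: Path) extends MemoryBlockHandler {
  val logFile: Path = checkpointDir.resolve("receiver-wal.log")

  override def storeBlock(blockId: String, records: Seq[String]): Unit = {
    val entry = (blockId +: records).mkString("\t") + "\n"
    Files.write(logFile, entry.getBytes(UTF_8),
      StandardOpenOption.CREATE, StandardOpenOption.APPEND)
    super.storeBlock(blockId, records)
  }

  // Rebuilds every logged block, e.g. after the in-memory copy is lost.
  def recover(): Map[String, Seq[String]] =
    new String(Files.readAllBytes(logFile), UTF_8)
      .split("\n").toSeq
      .filter(_.nonEmpty)
      .map { line =>
        val fields = line.split("\t").toSeq
        fields.head -> fields.tail
      }
      .toMap
}

object BlockHandlers {
  // Mirrors the guard in the Spark code: a WAL needs a checkpoint directory.
  def create(walEnabled: Boolean, checkpointDir: Option[Path]): BlockHandler =
    if (walEnabled) {
      val dir = checkpointDir.getOrElse(throw new IllegalStateException(
        "Cannot enable receiver write-ahead log without checkpoint directory set."))
      new WalBlockHandler(dir)
    } else {
      new MemoryBlockHandler
    }
}
```

After clearing `memory` to simulate a lost executor, `recover()` still returns every block that was logged. A plain `MemoryBlockHandler` has no such path and would depend on a replica or a replayable source instead.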

```scala
// BlockManagerBasedBlockHandler: store the block in the BlockManager with the receiver's
// StorageLevel; the BlockManager replicates it to other executors if the level asks for it
def storeBlock(blockId: StreamBlockId, block: ReceivedBlock): ReceivedBlockStoreResult = {

  var numRecords = None: Option[Long]

  val putResult: Seq[(BlockId, BlockStatus)] = block match {
    case ArrayBufferBlock(arrayBuffer) =>
      // Record count is known up front
      numRecords = Some(arrayBuffer.size.toLong)
      blockManager.putIterator(blockId, arrayBuffer.iterator, storageLevel,
        tellMaster = true)
    case IteratorBlock(iterator) =>
      // Count the records while the BlockManager consumes the iterator
      val countIterator = new CountingIterator(iterator)
      val putResult = blockManager.putIterator(blockId, countIterator, storageLevel,
        tellMaster = true)
      numRecords = countIterator.count
      putResult
    case ByteBufferBlock(byteBuffer) =>
      // Already-serialized bytes: the record count stays unknown
      blockManager.putBytes(blockId, byteBuffer, storageLevel, tellMaster = true)
    case o =>
      throw new SparkException(
        s"Could not store $blockId to block manager, unexpected block type ${o.getClass.getName}")
  }
  // Fail loudly if the block was not actually stored
  if (!putResult.map { _._1 }.contains(blockId)) {
    throw new SparkException(
      s"Could not store $blockId to block manager with storage level $storageLevel")
  }
  BlockManagerBasedStoreResult(blockId, numRecords)
}
```
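For an `IteratorBlock`, the number of records is not known until `putIterator` has drained the iterator. That is why the code wraps it in a `CountingIterator` and reads the count afterwards. Below is a minimal counting wrapper in the same spirit. It is a sketch, not Spark's internal class, and it uses the hypothetical method name `recordCount` to avoid clashing with `Iterator.count(p)`. It reports a total only once the wrapped iterator is exhausted.

```scala
// Minimal counting wrapper in the spirit of the CountingIterator used above
// (a sketch, not Spark's class): it counts records as they are pulled, so the
// total is only known once the consumer has drained the iterator.
final class CountingIterator[T](underlying: Iterator[T]) extends Iterator[T] {
  private var consumed = 0L

  def hasNext: Boolean = underlying.hasNext

  def next(): T = {
    val element = underlying.next() // advance first so a failed next() is not counted
    consumed += 1
    element
  }

  // Some(total) once fully consumed, None while records remain.
  def recordCount: Option[Long] = if (underlying.hasNext) None else Some(consumed)
}
```

Counting during consumption avoids materializing the iterator twice, which matters because a receiver's iterator may be large and can be traversed only once.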

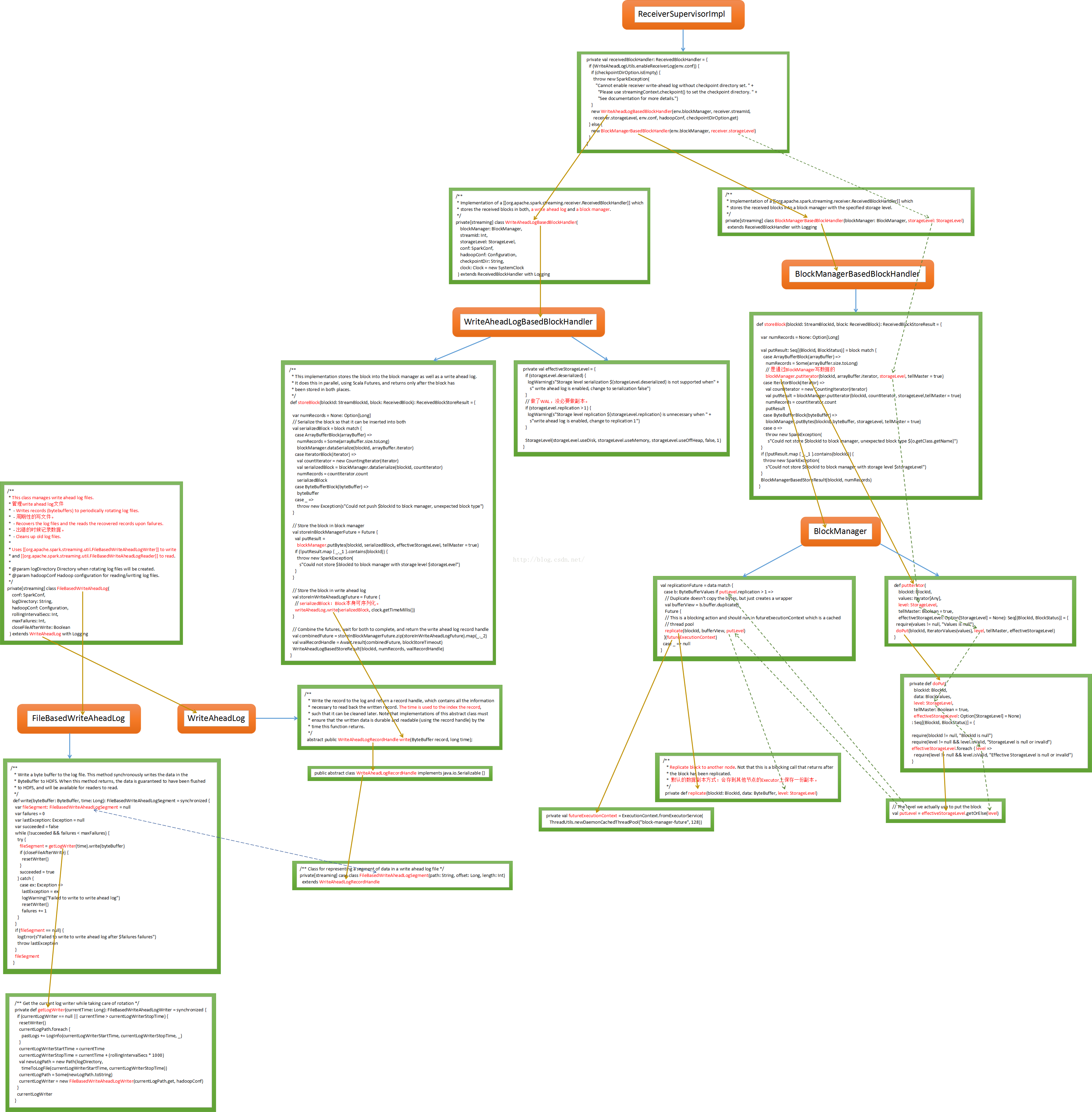

A simple flowchart:

Reference blog: http://blog.csdn.net/hanburgud/article/details/51471089

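Notes:

Source: DT_大数据梦工厂 (Spark release customization course)

For more exclusive content, follow the WeChat public account: DT_Spark

If you are interested in big data and Spark, you can attend the permanently free public Spark course taught by Wang Jialin (王家林) every evening at 20:00 in YY room 68917580.

This article originally appeared on the "DT_Spark大数据梦工厂" blog; please retain this attribution: http://18610086859.blog.51cto.com/11484530/1782179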
