Deep RL Bootcamp Lecture 8 Derivative Free Methods

Posted ecoflex


In derivative-free optimization (DFO), you don't try to exploit any problem structure.
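To make the black-box view concrete, here is a minimal sketch of an evolution-strategy style update on a toy objective. It is not taken from the lecture slides: the function names `evolution_strategy` and `toy_return`, the hyperparameters, and the quadratic objective are all assumptions chosen for illustration. The point is only that the optimizer calls `f` and never looks inside it.

```python
import random


def evolution_strategy(f, theta, sigma=0.1, lr=0.05, pop=50, iters=200, seed=0):
    """Maximize f using only function evaluations (no gradients of f)."""
    rng = random.Random(seed)
    theta = list(theta)
    n = len(theta)
    for _ in range(iters):
        grad = [0.0] * n
        for _ in range(pop):
            # Sample a random perturbation direction in parameter space.
            eps = [rng.gauss(0.0, 1.0) for _ in range(n)]
            # Evaluate the black box at mirrored points (antithetic sampling).
            plus = f([t + sigma * e for t, e in zip(theta, eps)])
            minus = f([t - sigma * e for t, e in zip(theta, eps)])
            for i in range(n):
                grad[i] += (plus - minus) * eps[i]
        # Finite-difference style estimate of the ascent direction.
        theta = [t + lr * g / (2 * sigma * pop) for t, g in zip(theta, grad)]
    return theta


def toy_return(theta):
    # Hypothetical stand-in for an episode return; the optimum is at `target`.
    target = [1.0, -2.0, 0.5]
    return -sum((t - g) ** 2 for t, g in zip(theta, target))


best = evolution_strategy(toy_return, [0.0, 0.0, 0.0])
print(best)
```

In a reinforcement learning setting, `f` would run one or more episodes with policy parameters `theta` and return the total reward, which makes each evaluation noisy and expensive.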

Low-dimensional policy:

30 degrees of freedom

120 parameters to tune

Keep the positive results, but update in a smooth way.
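One possible reading of this note is a cross-entropy-method style update: keep only the best-scoring samples (the elite), and move the sampling distribution toward them gradually instead of jumping. The sketch below assumes that reading. The names `cross_entropy_method` and `toy_score`, the smoothing factor `alpha`, and the other hyperparameters are illustrative assumptions, not values from the lecture.

```python
import random
import statistics


def cross_entropy_method(f, mean, std, pop=50, elite_frac=0.2,
                         alpha=0.7, iters=40, seed=0):
    """Maximize f by refitting a diagonal Gaussian to the elite samples."""
    rng = random.Random(seed)
    mean, std = list(mean), list(std)
    n_elite = max(2, int(pop * elite_frac))
    for _ in range(iters):
        # Sample candidate parameter vectors from the current Gaussian.
        samples = [[rng.gauss(m, s) for m, s in zip(mean, std)]
                   for _ in range(pop)]
        # Keep only the best-scoring candidates.
        samples.sort(key=f, reverse=True)
        elite = samples[:n_elite]
        for i in range(len(mean)):
            col = [x[i] for x in elite]
            # Smoothed update: interpolate between old and elite statistics.
            mean[i] = (1 - alpha) * mean[i] + alpha * statistics.mean(col)
            std[i] = (1 - alpha) * std[i] + alpha * statistics.pstdev(col)
    return mean


def toy_score(theta):
    # Hypothetical stand-in for an episode return; the optimum is at `target`.
    target = [1.0, -2.0, 0.5]
    return -sum((t - g) ** 2 for t, g in zip(theta, target))


result = cross_entropy_method(toy_score, [0.0, 0.0, 0.0], [3.0, 3.0, 3.0])
print(result)
```

Setting `alpha=1.0` would replace the distribution with the elite statistics outright; smaller values make each step more conservative, at the cost of slower convergence.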

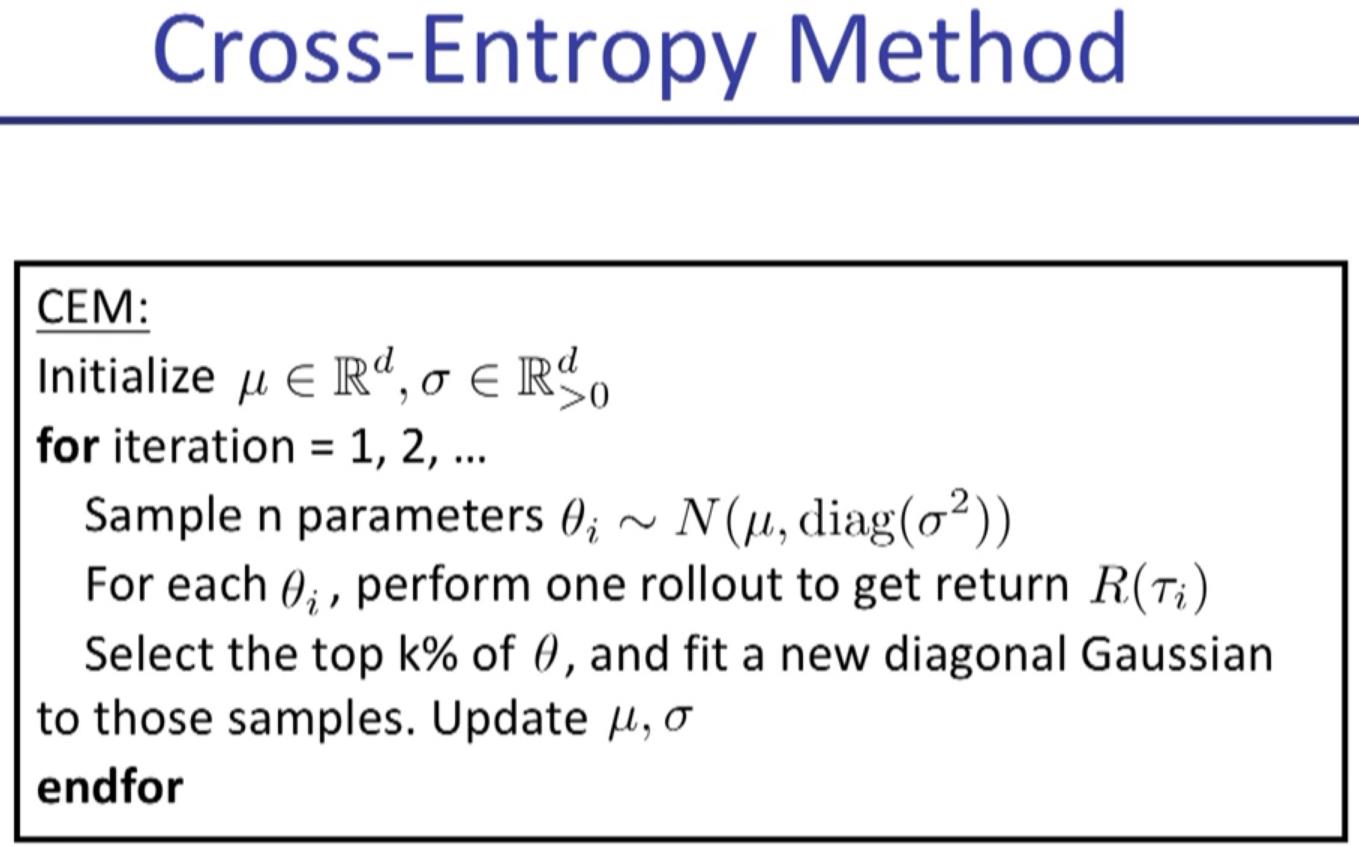

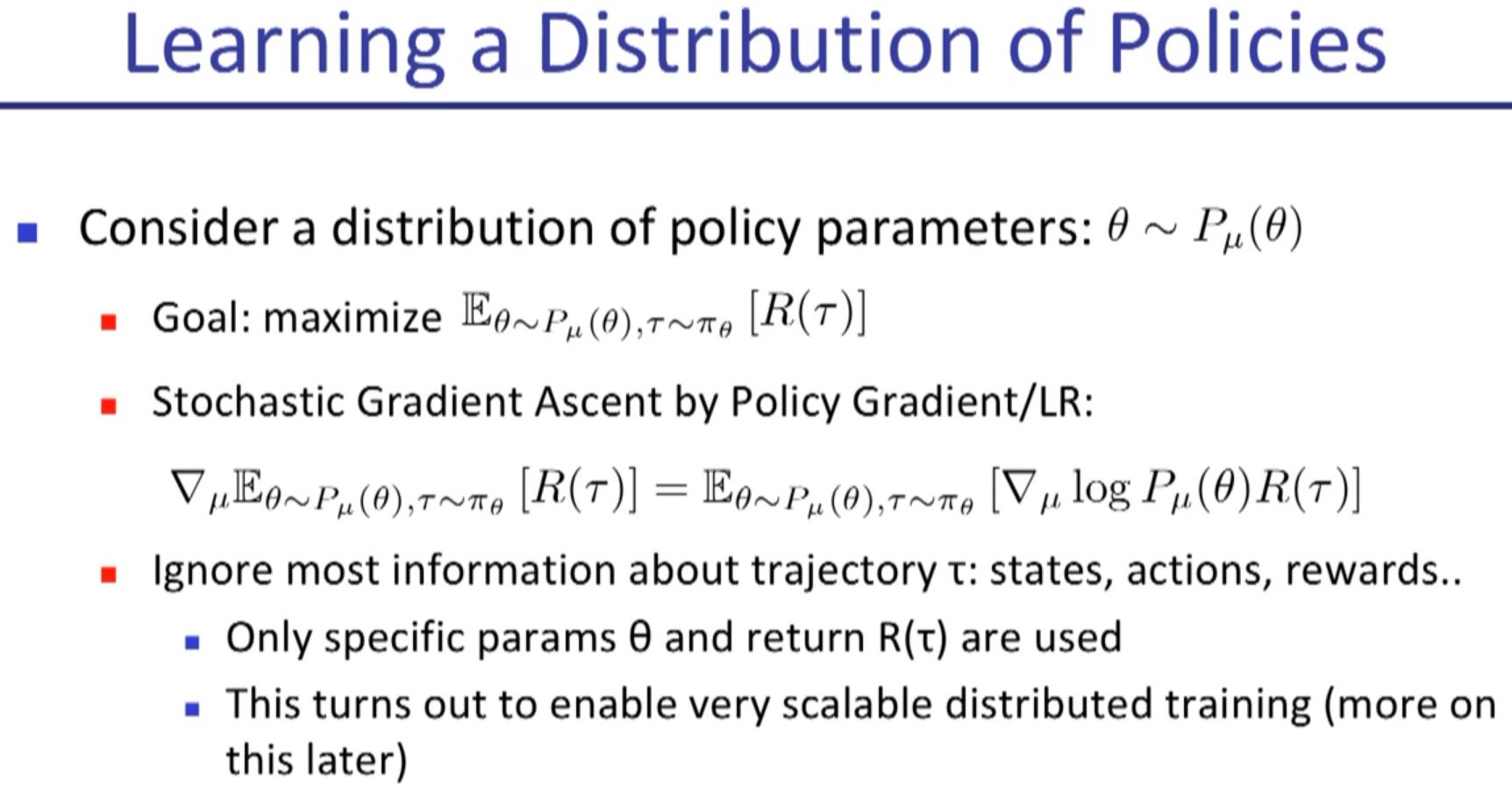

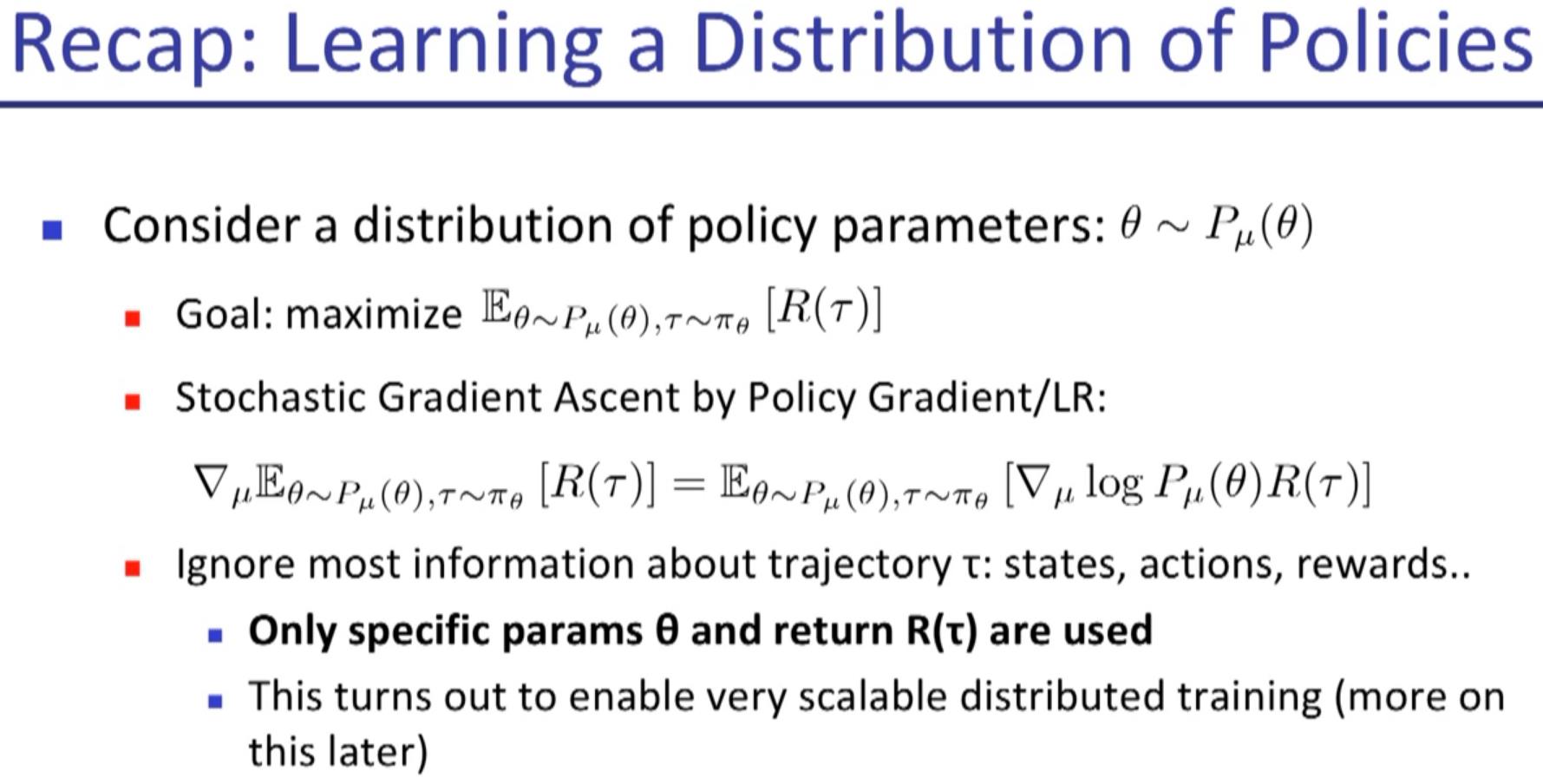

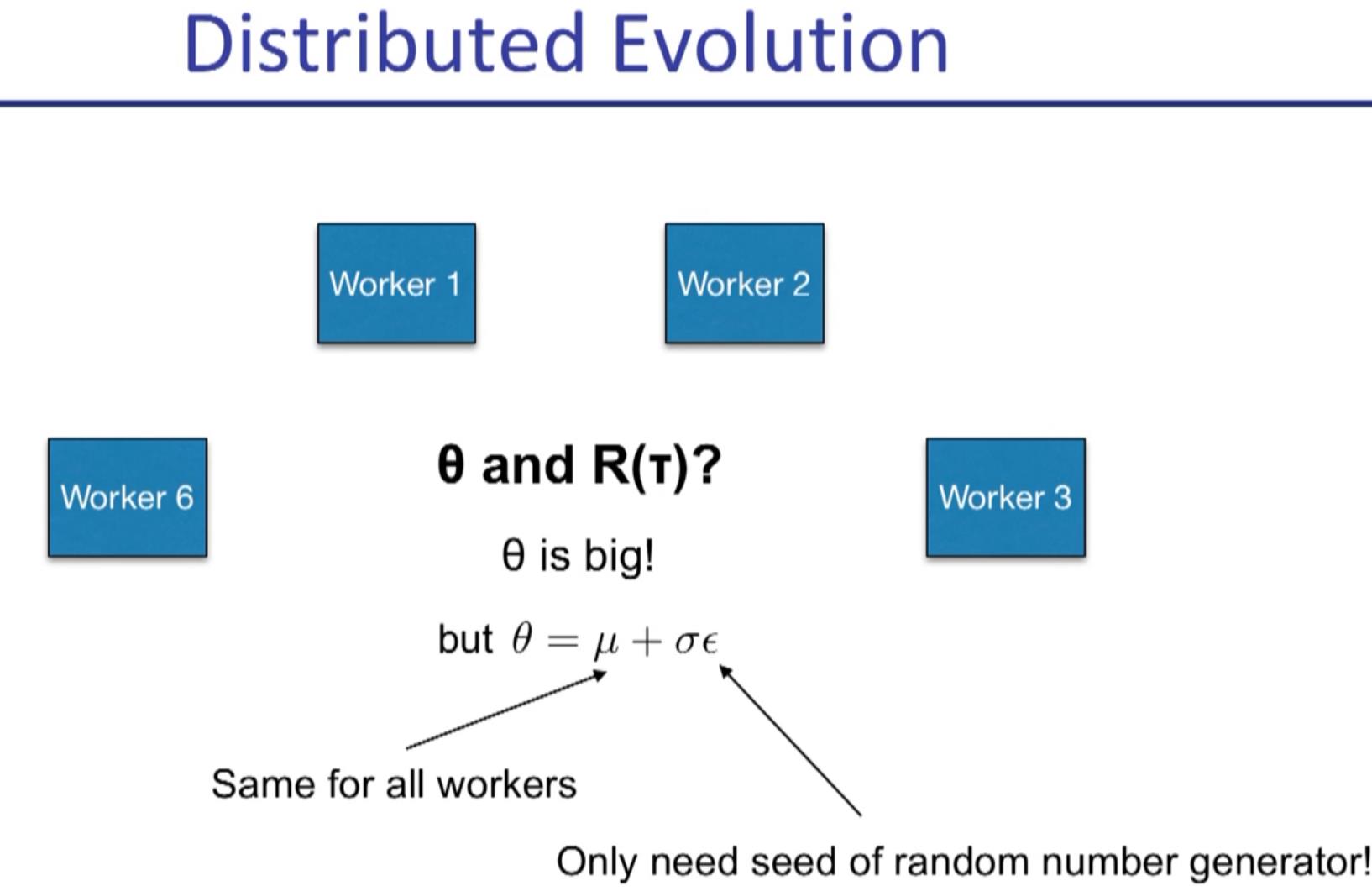

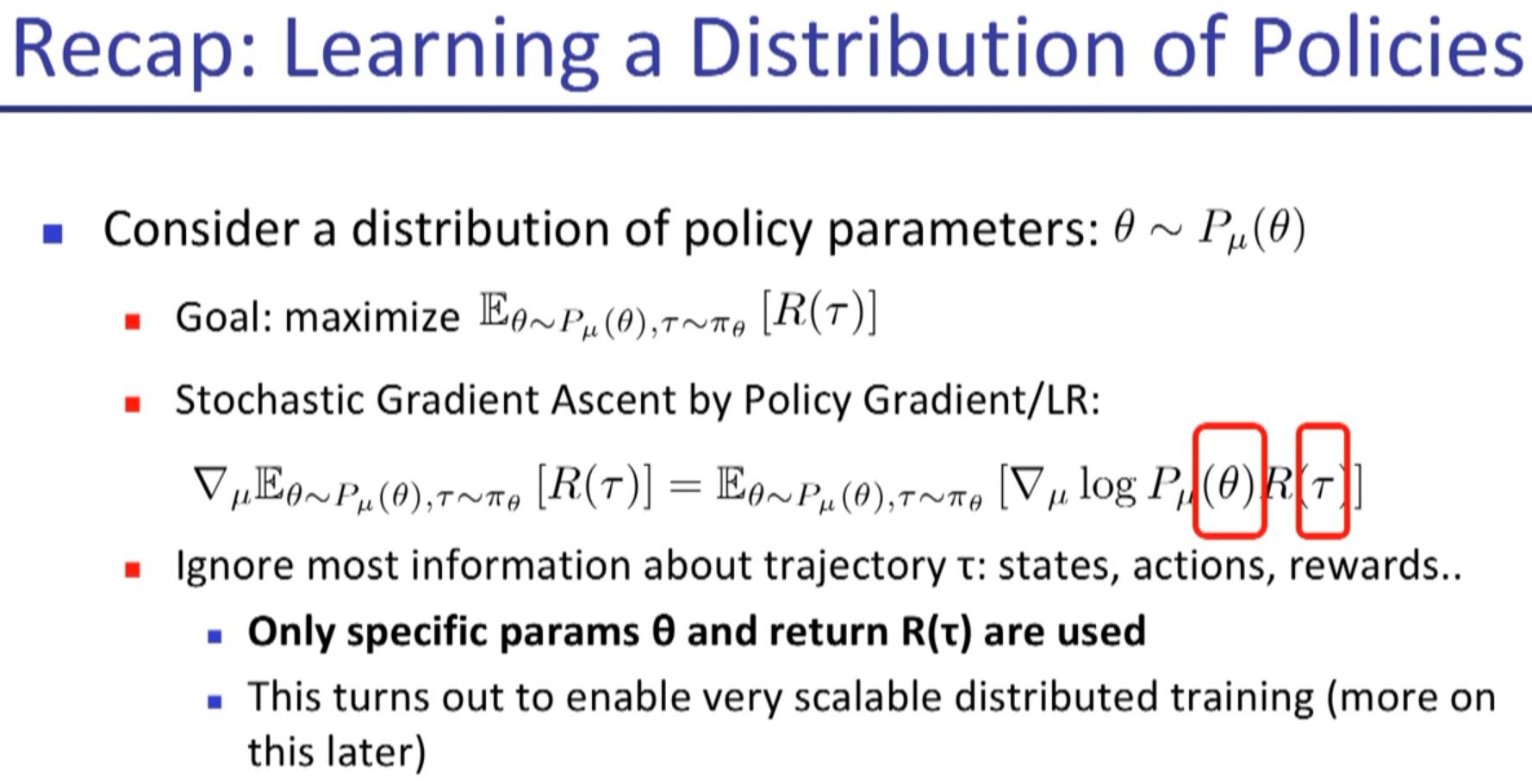

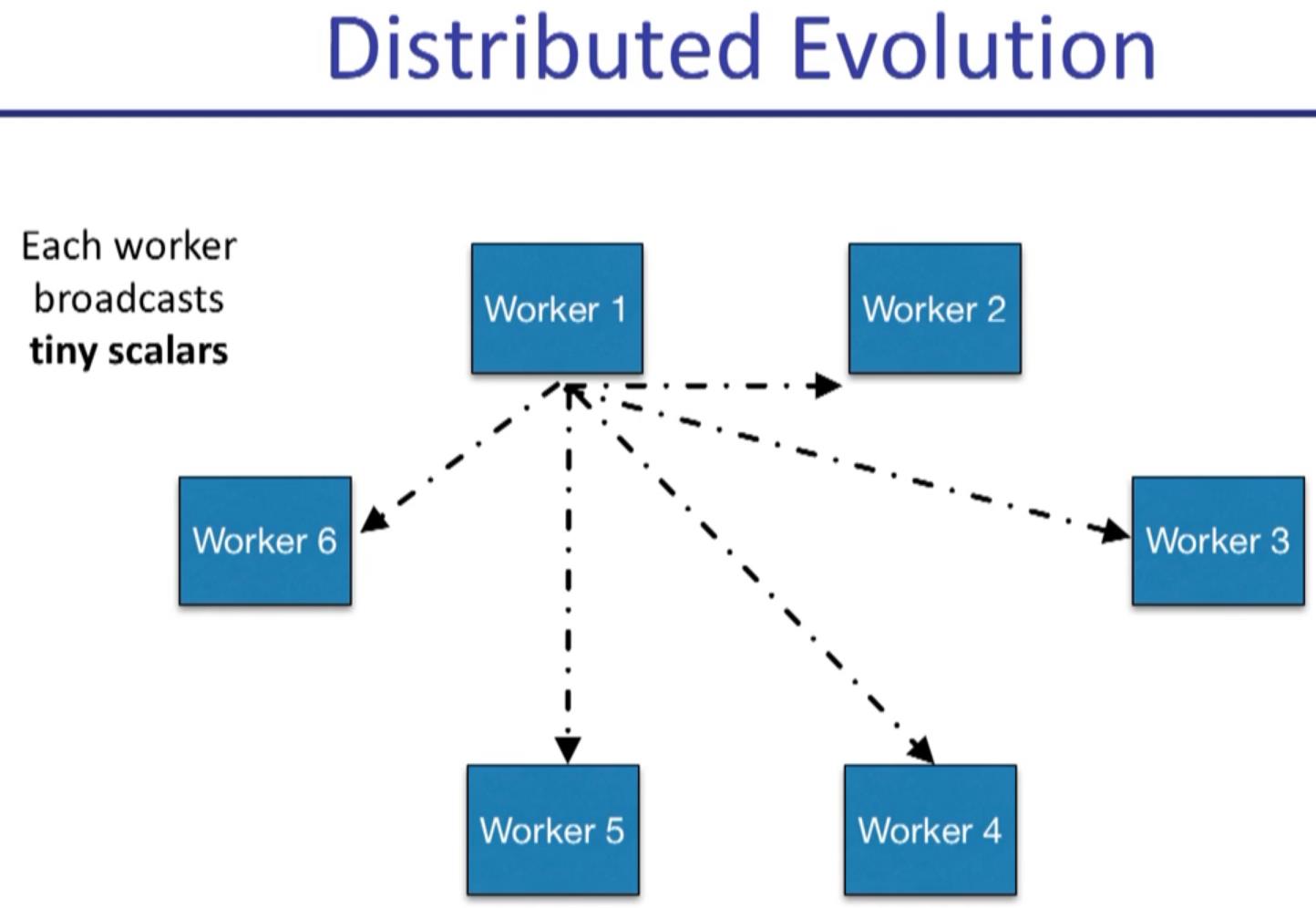

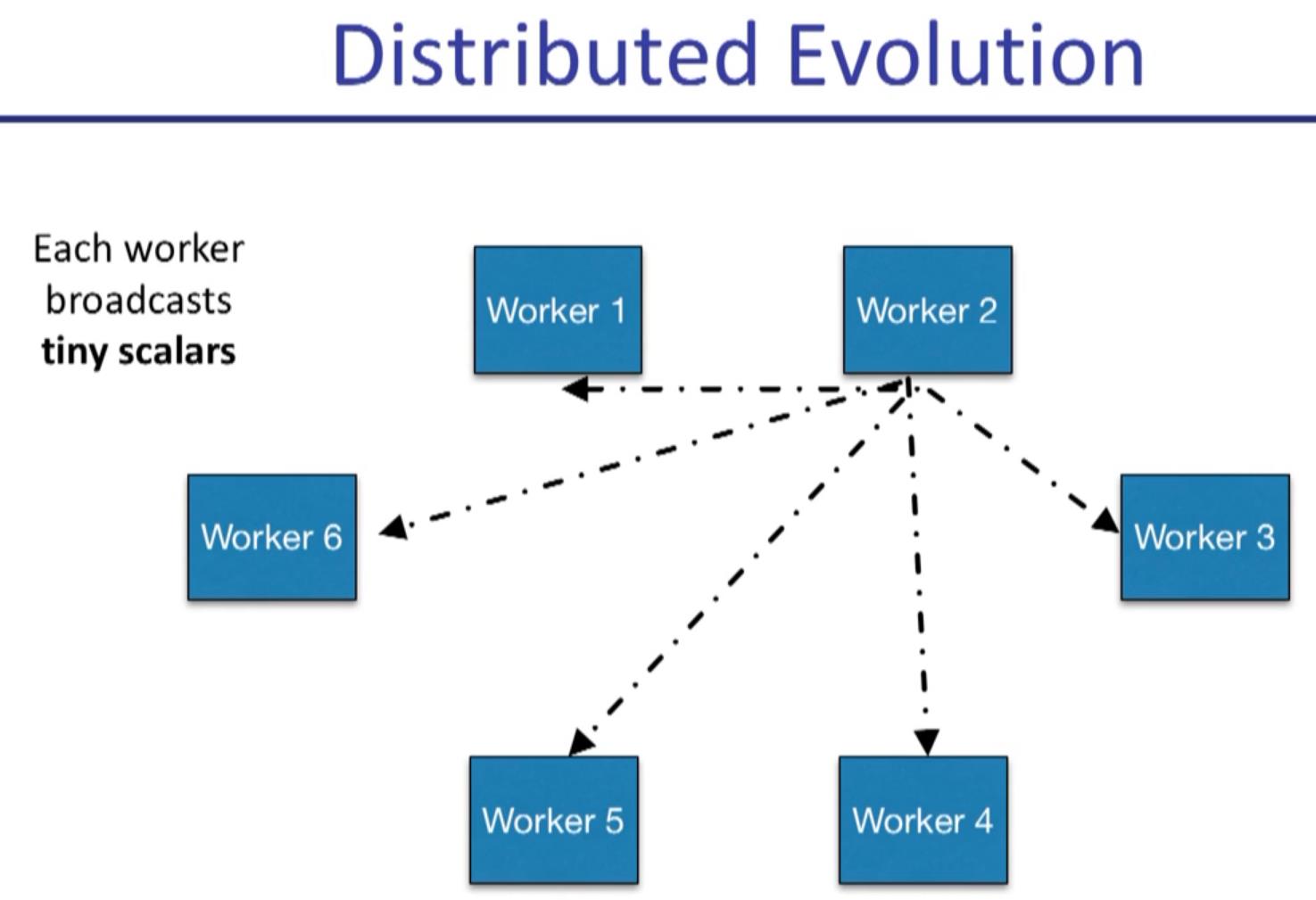

How do evolutionary methods work well in high-dimensional settings?

If you normalize the data well, evolutionary methods can work well in MuJoCo, with random search.
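The note does not say which data is normalized. A common choice is to standardize observations with running statistics before they reach the policy, and the sketch below assumes that. The class name `RunningNormalizer` and its methods are hypothetical; the update uses Welford's online algorithm for mean and variance.

```python
import math


class RunningNormalizer:
    """Tracks a per-dimension running mean and variance (Welford's algorithm)."""

    def __init__(self, dim, eps=1e-8):
        self.n = 0
        self.mean = [0.0] * dim
        self.m2 = [0.0] * dim  # running sum of squared deviations
        self.eps = eps

    def update(self, obs):
        # Fold one observation into the running statistics.
        self.n += 1
        for i, x in enumerate(obs):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (x - self.mean[i])

    def normalize(self, obs):
        # With fewer than two samples the variance is undefined; pass through.
        if self.n < 2:
            return list(obs)
        return [(x - m) / (math.sqrt(s / self.n) + self.eps)
                for x, m, s in zip(obs, self.mean, self.m2)]


norm = RunningNormalizer(dim=2)
for obs in ([1.0, 100.0], [3.0, 300.0]):
    norm.update(obs)
print(norm.normalize([3.0, 300.0]))
```

After normalization, both dimensions are on a comparable scale even though the raw values differ by two orders of magnitude, so a single perturbation scale in parameter space affects them similarly.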

They can always get stuck in local minima.

The humanoid has 200k parameters to tune, and it is learned by an evolutionary method.

The four videos actually show four different local minima; once the method gets stuck in one, it can never get out.

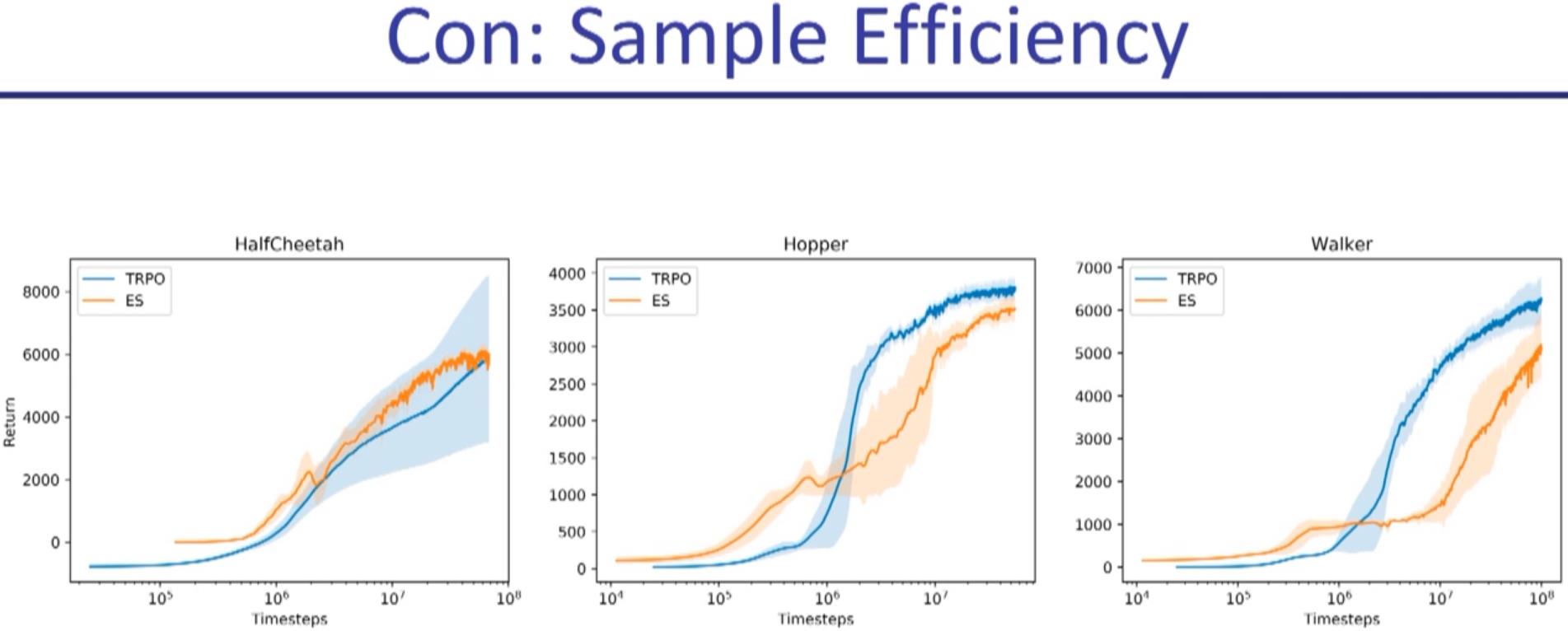

Evolutionary methods are roughly 10 times worse than action-space policy gradient methods.

Evolutionary methods are considered hard to tune, because previously people didn't get them to work with deep nets.
