爬虫大作业

Posted 098-谢家豪

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了爬虫大作业相关的知识,希望对你有一定的参考价值。

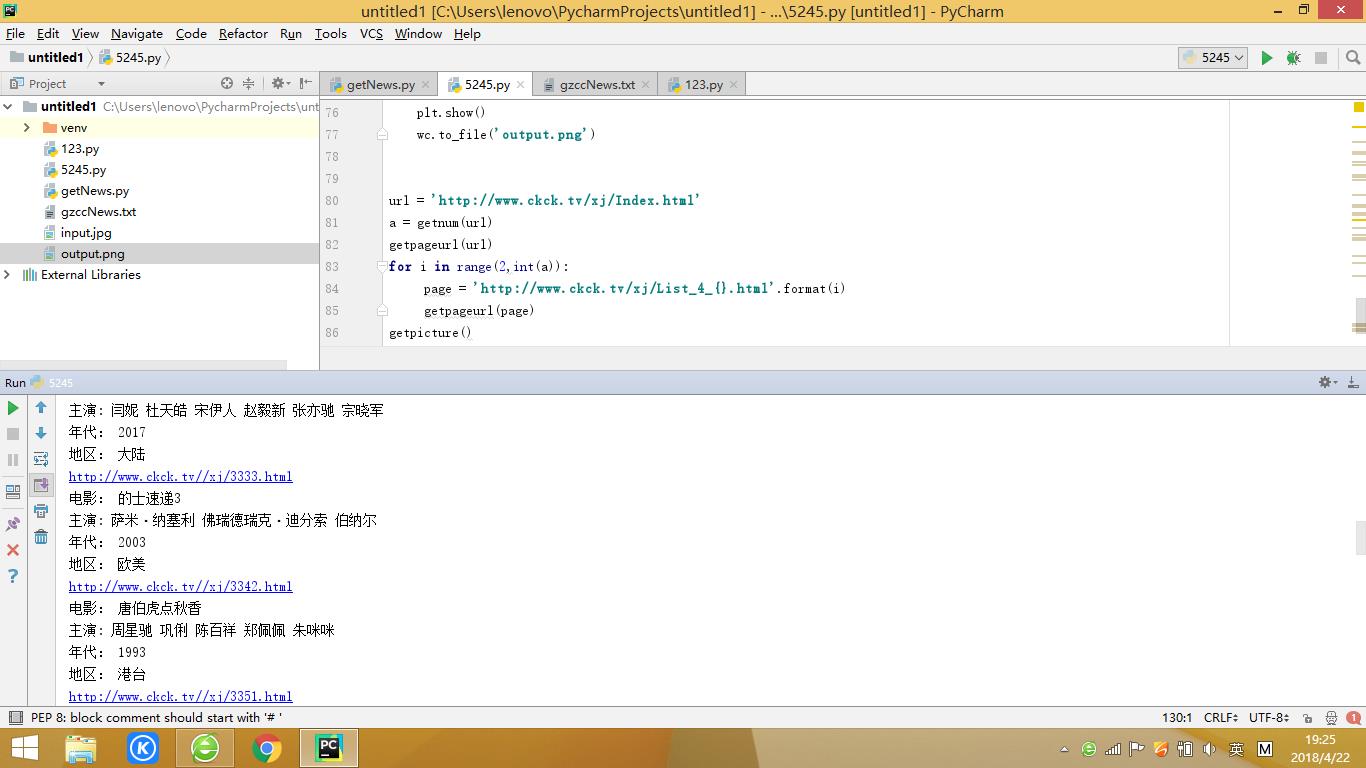

import requests import re from bs4 import BeautifulSoup import jieba.analyse from PIL import Image, ImageSequence import numpy as np import matplotlib.pyplot as plt from wordcloud import WordCloud, ImageColorGenerator # 获取总页数 def getnum(url): res = requests.get(url) res.encoding = \'gb2312\' soup = BeautifulSoup(res.text, \'html.parser\') Info = soup.select(".page-next")[0].extract().text TotalNum = re.search("共(\\d+)页.*",Info).group(1) return TotalNum #获取单个页面所有链接 def getpageurl(url): res = requests.get(url) res.encoding = \'gb2312\' soup = BeautifulSoup(res.text, \'html.parser\') a = soup.select(".list-page ul") for i in soup.select(".list-page ul li"): if len(i.select("a"))>0: info = i.select("a")[0].attrs[\'href\'] pageurl = \'http://www.ckck.tv/\' + info print(pageurl) getinfromation(pageurl) # 获取页面的信息 def getinfromation(url): res = requests.get(url) res.encoding = \'gb2312\' soup = BeautifulSoup(res.text, \'html.parser\') a = soup.select(".content .movie ul h1")[0].text print("电影:",a) b = soup.select(".content .movie ul li")[1].text name = re.search("【主 演】:(.*)",b).group(1) print("主演:",name) c = soup.select(".content .movie ul li")[4].text date = re.search("【年 代】:(.*) 【地 区】:", c).group(1) print("年代:", date) diqu = re.search("【地 区】:(.*)", c).group(1) print("地区:",diqu) # 将标签内容写入文件 f = open(\'gzccNews.txt\', \'a\', encoding=\'utf-8\') f.write(a ) f.write(name ) f.write(date ) f.write(diqu) f.write("\\n") f.close() # 生成词云 def getpicture(): lyric = \'\' f = open(\'gzccNews.txt\', \'r\', encoding=\'utf-8\') for i in f: lyric += f.read() result = jieba.analyse.textrank(lyric, topK=50, withWeight=True) keywords = dict() for i in result: keywords[i[0]] = i[1] print(keywords) image = Image.open(\'input.jpg\') graph = np.array(image) wc = WordCloud(font_path=\'./fonts/simhei.ttf\', background_color=\'White\', max_words=50, mask=graph) wc.generate_from_frequencies(keywords) image_color = ImageColorGenerator(graph) plt.imshow(wc) plt.imshow(wc.recolor(color_func=image_color)) plt.axis("off") plt.show() wc.to_file(\'output.png\') url = \'http://www.ckck.tv/xj/Index.html\' a = getnum(url) getpageurl(url) for i in range(2,int(a)): page = \'http://www.ckck.tv/xj/List_4_{}.html\'.format(i) getpageurl(page) getpicture()

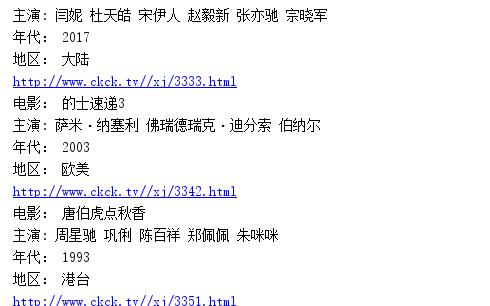

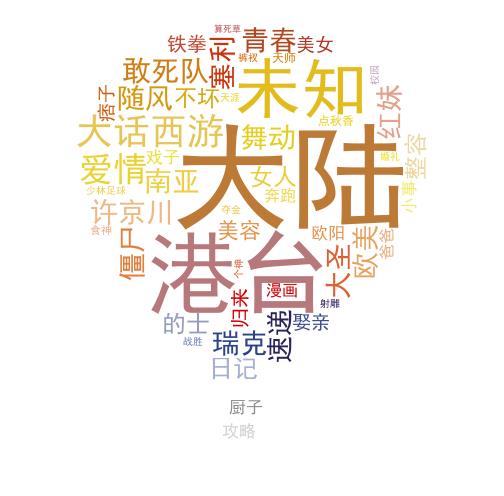

首先定义获取总页面、获取页面所有链接、获取页面信息、生成词云等的函数,过程中就是获取所有页面所有链接出现点问题,归结于找标签问题。这次爬取的是一个电影网站,将网站里面的电影名、主演、年代、地区,然后进行词云生成

以上是关于爬虫大作业的主要内容,如果未能解决你的问题,请参考以下文章

HTML5期末大作业:餐饮美食网站设计——咖啡(10页) HTML+CSS+JavaScript 学生DW网页设计作业成品 web课程设计网页规划与设计 咖啡网页设计 美食餐饮网页设计...(代码片段

Python大作业——爬虫+可视化+数据分析+数据库(可视化篇)