Monitoring Hive Log Files with Flume

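Part 1: Monitoring the Hive log with Flume

1.1 Requirements:

1. Monitor a log file in real time and collect its data into HDFS. This example uses an exec source to follow the file, a memory channel to buffer the events, and an HDFS sink to write them out.

2. The file being monitored is the Hive log, and the collected data is stored in a directory on HDFS.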

The Hive log directory is:

hive.log.dir = /home/hadoop/yangyang/hive/logs

1.2 Create the collection directory on HDFS:

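bin/hdfs dfs -mkdir /flume

1.3 Copy the JARs Flume needs

The HDFS sink needs the Hadoop client classes on Flume's classpath, so copy the following JARs from the Hadoop installation into Flume's lib directory: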

cd /home/hadoop/yangyang/hadoop/

cp -p share/hadoop/hdfs/hadoop-hdfs-2.5.0-cdh5.3.6.jar /home/hadoop/yangyang/flume/lib/

cp -p share/hadoop/common/hadoop-common-2.5.0-cdh5.3.6.jar /home/hadoop/yangyang/flume/lib/

cp -p share/hadoop/tools/lib/commons-configuration-1.6.jar /home/hadoop/yangyang/flume/lib/

cp -p share/hadoop/tools/lib/hadoop-auth-2.5.0-cdh5.3.6.jar /home/hadoop/yangyang/flume/lib/

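1.4 Create a new configuration file, hive-conf.properties:

Copy test-conf.properties to hive-conf.properties and edit the copy:

cp -p test-conf.properties hive-conf.properties

vim hive-conf.properties

# hive-conf.properties: A single-node Flume configuration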

# Name the components on this agent

a2.sources = r2

a2.sinks = k2

a2.channels = c2

# Describe/configure the source

a2.sources.r2.type = exec

a2.sources.r2.command = tail -f /home/hadoop/yangyang/hive/logs/hive.log

# (bind is a netcat-source setting; the exec source does not use it, so it is omitted here)

a2.sources.r2.shell = /bin/bash -c

# Describe the sink

a2.sinks.k2.type = hdfs

a2.sinks.k2.hdfs.path = hdfs://namenode01.hadoop.com:8020/flume/%Y%m/%d

a2.sinks.k2.hdfs.fileType = DataStream

a2.sinks.k2.hdfs.writeFormat = Text

a2.sinks.k2.hdfs.batchSize = 10

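# Round timestamps down to the hour for the path escapes (the path above has no %H, so directories are still split by day)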

a2.sinks.k2.hdfs.round = true

a2.sinks.k2.hdfs.roundValue = 1

a2.sinks.k2.hdfs.roundUnit = hour

# File roll conditions: roll every 60 seconds or at about 128 MB, never by event count

a2.sinks.k2.hdfs.rollInterval = 60

a2.sinks.k2.hdfs.rollSize = 128000000

a2.sinks.k2.hdfs.rollCount = 0

a2.sinks.k2.hdfs.useLocalTimeStamp = true

a2.sinks.k2.hdfs.minBlockReplicas = 1

# Use a channel which buffers events in memory

a2.channels.c2.type = memory

a2.channels.c2.capacity = 1000

a2.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r2.channels = c2

a2.sinks.k2.channel = c2

1.5 Run the agent
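Before starting the agent, it can be worth confirming that the sizes in the configuration are consistent: the HDFS sink's batchSize should not exceed the memory channel's transactionCapacity, which in turn should not exceed capacity. The following is only a rough sketch and not part of Flume. The helper names flume_prop and check_flume_sizes are made up for illustration, and it assumes plain key = value lines and the a2/k2/c2 component names used above.

```shell
# Print the value assigned to an exact property key (last assignment wins)
flume_prop() {
    # $1 = properties file, $2 = property key
    awk -F'=' -v key="$2" '
        /^[ \t]*#/ { next }                      # skip comment lines
        {
            k = $1; gsub(/[ \t]/, "", k)         # key without surrounding blanks
            if (k == key) { v = $2; gsub(/[ \t\r]/, "", v); val = v }
        }
        END { print val }
    ' "$1"
}

# Return 0 when batchSize <= transactionCapacity <= capacity, 1 otherwise
check_flume_sizes() {
    # $1 = properties file
    batch=$(flume_prop "$1" a2.sinks.k2.hdfs.batchSize)
    txn=$(flume_prop "$1" a2.channels.c2.transactionCapacity)
    cap=$(flume_prop "$1" a2.channels.c2.capacity)
    if [ "$batch" -le "$txn" ] && [ "$txn" -le "$cap" ]; then
        echo "ok: batchSize=$batch <= transactionCapacity=$txn <= capacity=$cap"
    else
        echo "mismatch: batchSize=$batch transactionCapacity=$txn capacity=$cap"
        return 1
    fi
}

# Assumed location: run from the Flume home directory
conf_file="conf/hive-conf.properties"
if [ -f "$conf_file" ]; then
    check_flume_sizes "$conf_file"
fi
```

With the values in this article (10, 100, 1000) the check passes. A missing or misspelled key shows up as an empty value in the mismatch message.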

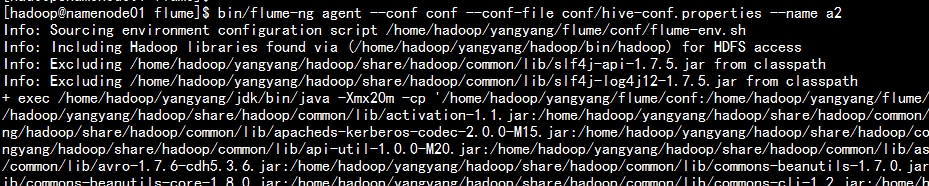

bin/flume-ng agent --conf conf --conf-file conf/hive-conf.properties --name a2

1.6 Test by appending to the Hive log file:

cd /home/hadoop/yangyang/hive/logs

echo "111" >> hive.log

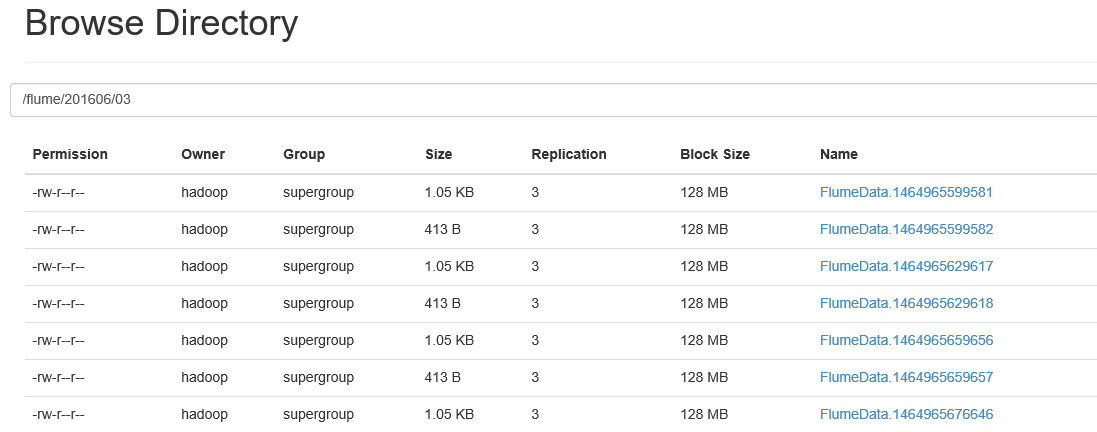
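To produce several test events without retyping the echo command, a small loop along these lines could be used. It is a sketch only: the message text, the count of 3, and the one-second pause are arbitrary choices, and it assumes the current directory is the Hive log directory shown above.

```shell
# Append a few numbered test lines to the log, pausing between writes
log_file="hive.log"    # relative to /home/hadoop/yangyang/hive/logs
count=3                # number of test lines (arbitrary)
i=1
while [ "$i" -le "$count" ]; do
    echo "flume test line $i" >> "$log_file"
    sleep 1
    i=$((i + 1))
done
```

Each appended line is picked up by the tail command of the exec source and becomes one Flume event.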

Repeat the echo command every so often so that new events keep arriving.

1.7 Check the result on HDFS:
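One way to check, sketched under a few assumptions: the sink path is /flume/%Y%m/%d with useLocalTimeStamp = true, so today's events should land in a directory derived from the local date. The variable name target_dir is illustrative, and the listing is expected to run from the Hadoop home directory, as in step 1.2.

```shell
# Build today's target directory the same way the sink expands /flume/%Y%m/%d
target_dir="/flume/$(date +%Y%m/%d)"
echo "Expecting Flume output under: ${target_dir}"

# List the collected files if the hdfs launcher is present in ./bin
if [ -x bin/hdfs ]; then
    bin/hdfs dfs -ls "${target_dir}"
fi
```

Files that are still being written carry a .tmp suffix. With rollInterval = 60 they should be closed and renamed after about a minute, at which point bin/hdfs dfs -cat on a file shows the collected lines.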
