Crawler practice: scraping a novel

Posted by 不会起名字



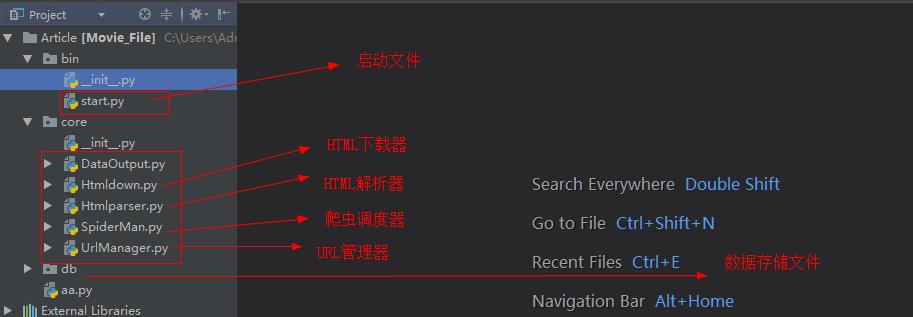

# Program entry point: start.py

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
import os
import sys

# Project root: the parent of the directory that contains this file
BASEPATH = os.path.dirname(os.path.dirname(os.path.realpath(__file__)))
print(BASEPATH)
sys.path.append(BASEPATH)  # make the `core` package importable

from core import SpiderMan

if __name__ == '__main__':
    s = SpiderMan.SpiderMan()
    # `async` is a reserved keyword since Python 3.7, so the method is called run()
    s.run()
```

# Crawler scheduler (core/SpiderMan.py)

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
# Patch the standard library before anything else imports it
from gevent import monkey; monkey.patch_all()

from concurrent.futures import ThreadPoolExecutor

from core.UrlManager import UrlManager
from core.htmldown import Htmldown
from core.Htmlparser import Htmlparser
# from core.DataOutput import DataOutput


class SpiderMan:
    def __init__(self):
        self.manager = UrlManager()    # URL manager
        self.downloader = Htmldown()   # HTML downloader
        self.parser = Htmlparser()     # HTML parser
        # self.output = DataOutput()

    def index_work(self):
        '''Crawl the site's home page and collect every chapter URL.'''
        url = 'http://www.lingxiaozhishang.com'
        self.manager.oldurls.add(url)              # mark the home page as visited
        html = self.downloader.down_page(url)     # download the page
        if html is None:
            print("Failed to crawl the home page")
            return None
        new_urls = self.parser.parser_index(html, url)  # parse the second-level (chapter) links
        self.manager.add_urls(new_urls)                  # queue every chapter link
        print("Home page crawled and all chapter URLs collected")

    def run(self):
        '''Download and parse all chapters concurrently with a pool of 10 workers.'''
        self.index_work()
        pool = ThreadPoolExecutor(10)
        while True:
            url = self.manager.get_url()   # take a URL from the URL manager
            if url is None:
                break
            # Submit the download; the parser runs as a callback once it finishes
            pool.submit(self.downloader.down_page, url).add_done_callback(self.parser.parser_page)
        pool.shutdown(wait=True)  # always shut the pool down at the end
        print("Done -----------------------")
```
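The scheduler never calls the parser directly: `pool.submit(...)` returns a `Future`, and `add_done_callback` passes that finished `Future` (not the page text) to `parser_page`, which is why the parser begins with `.result()`. Below is a minimal, network-free sketch of the same pattern; `fake_download` and `on_done` are hypothetical stand-ins for `Htmldown.down_page` and `Htmlparser.parser_page`.

```python
from concurrent.futures import ThreadPoolExecutor
import threading

results = []
lock = threading.Lock()  # callbacks may run on different worker threads


def fake_download(url):
    # Hypothetical stand-in for Htmldown.down_page: returns fake "HTML" for the URL
    return "<h1>%s</h1>" % url


def on_done(future):
    # add_done_callback hands over the finished Future, so unwrap it with .result()
    html = future.result()
    with lock:
        results.append(html)


urls = ["/book/1.html", "/book/2.html", "/book/3.html"]
pool = ThreadPoolExecutor(max_workers=3)
for url in urls:
    pool.submit(fake_download, url).add_done_callback(on_done)
pool.shutdown(wait=True)  # joins the workers, so every callback has run by now
print(sorted(results))
```

One consequence of this design: if a callback raises (for example because `soup.find('h1')` returned `None`), `concurrent.futures` logs the exception and moves on, so a single bad chapter does not stop the crawl, but it is also easy to miss.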

# URL manager (core/UrlManager.py)

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
class UrlManager:
    def __init__(self):
        self.newurls = set()   # URLs waiting to be crawled
        self.oldurls = set()   # URLs already handed out for crawling

    def add_url(self, newurl):
        '''Add one chapter URL unless it has already been crawled.'''
        if newurl not in self.oldurls:
            self.newurls.add(newurl)

    def add_urls(self, newurls):
        '''Add several chapter URLs.

        :param newurls: list of URLs
        '''
        if not newurls:
            return
        for url in newurls:
            self.add_url(url)

    def get_url(self):
        '''Take one chapter URL out of the pending set.

        :return: the URL, or None when nothing is left
        '''
        try:
            url = self.newurls.pop()
        except KeyError:
            return None
        self.oldurls.add(url)
        return url

    def has_oldurls(self):
        '''Return the number of chapter URLs already crawled.'''
        return len(self.oldurls)
```
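`set.pop()` returns an arbitrary element, so chapters are fetched in no particular order. That is harmless here because each chapter goes to its own file, but if order mattered (say, appending every chapter to one file), an insertion-ordered queue would be needed. A sketch of a hypothetical `OrderedUrlManager` with the same `add_url`/`add_urls`/`get_url` contract, using a `deque` for pending URLs and one set for everything ever queued:

```python
from collections import deque


class OrderedUrlManager:
    '''Hypothetical variant of UrlManager that hands URLs out in discovery order.'''

    def __init__(self):
        self.pending = deque()   # URLs waiting to be crawled, oldest first
        self.seen = set()        # every URL ever queued, for de-duplication

    def add_url(self, url):
        if url not in self.seen:
            self.seen.add(url)
            self.pending.append(url)

    def add_urls(self, urls):
        for url in urls or ():
            self.add_url(url)

    def get_url(self):
        # Same contract as UrlManager.get_url: None when nothing is left
        return self.pending.popleft() if self.pending else None
```

It is not a drop-in replacement as written: `SpiderMan.index_work` writes to `manager.oldurls` directly, so that line would have to call `add_url` or touch `seen` instead.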

# HTML downloader (core/htmldown.py)

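```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
import requests


class Htmldown:
    def down_page(self, url):
        '''Download a page; return its HTML text, or None on a non-200 response.'''
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:55.0) Gecko/20100101 Firefox/55.0'}
        # A timeout keeps one stalled request from blocking a worker forever
        r = requests.get(url, headers=headers, timeout=10)
        r.encoding = 'utf8'
        if r.status_code == 200:
            return r.text
        return None
```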
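`down_page` makes a single request: a connection error propagates as an exception (it resurfaces when the parser callback calls `.result()`), and a non-200 response simply returns `None`. One way to make the crawl more tolerant is a small retry wrapper with exponential backoff. The helper below, `fetch_with_retry`, is hypothetical; it takes any fetch function so it can be exercised without the network.

```python
import time


def fetch_with_retry(fetch, url, retries=3, backoff=0.5):
    '''Hypothetical helper: call fetch(url) up to `retries` times.

    An attempt counts as failed if fetch raises or returns None (down_page's
    signal for a non-200 response). Between attempts it sleeps
    backoff * 2**attempt seconds. Returns the first non-None result, or None.
    '''
    for attempt in range(retries):
        try:
            html = fetch(url)
            if html is not None:
                return html
        except Exception as exc:  # e.g. requests.exceptions.RequestException
            print("attempt %d for %s failed: %s" % (attempt + 1, url, exc))
        if attempt < retries - 1:
            time.sleep(backoff * 2 ** attempt)
    return None
```

In the scheduler it would slot in as `pool.submit(fetch_with_retry, self.downloader.down_page, url)`, leaving the callback unchanged.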

# HTML parser (core/Htmlparser.py)

The parsed chapters are written straight to files; they really should be persisted to MongoDB.

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup


class Htmlparser:
    def parser_index(self, html_conf, url):
        '''Collect the chapter links from the home page's chapter list.'''
        soup = BeautifulSoup(html_conf, 'html.parser')
        list_a = soup.find(class_="chapterlist").find_all('a')
        new_urls = []
        for a in list_a:
            # url = http://www.lingxiaozhishang.com, href = /book/439.html
            new_url = "%s%s" % (url, a.attrs["href"])
            new_urls.append(new_url)
        return new_urls

    def parser_page(self, html_conf):
        '''Parse a chapter page and save its text to a file.

        Used as a Future callback, so html_conf is a Future whose result
        is the page HTML (or None if the download failed).
        '''
        html_conf = html_conf.result()
        if html_conf is None:
            return
        soup = BeautifulSoup(html_conf, 'html.parser')
        title = soup.find('h1').get_text()
        text = soup.find(id="BookText").get_text()
        filepath = r"C:\Users\Administrator\Desktop\Article\db\%s.txt" % title
        # Write UTF-8 explicitly; the platform default (e.g. GBK on Chinese Windows) can fail on some characters
        with open(filepath, "w", encoding="utf-8") as f:
            f.write(text)
        print("%s downloaded" % title)
```
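`parser_page` uses the chapter title directly as the file name. A title containing a character Windows forbids in file names (`\ / : * ? " < > |`) makes `open()` fail, and because the parser runs as a `Future` callback, that failure is only logged. A sketch of a hypothetical `safe_filename` helper that could wrap `title` before it is formatted into the path:

```python
import re

# Characters Windows does not allow in file names, plus ASCII control characters
_INVALID = re.compile(r'[\\/:*?"<>|\x00-\x1f]')


def safe_filename(title, replacement="_"):
    '''Hypothetical helper: turn a chapter title into a usable file name.'''
    name = _INVALID.sub(replacement, title)
    # Windows also rejects names that end in a dot or a space
    name = name.strip().rstrip(". ")
    return name or "untitled"
```

Usage would be `filepath = r"C:\Users\Administrator\Desktop\Article\db\%s.txt" % safe_filename(title)`.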
