Scrapy: downloading images and storing scraped information


Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers how to download images and store scraped information with Scrapy.

Example 1: a basic Scrapy project (collecting the scraped values with an Item)

1. Create the Scrapy project

scrapy startproject booklist
New Scrapy project 'booklist', using template directory '/usr/local/lib/python3.6/site-packages/scrapy/templates/project', created in:
    /Users/yuanjicai/booklist

You can start your first spider with:
    cd booklist
    scrapy genspider example example.com

2. Define the fields to scrape (the Item collects the scraped data)

cat booklist/items.py

# -*- coding: utf-8 -*-
# Define here the models for your scraped items
#
# See documentation in:
# http://doc.scrapy.org/en/latest/topics/items.html
import scrapy

class BooklistItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    name = scrapy.Field()
    author = scrapy.Field()
    publisher = scrapy.Field()
    editor_date = scrapy.Field()
    description = scrapy.Field()

3. Write the spider

cat booklist/spiders/bookspider.py

import scrapy

from booklist.items import BooklistItem

class BookSpider(scrapy.Spider):

name = 'booklist'

start_urls = ['http://www.chinavalue.net/BookInfo/BookList.aspx?page=1']

def parse(self,response):

yield scrapy.Request(response.urljoin("?page=1"),callback=self.parse_page)

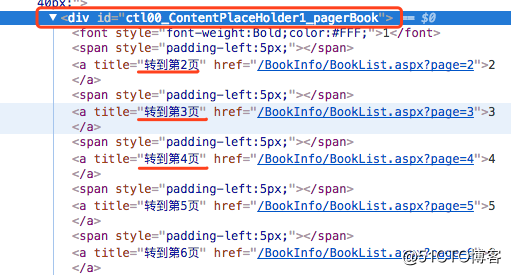

for item in response.xpath('//div[@id="ctl00_ContentPlaceHolder1_pagerBook"]/a/@href').extract():

fullurl=response.urljoin(item)

yield scrapy.Request(fullurl,callback=self.parse_page)

def parse_page(self,response):

for item in response.xpath('//div[@id="divBookList"]/div/div[2]/a[1]'):

detail_url=response.urljoin(item.xpath('@href').extract()[0])

yield scrapy.Request(detail_url,callback=self.parse_bookdetail)

def parse_bookdetail(self,response):

bookinfo=BooklistItem()

basic_info=response.xpath('//*[@id="Container"]/div[6]/div[1]/div[2]/div[1]/div[2]')

bookinfo['name']=basic_info.xpath('div[1]/text()').extract()[0].strip()

bookinfo['author']=basic_info.xpath('div[2]/text()').extract()[0].strip()

bookinfo['publisher']=basic_info.xpath('div[3]/text()').extract()[0].strip()

bookinfo['editor_date']=basic_info.xpath('div[4]/text()').extract()[0].strip()

bookinfo['description']=response.xpath('//*[@id="ctl00_ContentPlaceHolder1_pnlIntroBook"]/div[2]/text()').extract()[0].strip()

yield bookinfoparse函数中的“for循环”处理“下一页”的link(下图所示)

parse_page extracts the link behind each book title on every list page.

parse_bookdetail extracts the detailed attributes and description of each book.
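All three callbacks rely on response.urljoin() to turn relative hrefs into absolute URLs. For a plain response this behaves essentially like urllib.parse.urljoin(response.url, href) (HTML responses also honor a <base> tag, if present). The stdlib sketch below shows how such links resolve against the start URL; the detail-page href "BookDetail.aspx?id=123" is a hypothetical example, not taken from the site.

```python
from urllib.parse import urljoin

# The start URL used by the spider above
base = "http://www.chinavalue.net/BookInfo/BookList.aspx?page=1"

# A query-only href keeps the base path and replaces the query string
next_page = urljoin(base, "?page=2")
# A relative path is resolved against the base URL's directory (hypothetical href)
detail = urljoin(base, "BookDetail.aspx?id=123")
# An absolute href is returned unchanged
absolute = urljoin(base, "https://book.douban.com/top250?start=25")

print(next_page)
print(detail)
print(absolute)
```

This is also why wrapping already-absolute links in response.urljoin() is harmless: they pass through untouched.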

4. Run the project

scrapy crawl booklist -o book-info.csv

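In the example above, the scraped values are assigned to the Item's fields and yielded from the spider; the -o (output) option then writes all items to book-info.csv, with the export format inferred from the file extension.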
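Conceptually, the CSV feed export turns each yielded item into one row under a header of field names. The sketch below illustrates that idea with the standard csv module; it is not Scrapy's CsvItemExporter (whose column order and options may differ), and the row values are made up for illustration.

```python
import csv
import io

def items_to_csv(items, fieldnames):
    """Serialize dict-like items to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    for item in items:
        writer.writerow(dict(item))
    return buf.getvalue()

# Field names from BooklistItem; the values below are hypothetical
fields = ["name", "author", "publisher", "editor_date", "description"]
rows = [{"name": "Book A", "author": "Author A", "publisher": "Press A",
         "editor_date": "2017-01", "description": "A short, comma-containing text"}]

csv_text = items_to_csv(rows, fields)
print(csv_text)
```

Note how the csv module quotes the description because it contains a comma; a hand-rolled ",".join() would break such rows.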

Example 2: downloading images with Scrapy and storing the scraped data in a chosen backend (file, MySQL, MongoDB)

Create the project (with image downloading):

bogon:scrapy yuanjicai$ scrapy startproject bookinfo
bogon:douban_booklist yuanjicai$ cd bookinfo/

cat bookinfo/items.py  # define the fields to scrape in the Item, including image_urls and image_paths

# -*- coding: utf-8 -*-
# Define here the models for your scraped items
#
# See documentation in:
# http://doc.scrapy.org/en/latest/topics/items.html
import scrapy

class BookinfoItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    name = scrapy.Field()
    author = scrapy.Field()
    publisher = scrapy.Field()
    price = scrapy.Field()
    rating = scrapy.Field()
    editor_date = scrapy.Field()
    images = scrapy.Field()
    image_urls = scrapy.Field()
    image_paths = scrapy.Field()

vim bookinfo/settings.py  # settings specify the request headers, User-Agent, pipelines, where images/files are saved, image expiry, MySQL host/user/password/port, and MongoDB connection parameters

grep -E -v '^(#|$)' bookinfo/settings.py

BOT_NAME = 'bookinfo'

SPIDER_MODULES = ['bookinfo.spiders']

NEWSPIDER_MODULE = 'bookinfo.spiders'

ROBOTSTXT_OBEY = True

#from faker import Factory

#f = Factory.create()

#USER_AGENT = f.user_agent()

DEFAULT_REQUEST_HEADERS = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',

'Accept-Encoding': 'gzip, deflate',  # only advertise 'br' if the brotli package is installed, otherwise Scrapy cannot decode it

'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',

'Connection': 'keep-alive',

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.75 Safari/537.36',

}

ITEM_PIPELINES = {

#'bookinfo.pipelines.BookinfoStoreMysqlPipeline': 200,

#'bookinfo.pipelines.BookinfoStoreFilePipeline': 200,

'bookinfo.pipelines.BookinfoStoreMongoPipeline': 200,

'bookinfo.pipelines.BookImgsDLPipeline': 300,

}

IMAGES_STORE = '/Users/yuanjicai/Downloads/bookinfo'

IMAGES_EXPIRES = 90  # days; images downloaded within this period are not fetched again

#IMAGES_MIN_HEIGHT = 100

#IMAGES_MIN_WIDTH = 100

#IMAGES_THUMBS = {

# 'small': (50, 50),

# 'big': (270, 270),

#}

MYSQL_HOST = '10.18.101.104'

MYSQL_DBNAME = 'book'

MYSQL_USER = 'root'

MYSQL_PASSWD = '123'

MYSQL_PORT = 3306

MONGODB_HOST = '10.18.101.104'

MONGODB_PORT = 27017

MONGODB_DB = 'book'

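MONGODB_COLLECTION = 'bookinfo'

How the images pipeline processes an item:

(1) The spider scrapes an item and puts the image URLs into its image_urls field.

(2) The item returned by the spider is passed on to the item pipelines.

(3) When the item reaches the ImagesPipeline, the URLs in image_urls are scheduled and downloaded through Scrapy's standard scheduler and downloader, with a higher priority than other pages. The item stays "locked" at that pipeline stage until its images have finished downloading (or failed).

(4) When the downloads are done, the results (storage path, original URL, checksum, etc.) are written into the images field.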
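The numbers in ITEM_PIPELINES set the order: items go from lower to higher values. With the settings above, BookinfoStoreMongoPipeline (200) therefore sees each item before BookImgsDLPipeline (300) fills in image_paths and images. The simulation below shows that effect; every class in it is a hypothetical stand-in, not Scrapy's own.

```python
class DropItem(Exception):
    """Stand-in for scrapy.exceptions.DropItem."""


class StoreStage:
    """Stand-in for a storage pipeline: records a snapshot of each item it sees."""
    def __init__(self):
        self.stored = []

    def process_item(self, item, spider):
        self.stored.append(dict(item))
        return item


class ImagesStage:
    """Stand-in for the images pipeline: fills image_paths or drops the item."""
    def process_item(self, item, spider):
        if not item.get("image_urls"):
            raise DropItem("Item contains no images")
        item["image_paths"] = "full/example.jpg"  # hypothetical path
        return item


def run_pipelines(item, pipelines):
    """Send item through (priority, pipeline) pairs, lowest priority first.

    Returns the final item, or None if a stage raised DropItem.
    """
    for _priority, stage in sorted(pipelines, key=lambda pair: pair[0]):
        try:
            item = stage.process_item(item, spider=None)
        except DropItem:
            return None
    return item


store, images = StoreStage(), ImagesStage()
result = run_pipelines({"name": "Book A", "image_urls": "https://example.com/a.jpg"},
                       [(300, images), (200, store)])
print(result)        # contains image_paths
print(store.stored)  # the store stage ran first, so no image_paths yet
```

If the stored records should include image paths, give the storage pipeline a larger number than the images pipeline.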

cat bookinfo/spiders/bookinfo_spider.py  # the spider that does the scraping

# -*- coding: utf-8 -*-
import scrapy

from bookinfo.items import BookinfoItem

class bookinfoSpider(scrapy.Spider):
    name = "bookinfo"
    start_urls = ["https://book.douban.com/top250"]

    def parse(self, response):
        # Parse the first page, then every page linked from the paginator
        yield scrapy.Request(response.url, callback=self.parse_page)
        for page_url in response.xpath('//div[@class="paginator"]/a/@href').extract():
            yield scrapy.Request(response.urljoin(page_url), callback=self.parse_page)

    def parse_page(self, response):
        for item in response.xpath('//div[@class="article"]/div[1]/table/tr[1]'):
            # Instantiate the Item inside the loop so every book gets its own, independent object
            bookinfo = BookinfoItem()
            bookinfo['name'] = item.xpath("td[2]/div[1]/a/text()").extract()[0].strip()
            # The publication line looks like "author [/ translator] / publisher / date / price":
            # split it once and strip each part
            pub_info = [part.strip() for part in item.xpath("td[2]/p/text()").extract()[0].strip().split("/")]
            bookinfo['price'] = pub_info[-1]
            bookinfo['editor_date'] = pub_info[-2]
            bookinfo['publisher'] = pub_info[-3]
            # Use the first part: for translated books, index -4 would be the translator
            bookinfo['author'] = pub_info[0]
            bookinfo['rating'] = item.xpath("td[2]/div[2]/span[2]/text()").extract()[0]
            # A single URL string; the custom images pipeline below expects a string, not a list
            bookinfo['image_urls'] = item.xpath("td[1]/a/img/@src").extract_first()
            yield bookinfo
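The publication line is a slash-separated string, and translated books carry an extra translator segment. Counting from the end is therefore reliable for publisher, date and price, while the author is the first segment. A stdlib sketch of that parsing, assuming the format "author [/ translator ...] / publisher / date / price"; the sample strings are hypothetical, not copied from douban.

```python
def split_pub_info(text):
    """Split 'author [/ translator ...] / publisher / date / price' into fields.

    Assumes at least four slash-separated parts: publisher, date and price
    are taken from the end, the author from the start.
    """
    parts = [part.strip() for part in text.strip().split("/")]
    if len(parts) < 4:
        raise ValueError("unexpected publication line: %r" % text)
    return {
        "author": parts[0],
        "publisher": parts[-3],
        "editor_date": parts[-2],
        "price": parts[-1],
    }


# Hypothetical sample lines: without and with a translator
print(split_pub_info(" Author A / Some Press / 1996-12 / 59.70 "))
print(split_pub_info("Author B / Translator C / Other Press / 2006-5 / 29.00"))
```

A name or price that itself contains "/" would still break this scheme, so it is worth spot-checking the scraped output.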

cat bookinfo/pipelines.py  # the pipeline classes that store the scraped data and download the images

# -*- coding: utf-8 -*-
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html
import json

import pymongo
import pymysql
import scrapy
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline

# Write each item to MySQL with pymysql.
# Connection parameters come from settings.py via from_crawler
# (the old "from scrapy.conf import settings" no longer exists in current Scrapy).
class BookinfoStoreMysqlPipeline(object):
    def __init__(self, host, port, db, user, passwd):
        self.host, self.port, self.db = host, port, db
        self.user, self.passwd = user, passwd
        self.conn = None

    @classmethod
    def from_crawler(cls, crawler):
        s = crawler.settings
        return cls(s.get('MYSQL_HOST'), s.getint('MYSQL_PORT', 3306), s.get('MYSQL_DBNAME'),
                   s.get('MYSQL_USER'), s.get('MYSQL_PASSWD'))

    def open_spider(self, spider):
        # One connection for the whole crawl instead of one per item
        self.conn = pymysql.connect(host=self.host, port=self.port, database=self.db,
                                    user=self.user, password=self.passwd, charset='utf8')

    def process_item(self, item, spider):
        insert_sql = 'insert into bookinfo(name,author,publisher,url) VALUES (%s,%s,%s,%s)'
        try:
            with self.conn.cursor() as cursor:
                cursor.execute(insert_sql, (item["name"], item["author"], item["publisher"], item["image_urls"]))
            self.conn.commit()
        except pymysql.MySQLError as e:
            self.conn.rollback()
            spider.logger.error("MySQL insert failed: %s", e)
        return item

    def close_spider(self, spider):
        if self.conn:
            self.conn.close()

# Write each item to a file as one JSON object per line
class BookinfoStoreFilePipeline(object):
    def open_spider(self, spider):
        self.file = open('bookinfo.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        line = json.dumps(dict(item), ensure_ascii=False) + "\n"
        self.file.write(line)
        return item

    def close_spider(self, spider):
        self.file.close()

# Write each item to MongoDB with pymongo
class BookinfoStoreMongoPipeline(object):
    def __init__(self, host, port, db_name, collection_name):
        self.host, self.port = host, port
        self.db_name, self.collection_name = db_name, collection_name

    @classmethod
    def from_crawler(cls, crawler):
        s = crawler.settings
        return cls(s.get('MONGODB_HOST'), s.getint('MONGODB_PORT', 27017),
                   s.get('MONGODB_DB'), s.get('MONGODB_COLLECTION'))

    def open_spider(self, spider):
        self.client = pymongo.MongoClient(self.host, self.port)
        self.collection = self.client[self.db_name][self.collection_name]

    def process_item(self, item, spider):
        # insert_one replaces the deprecated Collection.insert (removed in PyMongo 4)
        self.collection.insert_one(dict(item))
        return item

    def close_spider(self, spider):
        self.client.close()

# Download the image whose URL was stored in the image_urls field
class BookImgsDLPipeline(ImagesPipeline):
    default_headers = {
        'accept': 'image/webp,image/*,*/*;q=0.8',
        'accept-encoding': 'gzip, deflate, sdch, br',
        'accept-language': 'zh-CN,zh;q=0.8,en;q=0.6',
        'referer': 'https://book.douban.com/top250/',
        'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36',
    }

    def get_media_requests(self, item, info):
        # Copy the class-level headers instead of mutating the shared dict
        headers = dict(self.default_headers, referer=item['image_urls'])
        # Pass the book name in meta so file_path can use it
        yield scrapy.Request(item['image_urls'], headers=headers, meta={'bookname': item['name']})

    def file_path(self, request, response=None, info=None, *, item=None):
        # Custom file name: <book name>_<original file name>
        # (Scrapy 2.4+ also passes item=...; the keyword-only parameter accepts it)
        bookname = request.meta['bookname']
        image_guid = bookname + '_' + request.url.split('/')[-1]
        return 'full/%s' % image_guid

    def item_completed(self, results, item, info):
        image_paths = [value['path'] for ok, value in results if ok]
        if not image_paths:
            raise DropItem("Item contains no images")
        item['image_paths'] = image_paths[0]
        item['images'] = [value for ok, value in results if ok]
        return item

Note: the results list passed to item_completed has the following format:

[(True, {'url': 'https://img3.doubanio.com/mpic/s26012674.jpg', 'path': 'full/b8700497fc0014c87e085747c89476e12162c518.jpg', 'checksum': '4da0defa1ec30229ce724d691f694ad1'})]
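The filtering in item_completed can be tried without Scrapy. The sketch below applies the same list comprehensions to a results list shaped like the one above; FailureStandIn is a hypothetical placeholder for the Twisted Failure that appears when a download fails, and ValueError stands in for DropItem. The sample path uses Scrapy's default hash-based naming; with the custom file_path above it would instead look like full/<book name>_s26012674.jpg.

```python
class FailureStandIn:
    """Hypothetical placeholder for twisted.python.failure.Failure."""


def collect_image_info(results):
    """Mimic the item_completed logic on a list of (success, info_or_failure) tuples.

    Returns (first_path, successful_infos); raises ValueError where the
    pipeline would raise DropItem.
    """
    infos = [value for ok, value in results if ok]
    if not infos:
        raise ValueError("Item contains no images")
    return infos[0]["path"], infos


results = [
    (True, {"url": "https://img3.doubanio.com/mpic/s26012674.jpg",
            "path": "full/b8700497fc0014c87e085747c89476e12162c518.jpg",
            "checksum": "4da0defa1ec30229ce724d691f694ad1"}),
    (False, FailureStandIn()),
]
path, infos = collect_image_info(results)
print(path)
```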

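To customize image downloading, write a class that inherits from ImagesPipeline and enable it in ITEM_PIPELINES in settings.py. When no customization is needed, the built-in scrapy.pipelines.images.ImagesPipeline can be enabled directly.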
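A minimal settings.py fragment for using the built-in pipeline unchanged. This is a sketch that assumes the Item defines image_urls and images fields and that Pillow is installed (the images pipeline needs it); the store path is a placeholder.

```python
# settings.py fragment (sketch): enable Scrapy's built-in images pipeline
ITEM_PIPELINES = {
    "scrapy.pipelines.images.ImagesPipeline": 1,
}
IMAGES_STORE = "/path/to/images"  # placeholder; any writable directory
IMAGES_EXPIRES = 90  # days before an already-downloaded image is fetched again
```

With the stock pipeline, image_urls must be a list (for example .extract() rather than .extract_first() in the spider), because the default get_media_requests iterates over it; a bare string would be iterated character by character.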

ImagesPipeline

The methods usually overridden in a custom ImagesPipeline subclass are get_media_requests(item, info) and item_completed(results, item, info).

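As the workflow above shows, the pipeline takes the image URLs from the item and downloads them. get_media_requests is where those URLs become Request objects (the default implementation reads image_urls; here it is overridden to add headers and meta), and it must return or yield one Request per image. The pipeline processes these requests, and when the downloads finish the results are passed to item_completed as a list of 2-tuples of the form (success, image_info_or_failure). success is a boolean that is True if the image was downloaded successfully; otherwise image_info_or_failure is a Twisted Failure describing the error. If success is True, image_info_or_failure is a dict with the following keys: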

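url: the original URL the image was downloaded from

path: the storage path, relative to IMAGES_STORE

checksum: an MD5 hash of the image contents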
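To see where the path and checksum values in the results example come from: in the Scrapy versions we are aware of, the stock ImagesPipeline names files by the SHA1 hash of the request URL under full/ with a .jpg extension, and the checksum is an MD5 hex digest of the stored image bytes. The hashlib sketch below reproduces both under that assumption; the helper names are hypothetical, not Scrapy functions.

```python
import hashlib


def default_image_path(url):
    """Hypothetical helper: SHA1-of-URL file name under full/, as the stock pipeline does."""
    return "full/%s.jpg" % hashlib.sha1(url.encode("utf-8")).hexdigest()


def image_checksum(data):
    """Hypothetical helper: MD5 hex digest of image bytes, like the 'checksum' value."""
    return hashlib.md5(data).hexdigest()


path = default_image_path("https://img3.doubanio.com/mpic/s26012674.jpg")
print(path)
print(image_checksum(b""))
```

Hash-based names avoid collisions and odd characters; the custom file_path in this article trades that for human-readable book names, which may need sanitizing if a title contains "/".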
