Decision Trees (Tuning Tree Depth and Detecting Important Features)

Posted 挣脱生命的束缚...



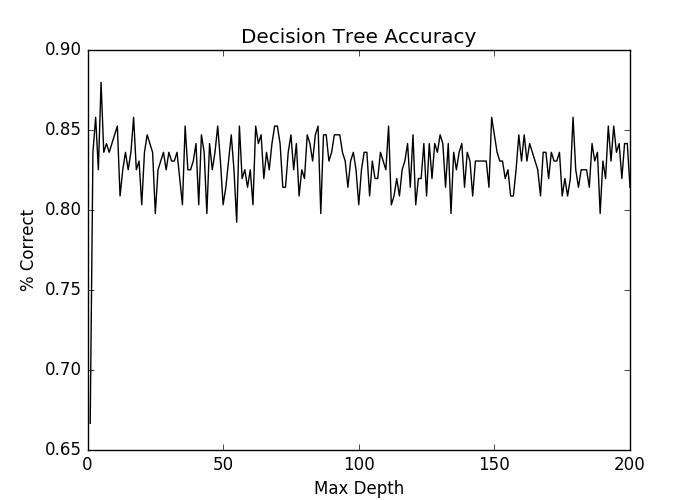

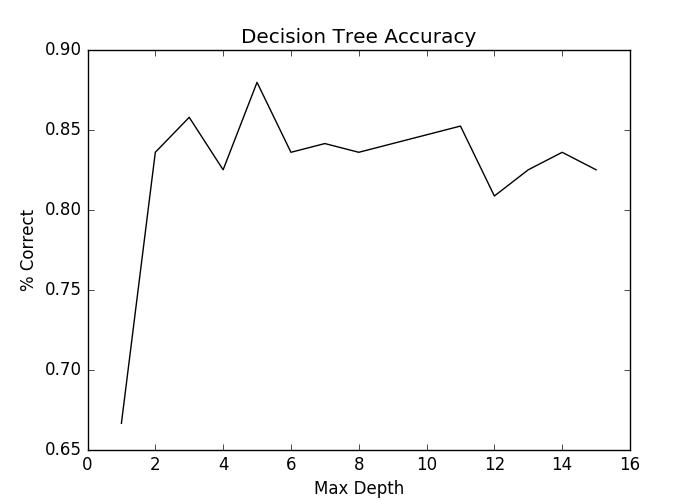

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn import datasets
    from sklearn.tree import DecisionTreeClassifier

    n_features = 200
    X, y = datasets.make_classification(750, n_features, n_informative=5)

    # Random train/test mask: p gives the probabilities of True (train) and False (test).
    training = np.random.choice([True, False], p=[.75, .25], size=len(y))

    # Number and fraction of samples that landed in the training set.
    c = training.sum()
    print(c, c / len(y))

    # Fit one tree per max_depth and record accuracy on the held-out samples.
    accuracies = []
    for depth in range(1, n_features + 1):
        dt = DecisionTreeClassifier(max_depth=depth)
        dt.fit(X[training], y[training])
        preds = dt.predict(X[~training])
        accuracies.append((preds == y[~training]).mean())

    # Accuracy over the full range of depths.
    f, ax = plt.subplots(figsize=(7, 5))
    ax.plot(range(1, n_features + 1), accuracies, color='k')
    ax.set_title("Decision Tree Accuracy")
    ax.set_ylabel("% Correct")
    ax.set_xlabel("Max Depth")

    # Zoom in on the first N depths.
    N = 15
    f, ax = plt.subplots(figsize=(7, 5))
    ax.plot(range(1, n_features + 1)[:N], accuracies[:N], color='k')
    ax.set_title("Decision Tree Accuracy")
    ax.set_ylabel("% Correct")
    ax.set_xlabel("Max Depth")

    # Feature importances. Older scikit-learn releases required
    # compute_importances=True; that parameter has since been removed, and
    # feature_importances_ is always available after fit.
    dt_ci = DecisionTreeClassifier()
    dt_ci.fit(X, y)
    ne0 = dt_ci.feature_importances_ != 0
    y_comp = dt_ci.feature_importances_[ne0]
    x_comp = np.arange(len(dt_ci.feature_importances_))[ne0]
    f, ax = plt.subplots(figsize=(7, 5))
    ax.bar(x_comp, y_comp)

    plt.show()
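The depth sweep and the importance check in the script above can also be wrapped into two small helpers that report the best depth and the top-ranked features as numbers rather than plots. The following is only a minimal sketch: the helper names `sweep_depths` and `top_features` are hypothetical, and the fixed `random_state`, the smaller 50-feature dataset, and the use of `train_test_split` are assumptions made here for speed and reproducibility, not part of the original post.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def sweep_depths(X_train, y_train, X_test, y_test, depths):
    """Return the held-out accuracy for each max_depth in depths (hypothetical helper)."""
    scores = []
    for depth in depths:
        dt = DecisionTreeClassifier(max_depth=depth, random_state=0)
        dt.fit(X_train, y_train)
        scores.append(dt.score(X_test, y_test))
    return np.array(scores)


def top_features(model, k=5):
    """Return (index, importance) pairs for the k most important features (hypothetical helper)."""
    importances = model.feature_importances_
    order = np.argsort(importances)[::-1][:k]
    return [(int(i), float(importances[i])) for i in order]


# Assumed setup: a smaller synthetic dataset than in the post, with fixed seeds.
X, y = make_classification(n_samples=750, n_features=50, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

depths = list(range(1, 16))
scores = sweep_depths(X_train, y_train, X_test, y_test, depths)
best_depth = depths[int(np.argmax(scores))]
print("best max_depth:", best_depth, "accuracy:", scores.max())

# Refit at the chosen depth and list the features the tree relied on most.
best_tree = DecisionTreeClassifier(max_depth=best_depth, random_state=0).fit(X_train, y_train)
for idx, imp in top_features(best_tree):
    print(f"feature {idx}: importance {imp:.3f}")
```

Choosing the depth on the same held-out split that reports the accuracy gives an optimistic estimate; a separate validation split or cross-validation would be the more careful choice.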
