Python 3.5 Data Processing: Installing jieba + sklearn and a First Example


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers installing the jieba and sklearn libraries for Python 3.5 and walks through a first example.

1. Install pip3 (on Debian/Ubuntu, the package that provides the pip3 command is named python3-pip):

#sudo apt-get install python3-pip

2. Install jieba:

#sudo pip3 install jieba

3. Install sklearn (the pip package is named scikit-learn) together with NumPy and SciPy:

#sudo pip3 install scikit-learn

#sudo pip3 install numpy

#sudo pip3 install scipy

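Note: when installing from inside China, pip may fail with a time-out error. The fix is to point pip at a domestic mirror, here Tsinghua University's PyPI mirror, with the -i option: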

#sudo pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple numpy

#sudo pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple scipy
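Once the commands have finished, a small script can confirm that every library actually imports. This is a minimal sketch, not part of the original article: the helper name check_packages is hypothetical and only uses importlib from the standard library. Keep in mind that scikit-learn is installed under one name and imported under another (sklearn).

```python
import importlib

# Import names, not pip names: scikit-learn is imported as "sklearn".
REQUIRED = ["jieba", "sklearn", "numpy", "scipy"]


def check_packages(names):
    """Hypothetical helper: map each import name to its version, or None if it fails to import."""
    found = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            found[name] = None
            continue
        # Not every package defines __version__, so fall back to "unknown".
        found[name] = getattr(module, "__version__", "unknown")
    return found


if __name__ == "__main__":
    for name, version in sorted(check_packages(REQUIRED).items()):
        print("{:<8} {}".format(name, version if version else "NOT INSTALLED"))
```

Run it with python3. Any library reported as NOT INSTALLED needs its pip3 command repeated, with the -i mirror option if downloads time out.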

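4. Segment text into words and compute TF-IDF values:

The script reads the file inputtext, keeps only ASCII letters, digits and Chinese characters, segments the text with jieba, and writes the space-separated words to res/inputtext. It then computes a TF-IDF weight for every word and writes one "word weight" pair per line to fes/inputtext.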

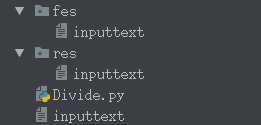

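```python
#!/usr/bin/env python3
# coding=utf-8
import os
import re

import jieba
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.feature_extraction.text import TfidfTransformer


class Tfi(object):
    def __init__(self):
        # Words to drop after segmentation (empty here; add your own stop words).
        self.stop_list = []

    def fenci(self, file):
        """Segment `file` with jieba and write the space-separated words to res/<file>."""
        with open(file, 'r', encoding='utf-8') as fin:
            read_b = fin.read()
        # Keep only ASCII letters, digits and CJK characters (U+4E00 to U+9FA5).
        read_res = ''.join(re.findall(u'[a-zA-Z0-9\u4e00-\u9fa5]+', read_b))
        # cut_all=True is jieba's full mode: it emits every word it can find,
        # so overlapping words may appear in the output.
        cut_res = jieba.cut(read_res, cut_all=True)
        line_res = ' '.join(w for w in cut_res if w and w not in self.stop_list)
        os.makedirs('res', exist_ok=True)  # the output directory must exist
        with open(os.path.join('res', file), 'w', encoding='utf-8') as fout:
            fout.write(line_res)

    def cipin(self, fil_list):
        """Compute TF-IDF over the segmented files and write fes/<file> for each document."""
        corpus = []
        for fil in fil_list:
            with open(os.path.join('res', fil), 'r', encoding='utf-8') as ffout:
                corpus.append(ffout.read())
        # CountVectorizer's default token_pattern ignores one-character tokens.
        vectorizer = CountVectorizer()
        transformer = TfidfTransformer()
        tfidf = transformer.fit_transform(vectorizer.fit_transform(corpus))
        # Vocabulary across all documents.
        try:
            word = vectorizer.get_feature_names_out()  # scikit-learn >= 1.0
        except AttributeError:
            word = vectorizer.get_feature_names()  # older scikit-learn
        weight = tfidf.toarray()  # row j holds the weights for document j
        os.makedirs('fes', exist_ok=True)
        for j in range(len(weight)):
            with open(os.path.join('fes', fil_list[j]), 'w', encoding='utf-8') as f:
                for i in range(len(word)):
                    f.write(word[i] + ' ' + str(weight[j][i]) + '\n')


if __name__ == '__main__':
    first = Tfi()
    fil_list = ['inputtext']
    first.fenci('inputtext')
    first.cipin(fil_list)
```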
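To see where the numbers in fes/inputtext come from, here is a minimal pure-Python sketch of the weighting that CountVectorizer + TfidfTransformer apply with their default settings (lowercasing, the default token pattern, smooth_idf=True, norm='l2'). Each term's raw count is multiplied by idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t, and each document's row is then scaled to unit length. The function name tfidf_weights and the sample sentences are hypothetical and meant for understanding the output, not as a replacement for scikit-learn.

```python
import math
import re
from collections import Counter

# Same regex as CountVectorizer's default token_pattern: runs of 2+ word characters.
TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")


def tfidf_weights(corpus):
    """Return (vocabulary, rows); rows[j][i] is the weight of vocabulary[i] in document j."""
    # Raw term counts per document (CountVectorizer lowercases by default).
    counts = [Counter(TOKEN_RE.findall(doc.lower())) for doc in corpus]
    vocab = sorted(set(term for c in counts for term in c))
    n = len(counts)
    # Smoothed IDF: ln((1 + n) / (1 + df)) + 1.
    idf = {}
    for term in vocab:
        df = sum(1 for c in counts if term in c)
        idf[term] = math.log((1.0 + n) / (1.0 + df)) + 1.0
    rows = []
    for c in counts:
        row = [c[term] * idf[term] for term in vocab]
        # L2-normalise each document vector.
        norm = math.sqrt(sum(v * v for v in row))
        rows.append([v / norm for v in row] if norm else row)
    return vocab, rows


if __name__ == "__main__":
    docs = ["我们 喜欢 自然语言 处理", "我们 学习 机器 学习"]
    vocab, rows = tfidf_weights(docs)
    for j, row in enumerate(rows):
        print(j, ["{} {:.4f}".format(w, v) for w, v in zip(vocab, row)])
```

One consequence worth noting: the script above passes a single file (n = 1), so every word has df = 1 and idf = ln(2 / 2) + 1 = 1. The "TF-IDF" values written to fes/inputtext are then just the word counts scaled to unit length; IDF only starts to matter once several files are listed in fil_list.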


Permalink: CPP学习网_CPP大学 (Python 3.5 Data Processing: Installing jieba + sklearn and a First Example)
