2017-02-08 01:19:09 Scrapy: pk5_mylist

Posted 乾坤颠倒


[TOC]

### 2017-02-08 01:19:09 Scrapy: pk5_mylist

> Save the records with MongoDB

#### settings.py

```python
# -*- coding: utf-8 -*-

BOT_NAME = 'bengbeng'

SPIDER_MODULES = ['bengbeng.spiders']
NEWSPIDER_MODULE = 'bengbeng.spiders'

ROBOTSTXT_OBEY = True

ITEM_PIPELINES = {
    'bengbeng.pipelines.BengbengPipeline': 300,
}

DOWNLOADER_MIDDLEWARES = {
    # Disable the built-in User-Agent middleware and use a rotating one instead
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
    'bengbeng.middlewares.RotateUserAgentMiddleware': 400,
}

# Delay (in seconds) between requests to the same site
DOWNLOAD_DELAY = 0.2
COOKIES_ENABLED = True

# MongoDB connection used by BengbengPipeline
MONGODB_HOST = '127.0.0.1'
MONGODB_PORT = 27017
MONGODB_DBNAME = 'bengbeng'
MONGODB_COLLECTION = 'scrapy_pk5_mylist'
```
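The settings register `bengbeng.middlewares.RotateUserAgentMiddleware`, but the post does not show that module. Below is a minimal sketch of what such a downloader middleware could look like, assuming it simply picks a random User-Agent for each request. The `USER_AGENTS` pool is a hypothetical placeholder, not taken from the original project. Scrapy only needs a `process_request(request, spider)` method here; returning `None` lets the request continue through the remaining middlewares.

```python
import random

# Hypothetical pool of User-Agent strings (not from the original project)
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/602.4.8 '
    '(KHTML, like Gecko) Version/10.0.3 Safari/602.4.8',
    'Mozilla/5.0 (X11; Linux x86_64; rv:51.0) Gecko/20100101 Firefox/51.0',
]


class RotateUserAgentMiddleware(object):
    """Downloader middleware that sets a random User-Agent on every request."""

    def __init__(self, user_agents=None):
        # Fall back to the hypothetical default pool above
        self.user_agents = list(user_agents or USER_AGENTS)

    def process_request(self, request, spider):
        request.headers['User-Agent'] = random.choice(self.user_agents)
        # None means: keep processing this request normally
        return None
```

Because `__init__` has only optional arguments, Scrapy can build the middleware with a plain no-argument constructor; add a `from_crawler` classmethod if the pool should come from `settings.py` instead.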

#### items.py

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class BengbengItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()

    # pm = pk5_mylist
    pm_betid = scrapy.Field()        # bet id, used as the record key in MongoDB
    pm_result = scrapy.Field()       # result type: bao / dui / shun / ban / za
    pm_result_rate = scrapy.Field()  # odds of the result type that came up
    pm_rate_bao = scrapy.Field()
    pm_rate_dui = scrapy.Field()
    pm_rate_shun = scrapy.Field()
    pm_rate_ban = scrapy.Field()
    pm_rate_za = scrapy.Field()
```

#### pipelines.py

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

import pymongo


class BengbengPipeline(object):

    def __init__(self, host, port, db_name, collection):
        self.client = pymongo.MongoClient(host=host, port=port)
        self.postbengbeng = self.client[db_name][collection]

    @classmethod
    def from_crawler(cls, crawler):
        # Read the MONGODB_* values from settings.py through the crawler
        # (the old `from scrapy.conf import settings` singleton is deprecated)
        s = crawler.settings
        return cls(s.get('MONGODB_HOST'), s.getint('MONGODB_PORT'),
                   s.get('MONGODB_DBNAME'), s.get('MONGODB_COLLECTION'))

    def close_spider(self, spider):
        self.client.close()

    def process_item(self, bengbengitem, spider):
        betid = bengbengitem.get('pm_betid')
        if not betid:
            return bengbengitem
        query = {'pm_betid': betid}
        stored = self.postbengbeng.find_one(query, {'_id': 0})
        if stored is None:
            # New bet id: insert the record as it is
            bengbeng_pk5_result = dict(bengbengitem)
            print('{0}'.format(bengbeng_pk5_result))
            self.postbengbeng.insert_one(bengbeng_pk5_result)
        else:
            # Bet id already stored: merge the new fields into the old record
            print("Record found, updating".center(40, '*'))
            bengbeng_pk5_result = dict(stored)
            bengbeng_pk5_result.update(dict(bengbengitem))
            # Once both the result and the odds are known, store the odds
            # of the result type that came up as pm_result_rate
            rate_key = 'pm_rate_{0}'.format(bengbeng_pk5_result.get('pm_result'))
            if rate_key in bengbeng_pk5_result:
                bengbeng_pk5_result['pm_result_rate'] = bengbeng_pk5_result[rate_key]
            print('Update: {0}'.format(bengbeng_pk5_result))
            self.postbengbeng.replace_one(query, bengbeng_pk5_result)
        return bengbengitem
```
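The update branch of the pipeline is essentially a merge keyed on `pm_betid`: the list page supplies `pm_result`, the detail page supplies the five `pm_rate_*` odds, and whichever arrives second completes `pm_result_rate`. A Mongo-free sketch of that logic follows; the plain `store` dict standing in for the collection and the name `upsert_pk5_record` are assumptions made for illustration only.

```python
def upsert_pk5_record(store, item):
    """Insert a pk5 record, or merge it into the one already stored.

    `store` is a plain dict keyed by pm_betid that stands in for the
    MongoDB collection (an illustrative assumption, not the real pipeline).
    """
    betid = item.get('pm_betid')
    if not betid:
        return None  # nothing to key on, mirroring the pipeline's empty-id check
    record = dict(store.get(betid, {}))
    record.update(item)
    # When both pm_result and the matching pm_rate_* field are present,
    # copy those odds into pm_result_rate
    rate_key = 'pm_rate_{0}'.format(record.get('pm_result'))
    if rate_key in record:
        record['pm_result_rate'] = record[rate_key]
    store[betid] = record
    return record
```

Feeding it the list-page item for bet 463645 (`pm_result` `'ban'`) and then that bet's detail-page odds produces `pm_result_rate` `'2.7767'`, the same value as the first record under "Records in DB". The order of the two items does not matter.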

#### pk5_mylist.py

```python
# -*- coding: utf-8 -*-

import re

import scrapy
from scrapy.http import Request

from bengbeng.items import BengbengItem

# XPaths for the list page (pk5mylist.php) and the detail page (pk5mdetail.php)
xpath_betid = '//*[@id="pkBox"]/ul/*[@class="jl1"]/text()'
xpath_result = '//*[@id="pkBox"]/ul/*[@class="jl3"]/img/@src'
xpath_all_rate = '//*[@id="pkBox"]/ul/*[@class="ck2"]/text()'
xpath_rate_betid = '//*[@id="pkBox"]/div[4]/span[1]/font/text()'

# Code in the result image name -> result type
dict_result = {
    'bz': 'bao',   # Bao
    'dz': 'dui',   # Dui
    'sz': 'shun',  # Shun
    'bs': 'ban',   # Ban
    'zl': 'za',    # Za
}
result_search = re.compile(r'_(\D+)\.')


class Pk5MylistSpider(scrapy.Spider):
    name = "pk5_mylist"
    allowed_domains = ["bengbeng.com"]
    # List pages 1 to 32
    start_urls = ['http://www.bengbeng.com/pk5mylist.php?p={0}'.format(i)
                  for i in range(1, 33)]
    # Session cookie copied from a logged-in browser; replace with your own
    my_cookies = {'PHPSESSID': '0gl0onrnocb149v3jcmhpdk3k0'}

    # Send every start request with the session cookie
    def start_requests(self):
        for url in self.start_urls:
            yield Request(url, cookies=self.my_cookies)

    def parse(self, response):
        all_betid = response.xpath(xpath_betid).extract()
        all_result = response.xpath(xpath_result).extract()
        # The newest round may not be drawn yet (e.g. 29 results for 30 ids),
        # so skip the leading ids that have no result image
        offset = max(0, len(all_betid) - len(all_result))
        for betid, src in zip(all_betid[offset:], all_result):
            # Create a fresh item per row; one shared item would be overwritten
            item = BengbengItem()
            item['pm_betid'] = betid
            item['pm_result'] = dict_result[result_search.findall(src)[0]]
            yield item
            url = 'http://www.bengbeng.com/pk5mdetail.php?no=' + betid
            yield Request(url, cookies=self.my_cookies,
                          callback=self.goForSecondPage)

    def goForSecondPage(self, response):
        # Detail page: the five odds in the order bao, dui, shun, ban, za
        all_rate = response.xpath(xpath_all_rate).extract()
        rate_betid = response.xpath(xpath_rate_betid).extract()
        item = BengbengItem()
        item['pm_betid'] = rate_betid[0]
        item['pm_rate_bao'] = all_rate[0]
        item['pm_rate_dui'] = all_rate[1]
        item['pm_rate_shun'] = all_rate[2]
        item['pm_rate_ban'] = all_rate[3]
        item['pm_rate_za'] = all_rate[4]
        yield item
```
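The core of `parse` is lining up bet ids with result images and turning each image name into a result type. The standalone sketch below isolates that step. The image paths such as `images/pk5_bs.gif` and the extra bet id `463646` are hypothetical, since the post does not show the real page; the regex only assumes a single underscore before the two-letter code and a dot after it. The helper name `pair_betids_with_results` is also illustrative.

```python
import re

# Same mapping and pattern as in the spider
DICT_RESULT = {'bz': 'bao', 'dz': 'dui', 'sz': 'shun', 'bs': 'ban', 'zl': 'za'}
RESULT_SEARCH = re.compile(r'_(\D+)\.')


def pair_betids_with_results(all_betid, all_result):
    """Return (betid, result_type) pairs, skipping ids that have no result yet."""
    # Leading ids without a result image (rounds not drawn yet) are dropped
    offset = max(0, len(all_betid) - len(all_result))
    pairs = []
    for betid, src in zip(all_betid[offset:], all_result):
        code = RESULT_SEARCH.findall(src)[0]
        pairs.append((betid, DICT_RESULT[code]))
    return pairs


# Hypothetical page: the newest round 463646 has no result image yet
print(pair_betids_with_results(
    ['463646', '463645', '463644'],
    ['images/pk5_bs.gif', 'images/pk5_sz.gif']))
# [('463645', 'ban'), ('463644', 'shun')]
```

These pairs match the `pm_result` values of the two records stored in MongoDB below.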

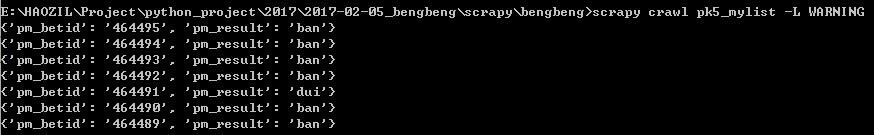

#### Run:

#### Records in DB

```
/* 1 */
{
    "_id" : ObjectId("5899fac96f59de2268c28918"),
    "pm_rate_ban" : "2.7767",
    "pm_rate_dui" : "3.7002",
    "pm_rate_bao" : "100.8169",
    "pm_rate_za" : "3.3057",
    "pm_rate_shun" : "16.6743",
    "pm_betid" : "463645",
    "pm_result" : "ban",
    "pm_result_rate" : "2.7767"
}

/* 2 */
{
    "_id" : ObjectId("5899fac86f59de2268c28917"),
    "pm_rate_ban" : "2.7797",
    "pm_rate_dui" : "3.7823",
    "pm_rate_bao" : "113.3838",
    "pm_rate_za" : "3.3606",
    "pm_rate_shun" : "13.7679",
    "pm_betid" : "463644",
    "pm_result" : "shun",
    "pm_result_rate" : "13.7679"
}
```
