Errors when using HBase, help needed

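I. Client reports "no further information" when accessing HBase

When accessing HBase through the Java API, the client could never connect. The log showed the following error: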

java.net.ConnectException: Connection refused: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(Unknown Source)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:933)

10/06/25 15:44:23 WARN zookeeper.ClientCnxn: Ignoring exception during shutdown input

java.nio.channels.ClosedChannelException

at sun.nio.ch.SocketChannelImpl.shutdownInput(Unknown Source)

at sun.nio.ch.SocketAdaptor.shutdownInput(Unknown Source)

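The HBase log showed the client connecting, but after the server responded the client kept reporting the error above. This error is usually caused by the client obtaining a wrong IP address for the HBase RegionServer, and the address stored in ZooKeeper turned out to be localhost. In pseudo-distributed mode, if the hosts file is not configured, the registered address defaults to localhost (presumably because a pseudo-distributed setup is normally accessed from the same machine). Mapping the hostname to the machine's real IP in the hosts file, instead of localhost, fixes the problem.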
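The hosts check described above can be sketched as a small shell function. This is only an illustration: the function name `loopback_hosts`, the host name `hbase-host`, and the sample addresses are all hypothetical and do not come from the original post.

```shell
# loopback_hosts HOSTS_FILE HOSTNAME
# Prints every non-comment line of HOSTS_FILE that maps HOSTNAME to a
# loopback address (127.x.x.x or ::1).
loopback_hosts() {
  awk -v host="$2" '
    $1 ~ /^#/ { next }                     # skip comment lines
    $1 ~ /^127\./ || $1 == "::1" {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break               # stop at a trailing comment
        if ($i == host) { print; break }
      }
    }
  ' "$1"
}

# Hypothetical sample: the machine name "hbase-host" is bound to loopback,
# the kind of entry that can lead to localhost being registered.
sample=$(mktemp)
cat > "$sample" <<'EOF'
127.0.0.1    localhost hbase-host
192.168.1.10 other-host
EOF

loopback_hosts "$sample" hbase-host
rm -f "$sample"
```

On a real machine the call would be something like `loopback_hosts /etc/hosts "$(hostname)"`. Any line it prints is a candidate to change so that the hostname points at the machine's real IP.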

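II. HBase reports both TableNotEnabledException and TableNotDisabledException

An enable operation on a table was taking too long, so it was ended with Ctrl+C. After that, enabling the table again failed with TableNotDisabledException, while trying to disable it failed with TableNotEnabledException, so the table could not be enabled. The cause is that the interrupted operation left the table state recorded in ZooKeeper inconsistent. It can be resolved as follows.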

1. Connect to ZooKeeper (directly with the tool shipped with HBase): hbase zkcli

2. Delete the data for the affected table under /hbase (or delete the whole /hbase/table path): delete /hbase/table/<table name>

3. Restart HBase.

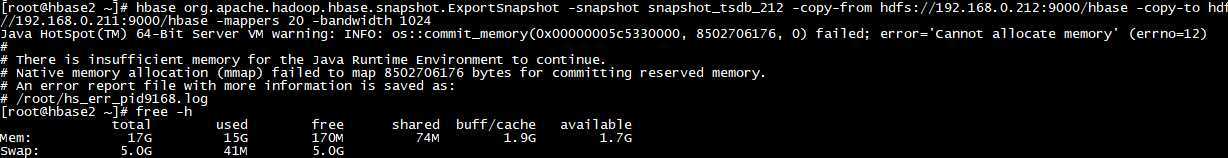

Errors encountered when migrating HBase snapshots with ExportSnapshot

1. Cannot allocate memory

Error message:

Java HotSpot(TM) 64-Bit Server VM warning: INFO: os::commit_memory(0x00000005c5330000, 8502706176, 0) failed; error='Cannot allocate memory' (errno=12)

#

# There is insufficient memory for the Java Runtime Environment to continue.

# Native memory allocation (mmap) failed to map 8502706176 bytes for committing reserved memory.

# An error report file with more information is saved as:

# /root/hs_err_pid9168.log

Log

The error message above points to /root/hs_err_pid9168.log for more details.

# View the file
vim /root/hs_err_pid9168.log
# Contents
#
# There is insufficient memory for the Java Runtime Environment to continue.
# Native memory allocation (mmap) failed to map 8502706176 bytes for committing reserved memory.
# Possible reasons:
#   The system is out of physical RAM or swap space
#   In 32 bit mode, the process size limit was hit
# Possible solutions:
#   Reduce memory load on the system
#   Increase physical memory or swap space
#   Check if swap backing store is full
#   Use 64 bit Java on a 64 bit OS
#   Decrease Java heap size (-Xmx/-Xms)
#   Decrease number of Java threads
#   Decrease Java thread stack sizes (-Xss)
#   Set larger code cache with -XX:ReservedCodeCacheSize=
# This output file may be truncated or incomplete.
#
# Out of Memory Error (os_linux.cpp:2743), pid=9168, tid=0x00007f22fdcce700
#
# JRE version: (8.0_191-b12) (build )
# Java VM: Java HotSpot(TM) 64-Bit Server VM (25.191-b12 mixed mode linux-amd64 compressed oops)
# Core dump written. Default location: /root/core or core.9168
#

--------------- T H R E A D ---------------
Current thread (0x00007f22f4016000): JavaThread "Unknown thread" [_thread_in_vm, id=9255, stack(0x00007f22fdbcf000,0x00007f22fdccf000)]
Stack: [0x00007f22fdbcf000,0x00007f22fdccf000], sp=0x00007f22fdccd4c0, free space=1017k
Native frames: (J=compiled Java code, j=interpreted, Vv=VM code, C=native code)
V [libjvm.so+0xace425] VMError::report_and_die()+0x2c5
V [libjvm.so+0x4deb77] report_vm_out_of_memory(char const*, int, unsigned long, VMErrorType, char const*)+0x67
V [libjvm.so+0x90c570] os::pd_commit_memory(char*, unsigned long, unsigned long, bool)+0x100
V [libjvm.so+0x903eaf] os::commit_memory(char*, unsigned long, unsigned long, bool)+0x1f
V [libjvm.so+0xaca93c] VirtualSpace::initialize(ReservedSpace, unsigned long)+0x20c
V [libjvm.so+0x5ea477] CardGeneration::CardGeneration(ReservedSpace, unsigned long, int, GenRemSet*)+0xc7
V [libjvm.so+0x5eb842] GenerationSpec::init(ReservedSpace, int, GenRemSet*)+0x182
V [libjvm.so+0x5d699f] GenCollectedHeap::initialize()+0x20f
V [libjvm.so+0xa922ba] Universe::initialize_heap()+0x16a
V [libjvm.so+0xa92593] universe_init()+0x33
V [libjvm.so+0x62f0f0] init_globals()+0x50
V [libjvm.so+0xa74c57] Threads::create_vm(JavaVMInitArgs*, bool*)+0x257
V [libjvm.so+0x6d49ff] JNI_CreateJavaVM+0x4f
C [libjli.so+0x7e74] JavaMain+0x84
C [libpthread.so.0+0x7dd5] start_thread+0xc5

--------------- P R O C E S S ---------------
Java Threads: ( => current thread )
Other Threads:
=>0x00007f22f4016000 (exited) JavaThread "Unknown thread" [_thread_in_vm, id=9255, stack(0x00007f22fdbcf000,0x00007f22fdccf000)]
VM state:not at safepoint (not fully initialized)
VM Mutex/Monitor currently owned by a thread: None
GC Heap History (0 events):
No events
Deoptimization events (0 events):
No events
Classes redefined (0 events):
No events
Internal exceptions (0 events):
No events
Events (0 events):
No events
Dynamic libraries:
00400000-00401000 r-xp 00000000 fd:00 202099235 /usr/local/soft/jdk/jdk1.8.0_191/bin/java
00600000-00601000 r--p 00000000 fd:00 202099235 /usr/local/soft/jdk/jdk1.8.0_191/bin/java
00601000-00602000 rw-p 00001000 fd:00 202099235 /usr/local/soft/jdk/jdk1.8.0_191/bin/java
00dc0000-00dfa000 rw-p 00000000 00:00 0 [heap]
5c0000000-5c5330000 rw-p 00000000 00:00 0
7f22e5000000-7f22e5270000 rwxp 00000000 00:00 0
7f22e5270000-7f22f4000000 ---p 00000000 00:00 0
7f22f4000000-7f22f4043000 rw-p 00000000 00:00 0
7f22f4043000-7f22f8000000 ---p 00000000 00:00 0
7f22f9b03000-7f22f9ec1000 rw-p 00000000 00:00 0
7f22f9ec1000-7f22fae97000 ---p 00000000 00:00 0
7f22fae97000-7f22fae98000 rw-p 00000000 00:00 0
7f22fae98000-7f22fae99000 ---p 00000000 00:00 0
7f22fae99000-7f22fafa3000 rw-p 00000000 00:00 0
7f22fafa3000-7f22fb359000 ---p 00000000 00:00 0
7f22fb359000-7f22fb373000 r-xp 00000000 fd:00 134320156 /usr/local/soft/jdk/jdk1.8.0_191/jre/lib/amd64/libzip.so
7f22fb373000-7f22fb573000 ---p 0001a000 fd:00 134320156 /usr/local/soft/jdk/jdk1.8.0_191/jre/lib/amd64/libzip.so
7f22fb573000-7f22fb574000 r--p 0001a000 fd:00 134320156 /usr/local/soft/jdk/jdk1.8.0_191/jre/lib/amd64/libzip.so
7f22fb574000-7f22fb575000 rw-p 0001b000 fd:00 134320156 /usr/local/soft/jdk/jdk1.8.0_191/jre/lib/amd64/libzip.so
7f22fb575000-7f22fb581000 r-xp 00000000 fd:00 1048275 /usr/lib64/libnss_files-2.17.so
7f22fb581000-7f22fb780000 ---p 0000c000 fd:00 1048275 /usr/lib64/libnss_files-2.17.so
7f22fb780000-7f22fb781000 r--p 0000b000 fd:00 1048275 /usr/lib64/libnss_files-2.17.so
7f22fb781000-7f22fb782000 rw-p 0000c000 fd:00 1048275 /usr/lib64/libnss_files-2.17.so
7f22fcdb2000-7f22fcfb2000 ---p 00ce2000 fd:00 19060 /usr/local/soft/jdk/jdk1.8.0_191/jre/lib/amd64/server/libjvm.so
7f22fcfb2000-7f22fd048000 r--p 00ce2000 fd:00 19060 /usr/local/soft/jdk/jdk1.8.0_191/jre/lib/amd64/server/libjvm.so
7f22fd048000-7f22fd079000 rw-p 00d78000 fd:00 19060 /usr/local/soft/jdk/jdk1.8.0_191/jre/lib/amd64/server/libjvm.so
7f22fd079000-7f22fd0b4000 rw-p 00000000 00:00 0
7f22fd476000-7f22fd47a000 r--p 001c2000 fd:00 34193 /usr/lib64/libc-2.17.so
7f22fd47a000-7f22fd47c000 rw-p 001c6000 fd:00 34193 /usr/lib64/libc-2.17.so
7f22fd47c000-7f22fd481000 rw-p 00000000 00:00 0
7f22fd481000-7f22fd483000 r-xp 00000000 fd:00 34199 /usr/lib64/libdl-2.17.so
7f22fd483000-7f22fd683000 ---p 00002000 fd:00 34199 /usr/lib64/libdl-2.17.so
7f22fd683000-7f22fd684000 r--p 00002000 fd:00 34199 /usr/lib64/libdl-2.17.so
7f22fd684000-7f22fd685000 rw-p 00003000 fd:00 34199 /usr/lib64/libdl-2.17.so
7f22fd685000-7f22fd69c000 r-xp 00000000 fd:00 67296956 /usr/local/soft/jdk/jdk1.8.0_191/lib/amd64/jli/libjli.so
7f22fd69c000-7f22fd89b000 ---p 00017000 fd:00 67296956 /usr/local/soft/jdk/jdk1.8.0_191/lib/amd64/jli/libjli.so
7f22fd89b000-7f22fd89c000 r--p 00016000 fd:00 67296956 /usr/local/soft/jdk/jdk1.8.0_191/lib/amd64/jli/libjli.so
7f22fd89c000-7f22fd89d000 rw-p 00017000 fd:00 67296956 /usr/local/soft/jdk/jdk1.8.0_191/lib/amd64/jli/libjli.so
7f22fd89d000-7f22fd8b4000 r-xp 00000000 fd:00 1051052 /usr/lib64/libpthread-2.17.so
7f22fd8b4000-7f22fdab3000 ---p 00017000 fd:00 1051052 /usr/lib64/libpthread-2.17.so
7f22fdab3000-7f22fdab4000 r--p 00016000 fd:00 1051052 /usr/lib64/libpthread-2.17.so
7f22fdab4000-7f22fdab5000 rw-p 00017000 fd:00 1051052 /usr/lib64/libpthread-2.17.so
7f22fdab5000-7f22fdab9000 rw-p 00000000 00:00 0
7f22fdab9000-7f22fdadb000 r-xp 00000000 fd:00 33871 /usr/lib64/ld-2.17.so
7f22fdbc6000-7f22fdbce000 rw-s 00000000 fd:00 503948 /tmp/hsperfdata_root/9168
7f22fdbce000-7f22fdbd2000 ---p 00000000 00:00 0
7f22fdbd2000-7f22fdcd3000 rw-p 00000000 00:00 0
7f22fdcd4000-7f22fdcd8000 rw-p 00000000 00:00 0
7f22fdcd8000-7f22fdcd9000 r--p 00000000 00:00 0
7f22fdcd9000-7f22fdcda000 rw-p 00000000 00:00 0
7f22fdcda000-7f22fdcdb000 r--p 00021000 fd:00 33871 /usr/lib64/ld-2.17.so
7f22fdcdb000-7f22fdcdc000 rw-p 00022000 fd:00 33871 /usr/lib64/ld-2.17.so
7f22fdcdc000-7f22fdcdd000 rw-p 00000000 00:00 0
7fff013af000-7fff013d3000 rw-p 00000000 00:00 0 [stack]
7fff013ec000-7fff013ee000 r-xp 00000000 00:00 0 [vdso]
ffffffffff600000-ffffffffff601000 r-xp 00000000 00:00 0 [vsyscall]
VM Arguments:
Launcher Type: SUN_STANDARD
Environment Variables:
JAVA_HOME=/usr/local/soft/jdk/jdk1.8.0_191
LD_LIBRARY_PATH=:/opt/hadoop-3.1.2/lib:/opt/hadoop-3.1.2/lib/native
SHELL=/bin/bash
Signal Handlers:
SIGSEGV: [libjvm.so+0xaced60], sa_mask[0]=11111111011111111101111111111110, sa_flags=SA_RESTART|SA_SIGINFO
SIGBUS: [libjvm.so+0xaced60], sa_mask[0]=11111111011111111101111111111110, sa_flags=SA_RESTART|SA_SIGINFO
SIGFPE: [libjvm.so+0x907ca0], sa_mask[0]=11111111011111111101111111111110, sa_flags=SA_RESTART|SA_SIGINFO
SIGPIPE: [libjvm.so+0x907ca0], sa_mask[0]=11111111011111111101111111111110, sa_flags=SA_RESTART|SA_SIGINFO
SIGXFSZ: [libjvm.so+0x907ca0], sa_mask[0]=11111111011111111101111111111110, sa_flags=SA_RESTART|SA_SIGINFO
SIGILL: [libjvm.so+0x907ca0], sa_mask[0]=11111111011111111101111111111110, sa_flags=SA_RESTART|SA_SIGINFO
SIGUSR1: SIG_DFL, sa_mask[0]=00000000000000000000000000000000, sa_flags=none
SIGUSR2: [libjvm.so+0x907b70], sa_mask[0]=00000000000000000000000000000000, sa_flags=SA_RESTART|SA_SIGINFO
SIGHUP: SIG_DFL, sa_mask[0]=00000000000000000000000000000000, sa_flags=none
SIGINT: SIG_DFL, sa_mask[0]=00000000000000000000000000000000, sa_flags=none
SIGTERM: SIG_DFL, sa_mask[0]=00000000000000000000000000000000, sa_flags=none
SIGQUIT: SIG_DFL, sa_mask[0]=00000000000000000000000000000000, sa_flags=none

--------------- S Y S T E M ---------------
OS:CentOS Linux release 7.5.1804 (Core)
uname:Linux 3.10.0-862.el7.x86_64 #1 SMP Fri Apr 20 16:44:24 UTC 2018 x86_64
libc:glibc 2.17 NPTL 2.17
rlimit: STACK 8192k, CORE infinity, NPROC 71318, NOFILE 102400, AS infinity
load average:4.18 4.20 4.11
/proc/meminfo:
MemTotal: 18281924 kB
MemFree: 199928 kB
MemAvailable: 1747332 kB
Buffers: 0 kB
Cached: 1806360 kB
SwapCached: 2488 kB
Active: 15057324 kB
Inactive(anon): 1729572 kB
Active(file): 865640 kB
Inactive(file): 864956 kB
Unevictable: 0 kB
Mlocked: 0 kB
SwapTotal: 5238780 kB
SwapFree: 5196284 kB
Dirty: 11104 kB
Writeback: 0 kB
AnonPages: 15843208 kB
Mapped: 70700 kB
Shmem: 75776 kB
Slab: 202108 kB
SReclaimable: 156948 kB
SUnreclaim: 45160 kB
KernelStack: 14960 kB
PageTables: 38372 kB
NFS_Unstable: 0 kB
Bounce: 0 kB
WritebackTmp: 0 kB
CommitLimit: 14379740 kB
HardwareCorrupted: 0 kB
AnonHugePages: 2625536 kB
CmaTotal: 0 kB
CmaFree: 0 kB
HugePages_Total: 0
HugePages_Free: 0
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 2048 kB
DirectMap4k: 81792 kB
DirectMap2M: 5111808 kB
DirectMap1G: 13631488 kB
container (cgroup) information:
container_type: cgroupv1
cpu_cpuset_cpus: 0
cpu_memory_nodes: 0
active_processor_count: 1
cpu_quota: -1
cpu_period: 100000
cpu_shares: -1
memory_limit_in_bytes: -1
memory_and_swap_limit_in_bytes: -1
memory_soft_limit_in_bytes: -1
memory_usage_in_bytes: 18073210880
memory_max_usage_in_bytes: 0
CPU:total 1 (initial active 1) (1 cores per cpu, 1 threads per core) family 6 model 85 stepping 4, cmov, cx8, fxsr, mmx, sse, sse2, sse3, ssse3, sse4.1, sse4.2, popcnt, avx, avx2, aes, clmul, erms, rtm, 3dnowpref, lzcnt, tsc, tscinvbit, bmi1, bmi2, adx
/proc/cpuinfo:
processor : 0
vendor_id : GenuineIntel
cpu family : 6
model : 85
model name : Intel(R) Xeon(R) Gold 5118 CPU @ 2.30GHz
stepping : 4
microcode : 0x200004d
cpu MHz : 2294.738
cache size : 16896 KB
physical id : 0
siblings : 1
core id : 0
cpu cores : 1
apicid : 0
initial apicid : 0
fpu : yes
fpu_exception : yes
cpuid level : 22
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc eagerfpu pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec ibpb ibrs stibp arat pku ospke spec_ctrl intel_stibp arch_capabilities
bogomips : 4589.47
clflush size : 64
cache_alignment : 64
address sizes : 43 bits physical, 48 bits virtual
power management:
Memory: 4k page, physical 18281924k(199928k free), swap 5238780k(5196284k free)
vm_info: Java HotSpot(TM) 64-Bit Server VM (25.191-b12) for linux-amd64 JRE (1.8.0_191-b12), built on Oct 6 2018 05:43:09 by "java_re" with gcc 7.3.0
time: Tue Sep 17 09:54:53 2019
elapsed time: 0 seconds (0d 0h 0m 0s)

Cause analysis

Memory is clearly insufficient. Check memory usage:

free -h
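The figures in the hs_err log make the shortfall concrete. The sketch below compares the size the JVM tried to commit with MemAvailable plus SwapFree from the /proc/meminfo section of that log. It is a rough comparison only: whether the kernel actually grants an allocation also depends on its overcommit settings, which the original post does not show.

```shell
# Values copied from the hs_err log in this post.
requested_bytes=8502706176   # size the JVM tried to commit
mem_available_kb=1747332     # MemAvailable from /proc/meminfo
swap_free_kb=5196284         # SwapFree from /proc/meminfo

# Convert both sides to MiB (integer division).
requested_mib=$(( requested_bytes / 1024 / 1024 ))
available_mib=$(( (mem_available_kb + swap_free_kb) / 1024 ))

if [ "$requested_mib" -gt "$available_mib" ]; then
  fits=no
else
  fits=yes
fi

echo "requested:             ${requested_mib} MiB"
echo "available RAM + swap:  ${available_mib} MiB"
echo "request fits:          ${fits}"
```

The request works out to about 8108 MiB against about 6780 MiB of available memory plus free swap, which is consistent with the allocation failing at JVM startup.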

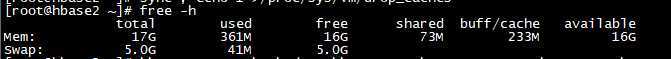

Solution

# Restart HBase and Hadoop
stop-hbase.sh
stop-all.sh
start-all.sh
start-hbase.sh
# Clear the caches
sync ; echo 1 >/proc/sys/vm/drop_caches
echo 2 >/proc/sys/vm/drop_caches
echo 3 >/proc/sys/vm/drop_caches
# Check memory usage again
free -h

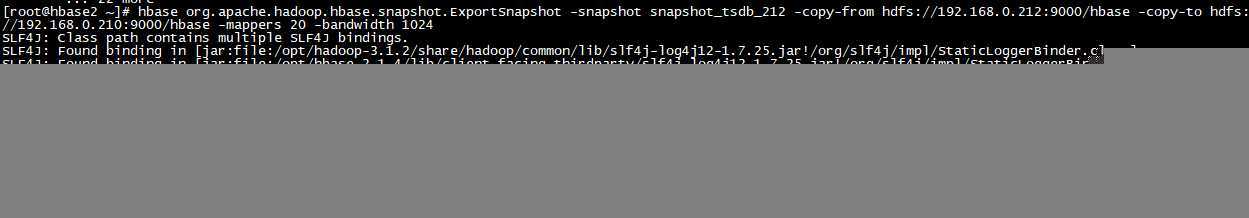

2. Application is added to the scheduler and is not yet activated

When migrating the snapshot again, the job stayed stuck and made no progress.

The web UI showed the following:

Application is added to the scheduler and is not yet activated. Skipping AM assignment as cluster resource is empty. Details :

AM Partition = <DEFAULT_PARTITION>; AM Resource Request = <memory:2048, vCores:1>; Queue Resource Limit for AM = <memory:0, vCores:0>;

User AM Resource Limit of the queue = <memory:0, vCores:0>; Queue AM Resource Usage = <memory:0, vCores:0>;

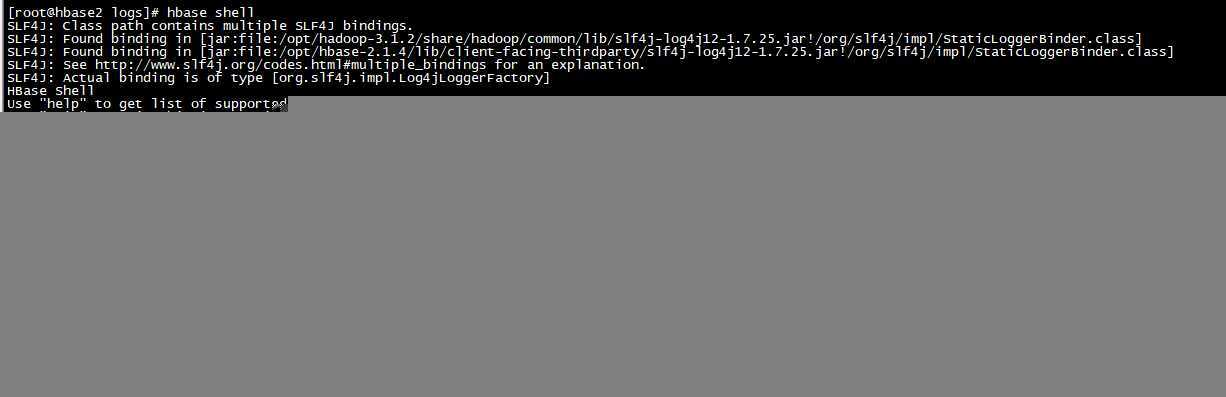

The HBase shell starts normally, but running a command returns an error:

ERROR: org.apache.hadoop.hbase.PleaseHoldException: Master is initializing
at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:2977)
at org.apache.hadoop.hbase.master.MasterRpcServices.getCompletedSnapshots(MasterRpcServices.java:949)
at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:413)
at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:130)
at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:324)
at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:304)

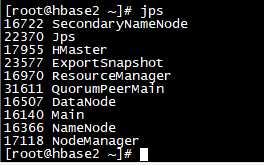

jps shows that HRegionServer has not started.

Check the HRegionServer log:

tailf /opt/hbase-2.1.4/logs/hbase-root-regionserver-hbase2.log -n 500

# Error output

2019-09-17 10:59:22,539 INFO [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-0] coordination.ZkSplitLogWorkerCoordination: successfully transitioned task /hbase/splitWAL/WALs%2Fhbase2%2C16020%2C1568617738890-splitting%2Fhbase2%252C16020%252C1568617738890.1568684725860 to final state ERR hbase2,16020,1568688376805

2019-09-17 10:59:22,539 INFO [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-0] handler.WALSplitterHandler: Worker hbase2,16020,1568688376805 done with task org.apache.hadoop.hbase.coordination.ZkSplitLogWorkerCoordination$ZkSplitTaskDetails@9885422 in 200ms. Status = ERR

2019-09-17 10:59:23,158 INFO [SplitLogWorker-hbase2:16020] coordination.ZkSplitLogWorkerCoordination: worker hbase2,16020,1568688376805 acquired task /hbase/splitWAL/WALs%2Fhbase2%2C16020%2C1568617738890-splitting%2Fhbase2%252C16020%252C1568617738890.1568683832472

2019-09-17 10:59:23,179 INFO [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1] wal.WALSplitter: Splitting WAL=hdfs://hbase2:9000/hbase/WALs/hbase2,16020,1568617738890-splitting/hbase2%2C16020%2C1568617738890.1568683832472, length=138158040

2019-09-17 10:59:23,183 INFO [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1] util.FSHDFSUtils: Recover lease on dfs file hdfs://hbase2:9000/hbase/WALs/hbase2,16020,1568617738890-splitting/hbase2%2C16020%2C1568617738890.1568683832472

2019-09-17 10:59:23,183 INFO [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1] util.FSHDFSUtils: Recovered lease, attempt=0 on file=hdfs://hbase2:9000/hbase/WALs/hbase2,16020,1568617738890-splitting/hbase2%2C16020%2C1568617738890.1568683832472 after 0ms

2019-09-17 10:59:23,201 WARN [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1-Writer-1] wal.WALSplitter: Found old edits file. It could be the result of a previous failed split attempt. Deleting hdfs://hbase2:9000/hbase/default/tsdb/0d0a4577bfb611d1f8f7b903e296b38f/recovered.edits/0000000000007153973-hbase2%2C16020%2C1568617738890.1568683832472.temp, length=0

2019-09-17 10:59:23,222 WARN [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1-Writer-0] wal.WALSplitter: Found old edits file. It could be the result of a previous failed split attempt. Deleting hdfs://hbase2:9000/hbase/default/tsdb/2501a70608674eab4974e7f8006dac12/recovered.edits/0000000000007214923-hbase2%2C16020%2C1568617738890.1568683832472.temp, length=0

2019-09-17 10:59:23,235 WARN [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1-Writer-2] wal.WALSplitter: Found old edits file. It could be the result of a previous failed split attempt. Deleting hdfs://hbase2:9000/hbase/default/tsdb/8d5fe84d54f4170f35d33dff7b830444/recovered.edits/0000000000006173823-hbase2%2C16020%2C1568617738890.1568683832472.temp, length=0

2019-09-17 10:59:23,411 WARN [Thread-8138] hdfs.DFSClient: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /hbase/default/tsdb/0d0a4577bfb611d1f8f7b903e296b38f/recovered.edits/0000000000007153973-hbase2%2C16020%2C1568617738890.1568683832472.temp could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678)

at org.apache.hadoop.ipc.Client.call(Client.java:1476)

at org.apache.hadoop.ipc.Client.call(Client.java:1413)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy18.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:418)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy19.addBlock(Unknown Source)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hbase.fs.HFileSystem$1.invoke(HFileSystem.java:372)

at com.sun.proxy.$Proxy20.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1603)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1388)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:554)

2019-09-17 10:59:23,412 ERROR [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1-Writer-1] wal.WALSplitter: Got while writing log entry to log

java.io.IOException: File /hbase/default/tsdb/0d0a4577bfb611d1f8f7b903e296b38f/recovered.edits/0000000000007153973-hbase2%2C16020%2C1568617738890.1568683832472.temp could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678)

at sun.reflect.GeneratedConstructorAccessor8.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:95)

at org.apache.hadoop.hbase.wal.WALSplitter$LogRecoveredEditsOutputSink.appendBuffer(WALSplitter.java:1601)

at org.apache.hadoop.hbase.wal.WALSplitter$LogRecoveredEditsOutputSink.append(WALSplitter.java:1559)

at org.apache.hadoop.hbase.wal.WALSplitter$WriterThread.writeBuffer(WALSplitter.java:1084)

at org.apache.hadoop.hbase.wal.WALSplitter$WriterThread.doRun(WALSplitter.java:1076)

at org.apache.hadoop.hbase.wal.WALSplitter$WriterThread.run(WALSplitter.java:1046)

Caused by: org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /hbase/default/tsdb/0d0a4577bfb611d1f8f7b903e296b38f/recovered.edits/0000000000007153973-hbase2%2C16020%2C1568617738890.1568683832472.temp could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678)

at org.apache.hadoop.ipc.Client.call(Client.java:1476)

at org.apache.hadoop.ipc.Client.call(Client.java:1413)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy18.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:418)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy19.addBlock(Unknown Source)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hbase.fs.HFileSystem$1.invoke(HFileSystem.java:372)

at com.sun.proxy.$Proxy20.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1603)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1388)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:554)

2019-09-17 10:59:23,412 ERROR [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1-Writer-1] wal.WALSplitter: Exiting thread

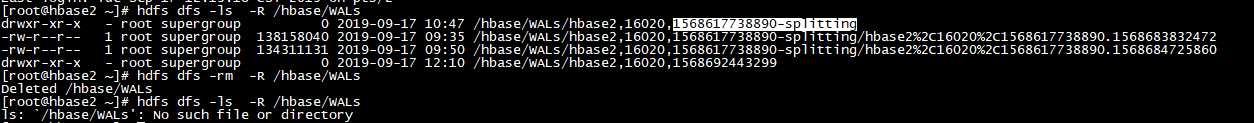

The log shows that the 1568617738890 WAL is being split over and over, so we delete that file and restart.

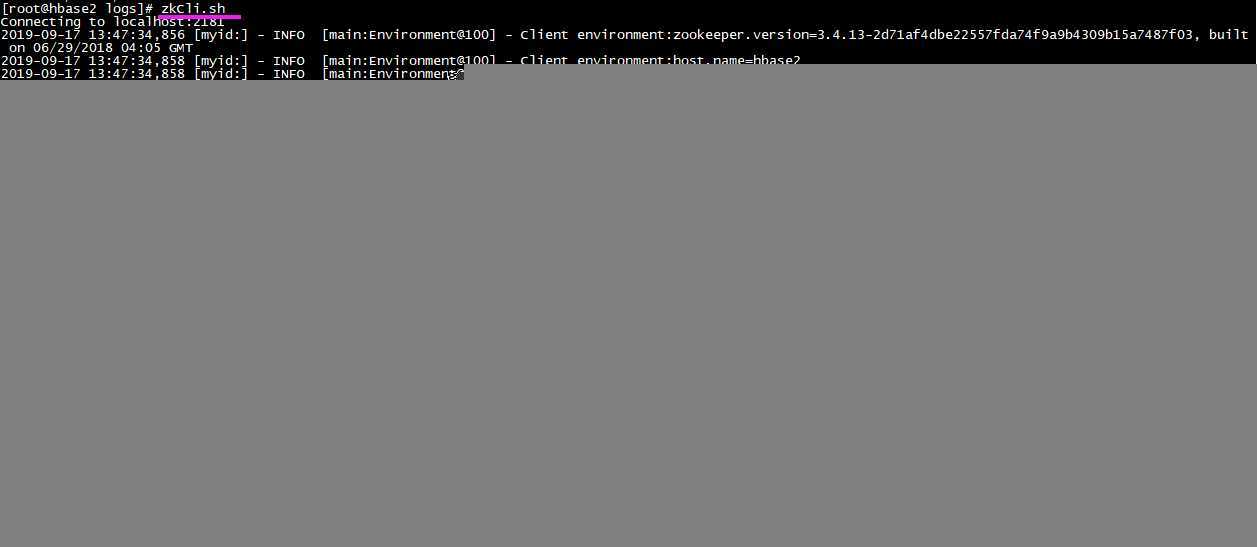

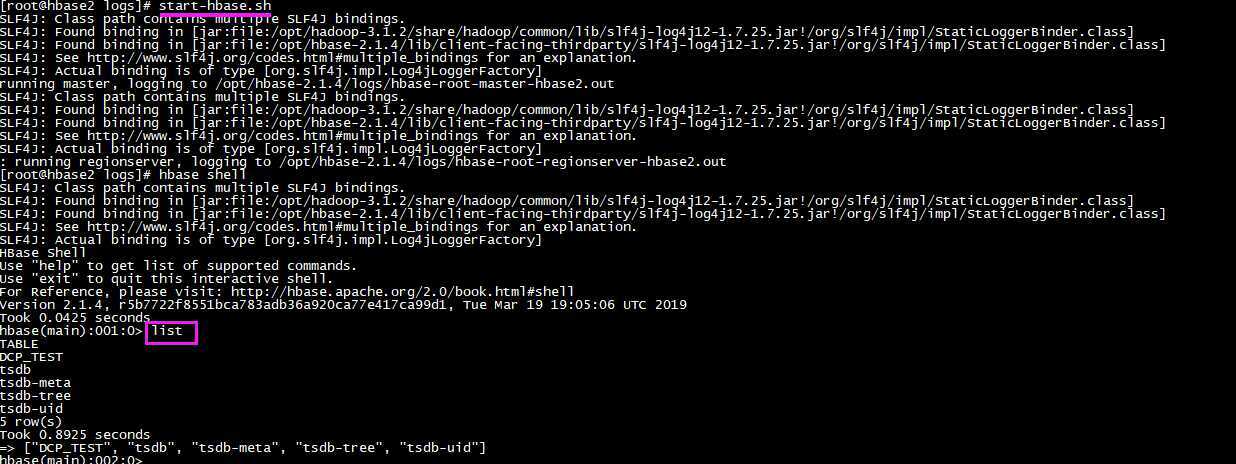

# Stop HBase
stop-hbase.sh
# List the WALs
hdfs dfs -ls -R /hbase/WALs
# Delete the WALs
hdfs dfs -rm -R /hbase/WALs
# Clear the HBase data in ZooKeeper
zkCli.sh
rmr /hbase
# Start HBase
start-hbase.sh
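Deleting /hbase/WALs can discard edits that were never flushed to HFiles, so a more cautious variant is to move the directory aside rather than remove it. The following is a minimal sketch of that idea, not the procedure used in the original post: the backup path /hbase-WALs-backup-SUFFIX and the `run` and `backup_wals` helpers are hypothetical, and the script only prints the commands unless DRY_RUN is set to 0. The ZooKeeper cleanup step is left out here.

```shell
# Dry-run by default: commands are only printed. Set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

# run CMD ARGS...: print the command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

# backup_wals SUFFIX
# Moves /hbase/WALs to /hbase-WALs-backup-SUFFIX (hypothetical path)
# instead of deleting it, with HBase stopped during the move.
backup_wals() {
  run stop-hbase.sh
  run hdfs dfs -mv /hbase/WALs "/hbase-WALs-backup-$1"
  run start-hbase.sh
}

backup_wals "$(date +%Y%m%d%H%M%S)"
```

Once the cluster is confirmed healthy, the backup directory can be removed with `hdfs dfs -rm -R`, the same command the original steps use on /hbase/WALs.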

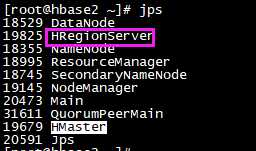

Run jps again.

HRegionServer has started successfully, so commands can now be run in the hbase shell.
