LB Clusters: LVS/keepalived


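This experiment builds on the previous LVS/DR experiment.

(The NAT, DR, and HA experiments all use nginx as the service, so the nginx installation and configuration steps are omitted here.)

Although LVS is now configured and load balancing works, testing reveals a problem: when the httpd process on one real server is stopped, the director still forwards requests to it, so some requests fail. A mechanism is needed to check the state of the real servers, and that is keepalived. Besides checking real server (rs) state, it can also monitor the state of a backup director. In other words, keepalived combines HA and LB and can provide HA cluster functionality, which of course requires a backup director.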
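The kind of check described above can be approximated by hand. The following is a minimal sketch and not keepalived's own implementation: `probe_tcp` and `healthy_servers` are hypothetical helper names, and the probe assumes bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command are available.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a TCP connection to host:port opens within
# the given number of seconds (default 3). Relies on bash's /dev/tcp device.
probe_tcp() {
    local host=$1 port=$2 secs=${3:-3}
    timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Print only the host:port entries for which the probe command succeeds.
# The probe is passed by name so it can be swapped for another check.
healthy_servers() {
    local probe=$1 rs
    shift
    for rs in "$@"; do
        if "$probe" "${rs%:*}" "${rs#*:}"; then
            echo "$rs"
        fi
    done
}

# Example: healthy_servers probe_tcp 10.72.4.37:80 10.72.4.39:80
```

A director that forwards only to the servers this prints would avoid the failed requests; keepalived automates the same idea and updates the LVS table itself.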

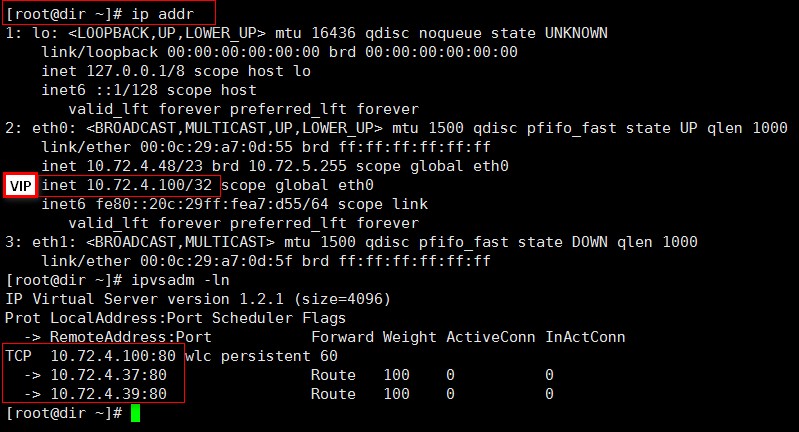

Test environment: three virtual machines: 10.72.4.48 (dir/master), 10.72.4.39 (rs2 and slave), 10.72.4.37 (rs1)

[root@dir ~]# ipvsadm -C  # clear the previous rules

[root@dir ~]# yum install -y keepalived  # install keepalived

[root@dir ~]# vim /etc/keepalived/keepalived.conf

    vrrp_instance VI_1 {
        state MASTER              # BACKUP on the backup server
        interface eth0
        virtual_router_id 51
        priority 100              # 90 on the backup server
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            10.72.4.100
        }
    }

    virtual_server 10.72.4.100 80 {
        delay_loop 6              # check real server health every 6 seconds
        lb_algo wlc               # LVS scheduling algorithm (weighted least-connection)
        lb_kind DR                # Direct Routing
        persistence_timeout 60    # connections from the same IP go to the same real server for 60 seconds
        protocol TCP              # the virtual service handles TCP traffic

        real_server 10.72.4.37 80 {
            weight 100            # weight
            TCP_CHECK {
                connect_timeout 10    # time out after 10 seconds without a response
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }

        real_server 10.72.4.39 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }
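After editing, it can help to confirm which virtual service and real servers the file actually declares. This is a small sketch under stated assumptions: `list_lvs_targets` is a hypothetical helper, and it assumes each `virtual_server` and `real_server` line carries the address and port as its second and third fields, as in the configuration above.

```shell
#!/bin/bash
# Hypothetical helper: print the virtual service and each real server declared
# in a keepalived.conf read from standard input.
list_lvs_targets() {
    awk '
        $1 == "virtual_server" { print "VIP " $2 ":" $3 }
        $1 == "real_server"    { print "RS  " $2 ":" $3 }
    '
}

# Example: list_lvs_targets < /etc/keepalived/keepalived.conf
```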

------------------------------------------------

[root@dir ~]# scp /etc/keepalived/keepalived.conf 10.72.4.39:/etc/keepalived/keepalived.conf  # copy to the backup

[root@rs2 ~]# vim /etc/keepalived/keepalived.conf  # change only the two settings below; nothing else needs to change

    vrrp_instance VI_1 {
        state BACKUP              # BACKUP on the backup server
        interface eth0
        virtual_router_id 51
        priority 90               # 90 on the backup server
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            10.72.4.100
        }
    }
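Because only `state` and `priority` differ between the two directors, the backup file can also be derived mechanically instead of edited by hand. A minimal sketch, assuming the master file uses exactly `state MASTER` and `priority 100` as above; `make_backup_conf` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: turn a master keepalived.conf (stdin) into the backup
# variant (stdout) by changing only the state and priority lines.
# In the second expression, \1 is the captured prefix and "90" is literal text.
make_backup_conf() {
    sed -e 's/^\([[:space:]]*state[[:space:]]\{1,\}\)MASTER/\1BACKUP/' \
        -e 's/^\([[:space:]]*priority[[:space:]]\{1,\}\)100/\190/'
}

# Example: make_backup_conf < /etc/keepalived/keepalived.conf
```

The output could be redirected to a file and copied to the backup with `scp`; comparing it against the master with `diff` should show exactly two changed lines.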

------------------------------------------

[root@dir ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@rs2 ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@rs1 ~]# vim /usr/local/sbin/lvs_nat.sh  # write the script

    #!/bin/bash
    vip=10.72.4.100
    # bind the VIP to the loopback interface so this real server accepts packets addressed to it
    ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up
    route add -host $vip lo:0
    # keep this real server from answering or announcing ARP for the VIP
    echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

[root@rs1 ~]# sh /usr/local/sbin/lvs_nat.sh  # run the script

[root@rs2 ~]# vim /usr/local/sbin/lvs_nat.sh  # write the script

    #!/bin/bash
    vip=10.72.4.100
    # bind the VIP to the loopback interface so this real server accepts packets addressed to it
    ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up
    route add -host $vip lo:0
    # keep this real server from answering or announcing ARP for the VIP
    echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

[root@rs2 ~]# sh /usr/local/sbin/lvs_nat.sh  # run the script
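On systems where `ifconfig` and `route` are not installed, the same real-server steps can be expressed with `ip` and `sysctl`. The sketch below only prints the commands rather than running them, so it is safe to try anywhere; `rs_vip_commands` is a hypothetical helper name, and the translation to iproute2 syntax is an assumption rather than something taken from the original setup.

```shell
#!/bin/bash
# Hypothetical helper: print (do not run) iproute2/sysctl commands that mirror
# the real-server script for a given VIP.
rs_vip_commands() {
    local vip=$1
    echo "ip addr add $vip/32 brd $vip dev lo label lo:0"
    echo "ip route add $vip/32 dev lo"
    echo "sysctl -w net.ipv4.conf.lo.arp_ignore=1"
    echo "sysctl -w net.ipv4.conf.lo.arp_announce=2"
    echo "sysctl -w net.ipv4.conf.all.arp_ignore=1"
    echo "sysctl -w net.ipv4.conf.all.arp_announce=2"
}

rs_vip_commands 10.72.4.100
```

Review the printed commands before running them as root on each real server.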

[root@dir ~]# vim /usr/local/sbin/lvs_dr.sh  # write a script on dir that enables IP forwarding

    #!/bin/bash
    echo 1 > /proc/sys/net/ipv4/ip_forward

[root@dir ~]# sh /usr/local/sbin/lvs_dr.sh  # run the script

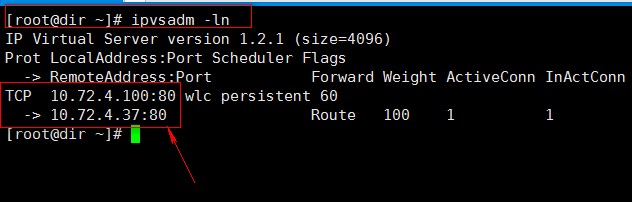

[root@rs2 ~]# /etc/init.d/nginx stop  # stop nginx on rs2

Stopping nginx: [ OK ]

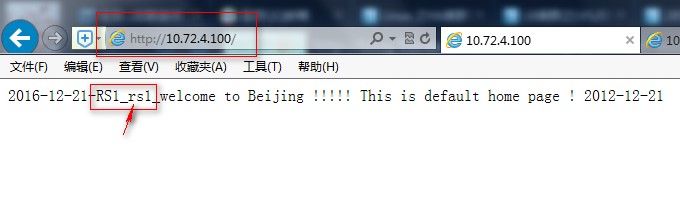

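After nginx is stopped on rs2, refreshing 10.72.4.100 in IE no longer shows rs2's content, and the master director removes rs2's IP from the pool. Restarting nginx on rs2 restores normal operation.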
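One way to confirm the removal from the command line is to inspect the kernel's LVS table with `ipvsadm -Ln` on the director. The following is a small sketch: `active_real_servers` is a hypothetical helper, and it assumes the usual `ipvsadm -Ln` layout in which every real server line starts with `->`.

```shell
#!/bin/bash
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print the
# address:port of every real server currently in the table.
active_real_servers() {
    awk '$1 == "->" && $2 != "RemoteAddress:Port" { print $2 }'
}

# Example (run as root on the director):
#   ipvsadm -Ln | active_real_servers
```

While nginx is stopped on rs2, 10.72.4.39:80 should be missing from the list, and it should reappear once nginx is started again.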

--------------------------------------------------------

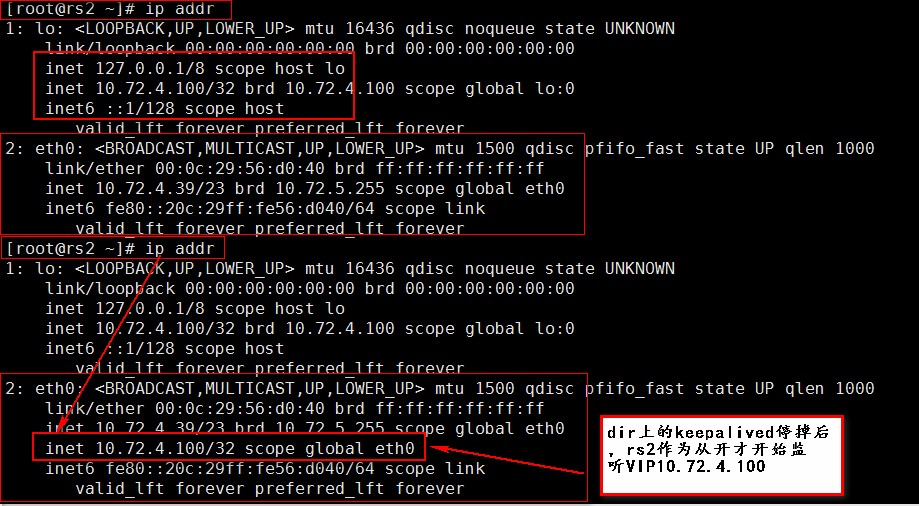

[root@dir ~]# /etc/init.d/keepalived stop  # stop keepalived on the master dir; keepalived on the backup (rs2) takes over as master and keeps the service running

Stopping keepalived: [ OK ]

Starting keepalived on the master again restores the previous state.
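The failover and failback behaviour follows from the two priorities (100 on dir, 90 on rs2). The sketch below is a deliberately simplified model, not keepalived's actual VRRP logic: it assumes the live node with the highest priority holds the VIP and ignores ties and options such as `nopreempt`. `elect_master` is a hypothetical helper name.

```shell
#!/bin/bash
# Simplified model (assumption): among nodes that are up, the one with the
# highest priority holds the VIP. Input lines: "<name> <priority> <up|down>".
elect_master() {
    awk '$3 == "up" && $2 + 0 > best { best = $2 + 0; name = $1 }
         END { if (name != "") print name }'
}

printf 'dir 100 up\nrs2 90 up\n'   | elect_master   # both running: prints dir
printf 'dir 100 down\nrs2 90 up\n' | elect_master   # keepalived stopped on dir: prints rs2
```

On the real machines, checking which host currently lists 10.72.4.100 in the output of `ip addr show eth0` shows who holds the VIP.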

This article comes from the "CBO#Boy_Linux之路" blog. Please retain this attribution: http://20151213start.blog.51cto.com/9472657/1885165
