Hands-On: Load Balancing and High Availability with LVS + Keepalived


1. Software Overview

LVS (Linux Virtual Server) is a virtual server cluster system that provides simple load balancing on Linux.

LVS supports 4 load-balancing (forwarding) modes:

a. DR mode (direct routing)

b. NAT mode

c. Tunnel mode (IP tunneling)

d. Full NAT mode

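LVS load balancing offers these 8 common scheduling algorithms:

(rr, wrr, lc, wlc, lblc, lblcr, dh, sh)

The principles behind each mode are not covered here. This article uses DR mode with round-robin (rr) scheduling. The keepalived configuration below sets lb_algo wrr, but because both real servers have weight 1, wrr distributes requests exactly like rr.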
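To make the difference between rr and wrr concrete, here is a toy bash simulation. It is not the kernel's ip_vs_rr/ip_vs_wrr code, and the function names rr_schedule and wrr_schedule are hypothetical. The wrr variant is simplified: it reproduces the weight proportions, but not necessarily the exact interleaving the kernel scheduler produces.

```shell
#!/usr/bin/env bash
# Toy simulation of LVS rr and wrr scheduling (illustration only).

# rr: hand out the servers strictly in turn.
# Usage: rr_schedule N server1 server2 ...
rr_schedule() {
    local n=$1
    shift
    local servers=("$@")
    local i
    for ((i = 0; i < n; i++)); do
        echo "${servers[i % ${#servers[@]}]}"
    done
}

# wrr (simplified): each server appears in the rotation as many times
# as its weight, then the rotation is served round-robin.
# Usage: wrr_schedule N ip:weight ip:weight ...
wrr_schedule() {
    local n=$1
    shift
    local ring=() entry ip w i
    for entry in "$@"; do
        ip=${entry%%:*}   # part before the colon
        w=${entry##*:}    # weight after the colon
        for ((i = 0; i < w; i++)); do
            ring+=("$ip")
        done
    done
    rr_schedule "$n" "${ring[@]}"
}
```

For example, rr_schedule 4 10.89.3.100 10.89.3.101 prints the two real servers alternately, and wrr_schedule 4 10.89.3.100:1 10.89.3.101:1 prints the same sequence, which is why lb_algo wrr with equal weights behaves like rr.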

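Keepalived runs on the LVS directors. Its main jobs are health-checking the real servers (isolating any that fail) and failing over between the load balancers, which improves the availability of the whole system.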

2. Installation

Environment

cat /etc/redhat-release
CentOS release 6.8 (Final)
uname -r
2.6.32-642.6.1.el6.x86_64

Software packages: ipvsadm-1.26.tar.gz, keepalived-1.1.19.tar.gz

Host list

| Server | IP |
|---|---|
| LVS, master director (LVS-master) | 10.89.3.102 |
| Keepalived, backup director (LVS-backup) | 10.89.3.103 |
| lamp, web1 (real_server) | 10.89.3.100 |
| lnmp, web2 (real_server) | 10.89.3.101 |
| Virtual IP bound by LVS (VIP) | 10.89.3.168 |

Installing and configuring LVS + keepalived: both directors (LVS and Keepalived) need both packages.

Installing the LVS software (ipvsadm)

ln -s /usr/src/kernels/2.6.32-642.6.1.el6.x86_64/ /usr/src/linux
yum install 'libnl*' 'popt*' -y
tar zxf ipvsadm-1.26.tar.gz
cd ipvsadm-1.26
make
make install

modprobe ip_vs          # load the ip_vs module into the kernel
lsmod | grep ip_vs      # check that the module is loaded
ip_vs 126897 0
libcrc32c 1246 1 ip_vs
ipv6 336282 265 ip_vs
# Output like the above means the installation is OK.
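lsmod formats the contents of /proc/modules, so the same check can be scripted without depending on lsmod's column layout. A minimal sketch, assuming bash; ipvs_loaded is a hypothetical helper name, and its optional file argument exists only so the function can be tried against sample text.

```shell
#!/usr/bin/env bash
# Return success if the ip_vs module is loaded.
# Optional $1: a file in /proc/modules format (defaults to the live one).
ipvs_loaded() {
    local modules_file=${1:-/proc/modules}
    # Each /proc/modules line starts with the module name followed by a space,
    # e.g. "ip_vs 126897 0 - Live ...".
    grep -q '^ip_vs ' "$modules_file"
}

# Usage on a director:
#   ipvs_loaded && echo "ip_vs loaded" || echo "ip_vs missing: run modprobe ip_vs"
```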

Installing keepalived

ln -s /usr/src/kernels/2.6.32-642.6.1.el6.x86_64 /usr/src/linux    # skip if the link was already created for ipvsadm
yum install openssl openssl-devel -y
tar xf keepalived-1.1.19.tar.gz
cd keepalived-1.1.19
./configure
make
make install

Set up keepalived to start as a standard service

/bin/cp /usr/local/etc/rc.d/init.d/keepalived /etc/init.d/
/bin/cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/
mkdir -p /etc/keepalived
/bin/cp /usr/local/etc/keepalived/keepalived.conf /etc/keepalived/
/bin/cp /usr/local/sbin/keepalived /usr/sbin/
/etc/init.d/keepalived start

ps -ef | grep keep
root 6342 1 0 17:42 ? 00:00:00 keepalived -D
root 6344 6342 0 17:42 ? 00:00:00 keepalived -D
root 6345 6342 0 17:42 ? 00:00:00 keepalived -D
root 6347 3490 0 17:42 pts/0 00:00:00 grep keep
# Three "keepalived -D" processes (the parent plus its VRRP and health-checker children) mean the installation is OK.
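The check above counts lines by eye, and grep keep also matches the grep process itself. A small sketch that counts only real keepalived processes from ps -ef output; count_keepalived is a hypothetical helper, and it assumes the default ps -ef layout where the command name is the 8th field.

```shell
#!/usr/bin/env bash
# Count keepalived processes in `ps -ef` output read from stdin.
# Matching the command column (field 8) excludes the "grep keep" line.
count_keepalived() {
    awk '$8 == "keepalived" || $8 ~ /\/keepalived$/ { n++ } END { print n + 0 }'
}

# Usage on a director (3 is expected):
#   [ "$(ps -ef | count_keepalived)" -eq 3 ] && echo "keepalived OK"
```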

3. Configuring keepalived

LVS-master configuration file:

cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
    }
    notification_email_from [email protected]
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_7
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 55
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.89.3.168/24
    }
}

virtual_server 10.89.3.168 80 {
    delay_loop 6
    lb_algo wrr
    lb_kind DR
    nat_mask 255.255.255.0
    persistence_timeout 300
    protocol TCP

    real_server 10.89.3.100 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 8
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }

    real_server 10.89.3.101 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 8
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
}
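keepalived builds the IPVS table itself from the virtual_server block, so no ipvsadm commands are needed in this setup. To see what that block amounts to, the sketch below prints (but does not run) roughly equivalent ipvsadm commands. ipvs_dr_commands is a hypothetical helper, and the output covers only the static table: it does not replicate keepalived's TCP_CHECK health checks, which take a real server out of the table when its port stops answering.

```shell
#!/usr/bin/env bash
# Print ipvsadm commands for a DR-mode virtual service (dry run only).
# Usage: ipvs_dr_commands VIP:PORT SCHEDULER PERSIST_SECONDS RIP:PORT:WEIGHT...
ipvs_dr_commands() {
    local vs=$1 sched=$2 persist=$3
    shift 3
    local rs
    # -A add virtual service, -t TCP service, -s scheduler, -p persistence timeout
    echo "ipvsadm -A -t $vs -s $sched -p $persist"
    for rs in "$@"; do
        # -a add real server, -r real server, -g DR (gatewaying), -w weight
        echo "ipvsadm -a -t $vs -r ${rs%:*} -g -w ${rs##*:}"
    done
}
```

With this article's values, ipvs_dr_commands 10.89.3.168:80 wrr 300 10.89.3.100:80:1 10.89.3.101:80:1 prints one ipvsadm -A line followed by one ipvsadm -a line per real server. Once keepalived is running, ipvsadm -Ln should show a comparable table.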

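LVS-backup configuration file (identical to the master's except for router_id, state BACKUP and the lower priority 100):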

cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
    }
    notification_email_from [email protected]
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_2
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 55
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.89.3.168/24
    }
}

virtual_server 10.89.3.168 80 {
    delay_loop 6
    lb_algo wrr
    lb_kind DR
    nat_mask 255.255.255.0
    persistence_timeout 300
    protocol TCP

    real_server 10.89.3.100 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 8
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }

    real_server 10.89.3.101 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 8
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
}
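Because the two files differ in only three directives, one option is to generate the backup file from the master's rather than maintain both by hand. make_backup_conf is a hypothetical helper whose sed expressions hard-code this article's values (LVS_7/LVS_2, MASTER/BACKUP, 150/100); they would need adjusting for any other setup.

```shell
#!/usr/bin/env bash
# Derive the backup keepalived.conf from the master's (stdin -> stdout).
# Leading indentation is preserved; only the three differing directives change.
make_backup_conf() {
    sed -e 's/^\([[:space:]]*\)router_id LVS_7$/\1router_id LVS_2/' \
        -e 's/^\([[:space:]]*\)state MASTER$/\1state BACKUP/' \
        -e 's/^\([[:space:]]*\)priority 150$/\1priority 100/'
}

# Usage on the master:
#   make_backup_conf < /etc/keepalived/keepalived.conf > keepalived.conf.backup
# then copy keepalived.conf.backup to /etc/keepalived/keepalived.conf on LVS-backup.
```

The anchored patterns leave virtual_router_id 55 untouched, since it must stay identical on both directors.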

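Real server configuration: both real servers use the same script. In DR mode the director forwards each packet by rewriting only its destination MAC address, so every real server must hold the VIP on its loopback interface (to accept packets addressed to the VIP) and must not answer ARP requests for the VIP (so that clients and the gateway keep sending VIP traffic to the director).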

cat /server/scripts/ipvs_client.sh
#!/bin/bash
# description: Config realserver lo and apply noarp
SNS_VIP=10.89.3.168
. /etc/rc.d/init.d/functions

case "$1" in
start)
    # Bind the VIP to the loopback alias lo:0 with a /32 mask
    /sbin/ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP
    /sbin/route add -host $SNS_VIP dev lo:0
    # arp_ignore=1: reply only for addresses configured on the receiving interface
    # arp_announce=2: never use the VIP on lo as the source of outgoing ARP
    echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
    # Reload /etc/sysctl.conf (the values above are not persisted there)
    sysctl -p >/dev/null 2>&1
    echo "RealServer Start OK"
    ;;
stop)
    # Remove the host route before taking lo:0 down
    /sbin/route del -host $SNS_VIP dev lo:0 >/dev/null 2>&1
    /sbin/ifconfig lo:0 down
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
    echo "RealServer Stopped"
    ;;
*)
    echo "Usage: $0 {start|stop}"
    exit 1
esac
exit 0
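After running ipvs_client.sh start on a real server, it is worth confirming that the four ARP parameters actually hold the expected values. check_realserver_arp is a hypothetical helper; it reads /proc/sys/net/ipv4/conf by default and accepts another base directory only so it can be tried on sample files.

```shell
#!/usr/bin/env bash
# Verify the real-server ARP settings; prints each mismatch, returns 1 if any.
# Optional $1: base directory (defaults to /proc/sys/net/ipv4/conf).
check_realserver_arp() {
    local base=${1:-/proc/sys/net/ipv4/conf} rc=0 dev
    for dev in lo all; do
        # Expected arp_ignore=1 on both lo and all
        [ "$(cat "$base/$dev/arp_ignore")" = 1 ] || { echo "$dev/arp_ignore != 1"; rc=1; }
        # Expected arp_announce=2 on both lo and all
        [ "$(cat "$base/$dev/arp_announce")" = 2 ] || { echo "$dev/arp_announce != 2"; rc=1; }
    done
    return $rc
}

# Usage on web1/web2:
#   check_realserver_arp && echo "ARP settings OK"
```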

----------------------------------------------

4. Testing

4.1 Testing VIP failover

# Use ip add to see which node holds the VIP. First, on LVS-master (10.89.3.102):

/etc/init.d/keepalived start

Starting keepalived: [ OK ]

ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:4e:ca:a4 brd ff:ff:ff:ff:ff:ff

inet 10.89.3.102/24 brd 10.89.3.255 scope global eth0

inet 10.89.3.168/24 scope global secondary eth0

inet6 fe80::20c:29ff:fe4e:caa4/64 scope link

valid_lft forever preferred_lft forever

----------------------------------------------------------------

On LVS-backup (10.89.3.103):

ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:3f:7c:0b brd ff:ff:ff:ff:ff:ff

inet 10.89.3.103/24 brd 10.89.3.255 scope global eth0

inet6 fe80::20c:29ff:fe3f:7c0b/64 scope link

valid_lft forever preferred_lft forever

------------------------------------------------------------------

# The VIP is currently on the LVS-master server. Now stop the Keepalived service on that server:

/etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:4e:ca:a4 brd ff:ff:ff:ff:ff:ff

inet 10.89.3.102/24 brd 10.89.3.255 scope global eth0

inet6 fe80::20c:29ff:fe4e:caa4/64 scope link

valid_lft forever preferred_lft forever

# The VIP has floated over to the other node. Check on LVS-backup:

ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:3f:7c:0b brd ff:ff:ff:ff:ff:ff

inet 10.89.3.103/24 brd 10.89.3.255 scope global eth0

inet 10.89.3.168/24 scope global secondary eth0

inet6 fe80::20c:29ff:fe3f:7c0b/64 scope link

valid_lft forever preferred_lft forever
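To watch the failover happen rather than running ip add by hand, the check can be scripted. holds_vip is a hypothetical helper that reads ip add output on stdin and defaults to this article's VIP, 10.89.3.168. It is meant for the directors only: the real servers also carry the VIP (on lo:0), so it would report true there as well.

```shell
#!/usr/bin/env bash
# Succeed if the "ip add" output on stdin shows the VIP.
# Optional $1: VIP to look for (defaults to 10.89.3.168).
holds_vip() {
    local vip=${1:-10.89.3.168}
    # Fixed-string match on "inet <VIP>/", as printed by ip add
    grep -qF "inet $vip/"
}

# Usage on a director, printing the VIP state once per second:
#   while sleep 1; do
#       if ip add | holds_vip; then echo "$(date +%T) VIP here"; else echo "$(date +%T) no VIP"; fi
#   done
```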

---------------------------------------------------------------------

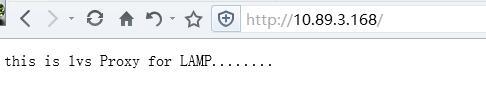

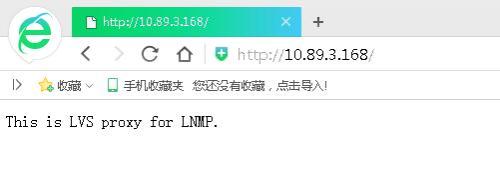

4.2 Testing the web service
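The article does not show how the web test was run. One way to check the distribution from a client is to request the VIP repeatedly and count which server answered. This sketch assumes each web server's page contains a marker such as web1 or web2 (a hypothetical convention, not shown in the article); tally_backends is likewise a hypothetical name. With persistence_timeout 300, every request from one client IP goes to the same real server for 300 seconds, so seeing both servers requires testing from different client IPs.

```shell
#!/usr/bin/env bash
# Count how often each non-empty line (backend marker) appears on stdin.
# Output: "<marker> <count>", sorted by marker.
tally_backends() {
    awk 'NF { n[$0]++ } END { for (k in n) print k, n[k] }' | sort
}

# Usage from a client (marker strings are an assumption):
#   for i in $(seq 1 10); do
#       curl -s http://10.89.3.168/ | grep -o -m1 'web[12]'
#   done | tally_backends
```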

4.3 Checking connections

ipvsadm -Lnc

IPVS connection entries

pro expire state source virtual destination

TCP 01:01 FIN_WAIT 10.89.1.158:62899 10.89.3.168:80 10.89.3.101:80

TCP 00:56 FIN_WAIT 10.89.3.1:58996 10.89.3.168:80 10.89.3.100:80

TCP 00:56 FIN_WAIT 10.89.3.1:58997 10.89.3.168:80 10.89.3.100:80

TCP 02:06 NONE 10.89.3.1:0 10.89.3.168:80 10.89.3.100:80

TCP 03:44 NONE 10.89.1.158:0 10.89.3.168:80 10.89.3.101:80

TCP 01:51 FIN_WAIT 10.89.1.158:62603 10.89.3.168:80 10.89.3.101:80
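On a busy director the -Lnc list gets long. This awk sketch (summarize_ipvs_conns is a hypothetical name) counts entries per real server from ipvsadm -Lnc output, reporting persistence templates (state NONE) separately from ordinary connection entries.

```shell
#!/usr/bin/env bash
# Summarize `ipvsadm -Lnc` output on stdin, one line per real server.
# Fields: 1=pro 2=expire 3=state 4=source 5=virtual 6=destination
summarize_ipvs_conns() {
    awk '$1 == "TCP" || $1 == "UDP" {
             if ($3 == "NONE") tmpl[$6]++; else conn[$6]++
             seen[$6] = 1
         }
         END {
             for (d in seen) printf "%s conns=%d templates=%d\n", d, conn[d], tmpl[d]
         }' | sort
}

# Usage on the director holding the VIP:
#   ipvsadm -Lnc | summarize_ipvs_conns
```

Fed the output above, it prints 10.89.3.100:80 conns=2 templates=1 and 10.89.3.101:80 conns=2 templates=1.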

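In the output above, the entries in state NONE with client port 0 are persistence templates created by persistence_timeout 300: client 10.89.3.1 is pinned to 10.89.3.100 and client 10.89.1.158 to 10.89.3.101, so both real servers receive traffic.

Summary: LVS + Keepalived provides both load balancing and high availability. This completes the verification of the setup.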

This article originally appeared on the blog "知识改变命运" (Knowledge Changes Destiny); please retain this attribution: http://ahtornado.blog.51cto.com/4826737/1866716
