Docker 1.12 Cluster

Posted MOSS

Environment

Two virtual machines on VMware, with NAT networking

node139: 192.168.190.139

node140: 192.168.190.140

Set the hostname

Using 139 as an example

[demo@localhost ~]$ hostname

localhost.localdomain

[demo@localhost ~]$ sudo hostnamectl set-hostname node139

[sudo] password for demo:

[demo@localhost ~]$ hostname

node139

Edit /etc/hosts so that it contains the following

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 node139

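Apply the same settings on machine 140 (with node140 as the hostname)

Install Docker 1.12 using yum

1. Log in to your machine as a user with sudo or root privileges.

2. Make sure your existing packages are up to date

$ sudo yum update

3. Add the yum repository

$ sudo tee /etc/yum.repos.d/docker.repo <<-'EOF'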

[dockerrepo]

name=Docker Repository

baseurl=https://yum.dockerproject.org/repo/main/centos/7/

enabled=1

gpgcheck=1

gpgkey=https://yum.dockerproject.org/gpg

EOF

4. Install

$ sudo yum install docker-engine

5. Enable the service.

$ sudo systemctl enable docker.service

6. Start the daemon

$ sudo systemctl start docker

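To avoid typing sudo for every docker command, create a Unix group named docker and add your user to it

Create the docker group

$ sudo groupadd docker

Add your user to the docker group.

$ sudo usermod -aG docker your_username

Log out and log back in so the group membership takes effect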

Make Docker listen on a remote port so the API can be called remotely

[demo@node139 ~]$ sudo vim /lib/systemd/system/docker.service

Change the ExecStart line so that it carries the -H options shown below

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock

Restart Docker

[demo@node139 ~]$ sudo systemctl daemon-reload

[demo@node139 ~]$ sudo systemctl restart docker.service

Do the same on machine 140
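Editing the unit file under /lib/systemd/system works, but a package upgrade may overwrite it. A systemd drop-in is one possible alternative. The sketch below only prints the override content; the function name and the drop-in file name remote-api.conf are illustrative choices, not something taken from the setup above. Port 2375 is plain, unauthenticated HTTP, so this suits an isolated lab network such as the NAT setup used here.

```shell
#!/bin/sh
# Print a systemd drop-in that replaces ExecStart so dockerd also listens on TCP.
# The empty "ExecStart=" line clears the value from the packaged unit file.
# The port argument defaults to 2375, the value used in this article.
render_docker_dropin() {
    port="${1:-2375}"
    printf '%s\n' \
        '[Service]' \
        'ExecStart=' \
        "ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:${port} -H unix:///var/run/docker.sock"
}

# Usage on each node (remote-api.conf is a hypothetical file name):
#   sudo mkdir -p /etc/systemd/system/docker.service.d
#   render_docker_dropin | sudo tee /etc/systemd/system/docker.service.d/remote-api.conf
#   sudo systemctl daemon-reload && sudo systemctl restart docker.service
#   curl http://192.168.190.139:2375/version   # returns JSON if the API is reachable
```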

Fixing the docker info warning that bridge-nf-call-iptables is disabled

[demo@node139 ~]$ sudo vim /etc/sysctl.conf

Add the following lines

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

Do the same on machine 140
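Settings written to /etc/sysctl.conf are only read when the file is loaded, for example with sysctl -p. As a rough sketch, the helper below appends the three keys to a file given as an argument and skips keys that are already there; the function name is an assumption made for illustration.

```shell
#!/bin/sh
# Append the three bridge netfilter settings to a sysctl file, skipping keys
# that are already present so the function can safely be run more than once.
add_bridge_nf_settings() {
    target="$1"
    touch "$target"
    for key in ip6tables iptables arptables; do
        name="net.bridge.bridge-nf-call-${key}"
        grep -q "^${name}[ =]" "$target" || echo "${name} = 1" >> "$target"
    done
}

# Usage on each node, as root:
#   add_bridge_nf_settings /etc/sysctl.conf
#   sysctl -p          # load /etc/sysctl.conf without rebooting
#   docker info        # check whether the bridge-nf-call warnings are gone
```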

Swarm cluster

1. Open the firewall ports

Open ports between the hosts

The following ports must be available. On some systems, these ports are open by default.

- TCP port 2377 for cluster management communications

- TCP and UDP port 7946 for communication among nodes

- TCP and UDP port 4789 for overlay network traffic

firewall-cmd --zone=public --add-port=2377/tcp --permanent

firewall-cmd --zone=public --add-port=4789/tcp --permanent

firewall-cmd --zone=public --add-port=4789/udp --permanent

firewall-cmd --zone=public --add-port=7946/tcp --permanent

firewall-cmd --zone=public --add-port=7946/udp --permanent
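Rules added with --permanent are stored in the permanent configuration and do not affect the running firewall until it is reloaded. The sketch below prints the same five commands followed by a reload, so the list can be reviewed before it is run; the function name is an illustrative assumption.

```shell
#!/bin/sh
# Print the firewall-cmd invocations for the swarm ports listed above,
# followed by the reload that activates the --permanent rules.
swarm_firewall_commands() {
    zone="${1:-public}"
    for port in 2377/tcp 7946/tcp 7946/udp 4789/tcp 4789/udp; do
        echo "firewall-cmd --zone=${zone} --add-port=${port} --permanent"
    done
    echo "firewall-cmd --reload"
}

# Usage: review the output, then run it as root on every node:
#   swarm_firewall_commands | sudo sh
#   sudo firewall-cmd --zone=public --list-ports
```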

2. Initialize the swarm manager node

[demo@node139 ~]$ docker swarm init --advertise-addr 192.168.190.139

Swarm initialized: current node (cpm3a11fqlvrk5e3xp7zsazum) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join \

--token SWMTKN-1-5osh8sobuw823tdts3unsyww6uj6pu32pqucijtjip1syra6z7-chme7butzz1gdz5s15nl99g2x \

192.168.190.139:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

3. View node information

[demo@node139 ~]$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

cpm3a11fqlvrk5e3xp7zsazum * node139 Ready Active Leader

4. Add a swarm worker node

View the worker token. To join a node as a manager instead, run docker swarm join-token manager to see that token

[demo@node139 ~]$ docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join \

--token SWMTKN-1-5osh8sobuw823tdts3unsyww6uj6pu32pqucijtjip1syra6z7-chme7butzz1gdz5s15nl99g2x \

192.168.190.139:2377

Switch to machine 140

[demo@node140 ~]$ docker swarm join \

> --token SWMTKN-1-5osh8sobuw823tdts3unsyww6uj6pu32pqucijtjip1syra6z7-chme7butzz1gdz5s15nl99g2x \

> 192.168.190.139:2377

This node joined a swarm as a worker.

View node information on machine 139

[demo@node139 ~]$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

9rrk4thpqq06pxo6u7zoomcyw node140 Ready Active

cpm3a11fqlvrk5e3xp7zsazum * node139 Ready Active Leader
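When more workers are added, copying the token by hand gets tedious. As a small sketch, the helper below assembles the join command from a token and the manager address; the function name is an assumption, while the -q flag of docker swarm join-token (print only the token) is part of the Docker CLI.

```shell
#!/bin/sh
# Build the join command for a worker from a token and the manager address.
# 2377 is the cluster management port opened in the firewall step.
swarm_join_command() {
    token="$1"
    manager_ip="$2"
    echo "docker swarm join --token ${token} ${manager_ip}:2377"
}

# Usage on the manager (node139):
#   token="$(docker swarm join-token -q worker)"
#   swarm_join_command "$token" 192.168.190.139
# then run the printed command on the node that should join, e.g. node140.
```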

Create the network

[demo@node139 ~]$ docker network create -d overlay mynet

8peggzi7rk1a06g079rtq048r

Create the service

docker service create --replicas 2 --name logapi --network mynet -p 6000:60000 logapi1_0

To mount a directory, add the --mount parameter:

docker service create --replicas 4 --name logapi --network mynet --mount type=bind,dst=/var/log/,src=/var/log -p 6000:60000 logapi1_0

[demo@node139 /]$ docker service create --replicas 4 --name logapi --network mynet -p 6000:60000 logapi1_0

94i7tb0fohz0xuzy69ljw18s9

[demo@node139 /]$ docker service ps logapi

[demo@node139 ~]$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fbc336872210 logapi1_0:latest "dotnet YiChe.App.Log" 5 minutes ago Up 5 minutes 60000/tcp logapi.4.b8i17ujd2zqyw7bigchwvfjfo

19a058bce9b4 logapi1_0:latest "dotnet YiChe.App.Log" 5 minutes ago Up 5 minutes 60000/tcp logapi.1.b3gxlsmvtrsl81okyl3ww27lm

[demo@node140 bin]$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

beb939249cfa logapi1_0:latest "dotnet YiChe.App.Log" 6 minutes ago Up 6 minutes 60000/tcp logapi.2.775g8phtr0i9p9xpou29pkk2v

24a1363d1a33 logapi1_0:latest "dotnet YiChe.App.Log" 6 minutes ago Up 6 minutes 60000/tcp logapi.3.6cihlua6w85vddnhuttuseu5i

Access the service

Call the API 4 times through port 6000 on each node; every request succeeds

[demo@node139 ~]$ curl http://192.168.190.139:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"fbc336872210","ip":["10.0.1.6","10.0.1.6","10.0.1.6","172.18.0.4","172.18.0.4","172.18.0.4","10.255.0.9","10.255.0.9","10.255.0.9"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.139:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"24a1363d1a33","ip":["10.255.0.8","10.255.0.8","10.255.0.8","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.5","10.0.1.5","10.0.1.5"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.139:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"beb939249cfa","ip":["10.255.0.7","10.255.0.7","10.255.0.7","172.18.0.4","172.18.0.4","172.18.0.4","10.0.1.4","10.0.1.4","10.0.1.4"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.139:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"19a058bce9b4","ip":["10.255.0.6","10.255.0.6","10.255.0.6","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.3","10.0.1.3","10.0.1.3"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"beb939249cfa","ip":["10.255.0.7","10.255.0.7","10.255.0.7","172.18.0.4","172.18.0.4","172.18.0.4","10.0.1.4","10.0.1.4","10.0.1.4"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"24a1363d1a33","ip":["10.255.0.8","10.255.0.8","10.255.0.8","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.5","10.0.1.5","10.0.1.5"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"19a058bce9b4","ip":["10.255.0.6","10.255.0.6","10.255.0.6","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.3","10.0.1.3","10.0.1.3"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"fbc336872210","ip":["10.0.1.6","10.0.1.6","10.0.1.6","172.18.0.4","172.18.0.4","172.18.0.4","10.255.0.9","10.255.0.9","10.255.0.9"],"version":null}}
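In the responses above, each of the four requests per node is answered by a different container, whichever node address is used. To check the distribution over more requests without reading every line, the responses can be tallied by hostName. This is a minimal sketch that assumes the response format shown above and a hypothetical function name.

```shell
#!/bin/sh
# Read GetIp JSON responses (one per line) on stdin and print
# "<hostName> <count>" for each container that answered.
count_hosts() {
    sed -n 's/.*"hostName":"\([^"]*\)".*/\1/p' | sort | uniq -c | awk '{print $2, $1}'
}

# Usage against the service from this article (the extra echo adds the
# newline that the API response does not end with):
#   for i in 1 2 3 4 5 6 7 8; do
#       curl -s http://192.168.190.139:6000/SettingsManager/GetIp; echo
#   done | count_hosts
```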

Control the number of containers

[demo@node139 ~]$ docker service scale logapi=2

logapi scaled to 2

[demo@node139 ~]$ docker service ps logapi

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

b3gxlsmvtrsl81okyl3ww27lm logapi.1 logapi1_0 node139 Running Running 9 minutes ago

775g8phtr0i9p9xpou29pkk2v logapi.2 logapi1_0 node140 Shutdown Shutdown 9 seconds ago

6cihlua6w85vddnhuttuseu5i logapi.3 logapi1_0 node140 Running Running 9 minutes ago

b8i17ujd2zqyw7bigchwvfjfo logapi.4 logapi1_0 node139 Shutdown Shutdown 9 seconds ago
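The docker service ps listing can also be checked from a script, for instance to confirm that the number of tasks meant to run matches the requested scale. The following sketch assumes the column layout shown in this article's listings, where DESIRED STATE is the fifth column; the function name is illustrative.

```shell
#!/bin/sh
# Count tasks whose DESIRED STATE column is "Running" in `docker service ps`
# output read from stdin. History rows start their NAME column with "\_",
# which shifts every later column one position to the right.
count_desired_running() {
    awk '{ col = ($2 == "\\_") ? 6 : 5; if ($col == "Running") n++ } END { print n + 0 }'
}

# Usage: docker service ps logapi | count_desired_running
```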

Call the API 4 more times; only two container IPs appear, as expected

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"24a1363d1a33","ip":["10.255.0.8","10.255.0.8","10.255.0.8","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.5","10.0.1.5","10.0.1.5"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"19a058bce9b4","ip":["10.255.0.6","10.255.0.6","10.255.0.6","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.3","10.0.1.3","10.0.1.3"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"24a1363d1a33","ip":["10.255.0.8","10.255.0.8","10.255.0.8","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.5","10.0.1.5","10.0.1.5"],"version":null}}

[demo@node139 ~]$ curl http://192.168.190.140:6000/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"19a058bce9b4","ip":["10.255.0.6","10.255.0.6","10.255.0.6","172.18.0.3","172.18.0.3","172.18.0.3","10.0.1.3","10.0.1.3","10.0.1.3"],"version":null}}

Shut down one machine

Alternatively, drain the node: docker node update --availability drain node140

Node management reference: https://docker.github.io/engine/swarm/manage-nodes/

[demo@node139 ~]$ docker service ps logapi

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

b3gxlsmvtrsl81okyl3ww27lm logapi.1 logapi1_0 node139 Running Running 33 minutes ago

775g8phtr0i9p9xpou29pkk2v logapi.2 logapi1_0 node140 Shutdown Shutdown 24 minutes ago

13m72ukox3z12u5vahvzla99h logapi.3 logapi1_0 node139 Running Running 21 minutes ago

6cihlua6w85vddnhuttuseu5i \_ logapi.3 logapi1_0 node140 Shutdown Running 33 minutes ago

b8i17ujd2zqyw7bigchwvfjfo logapi.4 logapi1_0 node139 Shutdown Shutdown 24 minutes ago

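The output shows that both containers are now running on machine 139

After machine 140 is started again, the tasks are not moved back to it automatically; version 1.12 does not support this yet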

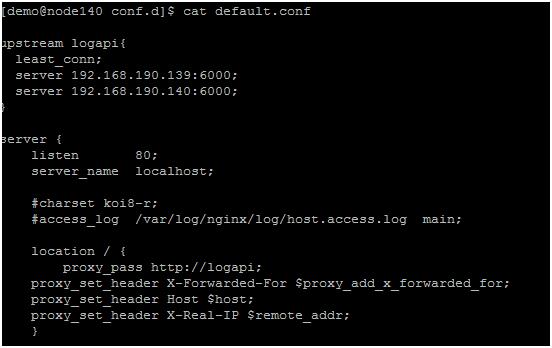

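Configure external access

Edit the nginx configuration so that nginx forwards requests to the service (the configuration file itself is not reproduced here), then reload nginx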

[demo@node140 conf.d]$ sudo nginx -s reload

[demo@node140 conf.d]$ sudo setenforce 0

[demo@node140 conf.d]$ curl http://192.168.190.140/SettingsManager/GetIp

{"status":1,"message":"","data":{"hostName":"562d8d89146e","ip":["10.255.0.7","10.255.0.7","10.255.0.7","172.18.0.6","172.18.0.6","172.18.0.6","10.0.1.4","10.0.1.4","10.0.1.4"],"version":null}}

Container upgrade

[demo@node140 YiChe.App.LogApi]$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

logapi2_0 latest 6ed5eef24908 6 seconds ago 269.4 MB

logapi1_0 latest db03033fc5a0 2 days ago 269.4 MB

[demo@node140 YiChe.App.LogApi]$ docker service ps logapi

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

b3gxlsmvtrsl81okyl3ww27lm logapi.1 logapi1_0 node139 Shutdown Shutdown about an hour ago

73ugfdrc1gtoveul5pixko27s logapi.2 logapi1_0 node140 Running Running about an hour ago

775g8phtr0i9p9xpou29pkk2v \_ logapi.2 logapi1_0 node140 Shutdown Shutdown 2 hours ago

13m72ukox3z12u5vahvzla99h logapi.3 logapi1_0 node139 Running Running 2 hours ago

6cihlua6w85vddnhuttuseu5i \_ logapi.3 logapi1_0 node140 Shutdown Failed about an hour ago "task: non-zero exit (

b8i17ujd2zqyw7bigchwvfjfo logapi.4 logapi1_0 node139 Shutdown Shutdown 2 hours ago

Perform the upgrade

Before upgrading, the new image must already be present on each node

[demo@node140 YiChe.App.LogApi]$ docker service update --image logapi2_0 logapi

logapi

[demo@node140 YiChe.App.LogApi]$ docker service ps logapi

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

b3gxlsmvtrsl81okyl3ww27lm logapi.1 logapi1_0 node139 Shutdown Shutdown about an hour ago

73ugfdrc1gtoveul5pixko27s logapi.2 logapi1_0 node140 Running Running about an hour ago

775g8phtr0i9p9xpou29pkk2v \_ logapi.2 logapi1_0 node140 Shutdown Shutdown 2 hours ago

atwtihzoja4tu78wptq2ohb7u logapi.3 logapi2_0 node140 Running Preparing 12 seconds ago

13m72ukox3z12u5vahvzla99h \_ logapi.3 logapi1_0 node139 Shutdown Shutdown 11 seconds ago

6cihlua6w85vddnhuttuseu5i \_ logapi.3 logapi1_0 node140 Shutdown Failed about an hour ago "task: non-zero exit (1)"

Only one task has been switched to the new image so far; the upgrade takes some time to complete
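The pace of a rolling upgrade can be set explicitly with the --update-parallelism and --update-delay flags of docker service update. As a hedged sketch, the helper below builds such a command; the function name and the default values (one task at a time, 10 seconds between batches) are assumptions for illustration, not settings taken from the run above.

```shell
#!/bin/sh
# Build a rolling-update command: replace `parallelism` tasks at a time and
# wait `delay` between batches.
rolling_update_command() {
    service="$1"
    image="$2"
    parallelism="${3:-1}"
    delay="${4:-10s}"
    echo "docker service update --image ${image} --update-parallelism ${parallelism} --update-delay ${delay} ${service}"
}

# Usage on a manager node:
#   rolling_update_command logapi logapi2_0        # print and review the command
#   $(rolling_update_command logapi logapi2_0)     # run it
#   docker service ps logapi                       # watch tasks move to the new image
```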

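Mounting directories with mount

--mount type=volume,src=<VOLUME-NAME>,dst=<CONTAINER-PATH>,volume-driver=<DRIVER>,volume-opt=<KEY0>=<VALUE0>,volume-opt=<KEY1>=<VALUE1>

target (or dst) is the path inside the container; source (or src) is, for a bind mount, the path on the host disk

To add a mounted directory to an existing service, use --mount-add; when creating a service, use the --mount parameter

docker service update logapi --mount-add type=bind,target=/var/log/,source=/var/log

View the service information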

[demo@node139 ~]$ docker service inspect logapi

[
    {
        "ID": "8nmmguchzhz34i6onuijdmy94",
        "Version": {
            "Index": 39
        },
        "CreatedAt": "2016-10-26T18:43:07.480086156Z",
        "UpdatedAt": "2016-10-26T18:43:07.485602124Z",
        "Spec": {
            "Name": "logapi",
            "TaskTemplate": {
                "ContainerSpec": {
                    "Image": "logapi1_0"
                },
                "Resources": {
                    "Limits": {},
                    "Reservations": {}
                },
                "RestartPolicy": {
                    "Condition": "any",
                    "MaxAttempts": 0
                },
                "Placement": {}
            },
            "Mode": {
                "Replicated": {
                    "Replicas": 4
                }
            },
            "UpdateConfig": {
                "Parallelism": 1,
                "FailureAction": "pause"
            },
            "Networks": [
                {
                    "Target": "8peggzi7rk1a06g079rtq048r"
                }
            ],
            "EndpointSpec": {
                "Mode": "vip"
            }
        },
        "Endpoint": {
            "Spec": {
                "Mode": "vip"
            },
            "VirtualIPs": [
                {
                    "NetworkID": "8peggzi7rk1a06g079rtq048r",
                    "Addr": "10.0.1.2/24"
                }
            ]
        },
        "UpdateStatus": {
            "StartedAt": "0001-01-01T00:00:00Z",
            "CompletedAt": "0001-01-01T00:00:00Z"
        }
    }
]
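When only the service's virtual IP is needed, the inspect output can be narrowed down instead of read in full. The sketch below pulls every "Addr" value out of JSON on stdin with sed; it assumes one key per line, as docker service inspect prints it, and the function name is illustrative. The --format option of docker service inspect, shown in the usage comment, is another way to get the same value.

```shell
#!/bin/sh
# Print the value of every "Addr" key found in pretty-printed JSON on stdin.
extract_vips() {
    sed -n 's/.*"Addr": *"\([^"]*\)".*/\1/p'
}

# Usage on a manager node:
#   docker service inspect logapi | extract_vips
# or, using a Go template instead of text processing:
#   docker service inspect --format '{{range .Endpoint.VirtualIPs}}{{.Addr}} {{end}}' logapi
```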

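First create another service that is attached to the same mynet overlay network

[demo@node139 ~]$ docker service create --name my-busybox --network mynet busybox sleep 3000

194l7kv87mffdn4nh1db1mcia

Once the service has started, use docker exec to open a shell inside the busybox container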

[demo@node139 ~]$ docker service ps my-busybox

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

csk8n7jaxbd91mft20wngcmke my-busybox.1 busybox node139 Running Running about a minute ago

[demo@node139 ~]$ docker ps -a | grep my-busybox

23b3023dbbbe busybox:latest "sleep 3000" About a minute ago Up About a minute my-busybox.1.csk8n7jaxbd91mft20wngcmke

[demo@node139 ~]$ docker exec -it 23b3023dbbbe sh

/ # nslookup logapi

Server: 127.0.0.11

Address 1: 127.0.0.11

Name: logapi

Address 1: 10.0.1.2

/ # nslookup tasks.logapi

Server: 127.0.0.11

Address 1: 127.0.0.11

Name: tasks.logapi

Address 1: 10.0.1.5 logapi.2.amnpfq3m3cfuric8obztn17zt.mynet

Address 2: 10.0.1.6 logapi.3.1qicmzulf9fbf4lpvcns5xno0.mynet

Address 3: 10.0.1.4 logapi.1.5vqhxhee0jfqq45lsmc1w0lw8.mynet

Address 4: 10.0.1.3 logapi.4.9fxx2jswq4zkp25uk9rno1cq2.mynet

Pinging the virtual IP and each of the service's 4 task IPs succeeds

/ # ping 10.0.1.2

PING 10.0.1.2 (10.0.1.2): 56 data bytes

64 bytes from 10.0.1.2: seq=0 ttl=64 time=1.734 ms
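As the lookups above show, the service name resolves to the single virtual IP (10.0.1.2), while tasks.logapi resolves to the address of each task. To feed the task addresses into another command, the nslookup output can be reduced to a plain list. This is a minimal sketch that assumes the busybox nslookup output format shown above; the function name is hypothetical.

```shell
#!/bin/sh
# Read busybox nslookup output on stdin and print the resolved addresses,
# ignoring the "Address" line that belongs to the DNS server itself.
task_ips() {
    awk '/^Name:/ { seen = 1; next } seen && /^Address/ { print $3 }'
}

# Usage from the host, with the busybox container ID from docker ps:
#   docker exec 23b3023dbbbe nslookup tasks.logapi | task_ips
```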
