Ceph Distributed Cluster File System Testing


1. Testing with the Ceph rados bench tool

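rados bench is Ceph's built-in benchmarking tool. It measures performance at the storage-pool level of a Ceph cluster and supports write, sequential-read, and random-read benchmarks.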

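rados bench command syntax:

```
rados bench -p <pool-name> <seconds> <write|seq|rand> -b <block-size> -t <concurrent-ops> --no-cleanup
```

`-p`: name of the storage pool

`<seconds>`: test duration, in seconds

`<write|seq|rand>`: write, sequential read, or random read

`-b`: block size in bytes for write tests; the default is 4 MB (4194304 bytes), which is the "Write size" shown in the output below

`-t`: number of concurrent operations (threads); the default is 16

`--no-cleanup`: keep the data written during the test in the pool (by default it is deleted). The `seq` and `rand` tests read back these objects, so run a `write` test with `--no-cleanup` first.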
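The order of the tests matters: run write first, then seq, then rand, because the read tests consume the objects left behind by `--no-cleanup`. The wrapper below is a minimal sketch that runs the three modes in that order and then removes the benchmark objects with `rados -p <pool> cleanup`. The function name `bench_pool`, its argument order, and the `RADOS` override are illustrative assumptions, not part of the original article. Setting `RADOS=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): run rados bench write, seq and rand against one pool.
# Usage: bench_pool <pool> <seconds> [block-size-bytes] [concurrent-ops]
# Set RADOS=echo to print the commands instead of executing them.
RADOS=${RADOS:-rados}

bench_pool() {
  local pool=$1 secs=$2 bs=${3:-4194304} threads=${4:-16}
  # 1. write test; --no-cleanup keeps the objects for the read tests
  "$RADOS" bench -p "$pool" "$secs" write -b "$bs" -t "$threads" --no-cleanup || return
  # 2. sequential read of the objects written in step 1
  "$RADOS" bench -p "$pool" "$secs" seq -t "$threads" || return
  # 3. random read of the same objects
  "$RADOS" bench -p "$pool" "$secs" rand -t "$threads" || return
  # 4. delete the benchmark objects that --no-cleanup left in the pool
  "$RADOS" -p "$pool" cleanup
}
```

For example, `bench_pool pool1 60` runs each test for 60 seconds with 4 MB blocks and 16 concurrent operations.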

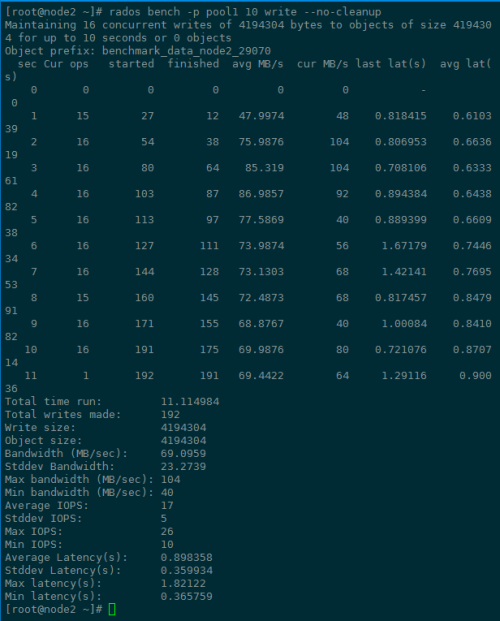

Run a 1000-second write test on pool1 without cleaning up the written data:

```
# rados bench -p pool1 1000 write --no-cleanup
Total time run: 1003.149409
Total writes made: 6627
Write size: 4194304
Object size: 4194304
Bandwidth (MB/sec): 26.4248
Stddev Bandwidth: 13.4511
Max bandwidth (MB/sec): 116
Min bandwidth (MB/sec): 0
Average IOPS: 6
Stddev IOPS: 3
Max IOPS: 29
Min IOPS: 0
Average Latency(s): 2.42078
Stddev Latency(s): 0.951884
Max latency(s): 6.59119
Min latency(s): 0.119255
```
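As a sanity check, the reported bandwidth can be recomputed from the summary: 6627 writes × 4194304 bytes ÷ 1003.149409 s ÷ 1048576 ≈ 26.4248 MB/s. This indicates that the "MB" used by rados bench here is 2^20 bytes. The function below is a minimal sketch of that arithmetic. The name `bench_bw` is hypothetical and is not part of Ceph.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Ceph): recompute rados bench bandwidth from the
# summary fields "Total ... made", "Object size" and "Total time run".
# Bandwidth is printed in MB/s with MB = 1048576 bytes.
bench_bw() {
  local ops=$1 obj_bytes=$2 secs=$3
  awk -v n="$ops" -v b="$obj_bytes" -v t="$secs" \
    'BEGIN { printf "%.4f\n", n * b / t / 1048576 }'
}
```

`bench_bw 6627 4194304 1003.149409` reproduces the 26.4248 MB/s above. The same call with the seq run's 125.494596 s gives 211.2282, against the 211.228 reported below.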

Check the cluster's space usage with `ceph df`:

```
# ceph df
GLOBAL:
    SIZE       AVAIL      RAW USED     %RAW USED
    40966G     40555G     411G         1.00
POOLS:
    NAME      ID     USED       %USED     MAX AVAIL     OBJECTS
    rbd       0      6168M      0.03      19357G        1543
    pool1     1      89872M     0.43      19357G        22469
    pool2     2      111G       0.54      19357G        28510
```
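Dividing each pool's USED by its OBJECTS gives roughly 4 MB per object. For pool1, 89872 MB ÷ 22469 ≈ 4.00 MB, which is consistent with the 4 MB objects that rados bench writes by default. The function below is a minimal sketch of that calculation. `avg_object_size` is a hypothetical helper. It assumes the Jewel-era POOLS layout shown above (NAME ID USED %USED MAX AVAIL OBJECTS) with binary K/M/G/T suffixes. Newer releases print different columns.

```shell
#!/usr/bin/env bash
# Hypothetical helper: average object size per pool from `ceph df` output on stdin.
# Assumes the Jewel-era POOLS row layout: NAME ID USED %USED MAX-AVAIL OBJECTS,
# with binary suffixes (K/M/G/T). Prints "<pool> <avg size> MB".
avg_object_size() {
  awk '
    function to_mb(v,    n, u) {
      n = v + 0
      u = substr(v, length(v))
      if (u == "K") return n / 1024
      if (u == "M") return n
      if (u == "G") return n * 1024
      if (u == "T") return n * 1024 * 1024
      return n / 1048576            # no suffix: plain bytes
    }
    # pool rows have 6 fields and a numeric pool ID in column 2
    NF == 6 && $2 ~ /^[0-9]+$/ && $6 > 0 {
      printf "%s %.2f MB\n", $1, to_mb($3) / $6
    }'
}
```

For example, `ceph df | avg_object_size` prints one line per pool, such as `pool1 4.00 MB`.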

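Run a 300-second sequential read test on pool1. The test ends after about 125 s because `seq` reads back the 6627 objects left by the write test above and stops once all of them have been read. This is why "Total reads made" equals "Total writes made":

```
# rados bench -p pool1 300 seq
Total time run: 125.494596
Total reads made: 6627
Read size: 4194304
Object size: 4194304
Bandwidth (MB/sec): 211.228
Average IOPS: 52
Stddev IOPS: 8
Max IOPS: 73
Min IOPS: 29
Average Latency(s): 0.301849
Max latency(s): 2.17986
Min latency(s): 0.0179132
```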
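rados bench keeps a fixed number of operations in flight (16 by default, set with `-t`). Average latency should therefore be close to concurrency ÷ IOPS (Little's law):

- For the seq run, 16 × 125.494596 s ÷ 6627 reads ≈ 0.303 s, against a reported average latency of 0.301849 s.
- For the write run, the same arithmetic gives ≈ 2.422 s, against 2.42078 s.

The function below is a sketch of this check. The name `expected_latency` is hypothetical. It assumes 16 concurrent operations unless told otherwise.

```shell
#!/usr/bin/env bash
# Hypothetical helper: Little's law estimate of average latency for a rados bench run,
# latency ~= concurrency * elapsed_time / completed_ops.
# $1 = completed ops, $2 = total time run (s), $3 = concurrency (default 16, as with -t).
expected_latency() {
  local ops=$1 secs=$2 conc=${3:-16}
  awk -v n="$ops" -v t="$secs" -v c="$conc" \
    'BEGIN { printf "%.3f\n", c * t / n }'
}
```

A measured latency well below this estimate would suggest that fewer than the configured number of operations were actually in flight.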

Run a 10-minute (600 s) random read test; the command and output below are for the rbd pool. Unlike `seq`, a `rand` test keeps picking objects at random until the time is up, so it runs for the full 600 s:

```
# rados bench -p rbd 600 rand
2016-09-22 22:19:41.815759 min lat: 0.0178813 max lat: 2.69039 avg lat: 0.33182
sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)
600 16 28860 28844 192.274 192 0.511941 0.33182
Total time run: 600.544174
Total reads made: 28860
Read size: 4194304
Object size: 4194304
Bandwidth (MB/sec): 192.226
Average IOPS: 48
Stddev IOPS: 7
Max IOPS: 77
Min IOPS: 19
Average Latency(s): 0.332086
Max latency(s): 2.69039
Min latency(s): 0.0178813
```

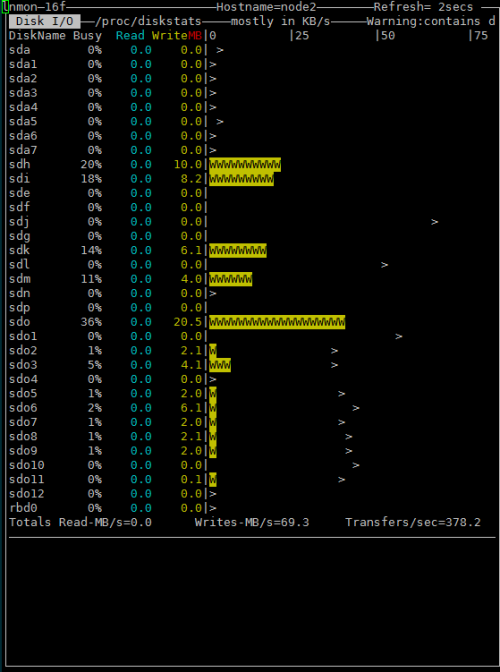

Use nmon to view disk read/write activity:

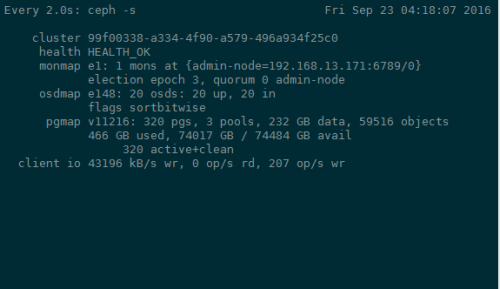

Use `watch ceph -s` to view the cluster's read/write activity.

2. The rados load-gen tool

rados load-gen generates load on a Ceph cluster. It can also be used to simulate tests under high load.

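The parameters are as follows:

`rados -p rbd load-gen`: run load-gen against the rbd pool

`--num-objects 50`: total number of objects

`--min-objects-size 4M`: minimum object size

`--max-objects-size 4M`: maximum object size

`--max-ops 16`: maximum number of operations

`--min-ops`: minimum number of operations (no value is given, and it is not used in the script below)

`--min-op-len 4M`: minimum length of an operation

`--max-op-len 4M`: maximum length of an operation

`--percent 5`: percentage of read operations in the read/write mix

`--target-throughput 2000`: target throughput, in MB

`--run-length 60`: run time, in seconds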

```
# cat rados-load-gen.sh
#!/bin/bash
rados -p rbd load-gen \
    --num-objects 50 \
    --min-objects-size 4M \
    --max-objects-size 4M \
    --max-ops 16 \
    --min-op-len 4M \
    --max-op-len 4M \
    --percent 5 \
    --target-throughput 2000 \
    --run-length 60
```

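The command generates load by writing 50 objects to the rbd pool. Each object is 4 MB, reads make up 5% of the operations, and the total run time is 60 seconds.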

Test run:

```
# sh rados-load-gen.sh
57: throughput=55.6MB/sec pending data=8388608
READ : oid=obj-pR0IiIQUAhoG2vr off=539347588 len=4194304
op 829 completed, throughput=55.6MB/sec
READ : oid=obj-QJuP_GXN91TZANt off=894509182 len=4194304
op 831 completed, throughput=55.5MB/sec
READ : oid=obj-FSNdJlg0iLzWeR7 off=1550916982 len=4194304
op 832 completed, throughput=55.6MB/sec
READ : oid=obj-pqhOi8w3ChbnMSF off=302850550 len=4194304
op 833 completed, throughput=55.5MB/sec
waiting for all operations to complete
op 834 completed, throughput=55.5MB/sec
cleaning up objects
op 773 completed, throughput=29.9MB/sec
```
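The READ offsets in the output above (for example off=1550916982) are far beyond 4 MB. That should not happen if every object were 4 MB, so the object-size options may not have been applied as intended. The rados usage text spells these options `--min-object-size` and `--max-object-size`, so the spelling is worth double-checking with `rados --help` on your version.

The function below is a minimal sketch for checking a saved load-gen log. `loadgen_max_extent` is a hypothetical helper that prints the largest off+len value seen on READ/WRITE lines. It assumes the `off=`/`len=` format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: largest byte offset touched (off + len) in rados load-gen
# output read from stdin. With 4 MB objects the result should be <= 4194304.
loadgen_max_extent() {
  awk '$1 == "READ" || $1 == "WRITE" {
         off = 0; len = 0
         for (i = 2; i <= NF; i++) {
           if ($i ~ /^off=/) off = substr($i, 5) + 0
           if ($i ~ /^len=/) len = substr($i, 5) + 0
         }
         if (off + len > max) max = off + len
       }
       END { printf "%.0f\n", max + 0 }'
}
```

For example: `sh rados-load-gen.sh | tee load-gen.log`, then `loadgen_max_extent < load-gen.log`.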

3. Ceph block device benchmarks

```
# cat rdb-bench-write.sh
#!/bin/bash
# 1. Create a Ceph block device named block-device, 10 GB in size (--size is in MB), and map it:
rbd create block-device --size 10240
rbd info --image block-device
DEV=$(rbd map block-device)    # rbd map prints the mapped device, e.g. /dev/rbd0
rbd showmapped
# 2. Create a file system on the block device and mount it:
mkfs.xfs "$DEV"
mkdir -p /mnt/ceph-block-device
mount "$DEV" /mnt/ceph-block-device
df -h /mnt/ceph-block-device
# 3. Run a 5 GB write benchmark against block-device:
rbd bench-write block-device --io-total 5368709200
```

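rbd bench-write syntax:

```
rbd bench-write <image-name> [--io-size <bytes>] [--io-threads <n>] [--io-total <bytes>] [--io-pattern <seq|rand>]
```

`--io-size`: size of each write in bytes; the default is 4096 bytes (4 KB)

`--io-threads`: number of threads; the default is 16

`--io-total`: total number of bytes to write; the default is 1024 MB

`--io-pattern <seq|rand>`: write pattern; the default is sequential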
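A minimal wrapper can pass every option listed above, which makes the defaults explicit and lets you switch patterns without retyping the command. `rbd_write_bench`, its positional arguments, and the `RBD` override are illustrative assumptions. Setting `RBD=echo` prints the command instead of running it. Newer Ceph releases also offer `rbd bench --io-type write` for the same purpose.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): run rbd bench-write with every option spelled out.
# Usage: rbd_write_bench <image> [seq|rand] [io-size-bytes] [io-threads] [io-total-bytes]
# Defaults mirror rbd bench-write's own: 4096-byte writes, 16 threads, 1 GiB total, seq.
RBD=${RBD:-rbd}

rbd_write_bench() {
  local image=$1 pattern=${2:-seq} io_size=${3:-4096} threads=${4:-16} total=${5:-1073741824}
  case $pattern in
    seq|rand) ;;
    *) echo "io pattern must be seq or rand, got: $pattern" >&2; return 2 ;;
  esac
  "$RBD" bench-write "$image" --io-size "$io_size" --io-threads "$threads" \
    --io-total "$total" --io-pattern "$pattern"
}
```

For example, `rbd_write_bench block-device1 rand 4096 16 5368709200` would repeat the random-write run shown below.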

Create a Ceph block device for the manual steps, a 1 GB image named block-device1:

```
# rbd create block-device1 --size 1G --image-format 1
rbd: image format 1 is deprecated
# rbd info --image block-device1
rbd image 'block-device1':
size 1024 MB in 256 objects
order 22 (4096 kB objects)
block_name_prefix: rb.0.19d5.238e1f29
format: 1
# rbd map block-device1
/dev/rbd0
# rbd showmapped
id pool image snap device
0 rbd block-device1 - /dev/rbd0
```

Create a file system on the block device and mount it:

```
# mkfs.xfs /dev/rbd0
meta-data=/dev/rbd0 isize=256 agcount=9, agsize=31744 blks
= sectsz=512 attr=2, projid32bit=1
= crc=0 finobt=0
data = bsize=4096 blocks=262144, imaxpct=25
= sunit=1024 swidth=1024 blks
naming =version 2 bsize=4096 ascii-ci=0 ftype=0
log =internal log bsize=4096 blocks=2560, version=2
= sectsz=512 sunit=8 blks, lazy-count=1
realtime =none extsz=4096 blocks=0, rtextents=0
# mkdir -p /mnt/ceph-block-device1
# mount /dev/rbd0 /mnt/ceph-block-device1
# df -h /mnt/ceph-block-device1
Filesystem Size Used Avail Use% Mounted on
/dev/rbd0 1014M 33M 982M 4% /mnt/ceph-block-device1
```

Run a 5 GB write benchmark against block-device1, once with `--io-pattern rand` (random writes) and once with `--io-pattern seq` (sequential writes):

```
# rbd bench-write block-device1 --io-total 5368709200 --io-pattern rand
elapsed: 488 ops: 1310721 ops/sec: 2681.48 bytes/sec: 10983362.38
# rbd bench-write block-device1 --io-total 5368709200 --io-pattern seq
elapsed: 170 ops: 1310721 ops/sec: 7701.13 bytes/sec: 31543830.94
```
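These numbers also reveal the write size that was actually used:

- bytes/sec ÷ ops/sec ≈ 4096 in both runs.
- 5368709200 bytes ÷ 4096, rounded up, is the 1310721 ops reported.

So these runs used the default 4 KB writes. Converting bytes/sec to MB/s (2^20 bytes) gives about 10.47 MB/s for random writes and 30.08 MB/s for sequential writes. The function below is a small sketch of this arithmetic. `rbd_bench_summary` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the per-op size and MB/s (MB = 1048576 bytes)
# from the ops/sec and bytes/sec fields of an rbd bench-write summary line.
rbd_bench_summary() {
  local ops_per_sec=$1 bytes_per_sec=$2
  awk -v o="$ops_per_sec" -v b="$bytes_per_sec" 'BEGIN {
    printf "io size ~ %.0f bytes, throughput %.2f MB/s\n", b / o, b / 1048576
  }'
}
```

For example, `rbd_bench_summary 2681.48 10983362.38` summarizes the random-write run.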

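4. Benchmarking Ceph RBD with fio

Test method:

First create a block device and map it on the Ceph client node; block-device1, created in the previous test, is reused here. Strictly speaking, fio's rbd engine accesses the image through librbd, so the kernel mapping is not required for these tests. Also note that the write tests overwrite the image, including the XFS file system created on it earlier.

Install fio with `yum install fio`. fio supports an RBD I/O engine, which only needs the RBD image name, the pool, and the user name used to connect to the Ceph cluster. Create the following fio job file; `rw=write` runs a sequential write test: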

```
# vim write.fio
[write-4M]
description="write test with block size of 4M"
ioengine=rbd
clientname=admin
pool=rbd
rbdname=block-device1
iodepth=32
runtime=120
rw=write
bs=4M
```
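The same write.fio is edited for each test below, so the read jobs still carry the name write-4M. One option is to generate a separate job file per access pattern. The function below is a minimal sketch using a shell here-document. `make_fio_job` is a hypothetical helper, and the job options are exactly those of the file above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print an fio job file for one access pattern.
# Usage: make_fio_job <rw> [bs] [rbd-image] [pool] > <rw>.fio
make_fio_job() {
  local rw=$1 bs=${2:-4M} image=${3:-block-device1} pool=${4:-rbd}
  # job name and description follow the access pattern instead of always saying "write"
  cat <<EOF
[${rw}-${bs}]
description="${rw} test with block size of ${bs}"
ioengine=rbd
clientname=admin
pool=${pool}
rbdname=${image}
iodepth=32
runtime=120
rw=${rw}
bs=${bs}
EOF
}
```

For example, `for rw in write read randwrite randread; do make_fio_job "$rw" > "$rw.fio" && fio "$rw.fio"; done` runs all four tests. Each job's output is then labeled with its own pattern, e.g. `randread-4M`.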


```
# fio write.fio
write-4M: (g=0): rw=write, bs=4M-4M/4M-4M/4M-4M, ioengine=rbd, iodepth=32
fio-2.3
time 15258 cycles_start=124103204639059
Starting 1 process
rbd engine: RBD version: 0.1.10
Jobs: 1 (f=1): [W(1)] [21.1% done] [0KB/98304KB/0KB /s] [0/24/0 iops] [eta 00m:15s]
Jobs: 1 (f=1): [W(1)] [29.4% done] [0KB/90112KB/0KB /s] [0/22/0 iops] [eta 00m:12s]
Jobs: 1 (f=1): [W(1)] [37.5% done] [0KB/77824KB/0KB /s] [0/19/0 iops] [eta 00m:10s]
Jobs: 1 (f=1): [W(1)] [46.7% done] [0KB/98304KB/0KB /s] [0/24/0 iops] [eta 00m:08s]
Jobs: 1 (f=1): [W(1)] [47.1% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:09s]
Jobs: 1 (f=1): [W(1)] [56.2% done] [0KB/98304KB/0KB /s] [0/24/0 iops] [eta 00m:07s]
Jobs: 1 (f=1): [W(1)] [66.7% done] [0KB/104.0MB/0KB /s] [0/26/0 iops] [eta 00m:05s]
Jobs: 1 (f=1): [W(1)] [68.8% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:05s]
Jobs: 1 (f=1): [W(1)] [80.0% done] [0KB/128.0MB/0KB /s] [0/32/0 iops] [eta 00m:03s]
Jobs: 1 (f=1): [W(1)] [81.2% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:03s]
Jobs: 1 (f=1): [W(1)] [93.8% done] [0KB/112.0MB/0KB /s] [0/28/0 iops] [eta 00m:01s]
Jobs: 1 (f=1): [W(1)] [94.1% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:01s]
write-4M: (groupid=0, jobs=1): err= 0: pid=15285: Fri Sep 23 02:58:55 2016
Description : ["write test with block size of 4M"]
write: io=1024.0MB, bw=64365KB/s, iops=15, runt= 16291msec
slat (usec): min=70, max=527, avg=239.13, stdev=86.36
clat (msec): min=953, max=4987, avg=2000.13, stdev=806.32
lat (msec): min=953, max=4987, avg=2000.37, stdev=806.32
clat percentiles (msec):
| 1.00th=[ 955], 5.00th=[ 963], 10.00th=[ 1139], 20.00th=[ 1188],
| 30.00th=[ 1483], 40.00th=[ 1827], 50.00th=[ 1827], 60.00th=[ 2008],
| 70.00th=[ 2114], 80.00th=[ 2868], 90.00th=[ 3195], 95.00th=[ 3523],
| 99.00th=[ 5014], 99.50th=[ 5014], 99.90th=[ 5014], 99.95th=[ 5014],
| 99.99th=[ 5014]
bw (KB /s): min= 2773, max=103290, per=98.03%, avg=63095.40, stdev=30372.18
lat (msec) : 1000=8.98%, 2000=44.92%, >=2000=46.09%
cpu : usr=0.38%, sys=0.00%, ctx=14, majf=0, minf=0
IO depths : 1=0.8%, 2=1.6%, 4=5.9%, 8=26.6%, 16=61.3%, 32=3.9%, >=64=0.0%
submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
complete : 0=0.0%, 4=96.3%, 8=0.0%, 16=0.0%, 32=3.7%, 64=0.0%, >=64=0.0%
issued : total=r=0/w=256/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
latency : target=0, window=0, percentile=100.00%, depth=32
Run status group 0 (all jobs):
WRITE: io=1024.0MB, aggrb=64365KB/s, minb=64365KB/s, maxb=64365KB/s, mint=16291msec, maxt=16291msec
Disk stats (read/write):
sda: ios=0/6, merge=0/0, ticks=0/100, in_queue=100, util=0.31%
```
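The summary numbers are consistent with each other. Writing 1024 MB in 16291 ms is 1048576 KB ÷ 16.291 s ≈ 64365 KB/s (about 62.9 MB/s), which is the aggrb value reported. The run took about 16 s rather than the configured runtime=120. Without `time_based=1`, fio stops once it has covered the whole 1 GB image.

The function below is a sketch of the bandwidth arithmetic. `fio_aggrb_kbs` is a hypothetical helper, and it truncates to an integer as this fio output appears to.

```shell
#!/usr/bin/env bash
# Hypothetical helper: recompute fio's aggregate bandwidth (KB/s, KB = 1024 bytes)
# from total I/O in MB and run time in ms, truncated to an integer.
fio_aggrb_kbs() {
  local io_mb=$1 runtime_ms=$2
  awk -v mb="$io_mb" -v ms="$runtime_ms" 'BEGIN { print int(mb * 1024 * 1000 / ms) }'
}
```

The same call reproduces the aggrb values of the later runs:

- `fio_aggrb_kbs 1024 5400` gives 194180 for the random-read job.
- `fio_aggrb_kbs 1024 16522` gives 63465 for the random-write job.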

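`rw=read` runs a sequential read test. Only the rw line in write.fio is changed, so the job is still named write-4M and its description still says "write test":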

```
# vim write.fio
[write-4M]
description="write test with block size of 4M"
ioengine=rbd
clientname=admin
pool=rbd
rbdname=block-device1
iodepth=32
runtime=120
rw=read
bs=4M
```

```
# fio write.fio
write-4M: (g=0): rw=read, bs=4M-4M/4M-4M/4M-4M, ioengine=rbd, iodepth=32
fio-2.3
time 15383 cycles_start=124168557431260
Starting 1 process
rbd engine: RBD version: 0.1.10
Jobs: 1 (f=1): [R(1)] [50.0% done] [124.0MB/0KB/0KB /s] [31/0/0 iops] [eta 00m:03s]
Jobs: 1 (f=1): [R(1)] [66.7% done] [224.0MB/0KB/0KB /s] [56/0/0 iops] [eta 00m:02s]
Jobs: 1 (f=1): [R(1)] [100.0% done] [216.0MB/0KB/0KB /s] [54/0/0 iops] [eta 00m:00s]
write-4M: (groupid=0, jobs=1): err= 0: pid=15410: Fri Sep 23 03:00:52 2016
Description : ["write test with block size of 4M"]
read : io=1024.0MB, bw=198745KB/s, iops=48, runt= 5276msec
slat (usec): min=3, max=17, avg= 4.19, stdev= 2.61
clat (msec): min=162, max=1339, avg=640.89, stdev=201.60
lat (msec): min=162, max=1339, avg=640.90, stdev=201.60
clat percentiles (msec):
| 1.00th=[ 163], 5.00th=[ 412], 10.00th=[ 449], 20.00th=[ 465],
| 30.00th=[ 494], 40.00th=[ 586], 50.00th=[ 660], 60.00th=[ 676],
| 70.00th=[ 685], 80.00th=[ 693], 90.00th=[ 955], 95.00th=[ 1090],
| 99.00th=[ 1270], 99.50th=[ 1270], 99.90th=[ 1336], 99.95th=[ 1336],
| 99.99th=[ 1336]
bw (KB /s): min= 5927, max=244017, per=86.44%, avg=171799.00, stdev=83676.59
lat (msec) : 250=1.17%, 500=33.20%, 750=51.95%, 1000=7.42%, 2000=6.25%
cpu : usr=0.06%, sys=0.00%, ctx=18, majf=0, minf=0
IO depths : 1=0.8%, 2=3.1%, 4=8.2%, 8=28.1%, 16=56.2%, 32=3.5%, >=64=0.0%
submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
complete : 0=0.0%, 4=96.6%, 8=0.0%, 16=0.0%, 32=3.4%, 64=0.0%, >=64=0.0%
issued : total=r=256/w=0/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
latency : target=0, window=0, percentile=100.00%, depth=32
Run status group 0 (all jobs):
READ: io=1024.0MB, aggrb=198744KB/s, minb=198744KB/s, maxb=198744KB/s, mint=5276msec, maxt=5276msec
Disk stats (read/write):
sda: ios=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%
```

`rw=randread` runs a random read test:

```
# cat write.fio
[write-4M]
description="write test with block size of 4M"
ioengine=rbd
clientname=admin
pool=rbd
rbdname=block-device1
iodepth=32
runtime=120
rw=randread
bs=4M
```

```
# fio write.fio
write-4M: (g=0): rw=randread, bs=4M-4M/4M-4M/4M-4M, ioengine=rbd, iodepth=32
fio-2.3
time 16722 cycles_start=124259845112132
Starting 1 process
rbd engine: RBD version: 0.1.10
Jobs: 1 (f=1): [r(1)] [50.0% done] [120.0MB/0KB/0KB /s] [30/0/0 iops] [eta 00m:03s]
Jobs: 1 (f=1): [r(1)] [66.7% done] [128.0MB/0KB/0KB /s] [32/0/0 iops] [eta 00m:02s]
Jobs: 1 (f=1): [r(1)] [100.0% done] [248.0MB/0KB/0KB /s] [62/0/0 iops] [eta 00m:00s]
write-4M: (groupid=0, jobs=1): err= 0: pid=16749: Fri Sep 23 03:03:50 2016
Description : ["write test with block size of 4M"]
read : io=1024.0MB, bw=194181KB/s, iops=47, runt= 5400msec
slat (usec): min=3, max=20, avg= 4.32, stdev= 2.58
clat (msec): min=359, max=1491, avg=650.89, stdev=174.32
lat (msec): min=359, max=1491, avg=650.90, stdev=174.32
clat percentiles (msec):
| 1.00th=[ 359], 5.00th=[ 375], 10.00th=[ 375], 20.00th=[ 490],
| 30.00th=[ 611], 40.00th=[ 627], 50.00th=[ 685], 60.00th=[ 734],
| 70.00th=[ 734], 80.00th=[ 758], 90.00th=[ 766], 95.00th=[ 766],
| 99.00th=[ 1450], 99.50th=[ 1500], 99.90th=[ 1500], 99.95th=[ 1500],
| 99.99th=[ 1500]
bw (KB /s): min=102767, max=318950, per=98.17%, avg=190635.00, stdev=65800.97
lat (msec) : 500=23.83%, 750=48.83%, 1000=23.83%, 2000=3.52%
cpu : usr=0.06%, sys=0.00%, ctx=15, majf=0, minf=0
IO depths : 1=1.6%, 2=5.1%, 4=12.1%, 8=28.1%, 16=50.0%, 32=3.1%, >=64=0.0%
submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
complete : 0=0.0%, 4=96.6%, 8=0.0%, 16=0.4%, 32=3.0%, 64=0.0%, >=64=0.0%
issued : total=r=256/w=0/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
latency : target=0, window=0, percentile=100.00%, depth=32
Run status group 0 (all jobs):
READ: io=1024.0MB, aggrb=194180KB/s, minb=194180KB/s, maxb=194180KB/s, mint=5400msec, maxt=5400msec
Disk stats (read/write):
sda: ios=0/2, merge=0/0, ticks=0/20, in_queue=20, util=0.37%
```

`rw=randwrite` runs a random write test:

```
# cat write.fio
[write-4M]
description="write test with block size of 4M"
ioengine=rbd
clientname=admin
pool=rbd
rbdname=block-device1
iodepth=32
runtime=120
rw=randwrite
bs=4M
```

```
# fio write.fio
write-4M: (g=0): rw=randwrite, bs=4M-4M/4M-4M/4M-4M, ioengine=rbd, iodepth=32
fio-2.3
time 16834 cycles_start=124288020352425
Starting 1 process
rbd engine: RBD version: 0.1.10
Jobs: 1 (f=1): [w(1)] [21.1% done] [0KB/116.0MB/0KB /s] [0/29/0 iops] [eta 00m:15s]
Jobs: 1 (f=1): [w(1)] [29.4% done] [0KB/86016KB/0KB /s] [0/21/0 iops] [eta 00m:12s]
Jobs: 1 (f=1): [w(1)] [42.9% done] [0KB/124.0MB/0KB /s] [0/31/0 iops] [eta 00m:08s]
Jobs: 1 (f=1): [w(1)] [41.2% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:10s]
Jobs: 1 (f=1): [w(1)] [57.1% done] [0KB/128.0MB/0KB /s] [0/32/0 iops] [eta 00m:06s]
Jobs: 1 (f=1): [w(1)] [56.2% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:07s]
Jobs: 1 (f=1): [w(1)] [66.7% done] [0KB/128.0MB/0KB /s] [0/32/0 iops] [eta 00m:05s]
Jobs: 1 (f=1): [w(1)] [68.8% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:05s]
Jobs: 1 (f=1): [w(1)] [81.2% done] [0KB/128.0MB/0KB /s] [0/32/0 iops] [eta 00m:03s]
Jobs: 1 (f=1): [w(1)] [82.4% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:03s]
Jobs: 1 (f=1): [w(1)] [88.9% done] [0KB/104.0MB/0KB /s] [0/26/0 iops] [eta 00m:02s]
Jobs: 1 (f=1): [w(1)] [89.5% done] [0KB/0KB/0KB /s] [0/0/0 iops] [eta 00m:02s]
write-4M: (groupid=0, jobs=1): err= 0: pid=16861: Fri Sep 23 03:04:57 2016
Description : ["write test with block size of 4M"]
write: io=1024.0MB, bw=63465KB/s, iops=15, runt= 16522msec
slat (usec): min=89, max=508, avg=236.04, stdev=85.71
clat (msec): min=1059, max=4915, avg=2038.61, stdev=762.83
lat (msec): min=1060, max=4916, avg=2038.84, stdev=762.83
clat percentiles (msec):
| 1.00th=[ 1057], 5.00th=[ 1057], 10.00th=[ 1074], 20.00th=[ 1254],
| 30.00th=[ 1270], 40.00th=[ 1975], 50.00th=[ 2008], 60.00th=[ 2343],
| 70.00th=[ 2442], 80.00th=[ 2474], 90.00th=[ 2933], 95.00th=[ 2933],
| 99.00th=[ 4883], 99.50th=[ 4948], 99.90th=[ 4948], 99.95th=[ 4948],
| 99.99th=[ 4948]
bw (KB /s): min= 3202, max=110909, per=95.10%, avg=60356.44, stdev=30229.78
lat (msec) : 2000=41.41%, >=2000=58.59%
cpu : usr=0.38%, sys=0.00%, ctx=11, majf=0, minf=0
IO depths : 1=0.8%, 2=3.1%, 4=9.8%, 8=26.2%, 16=55.9%, 32=4.3%, >=64=0.0%
submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
complete : 0=0.0%, 4=96.6%, 8=0.0%, 16=0.0%, 32=3.4%, 64=0.0%, >=64=0.0%
issued : total=r=0/w=256/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
latency : target=0, window=0, percentile=100.00%, depth=32
Run status group 0 (all jobs):
WRITE: io=1024.0MB, aggrb=63465KB/s, minb=63465KB/s, maxb=63465KB/s, mint=16522msec, maxt=16522msec
Disk stats (read/write):
sda: ios=0/3, merge=0/0, ticks=0/20, in_queue=20, util=0.12%
```

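5. Single-disk performance test

Write performance of a single OSD disk. First drop the page cache. Then write a file into the OSD's data directory with `oflag=direct`, which bypasses the page cache:

```
# echo 3 > /proc/sys/vm/drop_caches
# dd if=/dev/zero of=/var/lib/ceph/osd/ceph-12/deleteme bs=10G count=1 oflag=direct
0+1 records in
0+1 records out
2147418112 bytes (2.1 GB) copied, 13.0371 s, 165 MB/s
```

The command requests `bs=10G`, but Linux transfers at most about 2 GiB in a single write() call. dd therefore performed one partial write ("0+1 records") of 2147418112 bytes (about 2.1 GB) instead of 10 GB. dd also allocates a buffer of `bs` bytes, so a 10 GB block size needs that much memory on the OSD host.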

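Read performance of a single disk, reading the same file back with `iflag=direct`:

```
# dd if=/var/lib/ceph/osd/ceph-12/deleteme of=/dev/null bs=10G count=1 iflag=direct
0+1 records in
0+1 records out
2147418112 bytes (2.1 GB) copied, 11.8353 s, 181 MB/s
```

Delete the deleteme file after the test so that it does not keep occupying space on the OSD disk.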
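A gentler variant of the same test makes three changes:

- It writes a fixed amount with a 1 MB block size, so dd needs only a 1 MB buffer and no partial records occur.
- It flushes and drops the cache again before reading back.
- It removes the test file afterwards.

This is a sketch under assumptions. `disk_dd_test` is a hypothetical helper, the target path must sit on the OSD's file system as in the commands above, and dropping caches requires root. `DD` and `DROP_CACHES` can be overridden for a dry run.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): direct-I/O write then read of one test file with dd.
# Usage: disk_dd_test <file> [size-in-MB]   (run as root on the OSD host)
DD=${DD:-dd}
DROP_CACHES=${DROP_CACHES:-/proc/sys/vm/drop_caches}

disk_dd_test() {
  local file=$1 size_mb=${2:-2048}
  sync
  echo 3 > "$DROP_CACHES" || return        # drop page cache, dentries and inodes
  # write: size_mb blocks of 1 MiB, bypassing the page cache
  "$DD" if=/dev/zero of="$file" bs=1M count="$size_mb" oflag=direct || return
  sync
  echo 3 > "$DROP_CACHES" || return
  # read the same file back, again with direct I/O
  "$DD" if="$file" of=/dev/null bs=1M count="$size_mb" iflag=direct
  local rc=$?
  rm -f "$file"                            # do not leave the test file on the OSD disk
  return "$rc"
}
```

For example: `disk_dd_test /var/lib/ceph/osd/ceph-12/deleteme 2048`.

GNU dd reports MB/s in decimal units. 2147418112 bytes ÷ 13.0371 s ≈ 164.7 × 10^6 bytes/s, which dd rounds to 165 MB/s. In the 2^20-byte MB used by rados bench and fio, that is about 157 MB/s.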

6. Testing network performance between Ceph nodes with iperf

A. On the first Ceph node, run iperf in server mode with `-s`, using `-p` to specify the listening port:

```
# iperf -s -p 6900
------------------------------------------------------------
Server listening on TCP port 6900
TCP window size: 85.3 KByte (default)
------------------------------------------------------------
[ 4] local 10.1.1.30 port 6900 connected with 10.1.1.20 port 45437
[ ID] Interval Transfer Bandwidth
[ 4] 0.0-10.0 sec 10.7 GBytes 9.17 Gbits/sec
```

B. On another Ceph node, run iperf in client mode with `-c` followed by the server node's host name:

```
# iperf -c node3 -p 6900
------------------------------------------------------------
Client connecting to node3, TCP port 6900
TCP window size: 85.0 KByte (default)
------------------------------------------------------------
[ 3] local 10.1.1.20 port 45437 connected with 10.1.1.30 port 6900
[ ID] Interval Transfer Bandwidth
[ 3] 0.0-10.0 sec 10.7 GBytes 9.17 Gbits/sec
```
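To cover every pair of nodes rather than a single link, the client side can be repeated against each server in turn. The function below is a minimal sketch that assumes an iperf server is already listening on port 6900 on every target. `iperf_all`, any host names other than node3, and the `IPERF` override are illustrative assumptions. Setting `IPERF=echo` gives a dry run.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): run an iperf client against several servers in turn.
# Usage: iperf_all <port> <host>...   e.g. iperf_all 6900 node1 node2 node3
IPERF=${IPERF:-iperf}

iperf_all() {
  local port=$1; shift
  local host
  for host in "$@"; do
    echo "== $host:$port =="
    "$IPERF" -c "$host" -p "$port" -t 10   # 10-second test, matching the runs above
  done
}
```

Running it from each node in turn, for example `iperf_all 6900 node1 node2 node3`, gives a rough picture of the whole cluster network.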

This article is from the "技术成就梦想" blog. Please retain this source: http://andyliu.blog.51cto.com/518879/1856017
