Hadoop基本开发环境搭建(原创,已实践)

Posted 霏霏暮雨

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Hadoop基本开发环境搭建(原创,已实践)相关的知识,希望对你有一定的参考价值。

软件包:

hadoop-2.7.2.tar.gz

hadoop-eclipse-plugin-2.7.2.jar

hadoop-common-2.7.1-bin.zip

eclipse

jdk1.8.45

hadoop-2.7.2(linux和windows各一份)

Linux系统(centos或其它)

Hadoop安装环境

准备环境:

安装Hadoop,安装步骤参见Hadoop安装章节。

安装eclipse。

搭建过程如下:

1. 将hadoop-eclipse-plugin-2.7.2.jar拷贝到eclipse/dropins目录下。

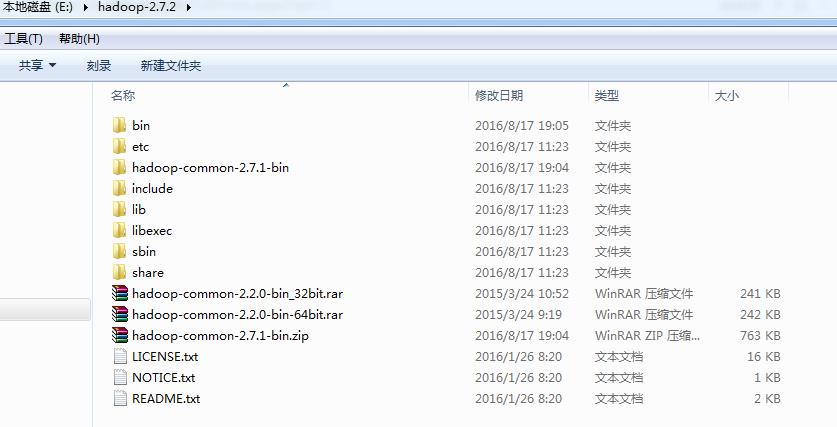

2. 解压hadoop-2.7.2.tar.gz到E盘下。

3. 下载或者编译hadoop-common-2.7.2(由于hadoop-common-2.7.1可以兼容hadoop-common-2.7.2,因此这里使用hadoop-common-2.7.1),如果想编译可参考相关文章。

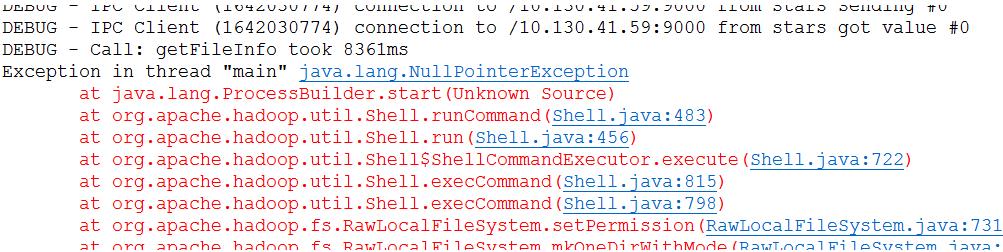

4. 将hadoop-common-2.7.1下的文件全部拷贝到E:\\hadoop-2.7.2\\bin下面,hadoop.dll在system32下面也要放一个,否则会报下图的错误:

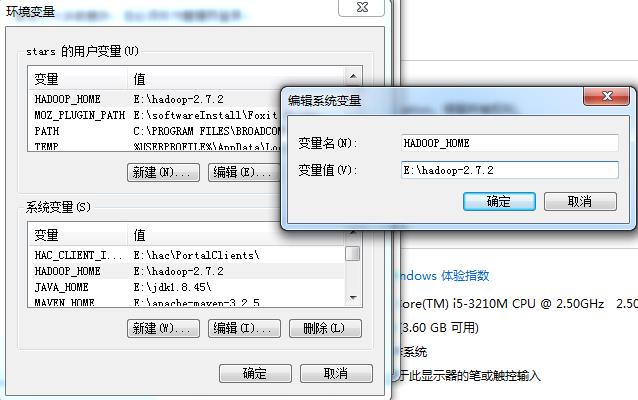

并配置系统环境变量HADOOP_HOME:

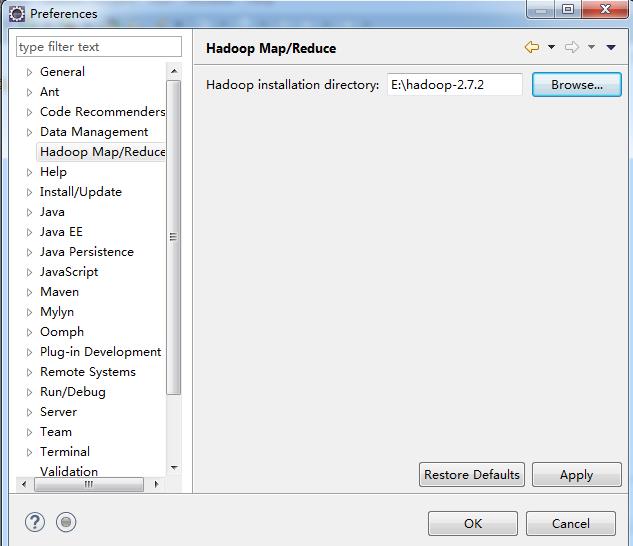

5. 启动eclipse,打开windows->Preferences的Hadoop Map/Reduce中设置安装目录:

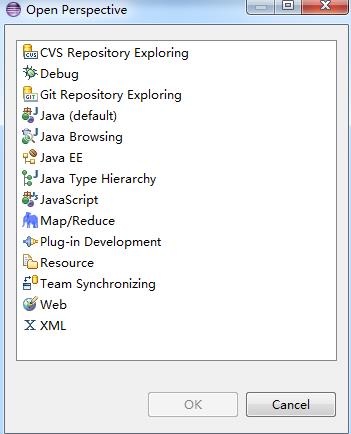

6. 打开Windows->Open Perspective中的Map/Reduce,在此perspective下进行hadoop程序开发。

7. 打开Windows->Show View中的Map/Reduce Locations,如下图右键选择New Hadoop location…新建hadoop连接。

8.

9. 新建工程并添加WordCount类:

10. 把log4j.properties和hadoop集群中的core-site.xml加入到classpath中。我的示例工程是maven组织,因此放到src/main/resources目录。

11. log4j.properties文件内容如下:

log4j.rootLogger=debug,stdout,R log4j.appender.stdout=org.apache.log4j.ConsoleAppender log4j.appender.stdout.layout=org.apache.log4j.PatternLayout log4j.appender.stdout.layout.ConversionPattern=%5p - %m%n log4j.appender.R=org.apache.log4j.RollingFileAppender log4j.appender.R.File=mapreduce_test.log log4j.appender.R.MaxFileSize=1MB log4j.appender.R.MaxBackupIndex=1 log4j.appender.R.layout=org.apache.log4j.PatternLayout log4j.appender.R.layout.ConversionPattern=%p %t %c - %m%n log4j.logger.com.codefutures=DEBUG

12. 在HDFS上创建目录input

hadoop dfs -mkdir input

13. 拷贝本地README.txt到HDFS的input里

hadoop dfs -copyFromLocal /usr/local/hadoop/README.txt input

14. hadoop集群中hdfs-site.xml中要添加下面的配置,否则在eclipse中无法向hdfs中上传文件:

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

15. 若碰到Cannot connect to VM com.sun.jdi.connect.TransportTimeoutException,则关闭防火墙。

16. 书写代码如下:

package com.hadoop.example; import java.io.IOException; import java.util.StringTokenizer; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.Reducer; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; import org.apache.hadoop.util.GenericOptionsParser; public class WordCount { public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> { private final static IntWritable one = new IntWritable(1); private Text word = new Text(); public void map(Object key, Text value, Context context) throws IOException, InterruptedException { StringTokenizer itr = new StringTokenizer(value.toString()); System.out.print("--map: " + value.toString() + "\\n"); while (itr.hasMoreTokens()) { word.set(itr.nextToken()); System.out.print("--map token: " + word.toString() + "\\n"); context.write(word, one); System.out.print("--context: " + word.toString() + "," + one.toString() + "\\n"); } } } public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> { private IntWritable result = new IntWritable(); public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException { int sum = 0; for (IntWritable val : values) { sum += val.get(); } result.set(sum); context.write(key, result); System.out.print("--reduce: " + key.toString() + "," + result.toString() + "\\n"); } } public static void main(String[] args) throws Exception { System.setProperty("hadoop.home.dir", "E:\\\\hadoop-2.7.2"); Configuration conf = new Configuration(); String[] otherArgs = new GenericOptionsParser(conf, args) .getRemainingArgs(); if (otherArgs.length != 2) { System.err.println("Usage: wordcount <in> <out>"); System.exit(2); } Job job = new Job(conf, "word count"); job.setJarByClass(WordCount.class); job.setMapperClass(TokenizerMapper.class); job.setCombinerClass(IntSumReducer.class); job.setReducerClass(IntSumReducer.class); job.setNumReduceTasks(2); job.setOutputKeyClass(Text.class); job.setOutputValueClass(IntWritable.class); FileInputFormat.addInputPath(job, new Path(otherArgs[0])); FileOutputFormat.setOutputPath(job, new Path(otherArgs[1])); System.exit(job.waitForCompletion(true) ? 0 : 1); } }

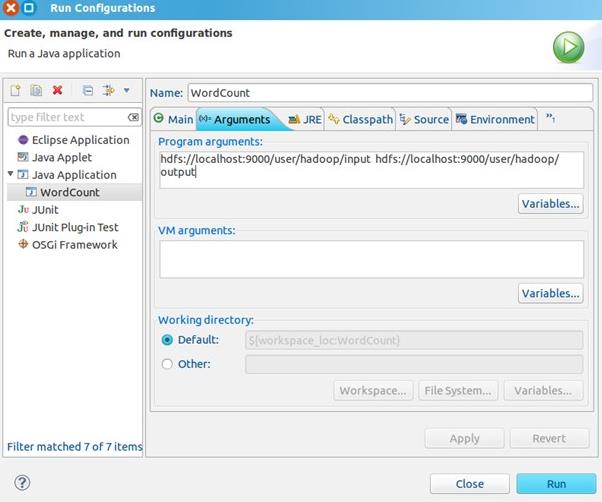

17. 点击WordCount.java,右键,点击Run As—>Run Configurations,配置运行参数,即输入和输出文件夹,java application里面如果没有wordcount就先把当前project run--->java applation一下。

hdfs://localhost:9000/user/hadoop/input hdfs://localhost:9000/user/hadoop/output

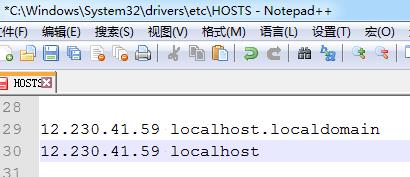

其中的localhost为hadoop集群的域名,也可以直接使用IP,如果使用域名的话需要编辑C:\\Windows\\System32\\drivers\\etc\\HOSTS,添加IP与域名的映射关系

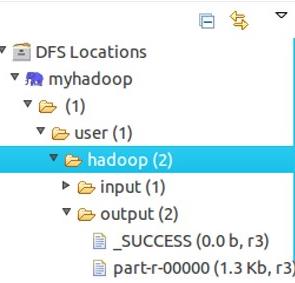

18. 运行完成后,查看运行结果:

方法1:

hadoop dfs -ls output

可以看到有两个输出结果,_SUCCESS和part-r-00000

执行hadoop dfs -cat output/*

方法2:

以上是关于Hadoop基本开发环境搭建(原创,已实践)的主要内容,如果未能解决你的问题,请参考以下文章

原创 Hadoop&Spark 动手实践 5Spark 基础入门,集群搭建以及Spark Shell