Linux Memory Controller

Posted bubbleben


Preface: This article was compiled by the editors of cha138.com. It covers the Linux memory controller, and we hope you find it useful as a reference.

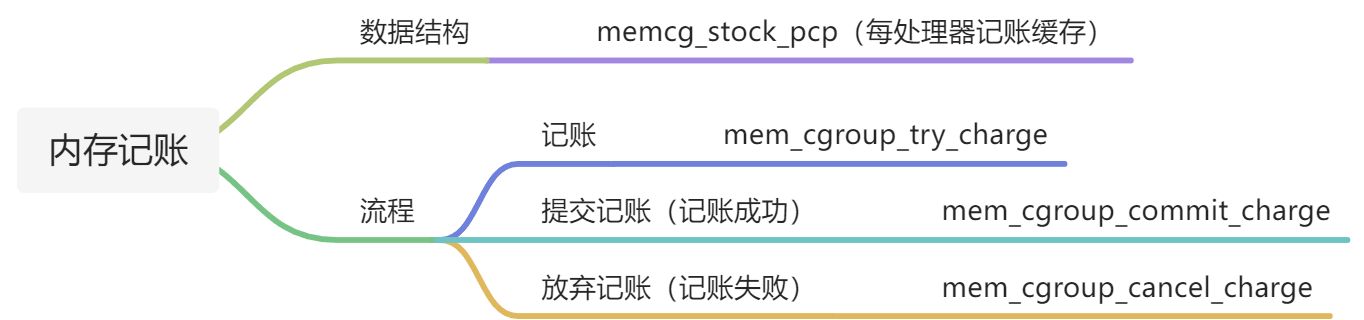

1. memcg_stock_pcp

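```c
// The per-CPU charge cache charges the memory cgroup in batches of 32 pages,
// so one slow-path charge adds 32 pages to the cgroup's memory usage at once
#define CHARGE_BATCH 32U

// When a memory cgroup is charged, the current CPU's memcg_stock_pcp is checked first:
// if the cgroup it caches (memcg_stock_pcp->cached) is the cgroup being charged,
// and its reserved pages (memcg_stock_pcp->nr_pages) are at least the number of pages
// to charge, the charge is served by subtracting that number from the reserve
struct memcg_stock_pcp {
	// Cached memory cgroup (never the root cgroup)
	struct mem_cgroup *cached; /* this never be root cgroup */

	// Number of reserved (pre-charged) pages
	unsigned int nr_pages;

	// Work item used to drain this CPU's stock
	struct work_struct work;

	unsigned long flags;
#define FLUSHING_CACHED_CHARGE	0
};

// A per-CPU charge cache, defined to reduce contention between CPUs
// and make memory charging cheaper
static DEFINE_PER_CPU(struct memcg_stock_pcp, memcg_stock);

static DEFINE_MUTEX(percpu_charge_mutex);
```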
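The benefit of the per-CPU stock is easiest to see by counting. The following is a minimal user-space sketch, not kernel code: `struct batch_stock` and `shared_counter_updates()` are hypothetical names, a group is an `int` id instead of a `struct mem_cgroup *`, and limits, reclaim and interrupt masking are ignored. It charges single pages through one CPU's stock and counts how often the shared page counter would be touched.

```c
#include <assert.h>

#define CHARGE_BATCH 32U  /* same batch size as the kernel constant */

/* Hypothetical one-CPU model of memcg_stock_pcp: a group id stands in
 * for the struct mem_cgroup pointer; -1 means "nothing cached". */
struct batch_stock {
	int cached;
	unsigned int nr_pages;
};

/* Charges `n` single pages to group `id` one by one and returns how many
 * times the shared (contended) page counter had to be touched. */
static unsigned int shared_counter_updates(unsigned int n, int id)
{
	struct batch_stock s = { .cached = -1, .nr_pages = 0 };
	unsigned int updates = 0;

	for (unsigned int i = 0; i < n; i++) {
		if (s.cached == id && s.nr_pages >= 1) {
			s.nr_pages--;            /* fast path: served locally */
			continue;
		}
		updates++;                       /* slow path: charge a full batch */
		if (s.cached != id) {            /* switching groups: the kernel would */
			s.cached = id;           /* uncharge the old reserve first */
			s.nr_pages = 0;
		}
		s.nr_pages += CHARGE_BATCH - 1;  /* keep the surplus locally */
		assert(s.nr_pages <= CHARGE_BATCH);
	}
	return updates;
}
```

Charging 100 single pages to one group touches the shared counter 4 times, once per batch of 32, instead of 100 times; the other 96 charges are served from the local reserve.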

2. mem_cgroup_try_charge

```c
/* Whether the swap controller is active */
#ifdef CONFIG_MEMCG_SWAP
// Swap accounting is enabled
int do_swap_account __read_mostly;
#else
#define do_swap_account		0
#endif

// Try to charge a page
// page: the page to charge
// mm: memory descriptor of the process allocating the physical page
// gfp_mask: allocation mask used for the physical page allocation
// memcgp: output parameter, returns the memory cgroup that was charged
// compound: charge the page as a compound page or as a single page
int mem_cgroup_try_charge(struct page *page, struct mm_struct *mm,
			  gfp_t gfp_mask, struct mem_cgroup **memcgp,
			  bool compound)
{
	struct mem_cgroup *memcg = NULL;
	// For a compound page, compute its number of pages; otherwise it is a single page
	unsigned int nr_pages = compound ? hpage_nr_pages(page) : 1;
	int ret = 0;

	// If the memory controller is disabled, return 0
	if (mem_cgroup_disabled())
		goto out;

	// The page is in the swap cache
	if (PageSwapCache(page)) {
		/*
		 * Every swap fault against a single page tries to charge the
		 * page, bail as early as possible. shmem_unuse() encounters
		 * already charged pages, too. The USED bit is protected by
		 * the page lock, which serializes swap cache removal, which
		 * in turn serializes uncharging.
		 */
		VM_BUG_ON_PAGE(!PageLocked(page), page);
		if (compound_head(page)->mem_cgroup)
			goto out;

		if (do_swap_account) {
			// Get the swap entry from page->private
			swp_entry_t ent = { .val = page_private(page), };
			// Look up the memory cgroup id recorded for this swap entry
			unsigned short id = lookup_swap_cgroup_id(ent);

			rcu_read_lock();
			// Find the memory cgroup by id
			memcg = mem_cgroup_from_id(id);
			// If the cgroup exists, take a reference; if it is no longer online, drop it
			if (memcg && !css_tryget_online(&memcg->css))
				memcg = NULL;
			rcu_read_unlock();
		}
	}

	// If no memory cgroup was found, use the cgroup of the process that owns mm
	if (!memcg)
		memcg = get_mem_cgroup_from_mm(mm);

	// Enter the actual charging path [see Section 2.1]
	ret = try_charge(memcg, gfp_mask, nr_pages);

	// Drop the reference on the memory cgroup
	css_put(&memcg->css);
out:
	// Return the charged memory cgroup through memcgp
	*memcgp = memcg;
	return ret;
}
```
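The choice of which cgroup gets charged can be summarized as a small decision function. This sketch is a model under stated assumptions rather than kernel code: `struct charge_ctx` and `pick_charge_target()` are hypothetical, each boolean stands for the result of the kernel check named in its comment, and group id 0 means "nothing charged".

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical summary of the facts mem_cgroup_try_charge() looks at. */
struct charge_ctx {
	bool memcg_disabled;    /* mem_cgroup_disabled() */
	bool in_swap_cache;     /* PageSwapCache(page) */
	bool already_charged;   /* compound_head(page)->mem_cgroup != NULL */
	bool swap_account;      /* do_swap_account */
	int swap_owner;         /* id from lookup_swap_cgroup_id(), 0 = none */
	bool swap_owner_online; /* css_tryget_online() would succeed */
	int mm_owner;           /* group from get_mem_cgroup_from_mm() */
};

/* Returns the id of the group that would be charged, or 0 when the
 * function bails out before charging anything. */
static int pick_charge_target(const struct charge_ctx *c)
{
	int memcg = 0;

	assert(c);
	if (c->memcg_disabled)
		return 0;
	if (c->in_swap_cache) {
		if (c->already_charged)
			return 0;
		/* prefer the group recorded when the page was swapped out */
		if (c->swap_account && c->swap_owner && c->swap_owner_online)
			memcg = c->swap_owner;
	}
	if (!memcg)             /* fall back to the group of the task owning mm */
		memcg = c->mm_owner;
	return memcg;
}
```

A swapped-in page thus goes back to the group that swapped it out as long as that group is still online; otherwise the charge lands on the current owner of `mm`.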

2.1 try_charge

```c
static int try_charge(struct mem_cgroup *memcg, gfp_t gfp_mask,
		      unsigned int nr_pages)
{
	// The larger of 32 and the number of pages to charge
	unsigned int batch = max(CHARGE_BATCH, nr_pages);
	// Retry at most 5 times
	int nr_retries = MEM_CGROUP_RECLAIM_RETRIES;
	// The cgroup whose usage limit (hard limit) was exceeded
	struct mem_cgroup *mem_over_limit;
	// The page counter whose limit was exceeded (set by page_counter_try_charge())
	struct page_counter *counter;
	// Number of pages reclaimed
	unsigned long nr_reclaimed;
	bool may_swap = true;
	bool drained = false;

	// The task belongs to the root cgroup: the root cgroup has no usage limit,
	// so no charge is needed and we return immediately
	if (mem_cgroup_is_root(memcg))
		return 0;
retry:
	// If the current CPU's charge cache holds enough pages pre-charged to this cgroup,
	// subtract the requested pages from the cache (without charging the cgroup itself)
	// and return success [see Section 2.2]
	if (consume_stock(memcg, nr_pages))
		return 0;

	// 1. Memory+swap accounting disabled: charge mem_cgroup->memory directly [see Section 2.3]
	// 2. Memory+swap accounting enabled: charge memsw first, then memory if that succeeds
	if (!do_memsw_account() ||
	    page_counter_try_charge(&memcg->memsw, batch, &counter)) {
		// Neither this cgroup nor any hierarchical ancestor exceeds its limit: charge succeeded
		if (page_counter_try_charge(&memcg->memory, batch, &counter))
			goto done_restock;
		// Charging mem_cgroup->memory failed because a limit was exceeded;
		// with memory+swap accounting enabled, undo the memsw charge made above
		if (do_memsw_account())
			page_counter_uncharge(&memcg->memsw, batch);
		// Record the cgroup whose memory limit was exceeded
		mem_over_limit = mem_cgroup_from_counter(counter, memory);
	} else {
		// Record the cgroup whose memory+swap limit was exceeded
		mem_over_limit = mem_cgroup_from_counter(counter, memsw);
		may_swap = false;
	}

	// If batch is larger than the actual number of pages, retry with the actual number
	if (batch > nr_pages) {
		batch = nr_pages;
		goto retry;
	}

	/*
	 * Unlike in global OOM situations, memcg is not in a physical
	 * memory shortage. Allow dying and OOM-killed tasks to
	 * bypass the last charges so that they can exit quickly and
	 * free their memory.
	 */
	if (unlikely(tsk_is_oom_victim(current) ||
		     fatal_signal_pending(current) ||
		     current->flags & PF_EXITING))
		goto force;

	/*
	 * Prevent unbounded recursion when reclaim operations need to
	 * allocate memory. This might exceed the limits temporarily,
	 * but we prefer facilitating memory reclaim and getting back
	 * under the limit over triggering OOM kills in these cases.
	 */
	if (unlikely(current->flags & PF_MEMALLOC))
		goto force;

	if (unlikely(task_in_memcg_oom(current)))
		goto nomem;

	if (!gfpflags_allow_blocking(gfp_mask))
		goto nomem;

	// Record a MEMCG_MAX event in mem_cgroup_stat_cpu
	mem_cgroup_event(mem_over_limit, MEMCG_MAX);

	// mem_over_limit is the cgroup that exceeded its limit:
	// try to reclaim memory from it and get the number of pages reclaimed
	nr_reclaimed = try_to_free_mem_cgroup_pages(mem_over_limit, nr_pages,
						    gfp_mask, may_swap);

	// If after reclaim the remaining headroom covers the requested pages, retry [see Section 2.4]
	if (mem_cgroup_margin(mem_over_limit) >= nr_pages)
		goto retry;

	// drained (initially false) records whether the pages reserved in the
	// per-CPU charge caches have already been returned to the cgroup
	if (!drained) {
		// Return the per-CPU reserved pages to the cgroup, then retry [see Section 2.5]
		drain_all_stock(mem_over_limit);
		drained = true;
		goto retry;
	}

	// If the allocation must not be retried, go to nomem
	if (gfp_mask & __GFP_NORETRY)
		goto nomem;
	/*
	 * Even though the limit is exceeded at this point, reclaim
	 * may have been able to free some pages. Retry the charge
	 * before killing the task.
	 *
	 * Only for regular pages, though: huge pages are rather
	 * unlikely to succeed so close to the limit, and we fall back
	 * to regular pages anyway in case of failure.
	 */
	// If reclaim freed some pages from the over-limit cgroup and the request is
	// at most 8 pages (1 << PAGE_ALLOC_COSTLY_ORDER), retry
	if (nr_reclaimed && nr_pages <= (1 << PAGE_ALLOC_COSTLY_ORDER))
		goto retry;
	/*
	 * At task move, charge accounts can be doubly counted. So, it's
	 * better to wait until the end of task_move if something is going on.
	 */
	if (mem_cgroup_wait_acct_move(mem_over_limit))
		goto retry;

	// Retry at most 5 times
	if (nr_retries--)
		goto retry;

	// If the allocation must not fail, force the charge and let usage exceed the limit
	if (gfp_mask & __GFP_NOFAIL)
		goto force;

	if (fatal_signal_pending(current))
		goto force;

	// Record a MEMCG_OOM event in mem_cgroup_stat_cpu
	mem_cgroup_event(mem_over_limit, MEMCG_OOM);

	// Put the task into the memory cgroup OOM state
	mem_cgroup_oom(mem_over_limit, gfp_mask,
		       get_order(nr_pages * PAGE_SIZE));
nomem:
	// If the allocation is allowed to fail, return -ENOMEM
	if (!(gfp_mask & __GFP_NOFAIL))
		return -ENOMEM;
force:
	/*
	 * The allocation either can't fail or will lead to more memory
	 * being freed very soon. Allow memory usage go over the limit
	 * temporarily by force charging it.
	 */
	// Force the charge, letting usage temporarily exceed the hard limit
	page_counter_charge(&memcg->memory, nr_pages);
	if (do_memsw_account())
		page_counter_charge(&memcg->memsw, nr_pages);
	// Take one cgroup reference per charged page
	css_get_many(&memcg->css, nr_pages);

	return 0;

done_restock:
	// Take one cgroup reference per charged page
	css_get_many(&memcg->css, batch);
	// Keep the surplus of the batch in the current CPU's charge cache [see Section 2.6]
	if (batch > nr_pages)
		refill_stock(memcg, batch - nr_pages);

	/*
	 * If the hierarchy is above the normal consumption range, schedule
	 * reclaim on returning to userland. We can perform reclaim here
	 * if __GFP_RECLAIM but let's always punt for simplicity and so that
	 * GFP_KERNEL can consistently be used during reclaim. @memcg is
	 * not recorded as it most likely matches current's and won't
	 * change in the meantime. As high limit is checked again before
	 * reclaim, the cost of mismatch is negligible.
	 */
	do {
		if (page_counter_read(&memcg->memory) > memcg->high) {
			/* Don't bother a random interrupted task */
			if (in_interrupt()) {
				schedule_work(&memcg->high_work);
				break;
			}
			current->memcg_nr_pages_over_high += batch;
			set_notify_resume(current);
			break;
		}
	} while ((memcg = parent_mem_cgroup(memcg)));

	return 0;
}
```
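The batching part of `try_charge()` (charge a whole batch, and fall back to the exact request only if the batch does not fit) can be modelled in isolation. In this minimal sketch `struct flat_counter`, `counter_try_charge()` and `try_charge_model()` are hypothetical; there is a single counter with no hierarchy and no memsw, and none of the reclaim, drain, retry or OOM steps are modelled.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>

#define CHARGE_BATCH 32U

/* Hypothetical flat counter: one level, no memsw, no reclaim. */
struct flat_counter {
	unsigned long usage;
	unsigned long limit;
};

static bool counter_try_charge(struct flat_counter *c, unsigned long n)
{
	if (c->usage + n > c->limit)
		return false;
	c->usage += n;
	return true;
}

/* Only the batching logic of try_charge(): try a full batch, fall back to
 * the exact request, and give up where the kernel would start reclaim.
 * On success *surplus gets the pages that refill_stock() would keep. */
static int try_charge_model(struct flat_counter *c, unsigned int nr_pages,
			    unsigned int *surplus)
{
	unsigned int batch = nr_pages > CHARGE_BATCH ? nr_pages : CHARGE_BATCH;

	assert(nr_pages > 0);
	for (;;) {
		if (counter_try_charge(c, batch)) {
			*surplus = batch - nr_pages;
			return 0;
		}
		if (batch > nr_pages) {
			batch = nr_pages;   /* "batch = nr_pages; goto retry;" */
			continue;
		}
		return -ENOMEM;             /* reclaim/drain/OOM would follow here */
	}
}
```

With 50 of 100 pages used, a 1-page request charges a full batch (usage 82) and leaves 31 pages for `refill_stock()`; with 90 pages used the batch no longer fits, so only the single page is charged (usage 91).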

2.2 consume_stock

```c
static bool consume_stock(struct mem_cgroup *memcg, unsigned int nr_pages)
{
	struct memcg_stock_pcp *stock;
	unsigned long flags;
	bool ret = false;

	// If more pages are requested than the cache can hold (32), return at once
	// and charge the memory cgroup directly
	if (nr_pages > CHARGE_BATCH)
		return ret;

	local_irq_save(flags);

	// Get the current CPU's charge cache
	stock = this_cpu_ptr(&memcg_stock);
	// Serve the charge from the cache only when both conditions hold:
	// 1. the cgroup being charged is the one the cache holds
	// 2. the cache holds at least as many reserved pages as requested
	if (memcg == stock->cached && stock->nr_pages >= nr_pages) {
		// Subtract the charged pages from the reserve
		stock->nr_pages -= nr_pages;
		ret = true;
	}

	local_irq_restore(flags);

	return ret;
}
```
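The decision in `consume_stock()` reduces to two comparisons. A minimal sketch, assuming a single CPU with no interrupt masking; `struct cons_stock` and `consume_stock_model()` are hypothetical names, and an `int` id stands in for the cgroup pointer.

```c
#include <assert.h>
#include <stdbool.h>

#define CHARGE_BATCH 32U

/* Hypothetical stand-in for struct memcg_stock_pcp on one CPU. */
struct cons_stock {
	int cached;             /* group id, -1 when nothing is cached */
	unsigned int nr_pages;  /* pages already charged to that group */
};

/* Same decision as consume_stock(), minus per-CPU access and IRQ masking. */
static bool consume_stock_model(struct cons_stock *stock, int memcg,
				unsigned int nr_pages)
{
	assert(stock);
	if (nr_pages > CHARGE_BATCH)
		return false;   /* the stock never holds more than one batch */
	if (memcg == stock->cached && stock->nr_pages >= nr_pages) {
		stock->nr_pages -= nr_pages;
		return true;
	}
	return false;
}
```

A request that is too large, aimed at a different group, or larger than the remaining reserve leaves the stock untouched and falls through to the page counters.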

2.3 page_counter_try_charge

```c
// counter: a page counter of a mem_cgroup (memory, memsw, kmem, tcpmem or swap)
// nr_pages: number of pages to charge
// fail: output parameter, set to the page counter whose limit was exceeded
bool page_counter_try_charge(struct page_counter *counter,
			     unsigned long nr_pages,
			     struct page_counter **fail)
{
	struct page_counter *c;

	// Walk this page counter and the page counters of all hierarchical ancestors
	for (c = counter; c; c = c->parent) {
		long new;

		// Add the pages to the counter's current usage to get the new usage
		new = atomic_long_add_return(nr_pages, &c->count);
		// Check whether the new usage exceeds the counter's hard limit
		if (new > c->limit) {
			// Over the hard limit: subtract the pages from the usage again
			atomic_long_sub(nr_pages, &c->count);
			/*
			 * This is racy, but we can live with some
			 * inaccuracy in the failcnt.
			 */
			// Count one more limit failure and report this counter as the one that failed
			c->failcnt++;
			*fail = c;
			goto failed;
		}
		/*
		 * Just like with failcnt, we can live with some
		 * inaccuracy in the watermark.
		 */
		// Within the limit: if the new usage exceeds the recorded peak, update the peak
		if (new > c->watermark)
			c->watermark = new;
	}
	return true;

failed:
	// The charge failed: cancel it on every counter below the one that failed
	for (c = counter; c != *fail; c = c->parent)
		page_counter_cancel(c, nr_pages);

	return false;
}
```
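The charge-then-roll-back pattern of `page_counter_try_charge()` can be reproduced in single-threaded user-space C. In this sketch `struct pc_model` and `pc_try_charge()` are hypothetical; plain `long` fields replace `atomic_long_t`, and the rollback loop subtracts directly where the kernel calls `page_counter_cancel()`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical single-threaded page_counter: plain longs instead of
 * atomic_long_t, and a parent pointer for the hierarchy. */
struct pc_model {
	long count;
	long limit;
	long watermark;
	unsigned long failcnt;
	struct pc_model *parent;
};

static bool pc_try_charge(struct pc_model *counter, long nr_pages,
			  struct pc_model **fail)
{
	struct pc_model *c;

	assert(nr_pages > 0);
	for (c = counter; c; c = c->parent) {
		long new = c->count + nr_pages;   /* charge this level first */

		c->count = new;
		if (new > c->limit) {
			c->count -= nr_pages;     /* undo the failing level */
			c->failcnt++;
			*fail = c;
			goto failed;
		}
		if (new > c->watermark)
			c->watermark = new;       /* record the peak usage */
	}
	return true;

failed:
	/* Roll back every level below the one that failed. */
	for (c = counter; c != *fail; c = c->parent)
		c->count -= nr_pages;
	return false;
}
```

Charging 20 pages to a child with room to spare but a parent at 90/100 fails at the parent: the parent's count is restored immediately, the child's is rolled back by the `failed:` loop, and the parent's `failcnt` goes up by one. As in the kernel, the child's `watermark` is not rolled back, so it still records the transient peak of 20.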

2.4 mem_cgroup_margin

```c
static unsigned long mem_cgroup_margin(struct mem_cgroup *memcg)
{
	unsigned long margin = 0;
	unsigned long count;
	unsigned long limit;

	// Current usage of the memory counter
	count = page_counter_read(&memcg->memory);
	// Hard limit of the memory counter
	limit = READ_ONCE(memcg->memory.limit);
	// Remaining memory headroom
	if (count < limit)
		margin = limit - count;

	// With memory+swap accounting enabled
	if (do_memsw_account()) {
		// Current usage of the memsw counter
		count = page_counter_read(&memcg->memsw);
		// Hard limit of the memsw counter
		limit = READ_ONCE(memcg->memsw.limit);
		// Remaining memory+swap headroom; the overall margin is the smaller of the two
		if (count <= limit)
			margin = min(margin, limit - count);
		else
			margin = 0;
	}

	return margin;
}
```
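`mem_cgroup_margin()` is plain arithmetic on two counter snapshots. A minimal sketch, with the hypothetical `struct margin_input` holding the values that the kernel reads with `page_counter_read()` and `READ_ONCE()`:

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical snapshot of the values mem_cgroup_margin() reads. */
struct margin_input {
	unsigned long mem_count, mem_limit;
	bool memsw_account;                 /* do_memsw_account() */
	unsigned long memsw_count, memsw_limit;
};

static unsigned long margin_model(const struct margin_input *in)
{
	unsigned long margin = 0;

	assert(in);
	if (in->mem_count < in->mem_limit)
		margin = in->mem_limit - in->mem_count;

	if (in->memsw_account) {
		if (in->memsw_count <= in->memsw_limit) {
			unsigned long m = in->memsw_limit - in->memsw_count;
			margin = m < margin ? m : margin;   /* min() */
		} else {
			margin = 0;         /* memsw already over its limit */
		}
	}
	return margin;
}
```

For example, memory at 80/100 and memsw at 140/150 give a margin of min(20, 10) = 10 pages; a counter that was force-charged past its limit yields a margin of 0.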

2.5 drain_all_stock

```c
/*
 * Drains all per-CPU charge caches for given root_memcg resp. subtree
 * of the hierarchy under it.
 */
// Return the pages reserved in the per-CPU charge caches to their memory cgroups
static void drain_all_stock(struct mem_cgroup *root_memcg)
{
	int cpu, curcpu;

	/* If someone's already draining, avoid adding running more workers. */
	if (!mutex_trylock(&percpu_charge_mutex))
		return;
	/*
	 * Notify other cpus that system-wide "drain" is running
	 * We do not care about races with the cpu hotplug because cpu down
	 * as well as workers from this path always operate on the local
	 * per-cpu data. CPU up doesn't touch memcg_stock at all.
	 */
	// Get the current CPU (preemption stays disabled until put_cpu())
	curcpu = get_cpu();
	// Iterate over every online CPU
	for_each_online_cpu(cpu) {
		// That CPU's charge cache
		struct memcg_stock_pcp *stock = &per_cpu(memcg_stock, cpu);
		struct mem_cgroup *memcg;

		// The memory cgroup held by that charge cache
		memcg = stock->cached;
		// Skip this CPU if no cgroup is cached, no pages are reserved,
		// or a reference on the cgroup cannot be taken
		if (!memcg || !stock->nr_pages || !css_tryget(&memcg->css))
			continue;
		// Skip cgroups outside the subtree rooted at root_memcg
		if (!mem_cgroup_is_descendant(memcg, root_memcg)) {
			css_put(&memcg->css);
			continue;
		}
		// Set FLUSHING_CACHED_CHARGE on memcg_stock_pcp; if it was already set, a drain is pending
		if (!test_and_set_bit(FLUSHING_CACHED_CHARGE, &stock->flags)) {
			// On the current CPU, return the reserved pages directly [see Section 2.5.1];
			// on other CPUs, queue the drain work on that CPU
			if (cpu == curcpu)
				drain_local_stock(&stock->work);
			else
				schedule_work_on(cpu, &stock->work);
		}
		css_put(&memcg->css);
	}
	put_cpu();
	mutex_unlock(&percpu_charge_mutex);
}
```
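The per-CPU walk in `drain_all_stock()` can be sketched with an array standing in for the CPUs. This is a hypothetical single-threaded model: `struct drain_cpu_stock`, `parent_of[]`, `in_subtree()` and `drain_all_model()` are illustrative names, the `percpu_charge_mutex` and css reference counting are omitted, and remote CPUs only get their flushing flag set, standing in for the work that `schedule_work_on()` would queue.

```c
#include <assert.h>
#include <stdbool.h>

#define NR_CPUS_MODEL 4
#define NR_GROUPS_MODEL 4

/* Hypothetical hierarchy: parent_of[g] is g's parent, -1 for the root.
 * Group 0 is the root, 1 and 3 are its children, 2 is a child of 1. */
static const int parent_of[NR_GROUPS_MODEL] = { -1, 0, 1, 0 };

struct drain_cpu_stock {
	int cached;             /* -1: nothing cached */
	unsigned int nr_pages;
	bool flushing;          /* stands in for FLUSHING_CACHED_CHARGE */
};

/* Like mem_cgroup_is_descendant(): true if g == root or g is below root. */
static bool in_subtree(int g, int root)
{
	for (; g != -1; g = parent_of[g])
		if (g == root)
			return true;
	return false;
}

/* Drains the local CPU's stock at once and flags the remote ones;
 * returns how many remote drains would have been queued. */
static int drain_all_model(struct drain_cpu_stock stocks[], int curcpu, int root)
{
	int queued = 0;

	assert(curcpu >= 0 && curcpu < NR_CPUS_MODEL);
	for (int cpu = 0; cpu < NR_CPUS_MODEL; cpu++) {
		struct drain_cpu_stock *s = &stocks[cpu];

		if (s->cached < 0 || !s->nr_pages)
			continue;                   /* nothing to return */
		if (!in_subtree(s->cached, root))
			continue;                   /* unrelated group */
		if (s->flushing)
			continue;                   /* a drain is already pending */
		s->flushing = true;
		if (cpu == curcpu) {
			s->nr_pages = 0;            /* drain_stock() done locally */
			s->cached = -1;
			s->flushing = false;        /* drain_local_stock() clears it */
		} else {
			queued++;                   /* schedule_work_on(cpu, ...) */
		}
	}
	return queued;
}
```

Draining group 1's subtree from CPU 0 empties CPU 0's stock at once, queues one remote drain for the CPU caching group 2 (a child of 1), and ignores group 3; calling it again queues nothing, because the pending flag is still set.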

2.5.1 drain_local_stock

```c
static void drain_local_stock(struct work_struct *dummy)
{
	struct memcg_stock_pcp *stock;
	unsigned long flags;

	/*
	 * The only protection from memory hotplug vs. drain_stock races is
	 * that we always operate on local CPU stock here with IRQ disabled
	 */
	local_irq_save(flags);

	// Get the current CPU's charge cache
	stock = this_cpu_ptr(&memcg_stock);
	// [see Section 2.5.2]
	drain_stock(stock);
	// Clear the FLUSHING_CACHED_CHARGE bit
	clear_bit(FLUSHING_CACHED_CHARGE, &stock->flags);

	local_irq_restore(flags);
}
```

2.5.2 drain_stock

```c
/*
 * Returns stocks cached in percpu and reset cached information.
 */
static void drain_stock(struct memcg_stock_pcp *stock)
{
	// The memory cgroup held by this charge cache
	struct mem_cgroup *old = stock->cached;

	// The cache holds reserved pages
	if (stock->nr_pages) {
		// Returning the reserved pages lowers the cgroup's memory usage by the same amount
		page_counter_uncharge(&old->memory, stock->nr_pages);
		// With memory+swap accounting, the memory+swap usage drops by the same amount
		if (do_memsw_account())
			page_counter_uncharge(&old->memsw, stock->nr_pages);
		// Drop the cgroup references taken for these pages
		css_put_many(&old->css, stock->nr_pages);
		// Reset the reserved page count to 0
		stock->nr_pages = 0;
	}
	// Clear the cached memory cgroup
	stock->cached = NULL;
}
```
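`drain_stock()` hands the reserve back in three matching steps. A minimal sketch with the hypothetical `struct drain_group` and `struct drain_cache` types, where plain counters stand in for the page counters and the css reference count:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical group: plain counters instead of page counters and css refs. */
struct drain_group {
	unsigned long memory;
	unsigned long memsw;
	unsigned long css_refs;
};

struct drain_cache {
	struct drain_group *cached;
	unsigned int nr_pages;
};

/* Same steps as drain_stock(). */
static void drain_stock_model(struct drain_cache *stock, bool memsw_account)
{
	struct drain_group *old = stock->cached;

	if (stock->nr_pages) {
		assert(old);
		old->memory -= stock->nr_pages;       /* page_counter_uncharge(memory) */
		if (memsw_account)
			old->memsw -= stock->nr_pages;    /* page_counter_uncharge(memsw) */
		old->css_refs -= stock->nr_pages;     /* css_put_many() */
		stock->nr_pages = 0;
	}
	stock->cached = NULL;
}
```

Because `try_charge()` took one reference per charged page, returning N reserved pages must drop exactly N references, which keeps the cgroup alive only as long as pages are charged to it.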

2.6 refill_stock

```c
static void refill_stock(struct mem_cgroup *memcg, unsigned int nr_pages)
{
	struct memcg_stock_pcp *stock;
	unsigned long flags;

	local_irq_save(flags);

	// Get the current CPU's charge cache
	stock = this_cpu_ptr(&memcg_stock);
	// The cache holds a different cgroup than the target
	if (stock->cached != memcg) { /* reset if necessary */
		// Return the cached pages to their memory cgroup
		drain_stock(stock);
		// Make the target cgroup the cached one
		stock->cached = memcg;
	}
	// Keep the surplus pages in the charge cache
	stock->nr_pages += nr_pages;

	// If the total reserve now exceeds 32 pages, return it to the memory cgroup
	if (stock->nr_pages > CHARGE_BATCH)
		drain_stock(stock);

	local_irq_restore(flags);
}
```
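Taken together with its drain, `refill_stock()` never leaves more than `CHARGE_BATCH` pages in the reserve. A minimal sketch under the same kind of assumptions: `struct refill_group`, `struct refill_cache`, `refill_drain()` and `refill_stock_model()` are hypothetical, and per-CPU access, interrupt masking, memsw and css references are left out.

```c
#include <assert.h>
#include <stddef.h>

#define CHARGE_BATCH 32U

/* Hypothetical group with only a memory usage counter. */
struct refill_group {
	unsigned long memory;
};

struct refill_cache {
	struct refill_group *cached;
	unsigned int nr_pages;
};

/* Simplified drain_stock(): no memsw, no css references. */
static void refill_drain(struct refill_cache *stock)
{
	if (stock->nr_pages) {
		assert(stock->cached);
		stock->cached->memory -= stock->nr_pages;
		stock->nr_pages = 0;
	}
	stock->cached = NULL;
}

/* Same decisions as refill_stock(). */
static void refill_stock_model(struct refill_cache *stock,
			       struct refill_group *memcg,
			       unsigned int nr_pages)
{
	if (stock->cached != memcg) {       /* reset if necessary */
		refill_drain(stock);
		stock->cached = memcg;
	}
	stock->nr_pages += nr_pages;

	if (stock->nr_pages > CHARGE_BATCH) /* never keep more than one batch */
		refill_drain(stock);
}
```

Switching the stock from group `a` to group `b` first returns `a`'s 32 reserved pages; adding 31 pages on top of `b`'s 10 pushes the reserve to 41 > 32, so the whole reserve is handed back to `b` immediately.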
