Binary deployment of a highly available K8S cluster with ansible + kubeasz

Posted by 我的紫霞辣辣


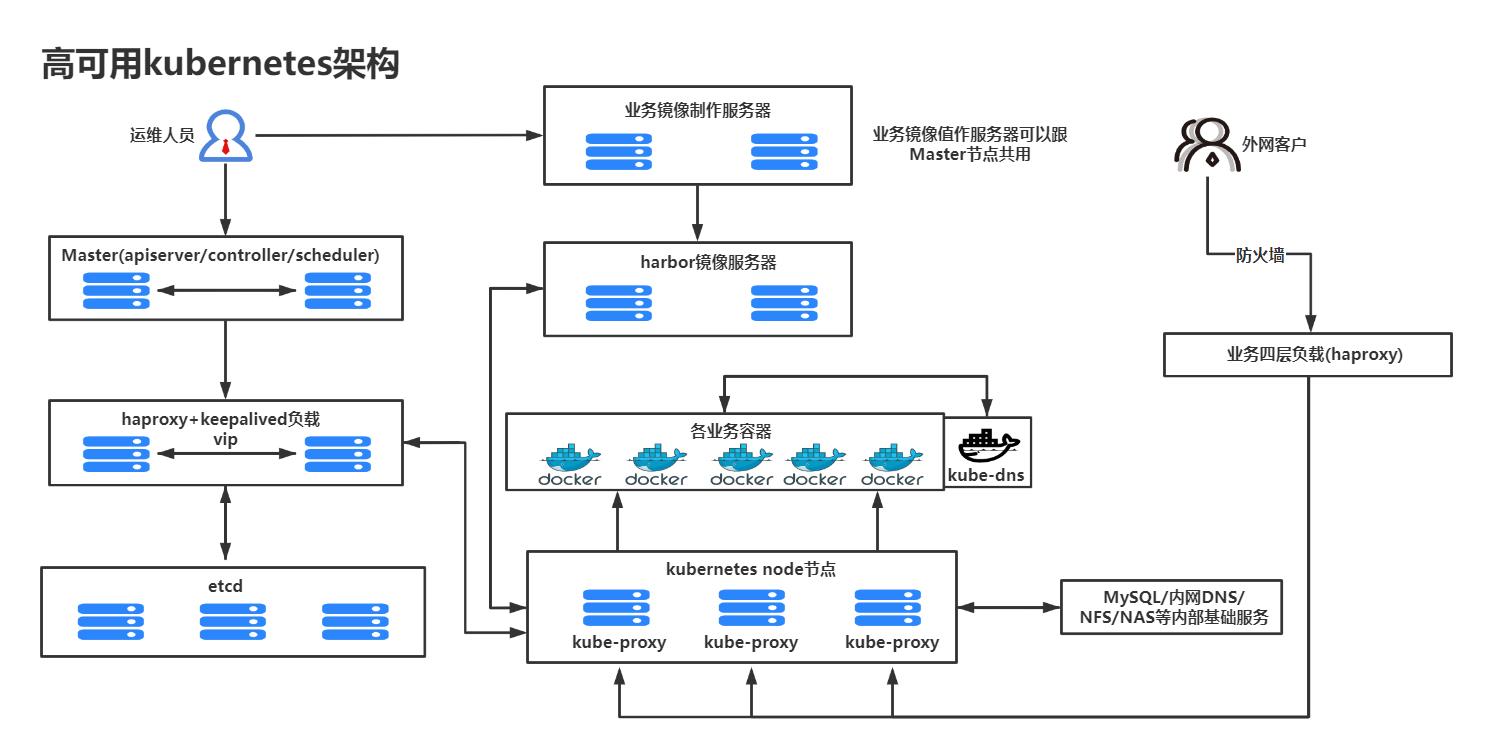

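K8S architecture diagram

Base environment

| Role | Server IP addresses | Notes |
|---|---|---|
| Ansible (2 hosts) | 192.168.15.101/102 | Deployment hosts for the K8S cluster; can be shared with the master nodes |
| K8s Master (2 hosts) | 192.168.15.101/102 | K8S control plane; active/standby high availability through a VIP |
| Harbor (2 hosts) | 192.168.15.103/104 | Highly available image registry |
| Etcd (at least 3 hosts) | 192.168.15.105/106/107 | Servers that store the K8S cluster data |
| Haproxy (2 hosts) | 192.168.15.108/109 | Highly available proxy (load balancer) in front of kube-apiserver |
| K8s Node (2-N hosts) | 192.168.15.110/111/… | Servers that actually run the containers; at least two for high availability |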

Hostnames and the IP addresses used in the steps below

| Hostname | Server IP | VIP |
|---|---|---|
| k8s-master01 | 192.168.15.101 | 192.168.15.210 |
| k8s-master02 | 192.168.15.102 | 192.168.15.210 |
| k8s-harbor01 | 192.168.15.103 | |
| k8s-etcd01 | 192.168.15.104 | |
| k8s-etcd02 | 192.168.15.105 | |
| k8s-etcd03 | 192.168.15.106 | |
| k8s-ha01 | 192.168.15.107 | |
| k8s-ha02 | 192.168.15.108 | |
| k8s-node01 | 192.168.15.109 | |
| k8s-node02 | 192.168.15.110 | |

Harbor image registry over HTTPS

Install Docker

# Remove any previously installed Docker (skip this step if Docker was never installed)

sudo yum remove docker docker-common docker-selinux docker-engine

# Add the docker-ce yum repository (skip if already done)

wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

# Install Docker

yum install docker-ce -y

# Configure a registry mirror to speed up image pulls

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://hahexyip.mirror.aliyuncs.com"]
}
EOF

# Start Docker and enable it at boot

systemctl enable docker && systemctl start docker

# Check that Docker was installed successfully

docker version

Install docker-compose on the Harbor host

# Download Docker Compose

curl -L https://download.fastgit.org/docker/compose/releases/download/1.27.4/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

# Make it executable

chmod +x /usr/local/bin/docker-compose

# Check the installed version

docker-compose --version

# Bash command completion

curl -L https://raw.githubusercontent.com/docker/compose/1.25.5/contrib/completion/bash/docker-compose > /etc/bash_completion.d/docker-compose

Create the CA certificate

# Directory that holds the key and the certificate

mkdir -p /usr/local/src/harbor/certs

# Generate the private key

openssl genrsa -out /usr/local/src/harbor/certs/harbor-ca.key

ls /usr/local/src/harbor/certs/

# harbor-ca.key

# Self-sign the certificate (this produces the public certificate)

openssl req -x509 -new -nodes -key /usr/local/src/harbor/certs/harbor-ca.key -subj "/CN=harbor.nana.com" -days 3560 -out /usr/local/src/harbor/certs/harbor-ca.crt

ls /usr/local/src/harbor/certs/

# harbor-ca.crt harbor-ca.key
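The certificate above carries the host name only in its CN field. Clients built with recent Go releases may reject such a certificate unless the name also appears as a subjectAltName. The following is a minimal sketch of the same self-signing step with a SAN added. It assumes OpenSSL 1.1.1 or newer (the `-addext` option does not exist in 1.0.2), and it writes into a scratch directory (`CERT_DIR`, a name chosen here for illustration) rather than /usr/local/src/harbor/certs.

```shell
#!/bin/bash
# Sketch: self-signed certificate that includes a subjectAltName entry.
# Assumption: OpenSSL >= 1.1.1, which provides the -addext option.
CERT_DIR="$(mktemp -d)"      # hypothetical scratch directory for this example
DOMAIN="harbor.nana.com"

# Private key, with an explicit 2048-bit size
openssl genrsa -out "$CERT_DIR/harbor-ca.key" 2048 2>/dev/null

# Self-signed certificate with the domain in both CN and SAN
openssl req -x509 -new -nodes \
  -key "$CERT_DIR/harbor-ca.key" \
  -subj "/CN=$DOMAIN" \
  -addext "subjectAltName=DNS:$DOMAIN" \
  -days 3560 \
  -out "$CERT_DIR/harbor-ca.crt"

# Show the subject, the validity window and the SAN extension
openssl x509 -in "$CERT_DIR/harbor-ca.crt" -noout -subject -dates
openssl x509 -in "$CERT_DIR/harbor-ca.crt" -noout -text | grep -A1 "Subject Alternative Name"
```

The two `openssl x509` calls only read the file, so they are also a convenient way to inspect the certificate generated by the original commands.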

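Deploy the Harbor service on the Harbor host

Download link: harbor-offline-installer-v2.1.0.tgz

Extraction code: 1234

mkdir /apps

cd /apps

# Upload the installer from the local machine (rz is part of the lrzsz package)

rz -E harbor-offline-installer-v2.1.0.tgz

tar -xvf harbor-offline-installer-v2.1.0.tgz

cd harbor/

cp harbor.yml.tmpl harbor.yml

# Edit the Harbor configuration file

vim harbor.yml

...

hostname: harbor.nana.com # domain name of the Harbor web UI

...

  # the next two keys sit under the https: section
  certificate: /usr/local/src/harbor/certs/harbor-ca.crt # path to the certificate
  private_key: /usr/local/src/harbor/certs/harbor-ca.key # path to the private key

...

harbor_admin_password: 123 # login password for the Harbor web UI

...

data_volume: /data # in production, point this at a dedicated data disk

# Install the Harbor registry

./install.sh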

Sync the crt certificate to the clients

- Docker must already be installed on k8s-master01

# On k8s-master01 (the client): create the directory for the certificate and add the name resolution entry

k8s-master01: mkdir -p /etc/docker/certs.d/harbor.nana.com

k8s-master01: echo 192.168.15.103 harbor.nana.com >> /etc/hosts

# Send the certificate from the Harbor host to k8s-master01

k8s-harbor01: scp /usr/local/src/harbor/certs/harbor-ca.crt 192.168.15.101:/etc/docker/certs.d/harbor.nana.com

# Mark harbor.nana.com as an insecure registry in the Docker service unit

k8s-master01:

vim /usr/lib/systemd/system/docker.service

...

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry harbor.nana.com

...

# Restart Docker

k8s-master01:

systemctl daemon-reload

systemctl restart docker

Test the Harbor login, on k8s-master01

# Log in to the Harbor registry

docker login harbor.nana.com

# Pull an image

docker pull alpine

# Tag the alpine image for the Harbor registry

docker tag alpine harbor.nana.com/library/alpine:latest

# Push the image to the Harbor registry

docker push harbor.nana.com/library/alpine:latest

Haproxy + keepalived: highly available load balancing

Configure keepalived

- Run on k8s-ha01

# Install haproxy and keepalived

yum -y install haproxy keepalived

# Configure keepalived

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 55
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.15.188 dev eth0 label eth0:0
    }
}

# Start keepalived

systemctl restart keepalived && systemctl enable keepalived

- Run on k8s-ha02

# Install haproxy and keepalived

yum -y install haproxy keepalived

# Configure keepalived

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 55
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.15.188 dev eth0 label eth0:0
    }
}

# Start keepalived

systemctl restart keepalived && systemctl enable keepalived
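Once keepalived runs on both hosts, only the MASTER should hold 192.168.15.188. The sketch below is one way to check that on either load balancer. The helper name `has_vip` is made up for this example, and the interface name eth0 is taken from the configuration above.

```shell
#!/bin/bash
# Sketch: report whether this host currently holds the keepalived VIP.
VIP="192.168.15.188"
IFACE="eth0"

# has_vip ADDRESS: reads `ip -4 -o addr show` output on stdin and succeeds
# if ADDRESS is listed as an interface address (followed by its prefix length).
has_vip() {
  grep -qF "inet $1/"
}

if ip -4 -o addr show dev "$IFACE" 2>/dev/null | has_vip "$VIP"; then
  echo "VIP $VIP is bound on this host"
else
  echo "VIP $VIP is not bound on this host"
fi
```

Stopping keepalived on k8s-ha01 and running the check on k8s-ha02 is a simple way to confirm that the address fails over.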

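Configure Haproxy

- Tune the kernel parameters on both k8s-ha01 and k8s-ha02. This step is required: without net.ipv4.ip_nonlocal_bind = 1, haproxy cannot bind the VIP on the host that does not currently hold it, and the service fails to start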

vim /etc/sysctl.conf

# Controls source route verification

net.ipv4.conf.default.rp_filter = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

# Do not accept source routing

net.ipv4.conf.default.accept_source_route = 0

# Controls the System Request debugging functionality of the kernel

kernel.sysrq = 0

# Controls whether core dumps will append the PID to the core filename.

# Useful for debugging multi-threaded applications.

kernel.core_uses_pid = 1

# Controls the use of TCP syncookies

net.ipv4.tcp_syncookies = 1

# Disable netfilter on bridges.

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

# Controls the default maximum size of a message queue

kernel.msgmnb = 65536

# Controls the maximum size of a message, in bytes

kernel.msgmax = 65536

# Controls the maximum shared segment size, in bytes

kernel.shmmax = 68719476736

# Controls the maximum number of shared memory segments, in pages

kernel.shmall = 4294967296

# TCP kernel parameters

net.ipv4.tcp_mem = 786432 1048576 1572864

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_sack = 1

# socket buffer

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 262144

net.core.somaxconn = 20480

net.core.optmem_max = 81920

# TCP conn

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_syn_retries = 3

net.ipv4.tcp_retries1 = 3

net.ipv4.tcp_retries2 = 15

# tcp conn reuse

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_tw_recycle = 0

net.ipv4.tcp_fin_timeout = 30

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_max_tw_buckets = 20000

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syncookies = 1

# keepalive conn

net.ipv4.tcp_keepalive_time = 300

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.ip_local_port_range = 10001 65000

# swap

vm.overcommit_memory = 0

vm.swappiness = 10

#net.ipv4.conf.eth1.rp_filter = 0

#net.ipv4.conf.lo.arp_ignore = 1

#net.ipv4.conf.lo.arp_announce = 2

#net.ipv4.conf.all.arp_ignore = 1

#net.ipv4.conf.all.arp_announce = 2

# Reload the kernel parameters

sysctl -p

- Run on both k8s-ha01 and k8s-ha02

# Edit the configuration file

vim /etc/haproxy/haproxy.cfg

...

listen k8s-6443
    bind 192.168.15.188:6443
    mode tcp
    # balance leastconn
    # 6443 is the default kube-apiserver port
    server 192.168.15.101 192.168.15.101:6443 check inter 2s fall 3 rise 5
    server 192.168.15.102 192.168.15.102:6443 check inter 2s fall 3 rise 5

# Start haproxy

systemctl restart haproxy && systemctl enable haproxy
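A quick reachability check of the VIP and of each backend can help separate load balancer problems from apiserver problems later on. This sketch uses bash's built-in /dev/tcp redirection, so it needs no extra packages. `port_open` is a helper name invented for the example, and the backends will only answer once the masters are installed.

```shell
#!/bin/bash
# Sketch: probe TCP ports with bash's /dev/tcp pseudo-device.

# port_open HOST PORT: exit status 0 if a TCP connection succeeds within 1 second.
port_open() {
  timeout 1 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# VIP first, then the two apiserver backends from haproxy.cfg
for target in 192.168.15.188 192.168.15.101 192.168.15.102; do
  if port_open "$target" 6443; then
    echo "$target:6443 reachable"
  else
    echo "$target:6443 NOT reachable"
  fi
done
```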

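Base environment configuration

On k8s-master01: script to distribute the SSH public key in bulk

# Generate the key pair

ssh-keygen

# Write the key distribution script

vim scp_key.sh

#!/bin/bash

# Install the tool that supplies the SSH password non-interactively
yum -y install sshpass

# Target hosts; the Harbor and HA hosts do not need the key
IP="
192.168.15.101
192.168.15.102
192.168.15.104
192.168.15.105
192.168.15.106
192.168.15.109
192.168.15.110
"

for node in $IP; do
    # Options go before the host name; the exit status of ssh-copy-id decides the message
    if sshpass -p 123 ssh-copy-id -o StrictHostKeyChecking=no "$node" &> /dev/null; then
        echo "$node: key copied"
    else
        echo "$node: key copy failed"
    fi
done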
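Because the script hides the output of ssh-copy-id, it can be worth confirming afterwards that key-based login really works before ansible depends on it. A minimal sketch follows; `can_login` and `verify_nodes` are names made up for this example. With BatchMode=yes, ssh fails instead of falling back to a password prompt.

```shell
#!/bin/bash
# Sketch: confirm passwordless SSH works for each target host.

# can_login HOST: succeeds only if key-based login works (no password prompt).
can_login() {
  ssh -o BatchMode=yes -o ConnectTimeout=3 -o StrictHostKeyChecking=no "$1" true 2>/dev/null
}

# verify_nodes HOST...: prints one line per host, returns non-zero if any host failed.
verify_nodes() {
  local failed=0 node
  for node in "$@"; do
    if can_login "$node"; then
      echo "$node ok"
    else
      echo "$node FAILED"
      failed=1
    fi
  done
  return "$failed"
}

# Usage, with the same list as scp_key.sh:
#   verify_nodes 192.168.15.101 192.168.15.102 192.168.15.104 192.168.15.105 \
#                192.168.15.106 192.168.15.109 192.168.15.110
```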

Install Docker on the master, etcd and node hosts

# Add the docker-ce yum repository (skip if already done)

wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

# Install Docker

yum install docker-ce -y

# Configure a registry mirror to speed up image pulls

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://hahexyip.mirror.aliyuncs.com"]
}
EOF

# Start Docker and enable it at boot

systemctl enable docker && systemctl start docker

# Check that Docker was installed successfully

docker version

Base environment configuration on k8s-master01

- vim /etc/sysctl.conf

# Controls source route verification

net.ipv4.conf.default.rp_filter = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

# Do not accept source routing

net.ipv4.conf.default.accept_source_route = 0

# Controls the System Request debugging functionality of the kernel

kernel.sysrq = 0

# Controls whether core dumps will append the PID to the core filename.

# Useful for debugging multi-threaded applications.

kernel.core_uses_pid = 1

# Controls the use of TCP syncookies

net.ipv4.tcp_syncookies = 1

# Disable netfilter on bridges.

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

# Controls the default maximum size of a message queue

kernel.msgmnb = 65536

# Controls the maximum size of a message, in bytes

kernel.msgmax = 65536

# Controls the maximum shared segment size, in bytes

kernel.shmmax = 68719476736

# Controls the maximum number of shared memory segments, in pages

kernel.shmall = 4294967296

# TCP kernel parameters

net.ipv4.tcp_mem = 786432 1048576 1572864

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_sack = 1

# socket buffer

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 262144

net.core.somaxconn = 20480

net.core.optmem_max = 81920

# TCP conn

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_syn_retries = 3

net.ipv4.tcp_retries1 = 3

net.ipv4.tcp_retries2 = 15

# tcp conn reuse

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_tw_recycle = 0

net.ipv4.tcp_fin_timeout = 30

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_max_tw_buckets = 20000

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syncookies = 1

# keepalive conn

net.ipv4.tcp_keepalive_time = 300

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.ip_local_port_range = 10001 65000

# swap

vm.overcommit_memory = 0

vm.swappiness = 10

#net.ipv4.conf.eth1.rp_filter = 0

#net.ipv4.conf.lo.arp_ignore = 1

#net.ipv4.conf.lo.arp_announce = 2

#net.ipv4.conf.all.arp_ignore = 1

#net.ipv4.conf.all.arp_announce = 2

- vim /etc/security/limits.conf

root soft core unlimited

root hard core unlimited

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

root soft memlock 32000

root hard memlock 32000

root soft msgqueue 8192000

root hard msgqueue 8192000

On k8s-master01: script to distribute the configuration files in bulk

vim scp_file.sh

#!/bin/bash

# Target hosts; the Harbor and HA hosts are not included
IP="
192.168.15.101
192.168.15.102
192.168.15.104
192.168.15.105
192.168.15.106
192.168.15.109
192.168.15.110
"

for node in $IP; do
    ssh $node "mkdir -p /etc/docker/certs.d/harbor.nana.com"
    echo "Harbor certificate directory created"

    scp /etc/docker/certs.d/harbor.nana.com/harbor-ca.crt $node:/etc/docker/certs.d/harbor.nana.com
    echo "Harbor certificate copied"

    # Append the hosts entry only if it is not there yet, so the script can be re-run
    ssh $node "grep -q harbor.nana.com /etc/hosts || echo 192.168.15.103 harbor.nana.com >> /etc/hosts"
    echo "hosts entry added"

    scp /usr/lib/systemd/system/docker.service $node:/usr/lib/systemd/system/docker.service
    ssh $node "systemctl daemon-reload && systemctl restart docker"
    echo "docker.service updated with the Harbor registry setting"

    scp /etc/sysctl.conf $node:/etc/sysctl.conf
    scp /etc/security/limits.conf $node:/etc/security/limits.conf
    echo "Base environment configuration done"
done

Reboot the master, etcd and node hosts

reboot

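Install the K8S cluster with the Ansible playbooks

Download the project source, the binaries and the offline images

# Download the ezdown helper script; this example uses kubeasz 3.0.0

export release=3.0.0

curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/$release/ezdown

chmod +x ./ezdown

# To download specific component versions, see the script's usage

./ezdown --help

# Download everything with the helper script

./ezdown -D

After the script finishes successfully, all files (kubeasz code, binaries, offline images) are laid out under /etc/kubeasz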

Synchronize the time on all servers

# Synchronize against the Alibaba Cloud NTP server

yum -y install ntpdate

ntpdate ntp1.aliyun.com

# Add a cron job

crontab -e

*/5 * * * * ntpdate ntp1.aliyun.com > /dev/null 2>&1
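Since the cron entry has to be added on every server, a non-interactive variant may be easier to script than `crontab -e`. The sketch below appends the line only when it is not already present. `merge_cron_line` is a helper name invented for this example, and the entry uses the portable `> /dev/null 2>&1` redirection.

```shell
#!/bin/bash
# Sketch: add a cron entry without an editor and without duplicating it.
CRON_LINE='*/5 * * * * ntpdate ntp1.aliyun.com > /dev/null 2>&1'

# merge_cron_line LINE: reads an existing crontab on stdin and prints it,
# appending LINE only if an identical line is not already present.
merge_cron_line() {
  local current
  current="$(cat)"
  if printf '%s\n' "$current" | grep -qxF -- "$1"; then
    printf '%s\n' "$current"
  else
    if [ -n "$current" ]; then
      printf '%s\n' "$current"
    fi
    printf '%s\n' "$1"
  fi
}

# Usage (modifies the current user's crontab):
#   crontab -l 2>/dev/null | merge_cron_line "$CRON_LINE" | crontab -
```

Running the usage line twice leaves a single entry, which makes it safe to call from a distribution loop.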

Edit the hosts inventory file

mkdir -p /etc/ansible

cp -r /etc/kubeasz/* /etc/ansible/

cd /etc/ansible

cp example/hosts.multi-node hosts

# Edit the hosts file

vim hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)
# [required] IPs of the etcd hosts; the count must be odd
[etcd]
192.168.15.104
192.168.15.105
192.168.15.106

# master node(s)
# [required] IPs of the master nodes
[kube_master]
192.168.15.101
192.168.15.102

# work node(s)
# [required] IPs of the worker nodes
[kube_node]
192.168.15.109
192.168.15.110

# [optional] harbor server, a private docker registry
# 'NEW_INSTALL': 'yes' to install a harbor server; 'no' to integrate with existed one
# 'SELF_SIGNED_CERT': 'no' you need put files of certificates named harbor.pem and harbor-key.pem in directory 'down'
[harbor]
#192.168.1.8 HARBOR_DOMAIN="harbor.yourdomain.com" NEW_INSTALL=no SELF_SIGNED_CERT=yes

# [optional] load balancers for accessing k8s from outside the cluster
[ex_lb]
192.168.15.108 LB_ROLE=backup EX_APISERVER_VIP=192.168.15.188 EX_APISERVER_PORT=6443
192.168.15.107 LB_ROLE=master EX_APISERVER_VIP=192.168.15.188 EX_APISERVER_PORT=6443

# [optional] ntp server for the cluster
[chrony]
#192.168.1.1

[all:vars]
# --------- Main Variables ---------------
# Supported container runtimes: docker, containerd
CONTAINER_RUNTIME="docker"

# Supported network plugins: calico, flannel, kube-router, cilium, kube-ovn
CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
PROXY_MODE="ipvs"

# K8S Service CIDR; must not overlap with the node (host) network
SERVICE_CIDR="172.10.0.0/16"

# Cluster CIDR (Pod CIDR); must not overlap with the node (host) network
CLUSTER_CIDR="10.100.0.0/16"

# NodePort range
NODE_PORT_RANGE="30000-60000"

# Cluster DNS domain
CLUSTER_DNS_DOMAIN="nana.local."

# -------- Additional Variables (don't change the default value right now) ---
# Directory for the binaries
bin_dir="/usr/bin"

# Deployment directory (the kubeasz workspace)
base_dir="/etc/ansible"

# Directory for the specific cluster
cluster_dir="{{ base_dir }}/clusters/_cluster_name_"

# Directory for the CA and the other component certificates/keys
ca_dir="/etc/kubernetes/ssl"
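The inventory comment requires an odd number of etcd members. A small check like the one below can catch a miscounted group before any playbook runs. It is only a sketch: `group_size` is a helper name chosen for this example, and the parsing assumes the simple one-host-per-line layout shown above.

```shell
#!/bin/bash
# Sketch: count the hosts in an inventory group and check that etcd is odd.

# group_size FILE GROUP: prints the number of non-empty, non-comment lines
# between the "[GROUP]" header and the next "[...]" header.
group_size() {
  awk -v g="[$2]" '
    /^\[/                        { in_group = ($0 == g); next }
    in_group && NF && $0 !~ /^#/ { n++ }
    END                          { print n + 0 }
  ' "$1"
}

INVENTORY="/etc/ansible/hosts"
if [ -f "$INVENTORY" ]; then
  n="$(group_size "$INVENTORY" etcd)"
  if [ $((n % 2)) -eq 1 ]; then
    echo "etcd members: $n (odd, ok)"
  else
    echo "etcd members: $n (must be odd)"
  fi
fi
```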

Step 01.prepare.yml: create the certificates and prepare the environment

Edit the playbook

cd /etc/ansible/playbooks

vim 01.prepare.yml

# [optional] to synchronize system time of nodes with 'chrony'
- hosts:
  - kube_master
  - kube_node
  - etcd
  # The load balancers and time synchronization were set up by hand above, so
  # ex_lb and chrony are commented out; otherwise ansible reports errors during the run
  # - ex_lb
  # - chrony
  # roles:
  # - { role: os-harden, when: "OS_HARDEN|bool" }
  # - { role: chrony, when: "groups['chrony']|length > 0" }

# to create CA, kubeconfig, kube-proxy.kubeconfig etc.
- hosts: localhost
  roles:
  - deploy

# prepare tasks for all nodes
- hosts:
  - kube_master
  - kube_node
  - etcd
  roles:
  - prepare

Configure the CA certificate

# Change the locality used in the certificate signing requests

cd /etc/ansible/roles/deploy/templates

grep HangZhou *

sed -i "s/HangZhou/ShangHai/g" *

ll /etc/ansible/roles/deploy/templates

# total 32

# -rw-r--r-- 1 root root 225 Aug 11 16:43 admin-csr.json.j2

# -rw-r--r-- 1 root root 515 Aug 11 16:43 ca-config.json.j2

# -rw-r--r-- 1 root root 251 Aug 11 16:43 ca-csr.json.j2

# -rw-r--r-- 1 root root 344 Aug 11 16:43 crb.yaml.j2

# -rw-r--r-- 1 root root 266 Aug 11 16:43 kube-controller-manager-csr.json.j2

# -rw-r--r-- 1 root root 226 Aug 11 16:43 kube-proxy-csr.json.j2

# -rw-r--r-- 1 root root 248 Aug 11 16:43 kube-scheduler-csr.json.j2

# -rw-r--r-- 1 root root 224 Aug 11 16:43 user-csr.json.j2

Configure variables

vim /etc/ansible/roles/deploy/vars/main.yml

# apiserver: defaults to the first master node
KUBE_APISERVER: "https://{{ groups['kube_master'][0] }}:6443"

ADD_KCFG: false

# Validity period of the CA and the issued certificates
CUSTOM_EXPIRY: "876000h"
CA_EXPIRY: "876000h"
CERT_EXPIRY: "876000h"

# kubeconfig parameters; the permissions follow "USER_NAME":
# "admin" creates a kubeconfig with cluster administrator (full) permissions
# "read" creates a kubeconfig with read-only permissions
CLUSTER_NAME: "cluster1"
USER_NAME: "admin"
CONTEXT_NAME: "context-{{ CLUSTER_NAME }}-{{ USER_NAME }}"
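The expiry values are given in hours, which makes them hard to read at a glance. As a quick worked conversion (`expiry_to_years` is a helper name made up for this example, and leap days are ignored):

```shell
#!/bin/bash
# Sketch: convert an hour-based expiry such as "876000h" into whole years.
expiry_to_years() {
  local hours="${1%h}"            # strip the trailing "h"
  echo $(( hours / 24 / 365 ))    # hours -> days -> years (integer division)
}

expiry_to_years "876000h"   # prints 100
```

So 876000h corresponds to roughly 100 years, that is, certificates that effectively never expire during the life of the cluster.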

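Adjust the packages to install

vim /etc/ansible/roles/prepare/tasks/centos.yml

...

- name: 安装基础软件包    # "install base packages"
  yum:
    name:
      - bash-completion   # bash command completion; takes effect after logging in again
      - conntrack-tools   # needed for ipvs mode
      - ipset             # needed for ipvs mode
      - ipvsadm           # needed for ipvs mode
      - libseccomp        # needed to install containerd
      - nfs-utils         # needed to mount nfs shares (for nfs-backed PVs)
      - psmisc            # provides killall, used by the keepalived check script
      - rsync             # file sync tool, used to distribute certificates and other config files
      - socat             # used for port forwarding
    state: present
  # when: 'INSTALL_SOURCE != "offline"'
  # The condition above selects online versus offline installation. INSTALL_SOURCE
  # is not defined at this point, so for an online install keep it commented out as shown

# Install the base packages offline
#- import_tasks: offline.yml
#  when: 'INSTALL_SOURCE == "offline"'

...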

Run the playbook

cd /etc/ansible/playbooks

ansible-playbook 01.prepare.yml

Step 02.etcd.yml: install the etcd cluster

ansible-playbook 02.etcd.yml

Step 03.runtime.yml: install the Docker service

ansible-playbook 03.runtime.yml

Step 04.kube-master.yml: install the kube-master nodes

Configure variables

vim /etc/ansible/roles/kube-master/vars/main.yml

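# etcd endpoint list, generated automatically from the members of the etcd group
TMP_ENDPOINTS: "{% for h in groups['etcd'] %}https://{{ h }}:2379,{% endfor %}"
ETCD_ENDPOINTS: "{{ TMP_ENDPOINTS.rstrip(',') }}"

# Extra hosts for the k8s master certificates; several IPs and domain names
# can be listed (for example a public IP and domain)
MASTER_CERT_HOSTS:   # add the master VIP address here, and/or domain names
  - "192.168.15.210"
  # - "lovebabay.nana.com"

# Pod subnet mask length per node (determines how many pod IPs each node can allocate)
# If flannel uses the --kube-subnet-mgr flag, it reads this setting to assign a pod subnet to each node
# https://github.com/coreos/flannel/issues/847
NODE_CIDR_LEN: 24

# Whether to enable the node-local DNS cache
ENABLE_LOCAL_DNS_CACHE: true

# Address of the local DNS cache, 169.254.20.10 by default
LOCAL_DNS_CACHE: "169.254.20.10"

# Resources reserved for the kube components (kubelet, kube-proxy, dockerd, ...)
KUBE_RESERVED_ENABLED: "no"

# Upstream k8s advises against enabling system-reserved casually, unless long-term
# monitoring has shown you how many resources the system uses.
# The reservation assumes a 4c/8g VM with a minimal set of system services; it can be
# increased on powerful physical machines.
# The apiserver and other components briefly use more resources during installation,
# so reserve at least 1g of memory
SYS_RESERVED_ENABLED: "no"

# Maximum number of pods per node
MAX_PODS: 110

# [containerd] sandbox (pause) image
SANDBOX_IMAGE: "easzlab/pause-amd64:3.2"

# kubelet root directory
KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Install coredns automatically
dns_install: "yes"
corednsVer: "__coredns__"
dnsNodeCacheVer: "__dns_node_cache__"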

ansible-playbook 04.kube-master.yml
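NODE_CIDR_LEN has to be read together with CLUSTER_CIDR (10.100.0.0/16 in the inventory) and MAX_PODS. The arithmetic below is a sketch of the plain per-node subnet calculation only. Whether the chosen network plugin allocates addresses exactly this way is not covered by the original text, and the function names are made up for the example.

```shell
#!/bin/bash
# Sketch: how many per-node subnets fit in the cluster CIDR, and how many
# addresses each node subnet contains.

# node_subnets CLUSTER_PREFIX NODE_PREFIX: number of node subnets in the cluster CIDR
node_subnets() {
  echo $(( 1 << ($2 - $1) ))
}

# addrs_per_node NODE_PREFIX: number of IPv4 addresses in one node subnet
addrs_per_node() {
  echo $(( 1 << (32 - $1) ))
}

node_subnets 16 24     # prints 256: a /16 holds 256 subnets of size /24
addrs_per_node 24      # prints 256: more than MAX_PODS (110), so the limit fits
```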

Step 05.kube-node.yml: install the kube-node nodes

vim /etc/ansible/roles/kube-node/vars/main.yml

# APISERVER address
KUBE_APISERVER: "{%- if inventory_hostname in groups['kube_master'] -%} \
                     https://{{ inventory_hostname }}:6443 \
                 {%- else -%} \
                     {%- if groups['kube_master']|length > 1 -%} \
                         https://127.0.0.1:6443 \
                     {%- else -%} \
                         https://{{ groups['kube_master'][0] }}:6443 \
                     {%- endif -%} \
                 {%- endif -%}"

# Offline image of the node-local DNS cache
dnscache_offline: "k8s-dns-node-cache_{{ dnsNodeCacheVer }}.tar"

# When master nodes are added or removed, the nodes have to reconfigure haproxy
MASTER_CHG: "no"

# Install system packages offline or online (offline|online)
INSTALL_SOURCE: "online"

# haproxy balance mode
BALANCE_ALG: "roundrobin"

# Install coredns automatically
dns_install: "yes"
corednsVer: "__coredns__"
ENABLE_LOCAL_DNS_CACHE: true
dnsNodeCacheVer: "__dns_node_cache__"

# Address of the local DNS cache
LOCAL_DNS_CACHE: "169.254.20.10"

# Resources reserved for the kube components (kubelet, kube-proxy, dockerd, ...)
KUBE_RESERVED_ENABLED: "no"

# Upstream k8s advises against enabling system-reserved casually, unless long-term
# monitoring has shown you how many resources the system uses.
# The reservation assumes a 4c/8g VM with a minimal set of system services; it can be
# increased on powerful physical machines.
# The apiserver and other components briefly use more resources during installation,
# so reserve at least 1g of memory
SYS_RESERVED_ENABLED: "no"

# Maximum number of pods per node
MAX_PODS: 110

# [containerd] sandbox (pause) image
SANDBOX_IMAGE: "easzlab/pause-amd64:3.2"

# kubelet root directory
KUBELET_ROOT_DIR: "/var/lib/kubelet"

ansible-playbook 05.kube-node.yml
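The nested Jinja condition in KUBE_APISERVER is easier to follow when written out as ordinary branches. The sketch below restates it in shell for illustration only; `apiserver_url` and its argument order are invented here and are not part of kubeasz.

```shell
#!/bin/bash
# Sketch: the KUBE_APISERVER selection logic as plain branches.
# apiserver_url HOST MASTER_COUNT FIRST_MASTER IS_MASTER(yes|no)
apiserver_url() {
  local host="$1" master_count="$2" first_master="$3" is_master="$4"
  if [ "$is_master" = "yes" ]; then
    # A master talks to its own apiserver
    echo "https://$host:6443"
  elif [ "$master_count" -gt 1 ]; then
    # With several masters, a node goes through its local haproxy
    echo "https://127.0.0.1:6443"
  else
    # With a single master, a node talks to it directly
    echo "https://$first_master:6443"
  fi
}

apiserver_url 192.168.15.101 2 192.168.15.101 yes   # prints https://192.168.15.101:6443
apiserver_url 192.168.15.109 2 192.168.15.101 no    # prints https://127.0.0.1:6443
```

With the two masters in this guide, the worker nodes therefore end up on the 127.0.0.1 branch, which is why MASTER_CHG and BALANCE_ALG refer to haproxy on the nodes.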

Step 06.network.yml: install the network plugin

vim /etc/ansible/roles/calico/vars/main.yml

# etcd endpoint list, generated automatically from the members of the etcd group
TMP_ENDPOINTS: "{% for h in groups['etcd'] %}https://{{ h }}:2379,{% endfor %}"
ETCD_ENDPOINTS: "{{ TMP_ENDPOINTS.rstrip(',') }}"

# [calico] supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]
calicover: "v3.15.3"
calico_ver: "{{ calicover }}"

# [calico] calico major version
calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# [calico] offline image tarball
calico_offline: "calico_{{ calico_ver }}.tar"

# [calico] setting CALICO_IPV4POOL_IPIP="off" can improve network performance;
# see docs/setup/calico.md for the constraints
CALICO_IPV4POOL_IPIP: "Always"

# [calico] host IP used by calico-node; BGP neighbours are established over this address.
# It can be set by hand or discovered automatically
IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico networking backend: bird, vxlan, none
CALICO_NETWORKING_BACKEND: "bird"

ansible-playbook 06.network.yml
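The TMP_ENDPOINTS / ETCD_ENDPOINTS pair builds a comma-separated URL list and then strips the trailing comma. For the three etcd hosts in this guide, the same result can be reproduced in shell, as a sketch for checking what the template should render to (`etcd_endpoints` is a name chosen for this example):

```shell
#!/bin/bash
# Sketch: reproduce the ETCD_ENDPOINTS rendering for a list of etcd hosts.
etcd_endpoints() {
  local tmp="" h
  for h in "$@"; do
    tmp="${tmp}https://${h}:2379,"   # same as the Jinja for-loop body
  done
  echo "${tmp%,}"                    # same as rstrip(',')
}

etcd_endpoints 192.168.15.104 192.168.15.105 192.168.15.106
# prints https://192.168.15.104:2379,https://192.168.15.105:2379,https://192.168.15.106:2379
```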
