KUBERNETES02: Cluster installation logic, prerequisites, a one-master two-worker cluster, and deploying the dashboard

Posted by 所得皆惊喜


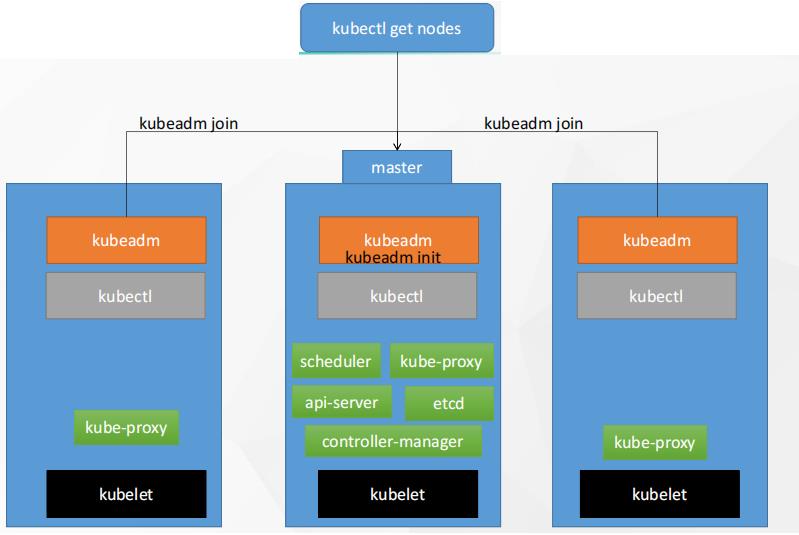

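①. Cluster installation logic

- ①. Every machine needs kubelet (the "factory manager" of its node), kubeadm (the tool that helps the operator bootstrap and manage the cluster), and kubectl (the command-line client used to send commands to k8s; it is normally installed at "headquarters", that is, on the master)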

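②. Prerequisites for installing the cluster

- ①. A compatible Linux host. The Kubernetes project provides generic instructions for Debian-based and Red Hat-based Linux distributions, as well as for distributions without a package manager. Each machine needs 2 GB or more of RAM (anything less leaves too little memory for your applications) and 2 or more CPU cores.

- ②. Full network connectivity between all machines in the cluster (a public or a private network both work). Set firewall rules to allow the traffic.

- ③. No two nodes may share a hostname, MAC address, or product_uuid. See the official kubeadm installation guide for details. (Set a different hostname on each machine.)

- ④. Certain ports must be open on the machines. See the official kubeadm installation guide for details. (The machines must trust each other on the internal network.)

- ⑤. Disable swap. kubelet only works properly with swap disabled. (Disable it permanently.)

- ⑥. Turn off the firewall (this is required, otherwise worker nodes may fail to join the master). On cloud servers, configure the security group rules to open the ports.

- ⑦. Docker must be installed first on every machine above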
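The prerequisites above can be checked with a short script before anything is installed. The following is a minimal sketch and not part of the original procedure: the function names (`meets_minimum`, `count_active_swap`, `preflight`) are hypothetical, and the 1700000 kB memory threshold is an assumption, chosen because `MemTotal` on a 2 GB machine reads somewhat below 2 GiB.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers for the prerequisites listed above.

# meets_minimum LABEL ACTUAL REQUIRED
# Prints a status line; returns 0 only when ACTUAL >= REQUIRED.
meets_minimum() {
  local label=$1 actual=$2 required=$3
  if [ "$actual" -ge "$required" ]; then
    echo "OK   $label: $actual (need >= $required)"
  else
    echo "FAIL $label: $actual (need >= $required)"
    return 1
  fi
}

# count_active_swap FILE
# Counts the swap devices listed in a /proc/swaps-style file (line 1 is a header).
count_active_swap() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$1"
}

# preflight
# Runs every check on the local machine; returns non-zero if any check fails.
preflight() {
  local rc=0 mem_kb cpus
  mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
  cpus=$(nproc)
  meets_minimum "memory (kB)" "$mem_kb" 1700000 || rc=1   # assumed threshold for "2 GB"
  meets_minimum "CPU cores" "$cpus" 2 || rc=1
  if [ "$(count_active_swap /proc/swaps)" -eq 0 ]; then
    echo "OK   swap is disabled"
  else
    echo "FAIL swap is still enabled"
    rc=1
  fi
  return "$rc"
}
```

Calling `preflight` on each machine reports the memory, CPU, and swap checks. Uniqueness of product_uuid has to be compared across machines by hand, for example with `sudo cat /sys/class/dmi/id/product_uuid`.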

(1). Remove any previously installed Docker packages

sudo yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

(2). Configure the yum repository

sudo yum install -y yum-utils
sudo yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(3). Install Docker

sudo yum install -y docker-ce docker-ce-cli containerd.io
# When installing for k8s, use these pinned versions instead
yum install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6

(4). Start Docker and enable it at boot

systemctl enable docker --now

(5). Configure a registry mirror and the systemd cgroup driver

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
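After the restart it is worth confirming that Docker actually picked up the `native.cgroupdriver=systemd` setting from daemon.json. The sketch below is one way to do that; the function names are hypothetical, and it assumes `docker info` prints a line of the form `Cgroup Driver: <name>`.

```shell
#!/usr/bin/env bash
# cgroup_driver_from_info
# Reads `docker info` text on stdin and prints the cgroup driver name.
cgroup_driver_from_info() {
  awk -F': ' '/Cgroup Driver:/ { print $2 }'
}

# check_cgroup_driver EXPECTED
# Succeeds only if the `docker info` text on stdin reports the EXPECTED driver.
check_cgroup_driver() {
  local expected=$1 actual
  actual=$(cgroup_driver_from_info)
  if [ "$actual" = "$expected" ]; then
    echo "OK   cgroup driver is $actual"
  else
    echo "FAIL cgroup driver is '${actual:-unknown}', expected $expected"
    return 1
  fi
}
```

On a configured machine this would be used as `docker info 2>/dev/null | check_cgroup_driver systemd`.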

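# (1). Base environment (run the following on every machine)
# Note: once kubelet is installed and enabled in step (2), it restarts every few seconds, because it sits in a loop waiting for instructions from kubeadm
# Set a distinct hostname on each machine
hostnamectl set-hostname xxxx
# Set SELinux to permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
# Turn swap off now, and comment it out of /etc/fstab so it stays off after a reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
# Let iptables see bridged traffic
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sudo sysctl --system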
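The `sed -ri 's/.*swap.*/#&/' /etc/fstab` command above comments out every line that contains "swap", including lines that are already comments, so running it a second time stacks another `#` in front. The following sketch shows an idempotent variant that can be tried on a copy of the file first. The function name `comment_out_swap` is hypothetical, and GNU sed (as shipped with CentOS) is assumed.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE
# Comments out active swap entries in an fstab-style FILE, in place.
# Lines that are already comments are skipped, so a rerun changes nothing.
comment_out_swap() {
  sed -ri '/^[[:space:]]*#/! s/^.*[[:space:]]swap[[:space:]].*$/#&/' "$1"
}

# Suggested dry run on a copy before touching the real file:
#   cp /etc/fstab /tmp/fstab.test
#   comment_out_swap /tmp/fstab.test
#   diff /etc/fstab /tmp/fstab.test
```

The pattern requires whitespace on both sides of `swap`, which matches the mount-point and type columns of a swap entry rather than any line that merely mentions the word.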

# (2). Install kubelet, kubeadm, and kubectl
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
   http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF
sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes
sudo systemctl enable --now kubelet
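Because the three packages are pinned to 1.20.9, a quick consistency check after installation can catch a machine where one component ended up on a different version. This is a minimal sketch with hypothetical helper names (`extract_version`, `all_match`); it only compares version strings and does not touch the cluster.

```shell
#!/usr/bin/env bash
# extract_version
# Prints the first vMAJOR.MINOR.PATCH string found on stdin.
extract_version() {
  grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

# all_match EXPECTED VERSION...
# Succeeds only if every VERSION argument equals EXPECTED.
all_match() {
  local expected=$1 v
  shift
  for v in "$@"; do
    if [ "$v" != "$expected" ]; then
      echo "FAIL expected $expected, found '$v'"
      return 1
    fi
  done
  echo "OK   all components are at $expected"
}

# Suggested use on a node (requires the packages installed above):
#   all_match v1.20.9 \
#     "$(kubeadm version -o short | extract_version)" \
#     "$(kubelet --version | extract_version)" \
#     "$(kubectl version --client --short | extract_version)"
```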

③. Bootstrapping the cluster with kubeadm

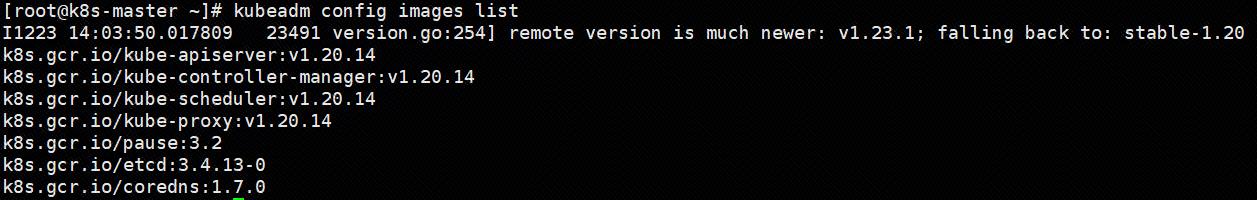

- ①. Pull the images each machine needs (run the following on all three machines)

# Pull the core images. To see which images are required, run:
# kubeadm config images list
sudo tee ./images.sh <<-'EOF'
#!/bin/bash
images=(
kube-apiserver:v1.20.9
kube-proxy:v1.20.9
kube-controller-manager:v1.20.9
kube-scheduler:v1.20.9
coredns:1.7.0
etcd:3.4.13-0
pause:3.2
)
for imageName in ${images[@]} ; do
  docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName
done
EOF

chmod +x ./images.sh && ./images.sh
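The loop in images.sh depends on the array expansion `${images[@]}`, which yields every element; written without braces, `$images[@]` would expand only the first element followed by a literal `[@]`. The sketch below prints the full image references without pulling anything, which is a cheap way to check the list first. The helper name `image_refs` is hypothetical, and the array is shortened to two entries for illustration.

```shell
#!/usr/bin/env bash
# image_refs REPO IMAGE...
# Prints one fully qualified image reference per line, without pulling anything.
image_refs() {
  local repo=$1 name
  shift
  for name in "$@"; do
    echo "$repo/$name"
  done
}

# Shortened list, for illustration only.
images=(
  kube-apiserver:v1.20.9
  pause:3.2
)

# "${images[@]}" expands to every element as a separate word.
image_refs registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images "${images[@]}"
```

Piping the output to `xargs -n 1 docker pull` would then perform the actual pulls.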

- ②. Initialize the master node

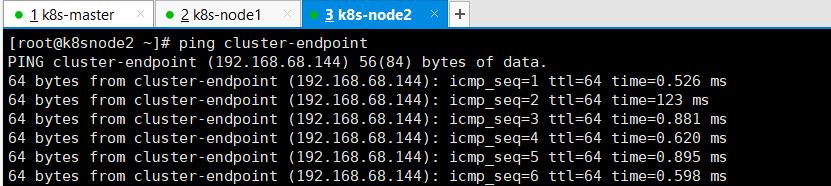

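Choose reachable network ranges: the pod subnet, the service (load-balancing) subnet, and the subnet of the machines' own IPs must not overlap.

Note: replace the IP address below (192.168.68.144) with your own.

# Add the master hostname mapping on every machine; change the values to your own
echo "192.168.68.144 k8s-master" >> /etc/hosts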
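Appending with `>>` adds a duplicate line every time the command is rerun. A minimal sketch of an idempotent alternative follows; the function name `ensure_hosts_entry` is hypothetical, and the optional third argument exists only so the function can be tried on a scratch file instead of the real /etc/hosts.

```shell
#!/usr/bin/env bash
# ensure_hosts_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME is already mapped
# on a non-comment line.
ensure_hosts_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  if awk -v n="$name" '
       /^[[:space:]]*#/ { next }
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file"; then
    echo "$name is already present in $file"
  else
    echo "$ip $name" >> "$file"
    echo "added $ip $name to $file"
  fi
}
```

Run as root on each machine, for example `ensure_hosts_entry 192.168.68.144 k8s-master`, with the IP changed to your own.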

# Initialize the master node
kubeadm init \
  --apiserver-advertise-address=192.168.68.144 \
  --control-plane-endpoint=k8s-master \
  --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
  --kubernetes-version v1.20.9 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=11.168.0.0/16
# None of these network ranges may overlap
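The requirement that the ranges do not overlap can be checked mechanically before running `kubeadm init`. The following is a sketch in plain bash arithmetic, not something kubeadm provides: the function names are hypothetical, only IPv4 is handled, and the host subnet `192.168.68.0/24` used in the usage note is an assumption, since the article gives the host IP but not its netmask.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D
# Prints the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range A.B.C.D/BITS
# Prints "START END": the first and last address of the range, as integers.
cidr_range() {
  local ip=${1%/*} bits=${1#*/} base mask
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# cidrs_overlap CIDR1 CIDR2
# Succeeds (returns 0) when the two ranges share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}

# report_pair CIDR1 CIDR2
# Prints one line saying whether the pair overlaps.
report_pair() {
  if cidrs_overlap "$1" "$2"; then
    echo "OVERLAP $1 $2"
  else
    echo "ok      $1 $2"
  fi
}
```

For the values in this article, calling `report_pair 10.96.0.0/16 11.168.0.0/16`, `report_pair 10.96.0.0/16 192.168.68.0/24`, and `report_pair 11.168.0.0/16 192.168.68.0/24` should each report `ok`. Two ranges overlap exactly when each one starts no later than the other ends, which is the comparison `cidrs_overlap` performs.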

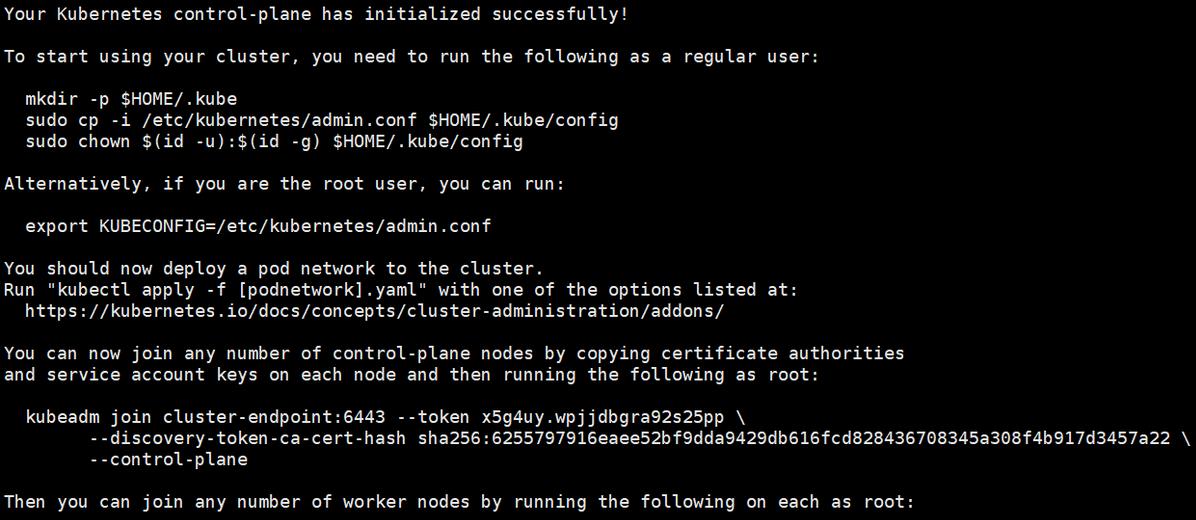

Your Kubernetes control-plane has initialized successfully!

###### Continue as prompted ######
## Step 1 after init: copy the config file
To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

## Or export the environment variable
Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

### Deploy a pod network
You should now deploy a pod network to the cluster.
############## Calico is installed for this in the steps that follow ##############
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

kubeadm join cluster-endpoint:6443 --token hums8f.vyx71prsg74ofce7 \
    --discovery-token-ca-cert-hash sha256:a394d059dd51d68bb007a532a037d0a477131480ae95f75840c461e85e2c6ae3 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token hums8f.vyx71prsg74ofce7 \
    --discovery-token-ca-cert-hash sha256:a394d059dd51d68bb007a532a037d0a477131480ae95f75840c461e85e2c6ae3

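- ③. Continue as prompted: the output above is what the master prints after a successful init

- ④. Set up .kube/config (copy and run the commands from the output above)

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

- ⑤. Install the Calico network plugin

curl https://docs.projectcalico.org/manifests/calico.yaml -O
kubectl apply -f calico.yaml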

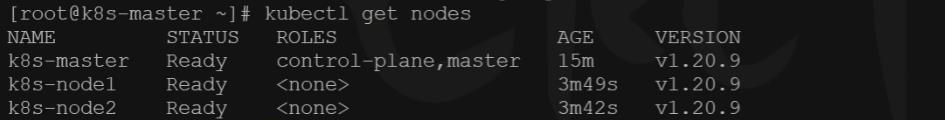

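- ⑥. Join the worker nodes (on each worker, run the join command printed by your own kubeadm init; the endpoint, token, and hash below come from the author's run)

kubeadm join cluster-endpoint:6443 --token x5g4uy.wpjjdbgra92s25pp \
    --discovery-token-ca-cert-hash sha256:6255797916eaee52bf9dda9429db616fcd828436708345a308f4b917d3457a22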

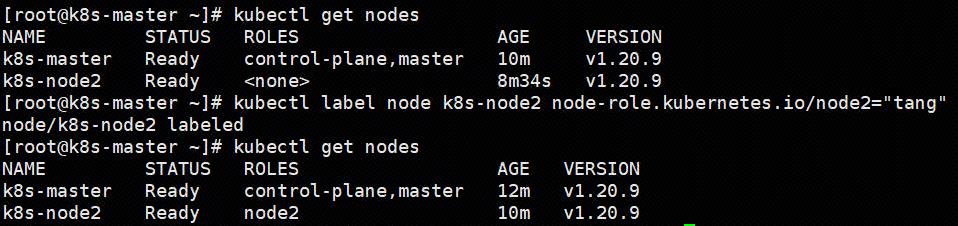
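Once the workers have joined, `kubectl get nodes` on the master lists them. Nodes report `NotReady` until the pod network is running on them, so it helps to filter for the ones that still need attention. The sketch below is a small stdin filter; the name `not_ready_nodes` is hypothetical, and it assumes the usual column layout in which NAME is the first column and STATUS the second.

```shell
#!/usr/bin/env bash
# not_ready_nodes
# Reads `kubectl get nodes --no-headers` output on stdin and prints the name
# of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NF >= 2 && $2 != "Ready" { print $1 }'
}

# Suggested use on the master:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports `Ready`.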

- ⑦. Label the nodes

# Add a label
kubectl label node k8s-node2 node-role.kubernetes.io/node2="tang"
# Remove a label (append "-" to the label key)
kubectl label node k8s-02 node-role.kubernetes.io/worker-

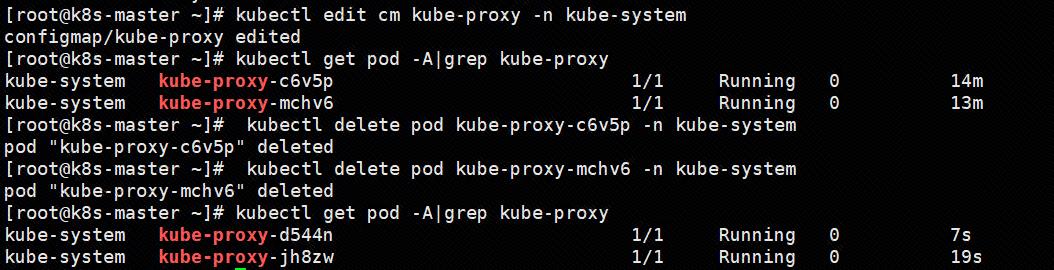

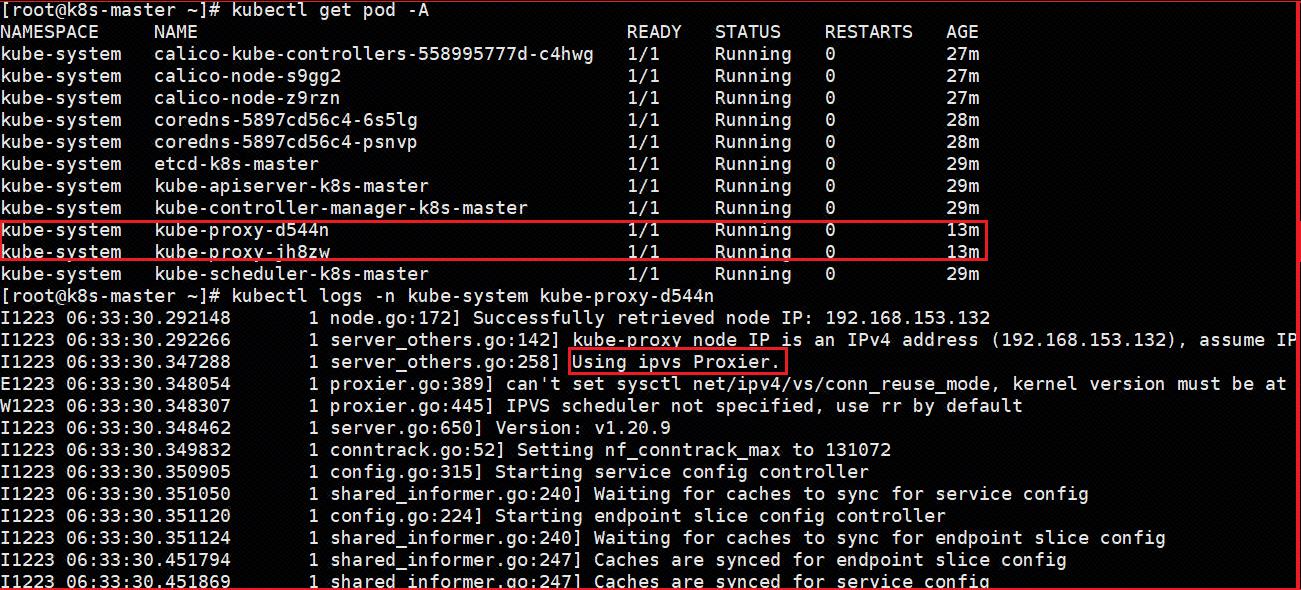

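- ⑧. Switch kube-proxy to ipvs mode

(kube-proxy is what makes Services reachable across the whole cluster. It defaults to iptables mode, which performs worse, because kube-proxy has to keep the iptables rules in sync on every node.)

# 1. Check which mode kube-proxy is currently using (substitute one of your own kube-proxy pod names)
kubectl logs -n kube-system kube-proxy-28xv4
# 2. Edit the default kube-proxy configuration
kubectl edit cm kube-proxy -n kube-system
# Change the setting to mode: "ipvs"
# After the change, restart kube-proxy
# List all kube-proxy pods
kubectl get pod -n kube-system | grep kube-proxy
# Delete the old pods (replace these names with the ones you just listed); they are recreated with the new configuration
kubectl delete pod kube-proxy-dw5sf kube-proxy-hsrwp kube-proxy-vqv7n -n kube-system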
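The interactive `kubectl edit` step can also be scripted as a text filter over the ConfigMap. The following is a sketch under one assumption: that the kube-proxy ConfigMap created by kubeadm contains a line reading `mode: ""`, which is what the filter rewrites. The function name `set_proxy_mode_ipvs` is hypothetical.

```shell
#!/usr/bin/env bash
# set_proxy_mode_ipvs
# stdin-to-stdout filter: rewrites an empty `mode: ""` line to `mode: "ipvs"`.
# Lines with any other mode value pass through unchanged.
set_proxy_mode_ipvs() {
  sed -E 's/^([[:space:]]*mode:)[[:space:]]*""[[:space:]]*$/\1 "ipvs"/'
}

# Suggested use on the master:
#   kubectl get cm kube-proxy -n kube-system -o yaml \
#     | set_proxy_mode_ipvs \
#     | kubectl apply -f -
```

The kube-proxy pods still have to be deleted afterwards so that they restart with the new setting, and the first `kubectl logs` command can then be rerun to confirm which mode is active.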

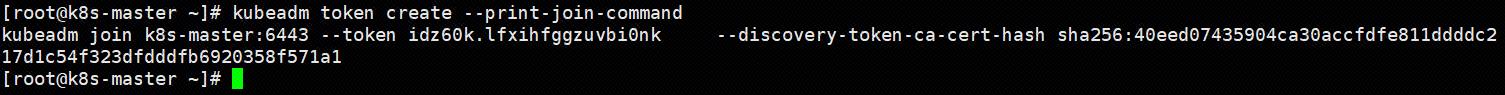

④. Token expiration

- ①. Use kubeadm token create --print-join-command to create a new token and print the matching join command

[root@master ~]# kubeadm token generate   # generate a token
7r3l16.5yzfksso5ty2zzie   # this result is used in the next command
[root@master ~]# kubeadm token create 7r3l16.5yzfksso5ty2zzie --print-join-command --ttl=0   # print the join command for this token (--ttl=0 means the token never expires)
W0604 10:35:00.523781 14568 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0604 10:35:00.523827 14568 validation.go:28] Cannot validate kubelet config - no validator is available
kubeadm join 192.168.254.100:6443 --token 7r3l16.5yzfksso5ty2zzie --discovery-token-ca-cert-hash sha256:56281a8be264fa334bb98cac5206aa190527a03180c9f397c253ece41d997e8a
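When a join command is copied between terminals it is easy to lose part of the token. Bootstrap tokens have a fixed shape, six characters, a dot, then sixteen characters, all lowercase letters or digits, so a simple check catches a truncated paste. This is a minimal sketch with hypothetical helper names.

```shell
#!/usr/bin/env bash
# is_valid_token TOKEN
# Succeeds if TOKEN has the bootstrap-token shape "<6 chars>.<16 chars>",
# using lowercase letters and digits only.
is_valid_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# join_token
# Reads a `kubeadm join ...` command on stdin and prints its --token value.
join_token() {
  sed -nE 's/.*--token[ =]([^ ]+).*/\1/p'
}

# Suggested use on the master:
#   kubeadm token create --print-join-command | join_token
```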

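⑤. Deploy the dashboard

- ①. Apply the official manifest

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

- ②. If the yaml at the https URL cannot be downloaded, use the following instead: create dashboard.yaml, paste in the content below, and run kubectl apply -f dashboard.yaml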

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
