Docker & Kubernetes ❀ Installing and Deploying a Kubernetes Cluster, with Fixes for Common Errors

Posted by 无糖可乐没有灵魂


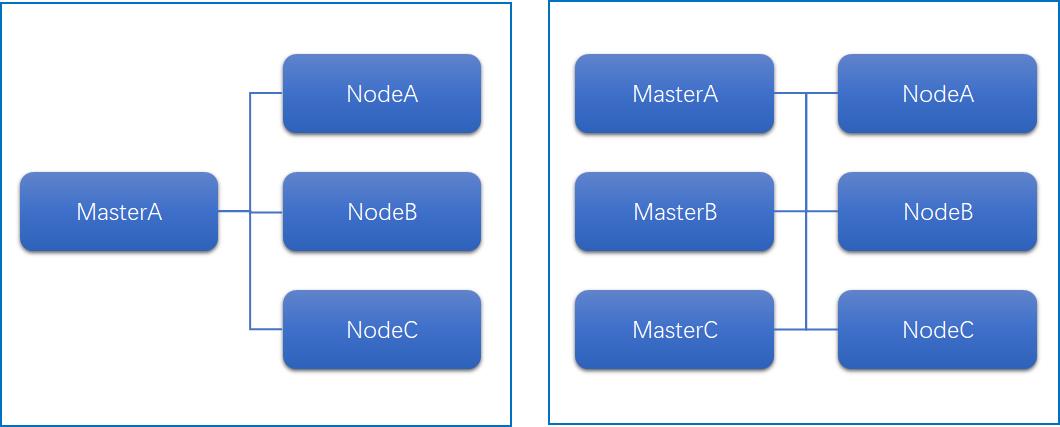

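1. Cluster types

Kubernetes clusters fall broadly into two types:

- One master, multiple nodes: a single Master node and several Node nodes. Simple to set up, but the Master is a single point of failure; suitable for test environments.

- Multiple masters, multiple nodes: several Master nodes and several Node nodes. More work to set up, but far more resilient; suitable for production environments.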

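2. Installation methods

Kubernetes can be deployed in several ways. The mainstream options today are:

- minikube: a tool for quickly setting up a single-node Kubernetes cluster;

- kubeadm: a tool for quickly setting up a Kubernetes cluster;

- Binary packages: download the binary package of each component from the official site and install them one by one. This approach is the most helpful for understanding the Kubernetes components.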

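3. Environment setup

The table below lists the hosts used in this walkthrough. IP addresses, operating system, and sizing depend on your needs and are not limited to these values.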

| Role | IP address | Operating system | Sizing (CPU / memory / disk) |
|---|---|---|---|
| Master | 192.168.88.130 | CentOS 7.6 (1810) | 2 cores / 2 GB / 50 GB |
| Node1 | 192.168.88.131 | CentOS 7.6 (1810) | 2 cores / 2 GB / 50 GB |
| Node2 | 192.168.88.132 | CentOS 7.6 (1810) | 2 cores / 2 GB / 50 GB |

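3.1 Host installation

This setup uses three CentOS servers (one master, two nodes). Install docker, kubeadm, kubelet, and kubectl on each of them.

Install the operating system with the following settings:

- Hardware: 2 CPU cores, 2 GB memory, 50 GB disk;

- System language: English

- Software selection: Infrastructure Server

- Partitioning: automatic (you can delete the swap partition here, or disable it later from the command line; either works)

- Network: the three servers must be able to reach each other and download the required RPM packages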

3.2 Environment initialization

Check the operating system version

# Installing a Kubernetes cluster requires CentOS 7.5 or later

[root@master ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

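Configure hostname resolution

To let the cluster nodes reach each other by name later on, configure hostname resolution. In production, a DNS server is recommended instead.

# Hostname resolution entries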

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.88.130 master.kubernetes

192.168.88.131 node1.kubernetes

192.168.88.132 node2.kubernetes

# Copy the finished /etc/hosts to node1 and node2

[root@master ~]# scp /etc/hosts root@192.168.88.131:/etc/hosts

root@192.168.88.131's password:

[root@master ~]# scp /etc/hosts root@192.168.88.132:/etc/hosts

root@192.168.88.132's password:
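Editing the file by hand works, but the same entries can also be appended in a repeatable way. The following is a minimal sketch, assuming bash; `add_host_entry` is a made-up helper name, and the hosts file path is a parameter so the function can be tried on a scratch copy first.

```shell
#!/bin/bash
# Append "IP NAME" to a hosts file unless NAME already appears in it.
# add_host_entry is a hypothetical helper, not part of any package.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -F treats NAME as a fixed string, -w requires a whole-word match
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage on each node (as root):
#   add_host_entry /etc/hosts 192.168.88.130 master.kubernetes
#   add_host_entry /etc/hosts 192.168.88.131 node1.kubernetes
#   add_host_entry /etc/hosts 192.168.88.132 node2.kubernetes
```

Because the function checks before appending, running it twice does not duplicate entries, which makes it safe to reuse when a node is rebuilt.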

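Time synchronization

Kubernetes requires the clocks of all cluster nodes to agree. Use the chronyd service to synchronize time from the network.

# Start the chronyd service

[root@master ~]# systemctl start chronyd

# Enable chronyd at boot

[root@master ~]# systemctl enable chronyd

# Check the time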

[root@master ~]# date

Fri Dec 10 17:14:32 CST 2021

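Disable the iptables and firewalld services

Kubernetes generates a large number of firewall rules at runtime. To keep them from getting mixed up with the system's own rules, turn the system firewall off here. In production you can leave it on, but then the firewall policy must be configured accurately.

# Stop and disable the firewalld service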

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

# Stop and disable the iptables service

[root@localhost ~]# systemctl stop iptables

[root@localhost ~]# systemctl disable iptables

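Disable SELinux

# setenforce 0 switches to permissive mode immediately; the change in the config file takes effect only after a reboot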

[root@master ~]# setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

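Disable the swap partition

The swap partition is virtual memory: once physical memory is exhausted, disk space is used as if it were memory. Running with swap enabled has a very negative effect on system performance, so Kubernetes requires swap to be disabled on every node. If swap cannot be turned off for some reason, this has to be declared with explicit parameters during cluster installation.

# Edit the file and comment out the swap line; a reboot is required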

[root@master ~]# vim /etc/fstab

#/dev/mapper/rhel-swap swap swap defaults 0 0

# Check that swap is disabled

[root@master ~]# free -m

total used free shared buff/cache available

Mem: 1819 602 443 26 773 996

Swap: 0 0 0
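The fstab edit only takes effect after a reboot. As a hedged sketch, swap can also be turned off for the running system with `swapoff -a`, and the fstab line can be commented out with `sed` instead of an editor. The helper name `comment_out_swap` is hypothetical; it takes the file path as an argument so it can be tested on a copy, and it leaves a `.bak` backup next to the file.

```shell
#!/bin/bash
# Comment out every active (not yet commented) fstab line that has
# "swap" as a whitespace-delimited field. Hypothetical helper.
comment_out_swap() {
    local fstab="$1"
    # -i.bak edits in place and keeps the previous version as FILE.bak
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage on each node (as root):
#   swapoff -a                     # turn swap off for the running system
#   comment_out_swap /etc/fstab    # keep it off after the next reboot
```

Lines that are already commented are skipped, so the function can be run more than once without stacking `#` characters.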

Modify the Linux kernel parameters

# Enable bridge filtering and IP forwarding

[root@master ~]# vim /etc/sysctl.d/kubernetes.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

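# Load the bridge netfilter module first; the net.bridge.* keys exist only once it is loaded

[root@master ~]# modprobe br_netfilter

# Reload the configuration (a bare "sysctl -p" reads only /etc/sysctl.conf, so pass the file explicitly)

[root@master ~]# sysctl -p /etc/sysctl.d/kubernetes.conf

# Check that the bridge filtering module is loaded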

[root@master ~]# lsmod |grep br_netfilter

br_netfilter 22256 0

bridge 151336 1 br_netfilter
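`modprobe` loads the module only for the running system, and this walkthrough reboots the hosts later. One way to have the module loaded again at boot is a drop-in file under `/etc/modules-load.d`, which systemd reads during startup. A minimal sketch follows; `persist_module` is a hypothetical helper, and the directory is a parameter so it can be pointed at a scratch directory.

```shell
#!/bin/bash
# Write a modules-load.d drop-in so that systemd loads MODULE at boot.
# persist_module is a hypothetical helper name.
persist_module() {
    local dir="$1" module="$2"
    # One module name per line is the format modules-load.d expects
    printf '%s\n' "$module" > "${dir}/${module}.conf"
}

# Usage on each node (as root):
#   persist_module /etc/modules-load.d br_netfilter
```

After the next reboot, `lsmod | grep br_netfilter` can be used to confirm that the module came back.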

Configure IPVS

# Clean the yum cache and rebuild it locally

[root@master ~]# yum clean all

[root@master ~]# yum makecache

# Install ipset and ipvsadm

[root@localhost ~]# yum install -y ipvsadm ipset

# Confirm that the installation succeeded

[root@master ~]# rpm -qa ipvsadm ipset

ipset-7.1-1.el8.x86_64

ipvsadm-1.31-1.el8.x86_64

# Write the modules to be loaded into a script

[root@localhost ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

# Make the script executable

[root@localhost ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@localhost ~]# ll $_

-rwxr-xr-x. 1 root root 124 Dec 10 17:32 /etc/sysconfig/modules/ipvs.modules

# Run the script

[root@master ~]# /bin/bash /etc/sysconfig/modules/ipvs.modules

# Check that the modules are loaded

[root@localhost ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_defrag_ipv6 20480 1 ip_vs

nf_conntrack_ipv4 16384 12

nf_defrag_ipv4 16384 1 nf_conntrack_ipv4

nf_conntrack 155648 8 xt_conntrack,nf_conntrack_ipv4,nf_nat,ipt_MASQUERADE,nf_nat_ipv4,xt_nat,nf_conntrack_netlink,ip_vs

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs

Reboot the system

[root@localhost ~]# reboot -f

3.3 Install the Docker service

Switch the package mirror

[root@master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

--2021-12-11 08:57:11-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 222.35.67.242, 222.35.67.244, 222.35.67.248, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|222.35.67.242|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2081 (2.0K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/docker-ce.repo’

100%[=============================================================>] 2,081 --.-K/s in 0s

2021-12-11 08:57:11 (462 MB/s) - ‘/etc/yum.repos.d/docker-ce.repo’ saved [2081/2081]

Install the Docker service

# List the Docker versions available for installation

[root@master ~]# yum list docker-ce --showduplicates | sort -r

# Install the latest Docker version

[root@master ~]# yum install -y docker-ce

# Confirm the installed docker-ce RPM package

[root@master ~]# rpm -qa docker-ce

docker-ce-20.10.11-3.el7.x86_64

Add the Docker configuration file

By default Docker uses cgroupfs as its Cgroup Driver, while Kubernetes recommends systemd instead of cgroupfs.

[root@master ~]# mkdir -p /etc/docker

# Write the Docker configuration file (it must be a single valid JSON object)

[root@master ~]# vim /etc/docker/daemon.json

[root@master ~]# cat $_

{

"exec-opts" : ["native.cgroupdriver=systemd"],

"registry-mirrors" : ["https://kn0t2bca.mirror.aliyuncs.com"]

}

# Reload the configuration, restart the Docker service, and enable it at boot

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker

[root@master ~]# systemctl enable docker
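After the restart it is worth confirming that Docker really picked up the systemd cgroup driver, since a mismatch with the kubelet is a common cause of startup failures. A small sketch, assuming the `docker` CLI is on the PATH; `check_cgroup_driver` is a hypothetical helper name, while `docker info --format` with the `CgroupDriver` field is the standard way to query the value.

```shell
#!/bin/bash
# Report whether Docker is using the systemd cgroup driver.
# check_cgroup_driver is a hypothetical helper name.
check_cgroup_driver() {
    local driver
    driver="$(docker info --format '{{.CgroupDriver}}')"
    if [ "$driver" = "systemd" ]; then
        echo "ok: cgroup driver is systemd"
    else
        echo "mismatch: cgroup driver is '$driver', expected systemd"
        return 1
    fi
}

# Usage on each node:
#   check_cgroup_driver
```

The non-zero return code on a mismatch lets the check be chained in a provisioning script, for example `check_cgroup_driver && yum install -y kubeadm`.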

Check the Docker version

[root@master ~]# docker version

Client: Docker Engine - Community

Version: 20.10.11

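3.4 Install the Kubernetes components

Configure a Kubernetes package mirror hosted in China. The overseas source downloads slowly from there, so a domestic mirror is recommended.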

[root@master ~]# vim /etc/yum.repos.d/kubernetes.repo

[root@master ~]# cat $_

[kubernetes]

name=kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Install kubeadm, kubelet, and kubectl

[root@master ~]# yum install -y kubeadm kubectl kubelet

# Confirm the installed RPM packages

[root@master ~]# rpm -qa kubeadm kubectl kubelet

kubeadm-1.23.0-0.x86_64

kubelet-1.23.0-0.x86_64

kubectl-1.23.0-0.x86_64

Configure the kubelet cgroup driver

[root@master ~]# vim /etc/sysconfig/kubelet

[root@master ~]# cat $_

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

Enable the kubelet service at boot

# kubelet is the service that runs Kubernetes on each host

[root@master ~]# systemctl enable kubelet

3.5 Prepare the cluster images

Before installing the Kubernetes cluster, prepare the images it needs. The following command lists them:

[root@master ~]# kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.23.0

k8s.gcr.io/kube-controller-manager:v1.23.0

k8s.gcr.io/kube-scheduler:v1.23.0

k8s.gcr.io/kube-proxy:v1.23.0

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

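Download the images. They are hosted in the Kubernetes registry; because of network restrictions, this walkthrough pulls them from the Alibaba Cloud mirror instead, using a script.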

[root@master ~]# vim ./imagePull.sh

[root@master ~]# cat $_

#!/bin/bash

# Kubernetes images (and versions) to download

images=(

kube-apiserver:v1.23.0

kube-controller-manager:v1.23.0

kube-scheduler:v1.23.0

kube-proxy:v1.23.0

pause:3.6

etcd:3.5.1-0

coredns:v1.8.6

)

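for imageName in "${images[@]}" ; do

# Pull the image from the Alibaba Cloud mirror

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

# Retag the image with the official Kubernetes name; the Alibaba Cloud names differ from the official ones, so retagging is required

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

# Once retagged, remove the original Alibaba Cloud tag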

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

done

# Make the script executable and run it

[root@master ~]# chmod a+x imagePull.sh

[root@master ~]# ./imagePull.sh

# Copy the script to node1 and node2 and run it there

[root@master ~]# scp ./imagePull.sh root@192.168.88.131:/root/imagePull.sh

root@192.168.88.131's password:

[root@master ~]# scp ./imagePull.sh root@192.168.88.132:/root/imagePull.sh

root@192.168.88.132's password:

[root@node1 ~]# ./imagePull.sh

[root@node2 ~]# ./imagePull.sh

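# The coredns name on the Alibaba Cloud mirror does not match the official one, so retag it separately (do this on every node so the images stay consistent)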

[root@master ~]# docker tag k8s.gcr.io/coredns:1.8.6 k8s.gcr.io/coredns/coredns:v1.8.6

# Remove the old tag

[root@master ~]# docker rmi k8s.gcr.io/coredns:1.8.6

Untagged: k8s.gcr.io/coredns:1.8.6

[root@master ~]# docker images k8s.gcr.io/coredns

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/coredns 1.8.6 a4ca41631cc7 2 months ago 46.8MB

[root@master ~]# docker images k8s.gcr.io/coredns/coredns

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/coredns/coredns v1.8.6 a4ca41631cc7 2 months ago 46.8MB
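The separate coredns retag can be folded into the pull script by mapping each name before tagging. The sketch below assumes the mirror publishes coredns under the tag listed in the `images` array; `official_name` is a hypothetical helper, and the only rule it encodes is the one visible in the `kubeadm config images list` output above, where coredns alone sits under an extra `coredns/` path segment.

```shell
#!/bin/bash
# Map a mirror image name (e.g. "coredns:v1.8.6") to the name kubeadm
# expects. official_name is a hypothetical helper for illustration.
official_name() {
    local image="$1"
    case "$image" in
        coredns:*) echo "k8s.gcr.io/coredns/$image" ;;
        *)         echo "k8s.gcr.io/$image" ;;
    esac
}

# Inside the loop of imagePull.sh this would replace the fixed target:
#   docker tag "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName" \
#              "$(official_name "$imageName")"
```

With this mapping every node ends up with the same image names after a single script run, without a manual step for coredns.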

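In case image downloads fail on an internal network, the required images have been uploaded to Baidu Netdisk. Download them yourself, place them in the directory below, and import them.

# The image archives are stored under /root/ here; docker load -i accepts one archive at a time, so loop over them

[root@master ~]# for f in cni_v3.17.1.tar controller-manager_v1.23.0.tar coredns_v1.8.6.tar etcd_3.5.1-0.tar flannel_v0.12.0-amd64.tar kube-apiserver_v1.23.0.tar kube-proxy_v1.23.0.tar kube-scheduler_v1.23.0.tar node_v3.17.1.tar pause_3.6.tar pod2daemon-flexvol_v3.17.1.tar; do docker load -i "$f"; done

# Images present after the import; the regular download with the pull script does not include the calico and flannel network plugin images (mind the image names)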

[root@master k8s_images]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-apiserver v1.23.0 e6bf5ddd4098 5 days ago 135MB

k8s.gcr.io/kube-controller-manager v1.23.0 37c6aeb3663b 5 days ago 125MB

k8s.gcr.io/kube-proxy v1.23.0 e03484a90585 5 days ago 112MB

k8s.gcr.io/kube-scheduler v1.23.0 56c5af1d00b5 5 days ago 53.5MB

k8s.gcr.io/etcd 3.5.1-0 25f8c7f3da61 5 weeks ago 293MB

k8s.gcr.io/coredns/coredns v1.8.6 a4ca41631cc7 2 months ago 46.8MB

k8s.gcr.io/pause 3.6 6270bb605e12 3 months ago 683kB

calico/pod2daemon-flexvol v3.17.1 819d15844f0c 12 months ago 21.7MB

calico/cni v3.17.1 64e5dfd8d597 12 months ago 128MB

calico/node v3.17.1 183b53858d7d 12 months ago 165MB

quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 21 months ago 52.8MB

List the downloaded images

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-apiserver v1.23.0 e6bf5ddd4098 4 days ago 135MB

k8s.gcr.io/kube-proxy v1.23.0 e03484a90585 4 days ago 112MB

k8s.gcr.io/kube-controller-manager v1.23.0 37c6aeb3663b 4 days ago 125MB

k8s.gcr.io/kube-scheduler v1.23.0 56c5af1d00b5 4 days ago 53.5MB

k8s.gcr.io/etcd 3.5.1-0 25f8c7f3da61 5 weeks ago 293MB

k8s.gcr.io/coredns/coredns v1.8.6 a4ca41631cc7 2 months ago 46.8MB

k8s.gcr.io/pause 3.6 6270bb605e12 3 months ago 683kB

3.6 Cluster initialization

Create the cluster. Run this on the master node only.

# Check the kubeadm version

[root@master k8s_images]# rpm -qa kubeadm

kubeadm-1.23.0-0.x86_64

[root@master ~]# kubeadm init --kubernetes-version=v1.23.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --apiserver-advertise-address=192.168.88.130

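# Explanation of the kubeadm init parameters

--kubernetes-version=v1.23.0 : Kubernetes version to install (here the same as the kubeadm version);

--pod-network-cidr=10.244.0.0/16 : Pod network;

--service-cidr=10.96.0.0/12 : Service network;

--apiserver-advertise-address=192.168.88.130 : IP address of the Master node; change it to match your own Master.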

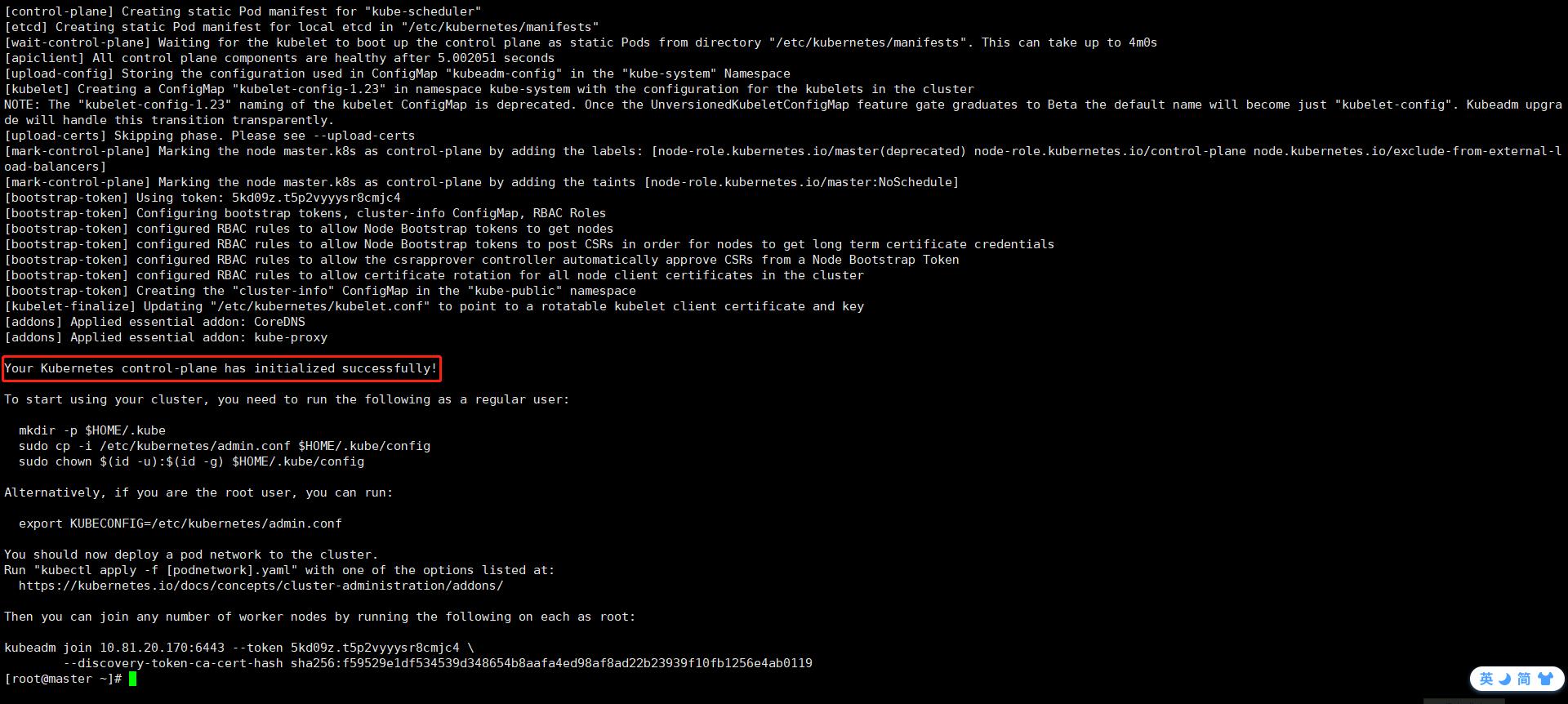

The output should end with a success message:

Your Kubernetes control-plane has initialized successfully!

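The screenshot shows a successful cluster creation on the Master. The output prints the commands for creating the required files and for joining Nodes to the Master; copy them as they are.

Create the required files (mandatory)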

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

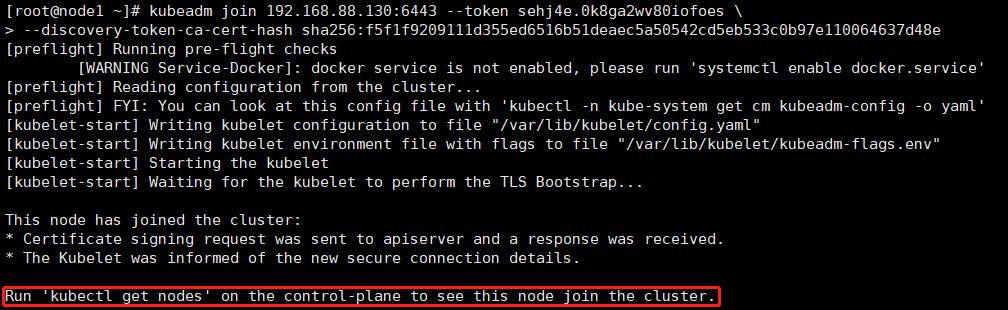

Join node1 and node2 to the master

[root@node1 ~]# kubeadm join 192.168.88.130:6443 --token sehj4e.0k8ga2wv80iofoes \

--discovery-token-ca-cert-hash sha256:f5f1f9209111d355ed6516b51deaec5a50542cd5eb533c0b97e110064637d48e

[root@node2 ~]# kubeadm join 192.168.88.130:6443 --token sehj4e.0k8ga2wv80iofoes \

--discovery-token-ca-cert-hash sha256:f5f1f9209111d355ed6516b51deaec5a50542cd5eb533c0b97e110064637d48e
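The token and hash above are specific to one cluster, and bootstrap tokens expire (24 hours by default), so a node added later needs a fresh join command. A minimal sketch, to be run on the master; `new_join_command` is a hypothetical wrapper, while `kubeadm token create --print-join-command` is an existing kubeadm subcommand.

```shell
#!/bin/bash
# Print a fresh "kubeadm join ..." command. Run on the master.
# new_join_command is a hypothetical wrapper around a real kubeadm call.
new_join_command() {
    kubeadm token create --print-join-command
}

# Usage on the master (as root), then run the printed command on the node:
#   new_join_command
# Or, assuming root SSH access to the node:
#   ssh root@192.168.88.131 "$(new_join_command)"
```

`kubeadm token list` on the master shows the tokens that are still valid and when they expire.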

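The screenshot shows a node joining the Master.

Check that the nodes were added. Their status is NotReady at this point because the network plugin has not been installed yet.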

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master.kubernetes NotReady control-plane,master 8m6s v1.23.0

node1.kubernetes NotReady <none> 44s v1.23.0

node2.kubernetes NotReady <none> 43s v1.23.0

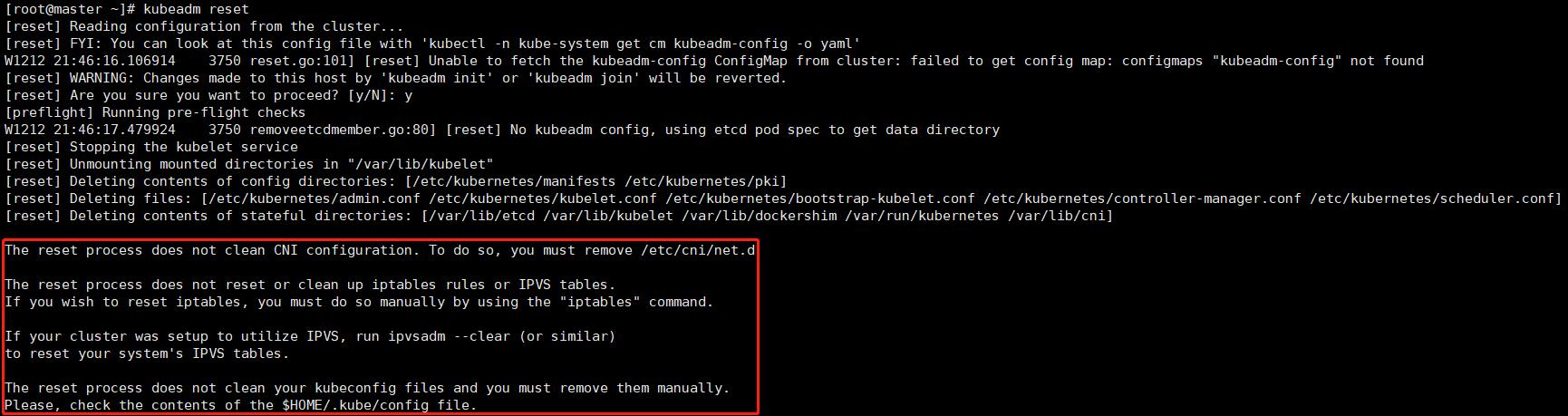

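Fixing a failed Master initialization

If the environment is not clean, kubeadm init on the Master reports errors, and simply running the command again does not work. Clean up the environment as follows.

Reset kubeadm

[root@master ~]# kubeadm reset

As the screenshot shows, once the reset completes some files still have to be cleaned up: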

[root@master ~]# rm -rf /etc/cni/net.d

[root@master ~]# rm -rf $HOME/.kube/config

[root@master ~]# rm -rf /etc/kubernetes/

After the cleanup, run kubeadm init again

[root@master ~]# kubeadm init --kubernetes-version=v1.23.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --apiserver-advertise-address=192.168.88.130

As shown below:

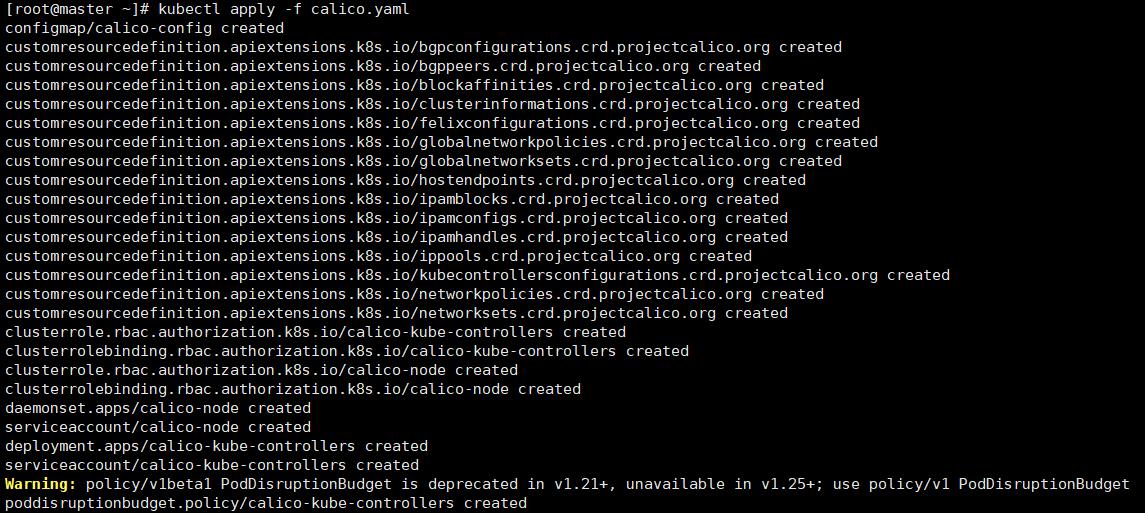

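3.7 Install the network plugin

# If no kubeconfig has been set up for the current shell after cluster initialization, kubectl falls back to localhost:8080 and fails as shown below; setting the KUBECONFIG environment variable on this host fixes it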

[root@master ~]# kubectl apply -f /tmp/k8s_images/calico.yaml

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# Fix

[root@master ~]# ll /etc/kubernetes/admin.conf

-rw------- 1 root root 5640 Dec 12 21:47 /etc/kubernetes/admin.conf

[root@master ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

[root@master ~]# source /etc/profile

# Apply the calico.yaml file

[root@master ~]# kubectl apply -f calico.yaml

As shown in the screenshot.

Verify that the calico network plugin started successfully

# Basic view

[root@master ~]# kubectl get nodes

# Detailed view

[root@master ~]# kubectl get nodes -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master.kubernetes Ready control-plane,master 64m v1.23.0 192.168.88.130 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.11

node1.kubernetes Ready <none> 57m v1.23.0 192.168.88.131 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.11

node2.kubernetes Ready <none> 57m v1.23.0 192.168.88.132 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.11

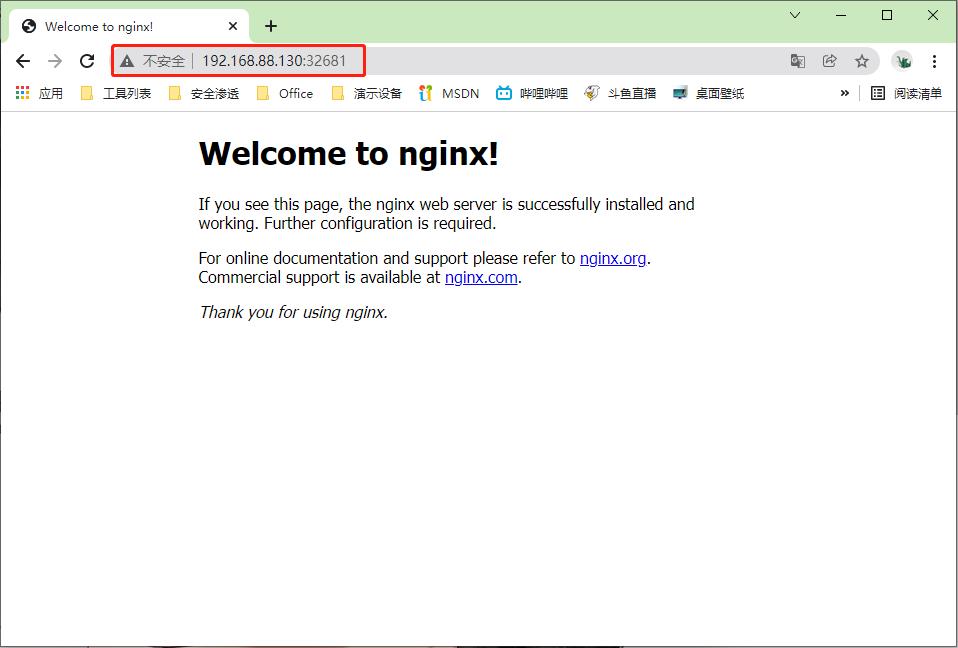

3.8 Verify the cluster by deploying a service

# Deploy nginx

[root@master ~]# kubectl create deployment nginx --image=nginx:1.14-alpine

deployment.apps/nginx created

# Expose port 80

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

# List the Pods

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-7cbb8cd5d8-xfkmk 1/1 Running 0 36s

# List the Services

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 61m

nginx NodePort 10.110.188.49 <none> 80:32681/TCP 14s

Verify that the Nginx page is served correctly
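The check itself can be scripted. A NodePort service answers on the assigned port of every node, so the URL is any node IP plus the node port reported by `kubectl get svc`. The sketch below assumes `kubectl` is configured on the host where it runs; `nodeport_url` is a hypothetical helper, and the jsonpath expression reads the first port of the service.

```shell
#!/bin/bash
# Build the URL of a NodePort service from a node IP and a service name.
# nodeport_url is a hypothetical helper; it reads the first service port.
nodeport_url() {
    local node_ip="$1" service="$2" port
    port="$(kubectl get svc "$service" -o jsonpath='{.spec.ports[0].nodePort}')"
    echo "http://${node_ip}:${port}"
}

# Usage from a host that can reach the nodes:
#   curl -I "$(nodeport_url 192.168.88.131 nginx)"
```

A successful HTTP response from `curl` indicates that the Pod, the Service, and the node networking are all working; opening the same URL in a browser should show the nginx welcome page.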

4. Command overview

kubectl is the basic command-line tool for working with Kubernetes; its common subcommands are listed below.

[root@master ~]# kubectl --help

kubectl controls the Kubernetes cluster manager.

Find more information at:

https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Get documentation for a resource

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by file names, stdin, resources and names, or by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a deployment, replica set, or replication controller

autoscale Auto-scale a deployment, replica set, stateful set, or replication controller

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster information

top Display resource (CPU/memory) usage

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers

auth Inspect authorization

debug Create debugging sessions for troubleshooting workloads and nodes

Advanced Commands:

diff Diff the live version against a would-be applied version

apply Apply a configuration to a resource by file name or stdin

patch Update fields of a resource

replace Replace a resource by file name or stdin

wait Experimental: Wait for a specific condition on one or many resources

kustomize Build a kustomization target from a directory or URL.

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash, zsh or fish)

Other Commands:

alpha Commands for features in alpha

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).
