Building ELK from Scratch and a Simple Log-Collection Application


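Many blogs already explain the theory behind ELK and walk through its architecture diagrams in detail. This article only records a simple ELK setup and a simple application of it.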

Preparation

1. Environment:

| IP | Hostname | Services deployed |
|---|---|---|
| 10.0.0.101 (CentOS 7) | test101 | JDK, Elasticsearch, Logstash, Kibana and Filebeat (Filebeat is used to test collecting test101's own messages log) |
| 10.0.0.102 (CentOS 7) | test102 | Nginx and Filebeat (Filebeat is used to test collecting test102's Nginx logs) |

2. Installation packages:

jdk-8u151-linux-x64.tar.gz

elasticsearch-6.4.2.tar.gz

kibana-6.4.2-linux-x86_64.tar.gz

logstash-6.4.2.tar.gz

Official ELK download page: https://www.elastic.co/cn/downloads

Deploying the ELK server side

First deploy the JDK, Elasticsearch, Logstash and Kibana on test101 to build the ELK server side. Upload the four packages above to /root on test101.

1. Deploy the JDK

# tar xf jdk-8u151-linux-x64.tar.gz -C /usr/local/

# cat >>/etc/profile <<'EOF'    # quoted EOF: the variables are written literally, not expanded now
export JAVA_HOME=/usr/local/jdk1.8.0_151
export JRE_HOME=${JAVA_HOME}/jre
export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib
export PATH=${JAVA_HOME}/bin:$PATH
EOF

# source /etc/profile

# java -version    # running jps also works

Note: if you accidentally break /etc/profile, see the blog post "/etc/profile is broken and no command runs any more: what now?"
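The snippet below is a minimal sketch for confirming that the JDK variables are in effect after source /etc/profile. The function name check_java_home is hypothetical and not part of the JDK. It only checks two things: that $JAVA_HOME/bin/java is executable, and that $JAVA_HOME/bin is on PATH.

```shell
#!/usr/bin/env bash
# check_java_home [DIR]: hypothetical helper, not part of the JDK.
# DIR defaults to $JAVA_HOME. Succeeds only if DIR/bin/java is executable
# and DIR/bin is on PATH; otherwise prints the reason to stderr.
check_java_home() {
  local home="${1:-$JAVA_HOME}"
  if [ -z "$home" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$home/bin/java" ]; then
    echo "no executable java under $home/bin" >&2
    return 1
  fi
  case ":$PATH:" in
    *":$home/bin:"*) return 0 ;;
    *) echo "$home/bin is not on PATH" >&2; return 1 ;;
  esac
}
```

Usage: paste the function into the shell (or source a file that contains it), then run check_java_home && java -version.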

2. Create a dedicated elk user

The elk user is used to start Elasticsearch. It is also the user entered in the Filebeat configuration file later, when collecting logs.

# useradd elk; echo 12345678 | passwd elk --stdin    # create the elk user with password 12345678

3. Deploy Elasticsearch

3.1 Extract the package:

# tar xf elasticsearch-6.4.2.tar.gz -C /usr/local/

3.2 Edit the configuration file /usr/local/elasticsearch-6.4.2/config/elasticsearch.yml as follows:

[root@test101 config]# egrep -v "^#|^$" /usr/local/elasticsearch-6.4.2/config/elasticsearch.yml

cluster.name: elk

node.name: node-1

path.data: /opt/elk/es_data

path.logs: /opt/elk/es_logs

network.host: 10.0.0.101

http.port: 9200

discovery.zen.ping.unicast.hosts: ["10.0.0.101:9300"]

discovery.zen.minimum_master_nodes: 1

[root@test101 config]#

3.3 Append the following to /etc/security/limits.conf and /etc/sysctl.conf:

# cat >>/etc/security/limits.conf <<'EOF'
* soft nofile 65536
* hard nofile 131072
* soft nproc 4096
* hard nproc 4096
EOF

# echo "vm.max_map_count=655360" >>/etc/sysctl.conf

# sysctl -p

3.4 Create the data and log directories and hand them over to the elk user:

# mkdir /opt/elk/{es_data,es_logs} -p

# chown elk:elk -R /opt/elk/

# chown elk:elk -R /usr/local/elasticsearch-6.4.2/

3.5 Start Elasticsearch:

# cd /usr/local/elasticsearch-6.4.2/bin/

# su elk

$ nohup /usr/local/elasticsearch-6.4.2/bin/elasticsearch >/dev/null 2>&1 &

3.6 Check the process and ports:

[root@test101 ~]# ss -ntlup| grep -E "9200|9300"

tcp LISTEN 0 128 ::ffff:10.0.0.101:9200 :::* users:(("java",pid=6001,fd=193))

tcp LISTEN 0 128 ::ffff:10.0.0.101:9300 :::* users:(("java",pid=6001,fd=186))
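Besides checking the listening ports, you can ask Elasticsearch itself for its state through the cluster health API (GET /_cluster/health). This is a minimal sketch and assumes curl is installed. The function names are hypothetical, and the sed-based extraction is deliberately simple rather than a real JSON parser. On a single-node cluster like this one, expect the status to be yellow rather than green once indices with replicas exist, because a replica shard cannot be allocated on the same node as its primary.

```shell
#!/usr/bin/env bash
# parse_health_status: hypothetical helper. Reads the JSON body of
# GET /_cluster/health on stdin and prints the value of "status"
# (green, yellow or red). A sed one-liner, not a general JSON parser.
parse_health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# es_health_status URL: query the cluster health API at URL
# (e.g. http://10.0.0.101:9200) and print the cluster status.
es_health_status() {
  curl -s "${1%/}/_cluster/health" | parse_health_status
}
```

Usage: es_health_status http://10.0.0.101:9200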

[root@test101 ~]#

Note:

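If Elasticsearch will not start, check the permissions on the ES directories, the server's memory and so on. See also the post "Summary: common reasons Elasticsearch fails to start and how to fix them".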
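A common cause of startup failure is the Elasticsearch 6.x bootstrap checks. These apply when network.host is a non-loopback address such as 10.0.0.101, and they require:

- at least 65536 open files,
- at least 4096 threads,
- vm.max_map_count of at least 262144.

The sketch below compares the current session's soft limits and the kernel setting against those minimums. The function names are hypothetical. PAM applies limits.conf at login, so run the check in a fresh session of the elk user.

```shell
#!/usr/bin/env bash
# Minimums enforced by the Elasticsearch 6.x bootstrap checks, which are
# active when network.host is a non-loopback address (10.0.0.101 here).
ES_MIN_NOFILE=65536
ES_MIN_NPROC=4096
ES_MIN_MAP_COUNT=262144

# meets_min VALUE MIN: true if VALUE is "unlimited" or an integer >= MIN.
meets_min() {
  [ "$1" = "unlimited" ] && return 0
  [ "$1" -ge "$2" ] 2>/dev/null
}

# report_limit NAME VALUE MIN: print an OK/LOW line; non-zero if too low.
report_limit() {
  if meets_min "$2" "$3"; then
    echo "OK   $1=$2 (min $3)"
  else
    echo "LOW  $1=$2 (min $3)"
    return 1
  fi
}

# check_es_limits: hypothetical helper that checks the current session's
# soft limits and the kernel's vm.max_map_count against the minimums above.
check_es_limits() {
  local rc=0
  report_limit nofile "$(ulimit -Sn)" "$ES_MIN_NOFILE" || rc=1
  report_limit nproc "$(ulimit -Su)" "$ES_MIN_NPROC" || rc=1
  report_limit vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" "$ES_MIN_MAP_COUNT" || rc=1
  return "$rc"
}
```

For example, save it as /tmp/es_limits.sh (a hypothetical path) and run: su - elk -c '. /tmp/es_limits.sh && check_es_limits'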

4. Deploy Logstash

4.1 Extract the package:

# tar xf logstash-6.4.2.tar.gz -C /usr/local/

4.2 Edit the configuration file /usr/local/logstash-6.4.2/config/logstash.yml as follows:

[root@test101 logstash-6.4.2]# egrep -v "^#|^$" /usr/local/logstash-6.4.2/config/logstash.yml

path.data: /opt/elk/logstash_data

http.host: "10.0.0.101"

path.logs: /opt/elk/logstash_logs

path.config: /usr/local/logstash-6.4.2/conf.d    # this line is not in the default file; just append it to the end

[root@test101 logstash-6.4.2]#

4.3 Create conf.d and add the log-processing file syslog.conf:

[root@test101 conf.d]# mkdir /usr/local/logstash-6.4.2/conf.d

[root@test101 conf.d]# cat /usr/local/logstash-6.4.2/conf.d/syslog.conf

input {
  # Filebeat clients
  beats {
    port => 5044
  }
}

# Filtering
#filter { }

output {
  # standard output, for debugging
  stdout {
    codec => rubydebug { }
  }

  # output to Elasticsearch
  elasticsearch {
    hosts => ["http://10.0.0.101:9200"]
    index => "%{type}-%{+YYYY.MM.dd}"
  }
}
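A caveat on the index setting above. Filebeat 6.x no longer sets a type field on its events; the document_type option was removed in 6.0. As a result, %{type} may stay unresolved for events arriving through the beats input.

Also note that the Filebeat configurations later in this article send data straight to Elasticsearch through output.elasticsearch. This pipeline therefore only receives events if a Filebeat is switched to output.logstash with hosts: ["10.0.0.101:5044"]. If you do that, the Logstash beats input documentation suggests naming the index from Beats metadata instead.

The function below is a sketch that writes such a pipeline. The function name and the file name beats.conf are hypothetical.

```shell
#!/usr/bin/env bash
# write_beats_pipeline DIR [ES_URL]: hypothetical helper that writes
# DIR/beats.conf, a variant of syslog.conf whose index name is built from
# the Beats metadata fields rather than from a "type" field.
write_beats_pipeline() {
  local dir="$1" es_url="${2:-http://10.0.0.101:9200}"
  mkdir -p "$dir" || return 1
  # Unquoted EOF so that $es_url is substituted; the %{...} references
  # contain no "$" and are written out literally for Logstash.
  cat >"$dir/beats.conf" <<EOF
input {
  beats {
    port => 5044
  }
}

output {
  elasticsearch {
    hosts => ["$es_url"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
  }
}
EOF
}
```

Usage: write_beats_pipeline /usr/local/logstash-6.4.2/conf.d http://10.0.0.101:9200, then start Logstash with -f pointing at beats.conf instead of syslog.conf. Do not load both files as one directory. Logstash concatenates every file under a single path.config into one pipeline, and two beats inputs on port 5044 would conflict.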

[root@test101 conf.d]#

4.4 Create the data and log directories and hand them over to the elk user:

# mkdir /opt/elk/{logstash_data,logstash_logs} -p

# chown -R elk:elk /opt/elk/

# chown -R elk:elk /usr/local/logstash-6.4.2/

4.5 Validate the configuration before starting the service:

[root@test101 conf.d]# /usr/local/logstash-6.4.2/bin/logstash -f /usr/local/logstash-6.4.2/conf.d/syslog.conf --config.test_and_exit    # this may take a while before any output appears

Sending Logstash logs to /opt/elk/logstash_logs which is now configured via log4j2.properties

[2018-11-01T09:49:14,299][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/opt/elk/logstash_data/queue"}

[2018-11-01T09:49:14,352][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/opt/elk/logstash_data/dead_letter_queue"}

[2018-11-01T09:49:16,547][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-11-01T09:49:26,510][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

[root@test101 conf.d]#

4.6 Start the service for real:

# nohup /usr/local/logstash-6.4.2/bin/logstash -f /usr/local/logstash-6.4.2/conf.d/syslog.conf >/dev/null 2>&1 &    # run in the background

4.7 Check the process and ports:

[root@test101 local]# ps -ef|grep logstash

root 6325 926 17 10:08 pts/0 00:01:55 /usr/local/jdk1.8.0_151/bin/java -Xms1g -Xmx1g -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.threshold=0 -XX:+HeapDumpOnOutOfMemoryError -Djava.security.egd=file:/dev/urandom -cp /usr/local/logstash-6.4.2/logstash-core/lib/jars/animal-sniffer-annotations-1.14.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/commons-codec-1.11.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/commons-compiler-3.0.8.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/error_prone_annotations-2.0.18.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/google-java-format-1.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/gradle-license-report-0.7.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/guava-22.0.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/j2objc-annotations-1.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/jackson-annotations-2.9.5.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/jackson-core-2.9.5.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/jackson-databind-2.9.5.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/jackson-dataformat-cbor-2.9.5.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/janino-3.0.8.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/jruby-complete-9.1.13.0.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/jsr305-1.3.9.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/log4j-api-2.9.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/log4j-core-2.9.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/log4j-slf4j-impl-2.9.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/logstash-core.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.core.commands-3.6.0.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.core.contenttype-3.4.100.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.core.expressions-3.4.300.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.core.filesystem-1.3.100.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.core.jobs-3.5.100.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.core.resources-3.7.100.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.core.runtime-3.7.0.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.equinox.app-1.3.100.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.equinox.common-3.6.0.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.equinox.preferences-3.4.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.equinox.registry-3.5.101.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.jdt.core-3.10.0.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.osgi-3.7.1.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/org.eclipse.text-3.5.101.jar:/usr/local/logstash-6.4.2/logstash-core/lib/jars/slf4j-api-1.7.25.jar org.logstash.Logstash -f /usr/local/logstash-6.4.2/conf.d/syslog.conf

root 6430 926 0 10:19 pts/0 00:00:00 grep --color=auto logstash

[root@test101 local]# netstat -tlunp|grep 6325

tcp6 0 0 :::5044 :::* LISTEN 6325/java

tcp6 0 0 10.0.0.101:9600 :::* LISTEN 6325/java
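Logstash can take a while to open its ports. Judging by its log timestamps, the configuration test above alone took about 12 seconds. Rather than re-running netstat by hand, you can poll a port until it accepts connections.

The sketch below relies on bash's /dev/tcp pseudo-device and the coreutils timeout command. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [MAX_SECONDS]: hypothetical helper that polls
# once per second until HOST:PORT accepts a TCP connection (default limit
# 120 s). Uses bash's /dev/tcp, so no nc or telnet is needed.
wait_for_port() {
  local host="$1" port="$2" max="${3:-120}" waited=0
  while [ "$waited" -lt "$max" ]; do
    # The inner bash exits non-zero if the connection is refused;
    # timeout guards against hosts that silently drop packets.
    if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      echo "$host:$port is up after ${waited}s"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$host:$port still closed after ${max}s" >&2
  return 1
}
```

Usage: wait_for_port 10.0.0.101 5044 60 && wait_for_port 10.0.0.101 9600 60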

[root@test101 local]#

5. Deploy Kibana

5.1 Extract the package:

# tar xf kibana-6.4.2-linux-x86_64.tar.gz -C /usr/local/

5.2 Edit the configuration file /usr/local/kibana-6.4.2-linux-x86_64/config/kibana.yml as follows:

[root@test101 ~]# egrep -v "^#|^$" /usr/local/kibana-6.4.2-linux-x86_64/config/kibana.yml

server.port: 5601

server.host: "10.0.0.101"

elasticsearch.url: "http://10.0.0.101:9200"

kibana.index: ".kibana"

[root@test101 ~]#

5.3 Change the owner of the Kibana directory to elk:

# chown elk:elk -R /usr/local/kibana-6.4.2-linux-x86_64/

5.4 Start Kibana:

# nohup /usr/local/kibana-6.4.2-linux-x86_64/bin/kibana >/dev/null 2>&1 &

5.5 Check the process and ports:

[root@test101 local]# ps -ef|grep kibana

root 6381 926 28 10:16 pts/0 00:00:53 /usr/local/kibana-6.4.2-linux-x86_64/bin/../node/bin/node --no-warnings /usr/local/kibana-6.4.2-linux-x86_64/bin/../src/cli

root 6432 926 0 10:19 pts/0 00:00:00 grep --color=auto kibana

[root@test101 local]# netstat -tlunp|grep 6381

tcp 0 0 10.0.0.101:5601 0.0.0.0:* LISTEN 6381/node

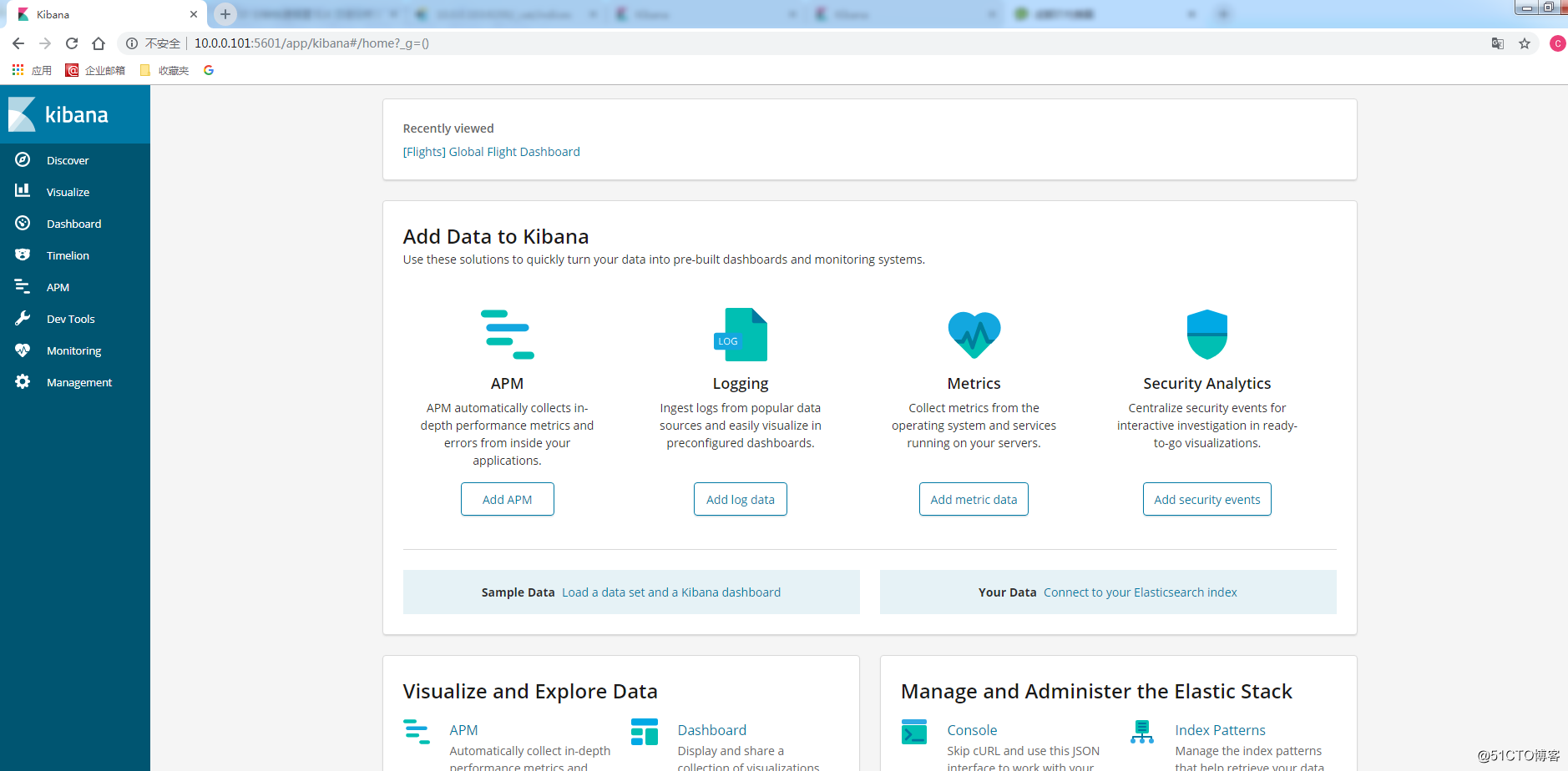

[root@test101 local]#

5.6 Open http://10.0.0.101:5601 in a browser to access the Kibana UI:

At this point the server side of the ELK stack is fully set up.
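With all three services running, one pass over their HTTP endpoints makes a convenient smoke test:

- Elasticsearch on 9200,
- the Logstash monitoring API on 9600 (the second port in the Logstash netstat output above),
- Kibana's status API on 5601.

The sketch below uses hypothetical function names. It treats only HTTP 200 as healthy, and relies on curl printing 000 for %{http_code} when no connection can be made.

```shell
#!/usr/bin/env bash
# http_code URL: print the HTTP status code returned for URL. curl prints
# 000 for %{http_code} when no response was received (e.g. refused).
http_code() {
  curl -s -o /dev/null -m 5 -w '%{http_code}' "$1"
}

# check_elk_server [HOST]: hypothetical helper that probes the HTTP
# endpoints of Elasticsearch (9200), the Logstash monitoring API (9600)
# and the Kibana status API (5601); only HTTP 200 counts as healthy.
check_elk_server() {
  local host="${1:-10.0.0.101}" rc=0 entry name url code
  for entry in "elasticsearch|http://$host:9200/" \
               "logstash|http://$host:9600/" \
               "kibana|http://$host:5601/api/status"; do
    name="${entry%%|*}"
    url="${entry#*|}"
    code=$(http_code "$url")
    if [ "$code" = "200" ]; then
      echo "OK    $name $url"
    else
      echo "FAIL  $name $url (HTTP $code)"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: check_elk_server 10.0.0.101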

Collecting logs with ELK

Once the server side is deployed, the next step is configuring log collection, and that is where Filebeat comes in.

Application 1: collect the messages and secure logs of the ELK host itself (test101)

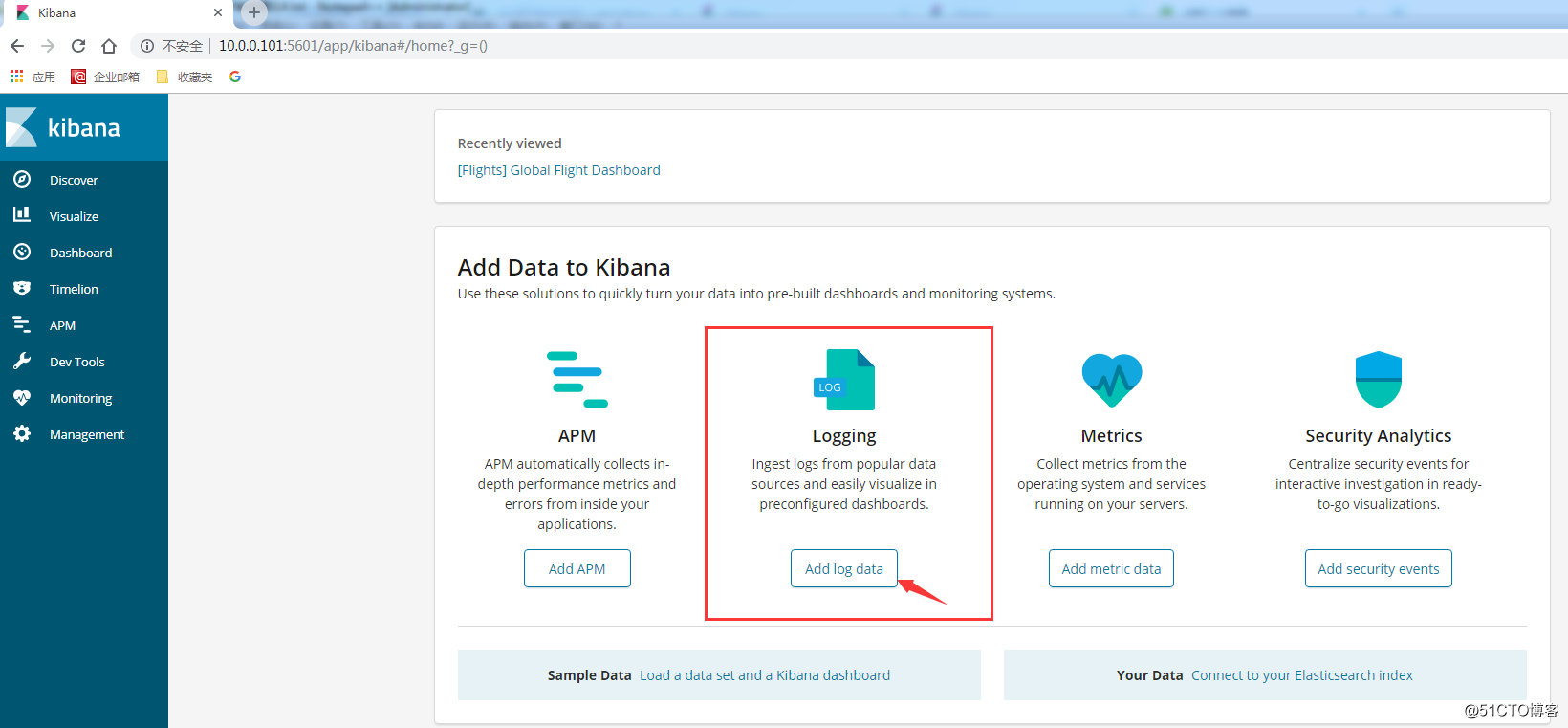

1. On the Kibana home page, click "Add log data":

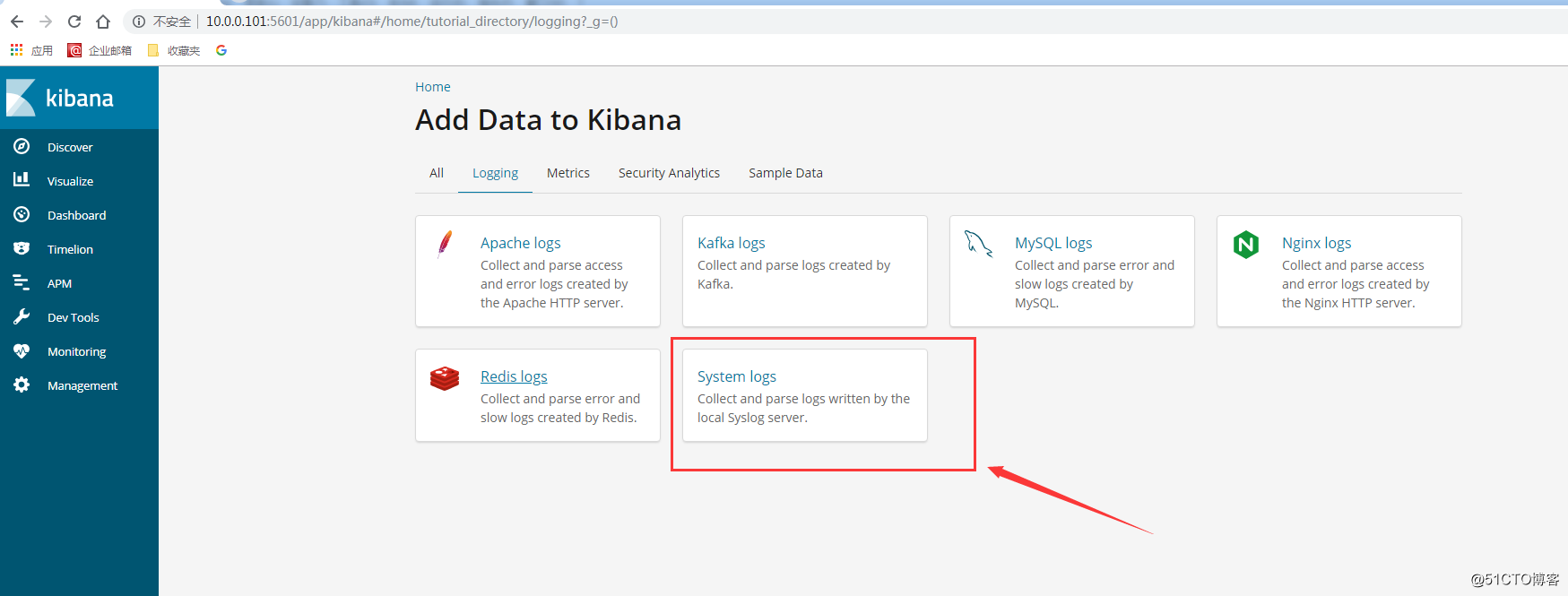

2. Choose System logs:

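3. Choose RPM. The page lists the steps for adding the logs, but they hide a small pitfall, so you can follow the configuration steps below instead: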

3.1 Install the plugin into Elasticsearch on test101:

# cd /usr/local/elasticsearch-6.4.2/bin/

# ./elasticsearch-plugin install ingest-geoip    # restart Elasticsearch afterwards: plugins are only loaded at startup

3.2 Download and install Filebeat on test101:

# curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.4.2-x86_64.rpm

# rpm -vi filebeat-6.4.2-x86_64.rpm

3.3 Configure Filebeat on test101 by editing the following parts of /etc/filebeat/filebeat.yml:

#=========================== Filebeat inputs =============================

filebeat.inputs:

- type: log

  # Change to true to enable this input configuration.
  enabled: true    # note: the default is false; the Kibana page does not say to change it, but unless it is true no log content shows up in Kibana

  paths:    # logs to collect: here the messages and secure logs
    - /var/log/messages*
    - /var/log/secure*

#============================== Kibana =====================================

setup.kibana:
  host: "10.0.0.101:5601"

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:
  hosts: ["10.0.0.101:9200"]
  username: "elk"
  password: "12345678"

3.4 On test101, run the following command to enable /etc/filebeat/modules.d/system.yml:

# filebeat modules enable system

3.5 Start Filebeat on test101:

# filebeat setup

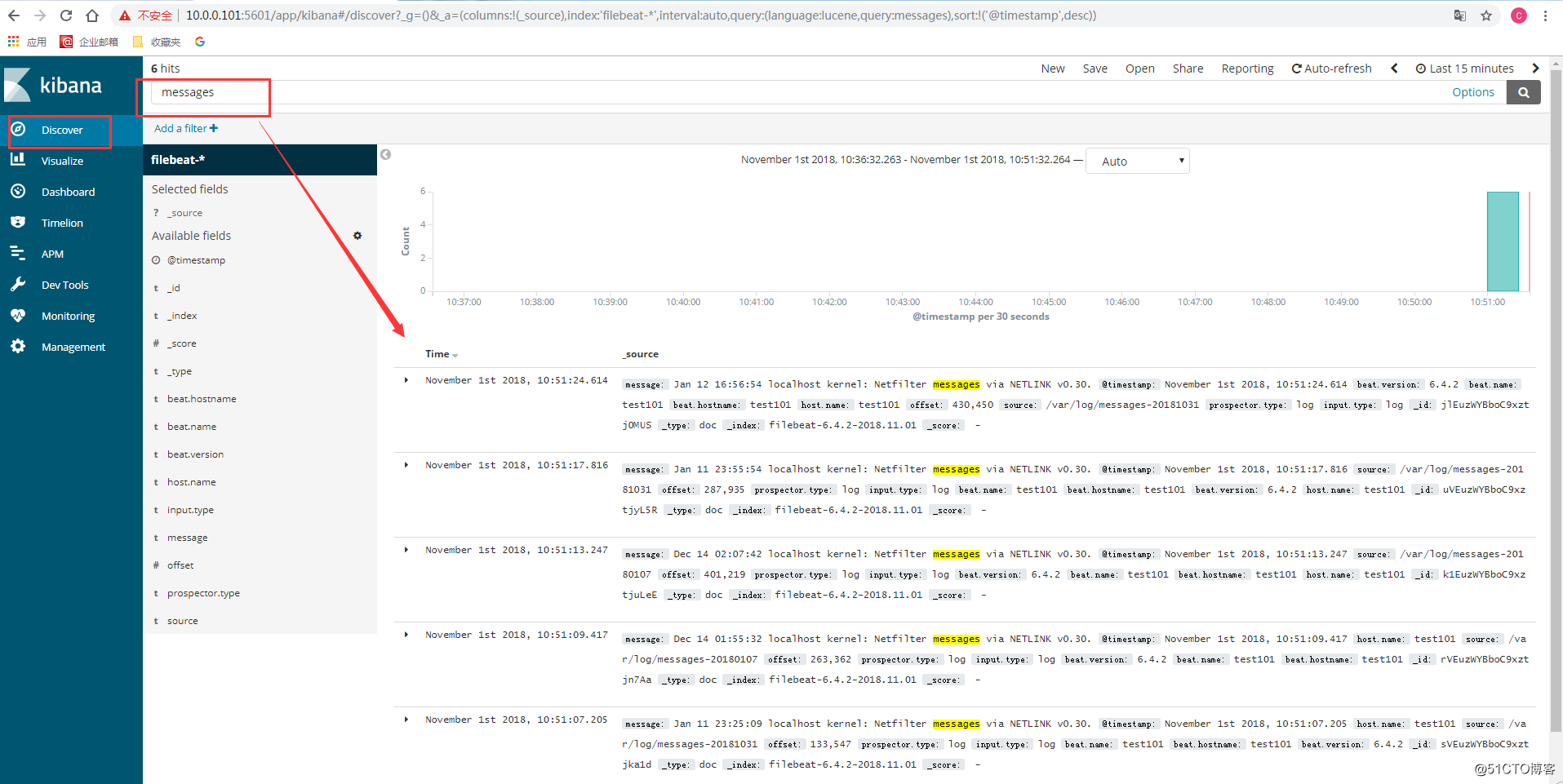

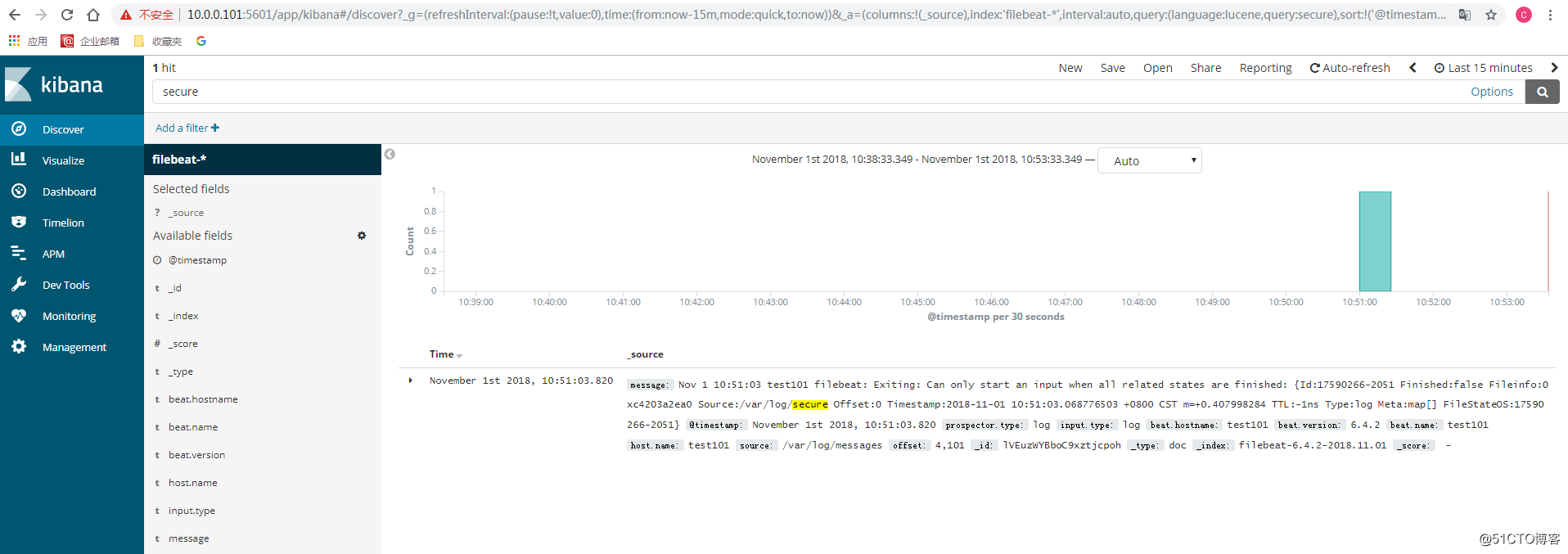

# service filebeat start

3.6 Then go back to Kibana's Discover page and search for the keywords messages and secure to see the related logs:
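To confirm from the command line that events are arriving, list the Filebeat indices with the _cat/indices API and add up their docs.count column. This sketch assumes the default Filebeat 6.x index naming (filebeat-6.4.2-YYYY.MM.DD, matched here with filebeat-*). The function names are hypothetical. If you run it twice a minute apart while logs are being written, the count should grow.

```shell
#!/usr/bin/env bash
# sum_docs_count: hypothetical helper that sums the docs.count column of
# `GET _cat/indices?v` output read from stdin. The column is located by
# its name in the header line, which is then skipped.
sum_docs_count() {
  awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "docs.count") col = i; next }
       col { total += $col }
       END { print total + 0 }'
}

# count_filebeat_docs URL: total number of documents in all filebeat-*
# indices of the cluster at URL (e.g. http://10.0.0.101:9200).
count_filebeat_docs() {
  curl -s "${1%/}/_cat/indices/filebeat-*?v" | sum_docs_count
}
```

Usage: count_filebeat_docs http://10.0.0.101:9200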

Application 2: collect the Nginx logs of 10.0.0.102 (test102)

Application 1 collected logs from the ELK server itself; now let's collect logs from test102.

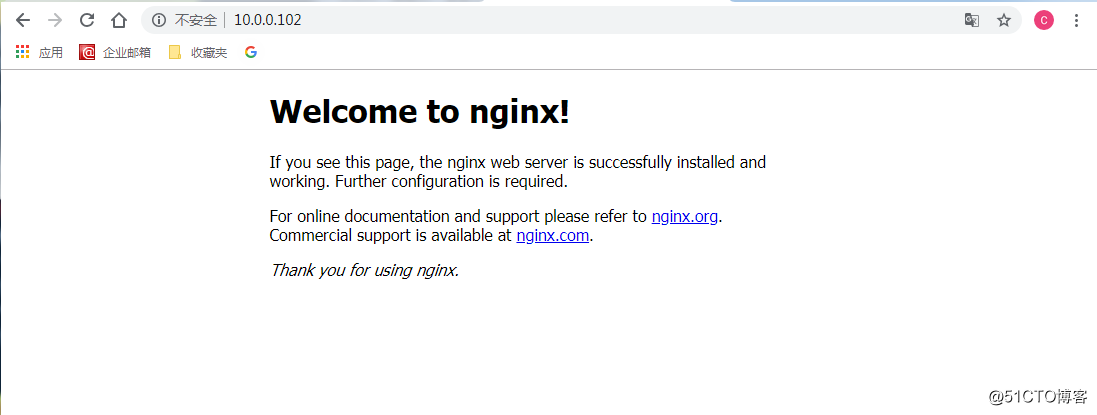

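1. Install Nginx on test102. On CentOS 7 the nginx package comes from the EPEL repository, so if EPEL is not enabled yet, run yum -y install epel-release first: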

# yum -y install nginx
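Filebeat can only ship what nginx has written, so the service has to be running and receiving requests before access.log contains anything.

A minimal sketch: start nginx with systemctl start nginx, then send a few requests with the hypothetical helper below, which prints how many of them got HTTP 200. Requesting a path that does not exist typically adds a 404 line to access.log and a "No such file or directory" line to error.log, so both files get content.

```shell
#!/usr/bin/env bash
# generate_requests URL [N]: hypothetical helper that sends N (default 10)
# GET requests to URL and prints how many of them returned HTTP 200.
generate_requests() {
  local url="$1" n="${2:-10}" ok=0 i code
  for i in $(seq 1 "$n"); do
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url")
    if [ "$code" = "200" ]; then
      ok=$((ok + 1))
    fi
  done
  echo "$ok"
}
```

Usage on test102: generate_requests http://127.0.0.1/ 20, then generate_requests http://127.0.0.1/no-such-page 5 (this one prints 0 but still produces log lines).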

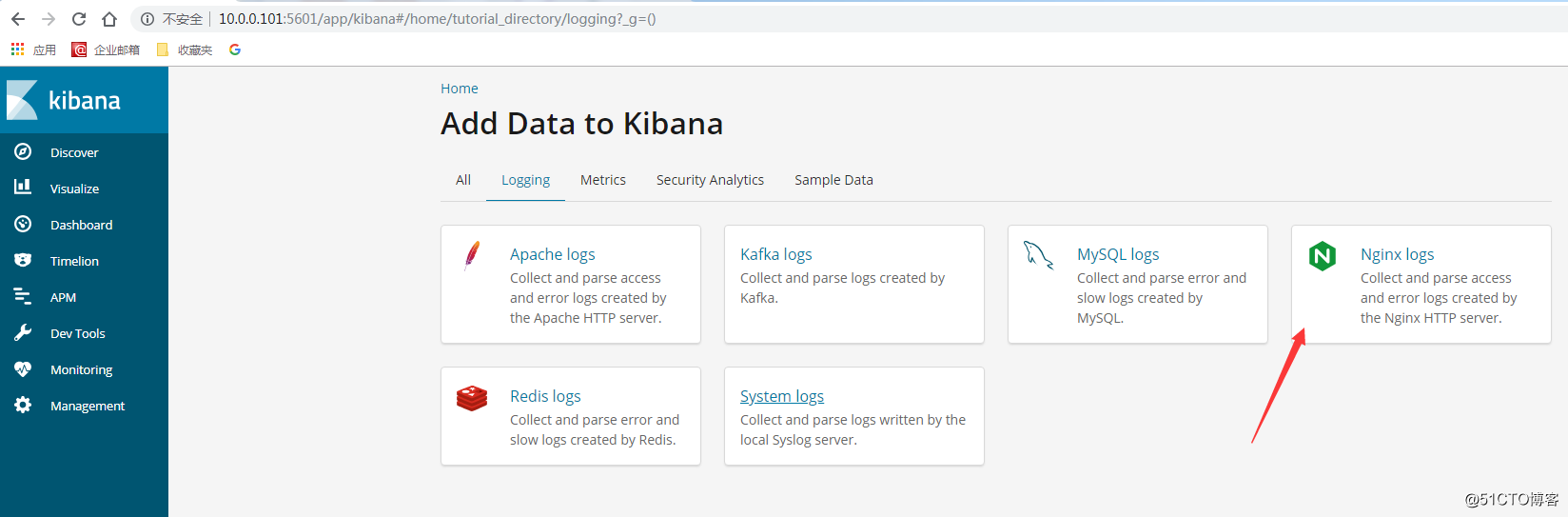

2. As in Application 1, click "Add log data" on the Kibana home page, then choose Nginx logs to find the installation steps:

3. Choose RPM. The page lists the steps for adding the logs (you can follow the configuration steps below):

3.1 Install the plugins into Elasticsearch on test101:

# cd /usr/local/elasticsearch-6.4.2/bin/

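# ./elasticsearch-plugin install ingest-geoip    # already installed in Application 1, so this can be skipped

# ./elasticsearch-plugin install ingest-user-agent    # restart Elasticsearch afterwards so the new plugin is loaded

======= All of the following steps are performed on 10.0.0.102 (test102) =======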

3.2 Download and install Filebeat on test102:

# curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.4.2-x86_64.rpm

# rpm -vi filebeat-6.4.2-x86_64.rpm

3.3 Configure Filebeat on test102 by editing the following parts of /etc/filebeat/filebeat.yml:

#=========================== Filebeat inputs =============================

filebeat.inputs:

- type: log

  # Change to true to enable this input configuration.
  enabled: true    # note: the default is false; the Kibana page does not say to change it, but unless it is true no log content shows up in Kibana

  paths:    # logs to collect: every file under /var/log/nginx/, including access.log and error.log, hence the *
    - /var/log/nginx/*

#============================== Kibana =====================================

setup.kibana:
  host: "10.0.0.101:5601"

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:
  hosts: ["10.0.0.101:9200"]
  username: "elk"
  password: "12345678"

3.4 On test102, run the following command to enable /etc/filebeat/modules.d/nginx.yml:

# filebeat modules enable nginx

After running it, the file contains the following:

[root@test102 ~]# cat /etc/filebeat/modules.d/nginx.yml

- module: nginx
  # Access logs
  access:
    enabled: true

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

  # Error logs
  error:
    enabled: true

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

[root@test102 ~]#

3.5 Start Filebeat on test102:

# filebeat setup

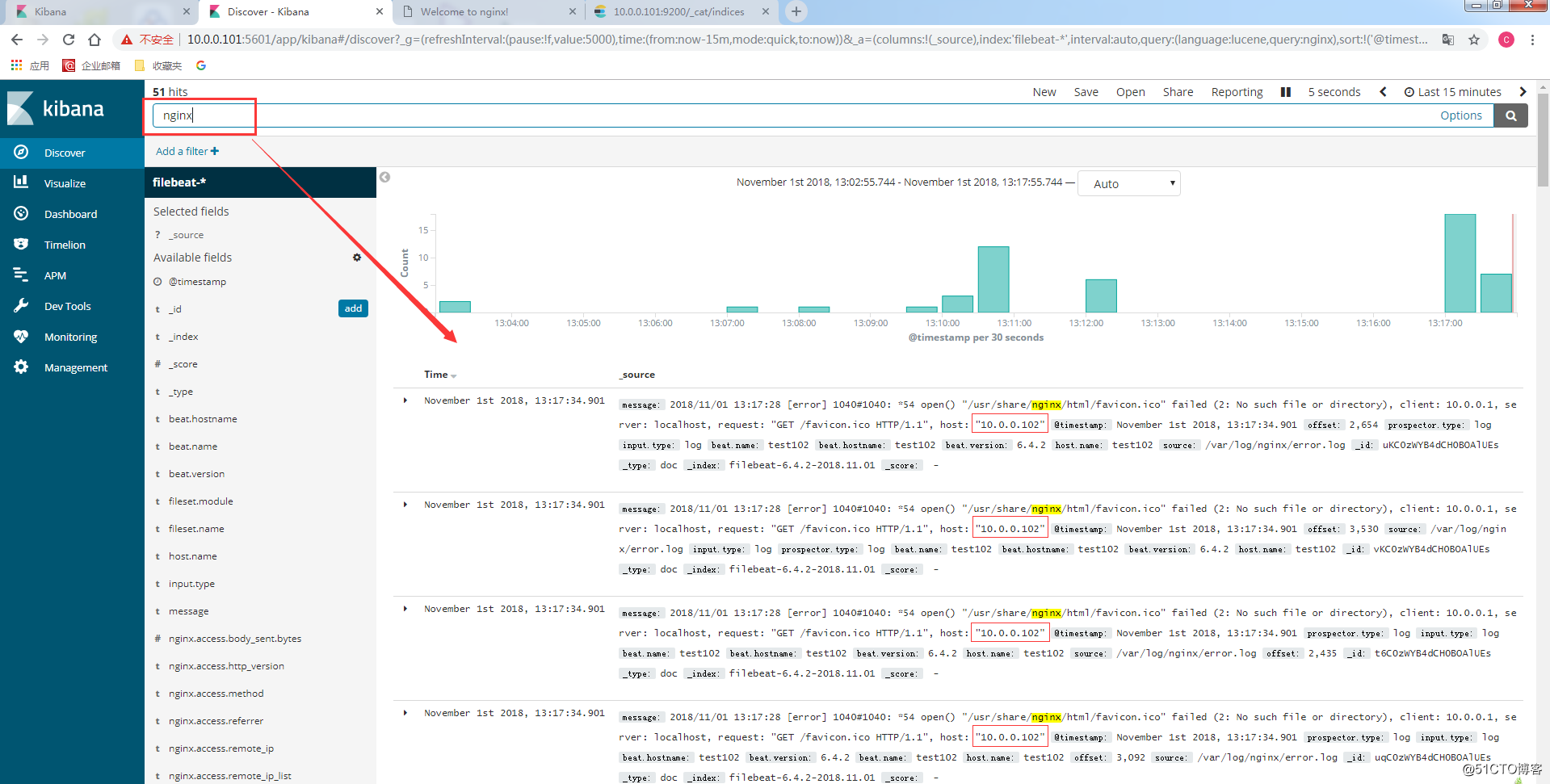

# service filebeat start

3.6 Then go back to Kibana's Discover page to see the related logs:
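As a command-line counterpart to the Discover view, the _count API accepts a query-string query (the q parameter). That makes it easy to count only the documents produced by the nginx module. This sketch uses hypothetical function names and assumes that Filebeat 6.x module events carry the module name in the fileset.module field.

```shell
#!/usr/bin/env bash
# parse_count: hypothetical helper that prints the number from a _count
# response such as {"count":42,"_shards":{...}} read on stdin.
parse_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# count_module_docs URL MODULE: number of documents in filebeat-* whose
# fileset.module field equals MODULE (e.g. nginx or system).
count_module_docs() {
  curl -s "${1%/}/filebeat-*/_count?q=fileset.module:$2" | parse_count
}
```

Usage: count_module_docs http://10.0.0.101:9200 nginx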

Note:

Some articles also install the elasticsearch-head plugin; this article does not.
